This is the repository for our EMNLP2023 paper Poisoning Retrieval Corpora by Injecting Adversarial Passages.

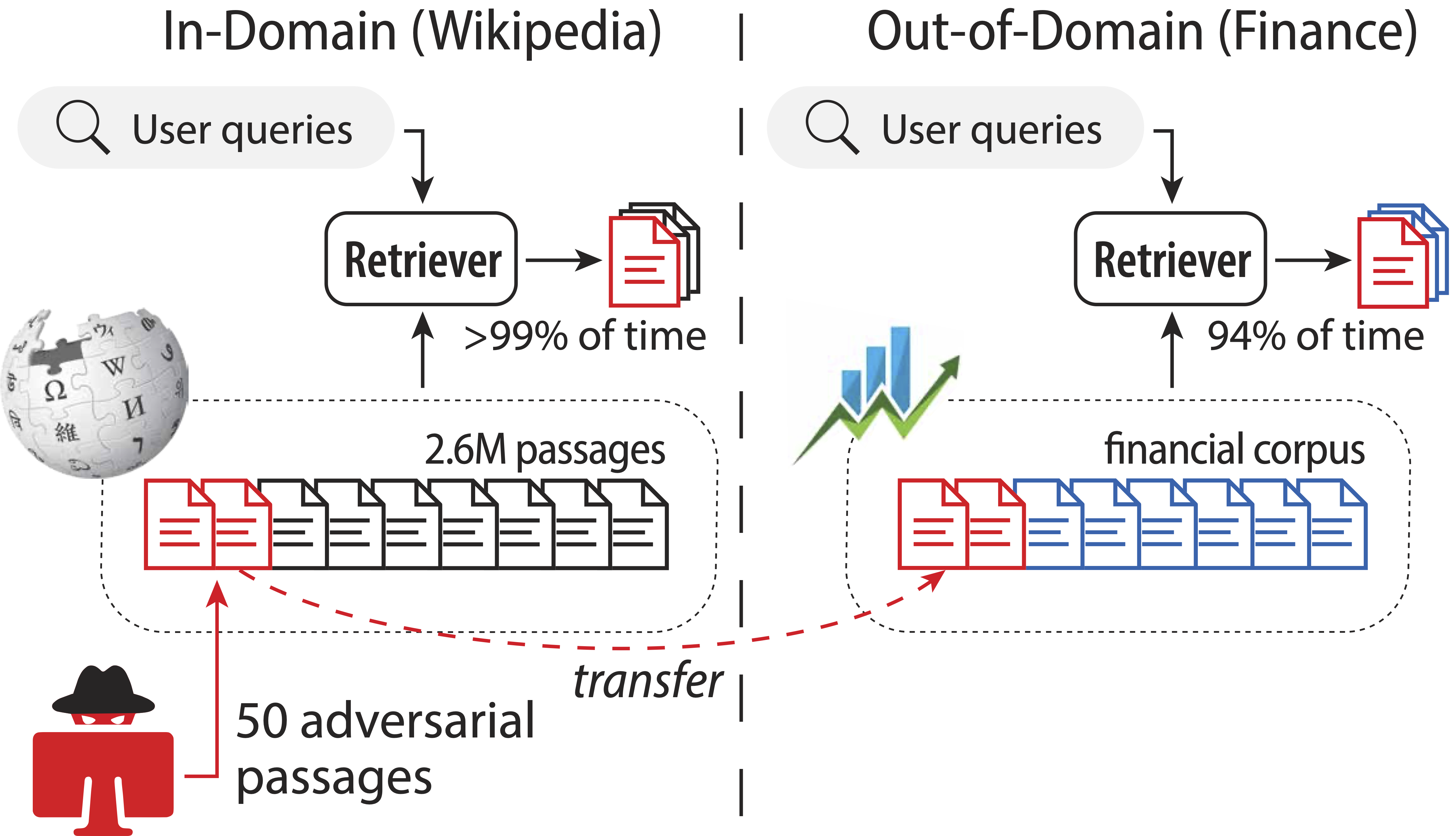

We propose the corpus poisoning attack for dense retrieval models, where a malicious user generates and injects a small fraction of adversarial passages to a retrieval corpus, with the aim of fooling retrieval systems into returning them among the top retrieved results.

Our code is based on python 3.7. We suggest you create a conda environment for this project to avoid conflicts with others.

conda create -n corpus_poison python=3.7Then, you can activate the conda environment:

conda activate corpus_poisonPlease install all the dependency packages using the following command:

pip install -r requirements.txtIn order to run Contriever model, please clone the Contriever source code and place it into the src folder:

cd src

git clone https://github.com/facebookresearch/contriever.gitYou do not need to download datasets. When running our code, the datasets will be automatically downloaded and saved in datasets.

Our attack generates multiple adversarial passages with k-means clustering given a retrieval model and a dataset (training queries). Here is the command to attack the Contriever model on the NQ training set with

MODEL=contriever

DATASET=nq-train

OUTPUT_PATH=results/advp

k=10

mkdir -p $OUTPUT_PATH

for s in $(eval echo "{0..$((k-1))}"); do

python src/attack_poison.py \

--dataset ${DATASET} --split train \

--model_code ${MODEL} \

--num_cand 100 --per_gpu_eval_batch_size 64 --num_iter 5000 --num_grad_iter 1 \

--output_file ${OUTPUT_PATH}/${DATASET}-${MODEL}-k${k}-s${s}.json \

--do_kmeans --k $k --kmeans_split $s

done

Arguments:

-

dataset,split: the dataset name and the split used to generate adversarial passages. In our experiments, we generate adversarial passages on the training sets of NQ (nq-trainwith splittrain) and MS Marco (msmarcowith splittrain). -

model_code: the dense retrieval model to attack. Our current implementation supportscontriever,contriever-msmarco,dpr-single,dpr-multi,ance. -

num_cand,per_gpu_eval_batch_size,num_iter,num_grad_iter: hyperparameters of our attack method. We suggest starting from our default settings. -

output_file: the.jsonfile to save the generated adversarial passage. We will use this file later to evaluate the attack performance. -

do_kmeans: whether to generate multiple adversarial passages based on k-means clustering. -

k,kmeans_split: the number of adversarial passages (i.e.,$k$ in k-means) and the current kmeans split we consider. We need to consider all$k$ splits (i.e., see the for loop of the variables).

This will perform the attack .json file in results/advp.

The attack process may be time-comsuming. However, we can attack different k-means clusters in parallel. If you are using slurm, we provide a script (scripts/attack_poison.sh) to launch the attack for all the clusters at the same time. For example, you can launch the above attack on slurm using:

bash scripts/attack_poison.sh contriever nq-train 10After running the attack, we generate a set of adversarial examples (by default, they are saved in results/advp). Here, we evaluate the attack performance.

We first perform the original retrieval evaluation on BEIR and save the retrieval results (i.e., for a given query, we save a list of top passages and similarity values to the query). Then, we evaluate the attack by checking if the similarity of any adversarial passage to the query is greater than that of the top-20 passages on the original corpus.

We first save the retrieval results on BEIR. An example of the evaluation command is as follows:

MODEL=contriever

DATASET=fiqa

mkdir -p results/beir_results

python src/evaluate_beir.py --model_code contriever --dataset fiqa --result_output results/beir_results/${DATASET}-${MODEL}.jsonThe retrieval results will be saved in results/beir_results and will be used when evaluating the effectiveness of our attack.

We provide a script (scripts/evaluate_beir.sh) to launch the evaluation for all the models (contriever, contriever-msmarco, dpr-single, dpr-multi, ance) and datasets we consider (nq, msmarco, hotpotqa, fiqa, trec-covid, nfcorpus, arguana, quora, scidocs, fever, scifact):

bash scripts/evaluate_beir.sh

Then, we evaluate the attack based on the beir retrieval results (saved in results/beir_results) and the generated adversarial passages (saved in results/advp).

EVAL_MODEL=contriever

EVAL_DATASET=fiqa

ATTK_MODEL=contriever

ATTK_DATASET=nq-train

python src/evaluate_adv.py --save_results \

--attack_model_code ${ATTK_MODEL} --attack_dataset ${ATTK_DATASET} \

--advp_path results/advp --num_advp 10 \

--eval_model_code ${EVAL_MODEL} --eval_dataset ${EVAL_DATASET} \

--orig_beir_results results/beir_results/${EVAL_DATASET}-${EVAL_MODEL}.json Arguments:

attack_model_code,attack_dataset: which retrieval model and dataset that we used to generate the adversarial passages. We will read the corresponding.jsonfiles to load the generated adversarial passages according to these two arguments.advp_path: the path where the adversarial passages are saved.num_advp: the number of adversarial passages we considered during attack.eval_model_code,eval_dataset: which model and dataset that we evaluate the adversarial passages on. Wheneval_model_code!=attack_model_code, we study the transfer attack performance across different models; wheneval_dataset!=attack_dataset, we study the transfer attack performance across different domains/datasets.orig_beir_results: the.jsonfile where the retrieval results are saved. If this argument is not passed, we will try to automatically find the results inresults/beir_results.

The evaluation results will be saved in results/attack_results by default.

If you have any questions related to the code or the paper, feel free to email Zexuan Zhong (zzhong@cs.princeton.edu) or Ziqing Huang (hzq001227@gmail.com). If you encounter any problems when using the code, or want to report a bug, you can open an issue. Please try to specify the problem with details so we can help you better and quicker!

If you find our code useful, please cite our paper. :)

@inproceedings{zhong2023poisoning,

title={Poisoning Retrieval Corpora by Injecting Adversarial Passages},

author={Zhong, Zexuan and Huang, Ziqing and Wettig, Alexander and Chen, Danqi},

booktitle={Empirical Methods in Natural Language Processing (EMNLP)},

year={2023}

}