COLLIE: Systematic Construction of Constrained Text Generation Tasks (Website)

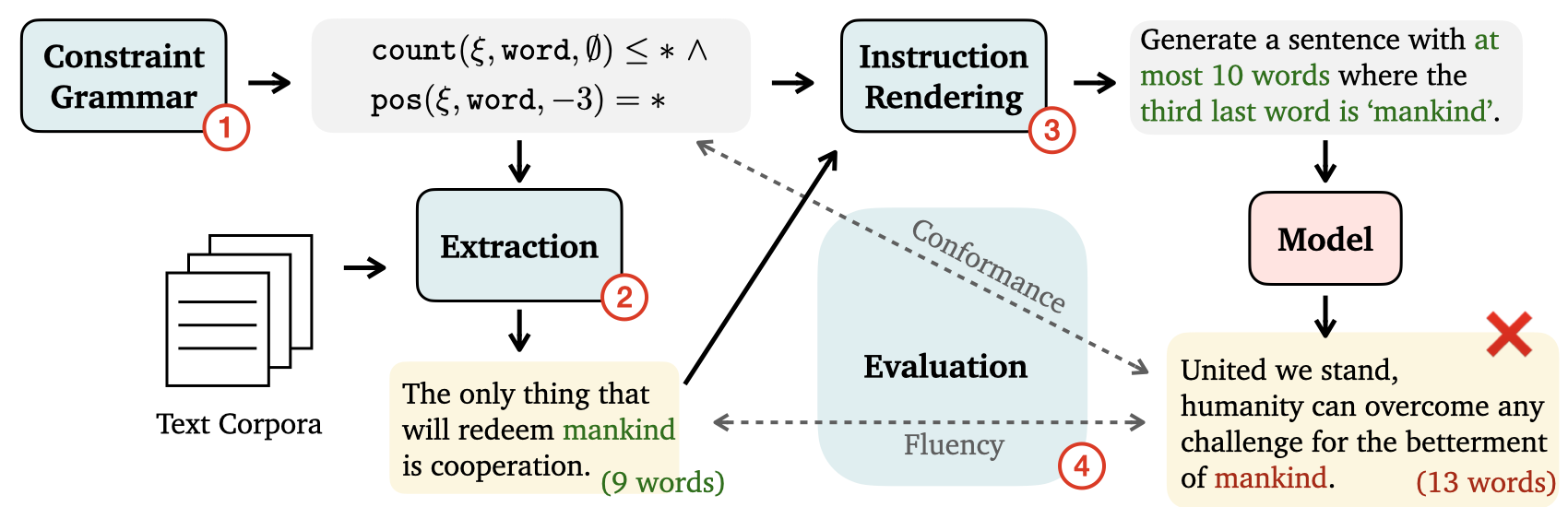

We propose the COLLIE framework for easy constraint structure specification, example extraction, instruction rendering, and model evaluation. Paper: https://arxiv.org/abs/2307.08689.

We recommend using Python 3.9 (Python 3.10 may currently be incompatible with certain dependencies).


Install from PyPI:

pip install collie-bench

Install from repo in development mode:

pip install -e .

After installation, you can access the functionality through import collie.

There are two main ways to use COLLIE:

- Use the COLLIE-v1 dataset to run your methods and compare to the ones reported in the paper

- Use the COLLIE framework to construct new, custom, or harder constraints

The dataset used in the paper is at data/all_data.dill and can be loaded by

import dill

with open("data/all_data.dill", "rb") as f:
    all_data = dill.load(f)

all_data will be a dictionary whose keys combine the data source and constraint type, and whose values are lists of constraints. For example, all_data['wiki_c07'][0] is

{

'example': 'Black market prices for weapons and ammunition in the Palestinian Authority-controlled areas have been rising, necessitating outside funding for the operation.',

'targets': ['have', 'rising', 'the'],

'constraint': ...,

'prompt': "Please generate a sentence containing the word 'have', 'rising', 'the'.",

...

}

Reproducing the results reported in the paper:

- Our model results can be found in the logs/ folder

- To plot the figures/tables in the paper, check out scripts/analysis.ipynb

- To run the models to reproduce the results, run python scripts/run_api_models.py and python scripts/run_gpu_models.py
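The dataset layout described earlier (a dictionary mapping a source-and-constraint key to a list of entries) can be explored with a few lines of Python. The sketch below uses a small in-memory stand-in built from the entry shown above instead of loading data/all_data.dill; the helper name summarize is hypothetical, and only the 'example', 'targets', and 'prompt' fields from the example are assumed to exist.

```python
from typing import Any, Dict, List

# Stand-in mirroring the documented layout. In practice this object would
# come from dill.load on data/all_data.dill.
all_data: Dict[str, List[Dict[str, Any]]] = {
    "wiki_c07": [
        {
            "example": (
                "Black market prices for weapons and ammunition in the "
                "Palestinian Authority-controlled areas have been rising, "
                "necessitating outside funding for the operation."
            ),
            "targets": ["have", "rising", "the"],
            "prompt": "Please generate a sentence containing the word "
                      "'have', 'rising', 'the'.",
        }
    ]
}


def summarize(data: Dict[str, List[Dict[str, Any]]]) -> Dict[str, int]:
    """Hypothetical helper: map each key to its number of entries."""
    return {key: len(entries) for key, entries in data.items()}


counts = summarize(all_data)
for key, n in counts.items():
    first = all_data[key][0]
    print(f"{key}: {n} entries; first prompt: {first['prompt']}")
```

Printing one prompt per key is a quick way to see which constraint each key corresponds to before running a model over the whole set.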

The framework follows a 4-step process:

Step 1: Constraint Specification (Complete Guide)

To specify a constraint, you need the following concepts defined as classes in collie/constraints.py:

- Level: deriving classes InputLevel (the basic unit of the input) and TargetLevel (the level for comparing to the target value); levels include 'character', 'word', 'sentence', etc.

- Transformation: defines how the input text is modified into values comparable against the provided target value; it derives classes like Count, Position, ForEach, etc.

- Logic: And, Or, All that can be used to combine constraints

- Relation: relation such as '==' or 'in' for comparing against the target value

- Reduction: when the target has multiple values, you need to specify how the transformed values from the input are reduced, such as 'all', 'any', 'at least'

- Constraint: the main class for combining all the above specifications

To specify a constraint, you need to provide at least the TargetLevel, Transformation, and Relation.

They are going to be wrapped in the c = Constraint(...) initialization. Once the constraint is specified, you can use c.check(input_text, target_value) to verify any given text and target tuple.

Below is an example of specifying a "number of words" constraint.

>>> from collie.constraints import Constraint, TargetLevel, Count, Relation

>>> # A very simple "number of words" constraint.

>>> c = Constraint(
...     target_level=TargetLevel('word'),
...     transformation=Count(),
...     relation=Relation('=='),
... )

>>> print(c)

Constraint(

InputLevel(None),

TargetLevel(word),

Transformation('Count()'),

Relation(==),

Reduction(None)

)

Note that if no InputLevel is provided when initializing the constraint, the input text will be viewed as a sentence without any splitting. See step 4 for how the constraint is used to compare a given text and a target value.

Check out the guide to explore more examples.
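To make the roles of the pieces concrete, here is a toy sketch of the level, transformation, and relation pipeline for the word-count constraint. It is not COLLIE's implementation: toy_check, split_words, and RELATIONS are hypothetical names, the regex-based word splitting is an assumption (the library's own tokenization may differ), and the '>=' and '<=' relations are included only for illustration.

```python
import operator
import re
from typing import List

# Hypothetical stand-ins for illustration only; these are not COLLIE classes.
RELATIONS = {"==": operator.eq, ">=": operator.ge, "<=": operator.le}


def split_words(text: str) -> List[str]:
    """Toy word-level split: runs of letters, digits, or apostrophes."""
    return re.findall(r"[A-Za-z0-9']+", text)


def toy_check(text: str, target: int, relation: str = "==") -> bool:
    """Count the words in text and compare the count to target."""
    value = len(split_words(text))             # transformation: count at word level
    return RELATIONS[relation](value, target)  # relation against the target value


print(toy_check("This is a good sentence.", 5))  # True
print(toy_check("This is a good sentence.", 4))  # False
```

The point of the separation is that swapping the level (for example, characters instead of words) or the relation changes the constraint without touching the rest of the check.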

Step 2: Extraction (Complete Guide)

Once the constraints are defined, you can extract examples that satisfy them from the data sources (e.g., Gutenberg, Wikipedia).

To download the necessary data files, including the "Gutenberg, dammit" corpus, to the data folder, run from the project root directory:

bash download.sh

Run extraction:

python -m collie.examples.extract

This will sweep over all constraints and data sources defined in collie/examples/. To add more examples, add them to the appropriate Python files.

Extracted examples can be found in the folder sample_data. The files are named as: {source}_{level}.dill. The data/all_data.dill file is simply a concatenation of all these source-level dill files.
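As a rough illustration of how per-file outputs could be combined into one dictionary, the sketch below merges every .dill file in a folder. Several things here are assumptions rather than facts about the repository: that the merged dictionary is keyed by the file stem, that each file holds a list of examples, and the function name merge_example_files itself. The demo uses pickle and made-up file names so that it runs with the standard library alone; for real files, pass dill.load as the load argument.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Any, Callable, Dict


def merge_example_files(folder: str, load: Callable = pickle.load) -> Dict[str, Any]:
    """Hypothetical helper: load every *.dill file in folder, keyed by file stem."""
    merged: Dict[str, Any] = {}
    for path in sorted(Path(folder).glob("*.dill")):
        with open(path, "rb") as f:
            merged[path.stem] = load(f)
    return merged


# Demo on a temporary folder with made-up file names and contents.
with tempfile.TemporaryDirectory() as tmp:
    demo_files = {"wiki_word": ["ex1"], "guten_sentence": ["ex2", "ex3"]}
    for name, examples in demo_files.items():
        with open(Path(tmp) / f"{name}.dill", "wb") as f:
            pickle.dump(examples, f)
    merged = merge_example_files(tmp)

print(sorted(merged))  # ['guten_sentence', 'wiki_word']
```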

Step 3: Rendering

To render a constraint, simply run:

>>> from collie.constraint_renderer import ConstraintRenderer

>>> renderer = ConstraintRenderer(
...     constraint=c,  # Defined in step one
...     check_value=5
... )

>>> print(renderer.prompt)

Please generate a sentence with exactly 5 words.

Step 4: Evaluation

To check constraint satisfaction, simply run:

>>> text = 'This is a good sentence.'

>>> print(c.check(text, 5))

True

>>> print(c.check(text, 4))

False

Please cite our paper if you use COLLIE in your work:

@misc{yao2023collie,

title={{COLLIE}: Systematic Construction of Constrained Text Generation Tasks},

author={Shunyu Yao and Howard Chen and Austin W. Hanjie and Runzhe Yang and Karthik Narasimhan},

year={2023},

eprint={2307.08689},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

The code is released under the MIT license. Note that this license covers our code only; each data source retains its own license.