This is the official code repository for the CVPR 2024 work: Neural Spline Fields for Burst Image Fusion and Layer Separation. If you use parts of this work, or otherwise take inspiration from it, please considering citing our paper:

@inproceedings{chugunov2024neural,

title={Neural spline fields for burst image fusion and layer separation},

author={Chugunov, Ilya and Shustin, David and Yan, Ruyu and Lei, Chenyang and Heide, Felix},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={25763--25773},

year={2024}

}

- Code was written in PyTorch 2.0 on an Ubuntu 22.04 machine.

- Condensed package requirements are in

\requirements.txt. Note that this contains the exact package versions at the time of publishing. Code will most likely work with newer versions of the libraries, but you will need to watch out for changes in class/function calls. - The non-standard packages you may need are

pytorch_lightning,commentjson,rawpy, andtinycudann. See NVlabs/tiny-cuda-nn for installation instructions. Depending on your system you might just be able to dopip install git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch, or might have to cmake and build it from source.

NSF

├── checkpoints

│ └── // folder for network checkpoints

├── config

│ └── // network and encoding configurations for different sizes of MLPs

├── data

│ └── // folder for long-burst data

├── lightning_logs

│ └── // folder for tensorboard logs

├── outputs

│ └── // folder for model outputs (e.g., final reconstructions)

├── scripts

│ └── // training scripts for different tasks (e.g., occlusion/reflection/shadow separation)

├── utils

│ └── utils.py // network helper functions (e.g., RAW demosaicing, spline interpolation)

├── LICENSE // legal stuff

├── README.md // <- you are here

├── requirements.txt // frozen package requirements

├── train.py // dataloader, network, visualization, and trainer code

└── tutorial.ipynb // interactive tutorial for training the modelWe highly recommend you start by going through tutorial.ipynb, either on your own machine or with this Google Colab link.

TLDR: models can be trained with:

bash scripts/{application}.sh --bundle_path {path_to_data} --name {checkpoint_name}

And reconstruction outputs will get saved to outputs/{checkpoint_name}-final

For a full list of training arguments, we recommend looking through the argument parser section at the bottom of \train.py.

You can download the long-burst data used in the paper (and extra bonus scenes) via the following links:

-

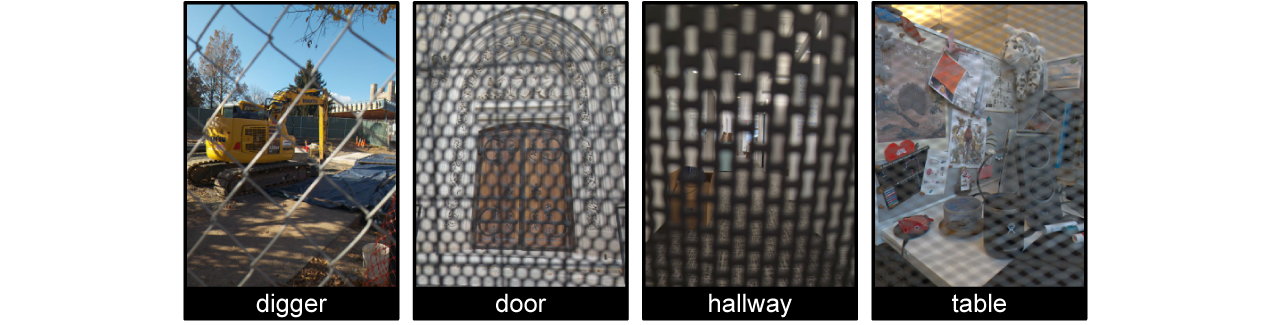

Main occlusion scenes: occlusion-main.zip (use

scripts/occlusion.shto train)

-

Supplementary occlusion scenes: occlusion-supp.zip (use

scripts/occlusion.shto train)

-

In-the-wild occlusion scenes: occlusion-wild.zip (use

scripts/occlusion-wild.shto train)

-

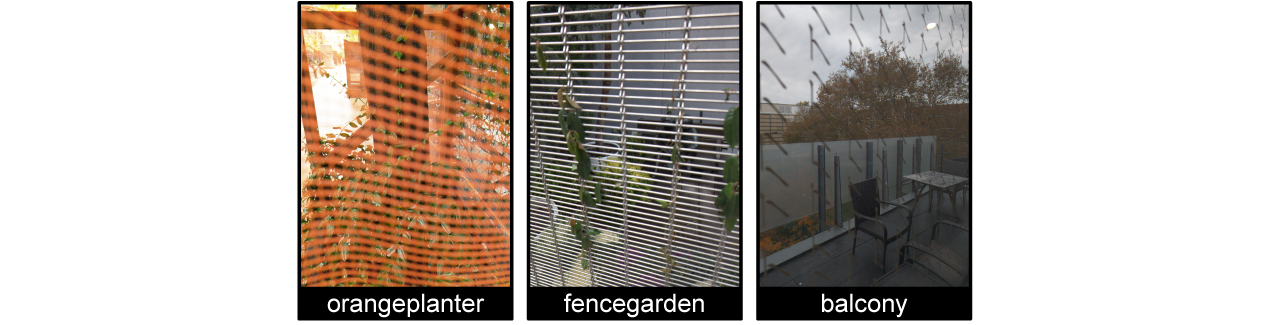

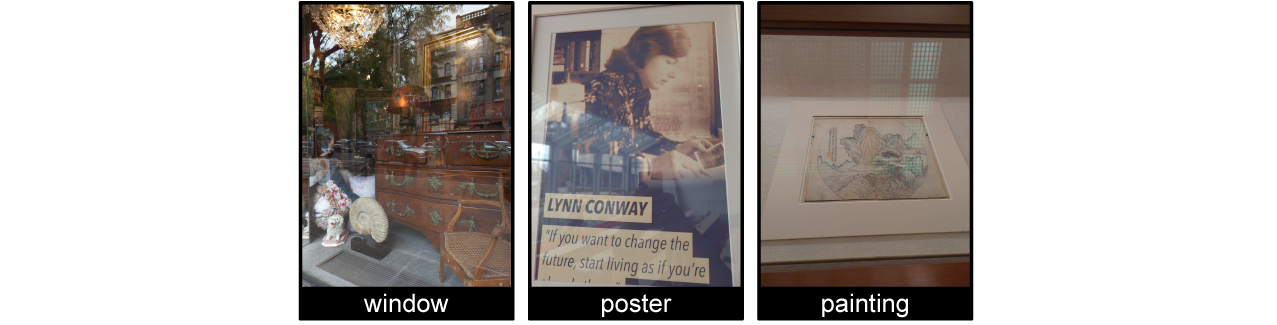

Main reflection scenes: reflection-main.zip (use

scripts/reflection.shto train)

-

Supplementary reflection scenes: reflection-supp.zip (use

scripts/reflection.shto train)

-

In-the-wild reflection scenes: reflection-wild.zip (use

scripts/reflection-wild.shto train)

-

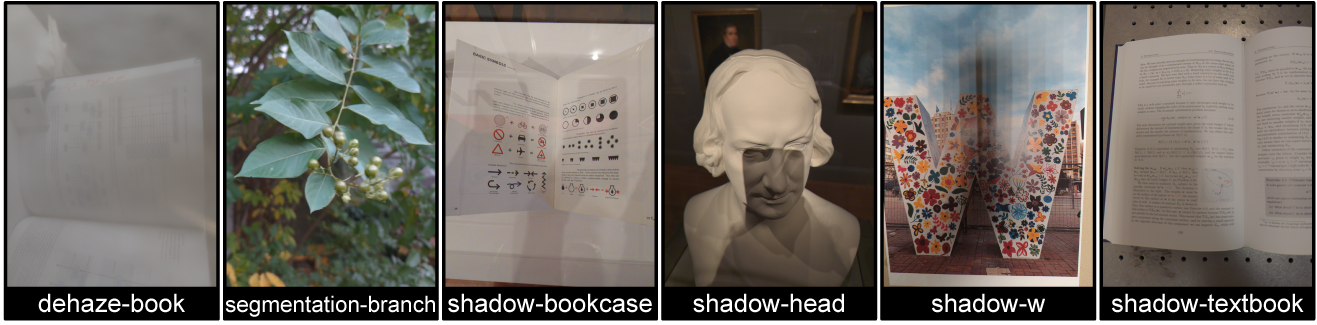

Extra scenes: extras.zip (use

scripts/dehaze.sh,segmentation.sh, orshadow.sh)

-

Synthetic validation: synthetic-validation.zip (use

scripts/reflection.shorocclusion.shwith flag--rgb)

We recommend you download and extract these into the data/ folder.

Want to record your own long-burst data? Check out our Android RAW capture app Pani!

Good luck have fun, Ilya