This is a narrative on how computational structures such as data structures, programming constructs, and algebraic structures undergirding Computer Science display deep parallels among them. The mathematical terminology for making the links between two objects of study is called a morphism. It allows one to study analogies occuring across different categories rigourously by examining their underlying structure. I first came across these as as I was exploring features in computer programming languages and I kept finding out that they exhibited parallels to ideas in domains remote to Computer Science. It is designed to act as compilation of the rich skein of conceptual coherences and interconnections of computation as unearthed by research scientists across the globe.

I was able to understand that the following subjects:

| Logic | Algebra | Language | Computation | Categories | Topology/Spaces | Quantum Mechanics |

all have deep links with each other, which is being unravelled by multiple scientists all across the world. It is one of the most exciting things to be learning any one of these subjects and then finding out links about these in the other subjects. There is a popular term called Curry-Howard isomorphism, which has been getting expanded into various forms as new links are unearthed. One can see this elaborated as:

- Curry-Howard-Lambek

- Curry-Howard-Tait

- Curry-Howard-Lambek-Scott-Tarski-Stone

- Curry-Howard-Brouwer-Heyting-Kolmogorov

and so on as the articles and papers touching upon these issues try to draw the parallels. It is safe to say that there is much happening in an interdisciplinary manner and this document attempts to give a partial documentation of some of the links the author has been able to patch together. Hope you enjoy finding the interconnections!

- TODO: Role of Analysis / Synthesis

- TODO: Might have to go into cybernetics for a bit when mentioning synthesis

- TODO: This would tie in with the genesis of the ideas of entropy in the Macy Circles

- TODO: Discuss what ideas the second generation cybernetists uncovered (Foerster, Pask, Varela, Maturana etc.)

- TODO: Add in Baez-Stay Rosetta work here

- TODO: Describe about Theory A and Theory B side of computation

Theory A concentrates on Programming Languages. Theory B concentrates on complexity theory

One of the prominent ideas in complexity theory is that once you have a description of a computational process, you can now have a way in which to compare these algorithms with each other.

If you have an algorithmic process to solve it, then it comes down into polynomial time.

The problem that is opened up when something is not polynomial time is that you would have to search throughout the computational universe in an enumerative fashion for the solution. This means that it could take a lot of time. Even for creating a simple image, like say 32 x 32 with 2 colors could take an astronomical amount of time. And if we even just increase the amount a tiny bit, you could even go beyond the number of seconds till predicted lifespan of the Universe, even with the fastest computer.

- TODO: Mention this thought experiment

So, polynomial time is associated with algorithms which we have been able to device for computationally tractable problems and NP is the time usually (Verify if this is a true claim), with ones where we don’t have a proper algorithm to solve it.

- TODO: Discuss if the whole problem can be devised in a way so as to arrive at way to falsify it.

The place where this matters is that for the number of open problems in mathematics and computer science, you have P associated with the problems for which we have been able to find a way to traverse the space of possibilities in a way so that we achieve neat time metrics and the intractable ones for which we still haven’t been able to study/understand the space in which they are inhabited except traversing it one unit at a time which presents us a problem in the presence of finite space, time and computational resources.

- TODO: Describe about P vs. NP

- TODO: Shannon’s role

- TODO: Diagram showing how Curry to Howard to De Bruijn AUTOMATH, Kolmogorov to Martin Löf to FOLDs to Voevodsky (Homotopy Type Theory) + Graphic Formalisms + Work in Quantum Mechanics

- TODO: Note how propositions are now types, but types are not propositions. And types are the concepts used in a synthetic manner

When I was young, I found people talking about ideas like Curry-Howard isomorphism or discussing the struggle about learning about monads pretty intriguing. Though, I lacked the technical know-how at that time to evaluate what was even being addressed. But as I started looking into these over a period of time, I slowly became immersed in the terminology enough to understand what was being referred to and what was at stake. If you find yourself puzzled about what all these ideas are about but are at loss on how to proceed, let me share some of the things that have helped me in gaining traction to understand them.

Since 1900s there have been emergent fields in mathematics like universal algebra, category theory that attempt to capture rigorously the parallels between different domains of study. These studies along with the requirement for engineering complex systems and our drive to understand these ideas deeply, lead to setting up fields within computer science to examine them ideas closely. Some of these domains of inquiry include automata theory, algorithmic complexity, and different kinds of logical and (axiomatic/operational/denotational/categorical) semantic studies.

Reading through this literature and paying attention to discoveries happening in Computer Science made me alert to the idea that something is up. There seems to be something strange and deep happening in the intersection of Computer Science and Mathematics. Observing my own work with programming languages made me see how they have deep congruences when you look closer at the surface structure of programming languages and use this to understand their deeper structures. Computing can bet hought of as a medium and programming languages as a way for interacting with these computational structures. Each of such structures that are constructed and deconstructed in the computers differ in the way they provide tractability and compositionality. Bringing together abstractions from mathematics and sciences help us see how each programming language differ and unite by casting them in a setting where their fundamental nature is made visible and can be tinkered with.

This repository attempts to capture the (hi)story of how these emerged, and the key people who contributed to it. I intend to turn it into a visual catalogue of what kinds of morphisms/structure preserving maps computational structures display among each other written in a manner communicable to someone who have sensed a kind of resonance across very different fields of computation, but would like to explore if there is a meta-structure emerging here.

My motivation towards studying these concepts is that they allow you to figure out the deep unity and distinction among different concepts in programming languages. Apart from programming languages, these studies also shine light on how natural language could be tied to programming languages. These I sense provide a certain setting in which you can understand how language, grammars, mechanism, and mind are related.

Also, it is of great value in doing advancing programming methods and the field is being actively researched. There has been a ton of activities in these domains and it is intimidating for an entrant to understand the who, what, how and why of these. This document is my humble attempt at trying to bring a structure to the tangled web of development so that it might help someone to make sense when undertaking a similar journey. Hope it helps!

I also keep a rough journal of how I came across the ideas here.

And if you find any errors or have feedback, please reach out to me on Twitter: @prathyvsh

Concepts under study

- Fixed Point: Fixed points can be thought of as the state when an input to a function returns itself as the output.

This is an important idea in computation as fixed points can be thought of as modelling loops and recursion.

- Continuations: Continuations can be thought of as a construct that carries with it the context that need to be evaluated.

- Lazy Evaluation / Non-strictness: Lazy evaluation also known as non-strictness, delays the evaluation of a program and lets a user derive the values on demand.

- Actors: Actors are models of concurrency devised by Hewitt. He found the aspect of lack of time in Lambda Calculus a setback and sought to amend it with his model.

- Closures: Closures are contexts of function execution stored for computational purposes

- Automata Theory

- Algebraic Effects: Algebraic Effects allow one to build up composable continuations.

- Monads: Originally deriving from abstract algebra, where they are structures that are endofunctors with two natural transformations. Monads when used in the programming context can be thought of as a way to bring in infrastructure needed for composing functions together.

- Montague Quantification: Montague considered programming language and natural languages as being united with a universal grammar. His idea of quantification is thought to be parallel to continuations in programming languages.

- Generators/Iterators: Constructs that allows one to control the looping behaviour of a program

- ACP

- Pi Calculus / Calculus of Communicating Systems

- Full Abstraction

- Bisimulation

- Communicating Sequential Processes

- Combinatory Logic

- Lambda Calculus

- Homotopy Type Theory

- Constructive Mathematics

- Ludics

- Linear Logic

- Geometry of Interaction

- Transcendental Syntax

- Game Semantics

- Domain Theory

- Algebraic Structures

Magmas, Semigroup, Quasigroup, Loop, Monoid, Monad, Group, Abelian Groups, Ring, Fields, Lattice, Modules, Filters, Ideals, Groupoid, Setoid, Trees, Lists, Units

Algebraic structures are studied under universal/abstract algebra with each species sharing a different structural property. They can be thought of as sharing a set with certain operations that gives them a particular nature.

They have deep connections with computation as most of the structures that we deal with in computer science belongs to the algebraic species studied by mathematicians.

- Data and Co-Data

- Algebras and Co-Algebras

- Initial and Final Algebras

- Morphisms

- Recursion Schemes

- Covariance and Contravariance

- Monotonicity

The study of computation is something that has deep roots into antiquity. Keeping in mind that it is anachronistic to ascribe modern concepts to describe what our ancestors did, some proto-form of computation can be seen in the ancient divination devices used in ancient Arab culture and medieval period. The 17th, and 18th century found many great minds setting a ground for modern algebra to take roots and a significant break in the tradition can be thought of as coming from the English school of logic where algebra and logic was combined. After this period great advances where made throughout the 19th century which set the stage for the intellectual advancements of the 20th century where the idea of computation takes shape.

There are several works that contributed to the emergence of computer science but some of the figures that have had a salient early influence in shaping up the idea of computation were the works of Gödel, Frege, Hilbert, Russell, Post, Turing, and Whitehead.

Towards 1910s, a framework called Lambda Calculus was invented by Alonzo Church, inspired by Principia Mathematica. Principia Mathematica was an undertaking to ground all of mathematics in logic. It was created in response to the Hilbert program to formalize effective calculability. Lambda Calculus became one of the standard environment to do work on computation in academic circles. This inspired Scott-Strachey-Landin line of investigations to base programming language studies on it.

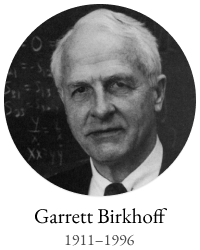

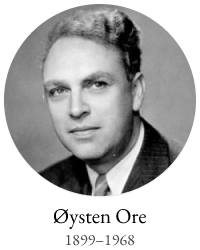

In 1930s, work on Universal Algebra, commenced by Whitehead, were given a clarified format by mathematicians like Oysten Ore, and Garrett Birkhoff.

Towards 1940s, one would see the development of Category Theory. A huge amount of intellectual advances are made from this theoretical vantage point that would contribute towards studying the morphisms between different theoretical models.

Lattice Theory, Universal Algebra, Algebraic Topology, and Category Theory became fields with intense investigation into the mathematical structure. It is during this period of intense activity that Godemont invented monads under the name “standard construction” in his work Théorie des faisceaux (Theory of Sheaves) (1958).

John McCarthy was one of the first persons to attempt to give a mathematical basis for programming. In his paper Towards a mathematical science of computation (1961), he discussed the then three current directions of numerical analysis, complxity theory and automata theory as inadequate to give a firm footing to software engineering as practiced in the day and attempted to give his ideas on building a firm foundation.

- TODO: Add in image of John McCarthy

Three approaches to programming language semantics emerged in the 1960s. Mathematical semantics attempted to act as a metalanguage to talk about the programs, their structures, and data handled by them. This in turn would also act as a precise way to provide specification for compilers.

The operational approach took the compiler itself to constitute a definition of the semantics of the language.

There are three kinds:

1/ Small Step or Structural Operational Semantics

It was introduced by Gordon Plotkin.

This method makes use of a transition relation to ddefine behaviour of programs. SOS specifications make use of inference rules that derive the valid transitions of a composite syntax in terms of the transitions of its components.

2/ Reduction Semantics by Matthias Felleisen and Robert Hieb

This is devised an equational theoy for control and state. It uses the idea of contexts where terms can be plugged in.

3/ Big Step Semantics or Natural Semantics

This method was invented by Gillies Kahn. It describes in a divide and conquer manner how the final evaluation results of language constructs can be obtained by combining the evaluation results of their syntactic counterparts.

Denotational Semantics was pioneered by Christopher Strachey and Dana Scott in the 1970s.

Denotational Semantics is powered by Domain Theory, which is a Tarskian style semantics for understanding programming language constructs. By providing models for programming language constructs, it acts as a ground to understand what each kind of programming languages translate into a mathematical object setting. This has the advantage that now models for PL constructs can now be interpreted as mathematical entities and any two language can now be compared for equivalence and relationships by looking at the mathematical objects they desugar/translate into. The mathematical setting initially (currently?) adopted is that of set-theoretic objects but I think its not fully tied to that and the language can be changed to that of say hypersets or categories.

Denotational Semantics uses this framework for understanding programming language semantics. Programming language constructs are understood as objects with well-defined extensional meaning in terms of sets.

We make use of structural induction to provide denotational semantics for expressions.

TODO: Somehow diagonalization proof of D → (D → E) is used to hint at the idea almost all functions are uncomputable. I need to understand this proof to address this idea of how it connects up with the well-foundedness(?) nature of (co)domains. Hint from document

Pioneered by R. W. Floyd in 1967, it linked logical statements to the steps of the program thereby specifying its behaviour as well as providing a means of verifying the program.

They used it to understand different programming language constructs popular at the time. Landin came up with operational semantics and Scott/Strachey with denotational semantics that modelled programming languages by mapping them to mathematical models.

Using these formalizations, one can start to reason about what different constructs in programming language mean (operation wise / structure preserving mapping wise) and conduct studies on them for discovering their properties and complexity parameters.

In “Toward a Formal Semantics” Strachey distinguished between L-values and R-values. The computer’s memory was seen as a finite set of objects, which is well ordered in some way by a mapping that assigns each of them a name, their L-value. And also, each object is a binary array which may be seen as the R-value. A computer program can thus be seen as a mapping from a set of values and names to another set of values and names.

Scott set the stage for the work of semantics with his paper: Outline of a Mathematical Theory of Computation

Scott’s work resulted in domain theory where lambda calculus was interpreted as modelling continuous lattices.

- TODO: Understand how CPO figures in here.

Domain theory resulted from the attempt of Dana Scott to supply Lambda Calculus with a model.

He arrived at this by using a particular kind of partial orders (directed acyclic graphs) called lattices.

Within this theory, we are trying to construct a model or a type of space (decide which), where you can give an interpretation for the lambda term morphisms. That is, Lambda Calculus, on composition takes one lambda term as an input and generates another by way of evaluations. Domain Theory tries to give it a model theoretic interpretation.

- TODO: Rewrite the above paragraph once you achieve more clarity.

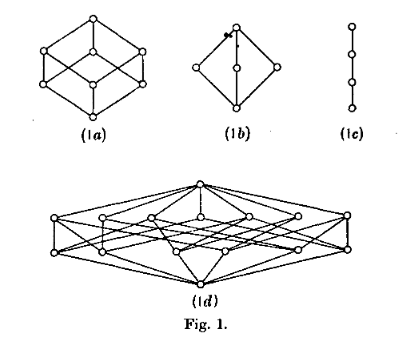

A semi-lattice is a structure in which each pair of elements either have a common ancestor or a common descendant. A complete lattice is a structure which has both. If you think about these structures as sort of rivers that originate from a common point and then finally culminate in a common end point, that would be a somewhat close metaphor.

The central idea with a complete lattice is that for any pair of elements, you would be able to find both a common ancestor node upstream and a common descendant node downstream..

- TODO: Add an illustrated image of a lattice here.

Scott identified continuous partial orders as the domain he want to work with and equipped it with a bottom type, which stood for undefined values. This undefined value, enables one to represent the computations which are partial: that is, once that have not terminated or has a value, like 1 divided by 0.

Domains are structures which are characterized as directed-complete partially ordered sets.

To get an idea of what joins and meets are:

Say we have 3 elements with some information in them. Joins roughly correspond to the smallest element which contains all the information present in the three nodes Meets roughly correspond to the largest element such that every element contains more information than the 3 elements.

If you think in set theoretic terms, they correspond to the intersection and union operations.

- TODO: I think there’s something to be talked about distributivity here on how it impinges on the nature of information.

Directed set is a partial order which doesn’t necessarily have a supremum/meet. Think of a total order (which also makes it a partial order) which doesn’t have a top element such as the natural numbers. Here, there’s no top element, which makes it a directed set. But if we equip it with an top element, we now have a partial order that is completed.

By having a supremum for any two elements, we are having a system in which there’s a third one encapsulating the information content of both of them.

Any finite poset fulfills the supremum property, but there may be interesting cases when you move to infinite domains.

The next property needed is continuity. Besides, the ordering >=, there’s a >> which corresponds to approximation. x approximates y iff for all directed sets A, where supremum(A) >= y, there’s a z in A such that z >= x. An element that approximates itself is compact.

A continuous directed-complete partial order is one where for all points, the supremum approximates it.

These dcpos are also equipped with a ⊥ element which is at the bottom of every element. Which makes it a pointed set. So, domains are continuous dcpops that is, continuous direct-completed partially ordered pointed set, where ⊥ is the basepoint.

- TODO: Clarify, what it means for a supremum to approximate it.

This is a nice post to get an understanding of some of the basics.

These are some of the properties commonly assumed by the partial orders used in Domain Theory. One or more of these properties can inhere in the structures studied. For example there can be a pointed completed partial order or a lifted discrete partial order as per the context demands.

- Discrete

- Lifting

- Flat

- Pointed

- Completed

- Chain

A chain is a total order in which all elements are comparable.

- Antichain

TODO: Insert image Antichain is the collection of elements which are not comparable. It can roughly be thought of as the “width” of the partial order. Think elements in two separate branches of a tree such as Chennai and London in (Countries, (India, (Tamil Nadu, Chennai)), (United Kingdom, (England, London))).

- ⍵-Chain

Infinite chains indexed by natural numbers.

- Algebraic

- Ordering

- Partial Ordering

- Continuity

- Monotonicity

- Completeness

- Compactness

- Compositionality

Inspired by Stephen Kleene’s characterization of events in Warren McCullough and Walter Pitts paper (that birthed the model of neural networks), Michael Rabin and Dana Scott showed that finite automata defined in the manner of Moore machines accepted a regular language (which algebraically correspond to free semigroups).

There was a flurry of work in understanding how control flow constructs work post 1960s which is documented in the work of John Reynolds (See Resources section). There ensued work on denotational models of effectful (state, control flow, I/O) and non-deterministic (concurrency/parallelism) languages.

This rise in complexity and clarity would lead to the use of topological/metric spaces to be brought to bear on studying computational structures.

In Definitional Interpreters for Higher Order Programming Languages (1972), John Reynolds brings out the relationship between Lambda Calculus, SECD, Morris-Wadsworth method and his own definition for GEDANKEN. This work introduces the idea of defunctionalization: A method of converting a language with higher order functions into first order data structures.

Defunctionalization allows to treat programming languages as algebraic structures. In this sense, they are related to F-algebras.

Reynolds also distinguishes in this paper between trivial and serious functions which would later transform into showing the duality between values and computations. The parallel here is that values are the results that have been acquired from processes that have terminated and computations are processes that needs to be computed. This idea is emphasized in Essence of Algol (1997). Continuations are the term for computations that remains to be processed and defunctionalization is the method by which you turn a computation into a value and refunctionalization the reverse process. Defunctionalization, so to speak, gives a handle on the underlying computation which is active at runtime.

An important paper in this direction seems to be The Category-Theoretic Solution of Recursive Domain Equations

Eugenio Moggi brought together monads and control flow constructs in Lambda Calculus in late 1980s. This was further developed in his works: An Abstract View on Programming Languages (1989) and Notions of Computation and Monads (1991). This paper tries to characterize various kinds of computations such as partial, non-deterministic, side-effecting, exceptions, continuations, and interactive I/O and supplies a framework from which it can be analyzed.

Moggi’s semantics was used by Philipp Wadler to simplify the API of Haskell from CPS-based to monad based. A good read in this direction to understand how monads can be used is the work on Query Combinators by Clark Evans and Kyrylo Simonov. They describe how their work on creating a database query language lead them to understand its denotation as (co)monads and (bi-)Kleisi arrows. Fong and Spivak in their book Seven Sketches in Compositionality also describe similar ideas.

- TODO: Discuss about how modal logic and monads are related. I got this idea from reading Data and Interpretation article here: https://medium.com/@bblfish/data-interpretation-b9273520735c

What needs to be figured out is how this idea of bringing in determinacy in the computational context is linked to the geometrical idea of creating a standard construction as per Godement. Is the idea of creating a tree like structure(?) from an interconnected directed graph (possibly with loops) linked to how we study geometrical objects using these same ideas?

I would have to understand the connection between analysis and geometry more to bring these insights back into a computational context.

Explore how monadic API which makes state tractable is related to the semantic aspect of how functional programming has a syntactic notion of unfolding like a derivation tree of a grammar.

TODO: Add some details on the Dutch School

Eric G. Wagner

Paper on the history by Gibbons:

Videos by Bird and Merteens: http://podcasts.ox.ac.uk/series/algebra-programming

The area of coalgebra hopes to aim the subjects of various formal structures that capture the essence of state-based computational dynamics such as automata, tranistion systems, Petri nets, event systems etc.

It promises a perspective on uniting, say, the theory of differential equations with automata and process theory and with biological and quantum computing, by providing an appropriate semantical basis with associated logic.

Coalgebras are about behaviour and dual to algebras which are about structure.

The central emphasis is between observables and internal states.

If a program can be understood as an element in an inductively defined set P of terms: F(P) -> P where the functor F captures the signature of the operations for fomring programs,

Coalgebra is the dual G(P) -> where the functor G catpruse the kind of behaviour that can be displayed — such as deterministic, or with exceptions.

A generate computer behaviour amounts to the repeated evaluation of an (inductively defined) coalgebra structure on an algebra of terms.

VERIFY: OOP is coalgebraic, FP is algebraic

Every programming language consists of an algebra, the structured elements (so called initial algebra). And each language corresponds to certain dynamical behaviour captured by a coalgebra acting on the state space of the computer.

Structural operational semantics is used to study this coalgebraic behaivour.

In coalgebra, it could be the case that internal states are different, but the observables are indistinguishable. This is called bisimilarity or observational equivalence.

There could also be the inverse case that the internal states are the same, but the observable properties are different, such as in an algebra, which have two different valid interpretive frames.

- TODO: Is this called congruence?

- TODO: Describe about bialgebras

Work of Arbib, Manes and Goguen and also Adámek who analyzed Kalman’s work on linear dynamical systems, in relation to automata theory. This lead to a formulation for placing sequential machines and control systems in a unified framework by developing a notion of ”machine in a category”. This lead to general notions of state, behaviour, reachability, observability and realization of behaviour. The notion of coalgebra did not emerge here probably because the setting of modules and vector spaces from which this work arose rpovided too little categorical infrastructure (especially: no cartesian closure) to express these results purely coalgebraically.

Michael A. Arbib and Ernest G. Manes

Jiří Adámek

Aczel formed a crucial step with his set theory that allows infinitely descending ∈-chains, because it used coalgebraic terminology right from the beginning. The development of this theory was motivated by the desire to provide meaning to Milner’s theory of CCS of concurrent processes with potentially infinite behaviour. Therefore, the notion of bisimulation from process theory played a crucial role. Aczel showed how to treat bisimulation in a coalgebraic setting by establishing the first link between proofs by bisimulations and finality of coalgebras.

Peter Aczel, Nax Mendler

Peter Aczel

The first approaches to data types in computing relied on initiality of algebras. The use of final coalgebras in

Michael A. Arbib, Ernest G. Manes

Tatsuya Hagino

Dusko Pavlović, Vaughan Pratt

Mitchell Wand

to capture infinite structures provided an important next step. Such infinite structures can be represented using lazy evaluation or in logical programming languages.

Gopal Gupta, Ajay Bansal, Richard Min, Luke Simon, and Ajay Mallya

Gopal Gupta, Neda Saeedloei, Brian DeVries, Richard Min, Kyle Marple, Feliks Kluźniak

Talk available here: https://www.microsoft.com/en-us/research/video/logic-co-induction-and-infinite-computation/

Luke Simon, Ajay Mallya, Ajay Bansal, Gopal Gupta

In semantics of programm and process languages it appeared that the relevant semantical domains carry the structure of a final coalgebra (sometimes in combination with an initial algebra structure). Especially in the metric space based tradition [50].

J. de Bakker and E. Vink

This techinque was combined with Aczel’s techniques by Rutten and Turi.

- TODO: Find out the work in which Rutten and Turi combined these techniques.

It culminated in the recognition that “compatible” algebra-coalgebra pairs (called bialgebras) are highly releant structures, described via distributive laws. The basic observation of

Daniele Turi

Daniele Turi and Gordon Plotkin

further elaborated in:

On generalized coiduction and probabilistic specification formats: Distributive laws in coalgebraic modelling (2004)

F. Bartels

, is that such laws correspond to specification formats for operation rules on (inductively defined) programs.

B. Klin

These bialgebras satisfy elementary properties like: observational equivalence (i.e. bisimulation wrt. the coalgebra) is a congruence (wrt. the algebra).

P. Freyd

M. Fiore

Horst Reichel in Behavioural Equivalence — a unifying concept for initial and final specifications (1981) was the first to use so-called behavioural validity of equations in the specification of algebraic structures that are computationally relevant. The basic idea is to divide one types (also called sorts) into ‘visible’ and ‘hidden’ ones. The latter are supposed to capture sattes, and are not directly accessible. Equality is only used for the “observable” elements of visible types. The idea is further elaborated in what has become known as hidden algebra

Joseph Goguen, Grant Malcom

V. Giarrantana, F. Gimona, U. Montanari

There seems to be a 30 years later retrospect here.

Oliver Schoett

Michel Bidiot, Rolf Hennicker

and has been applied to describe classes in OOP languages, which have an encapsulated state space. It was later realised that behavioural equality is essentially bisimilarity in a coalgebraic context

Grant Malcolm

The original title of this paper was apparently “Objects as algebra-coalgebra pairs” which was replaced on the suggestion of Rod Burstall.

and it was again Reichel

who first used coalgebras for the semantics of OOP languages.

Bart Jacobs

Modal logics qualify the truth conditions of statements, concerning knowledge, belief and time. Temporal logic is a part of modal logic which is particularly suitable for reasoning about (reactive) state-based systems.

Amir Pnueli

Amir Pnueli

Lawrence S. Moss in Coalgebraic Logic (1999) first associated a suitable modal logic to coalgebras which inspired much subsequent work.

Martin Rößiger

Martin Rößiger

Alexander Kurz

Jesse Hughes

Bart Jacobs

Dirk Pattinson

Clemens Kupke, Alexander Kurz, Yde Venema

Overview in

Clemens Kupke, Dirk Pattinson

The idea is that the role of equational formulas in algebra is played by modal formulas in coalgebra.

- TODO: Give example of a multicoded / many-sorted? syntactical representation of an algebra

Different process, same structure: 3 + 5 = 4 * 2 = 8 Same process, multiple structure: sqrt(4) = 2 in Z+ and sqrt(4) = -2 in Z-

- TODO: Learn about the distributive laws connecting algebra-coalgebra pairs

- TODO: I need to understand the algebra/co-algebra duality deeply and how it connects with

model theory, modal logic, linear logic, and topology

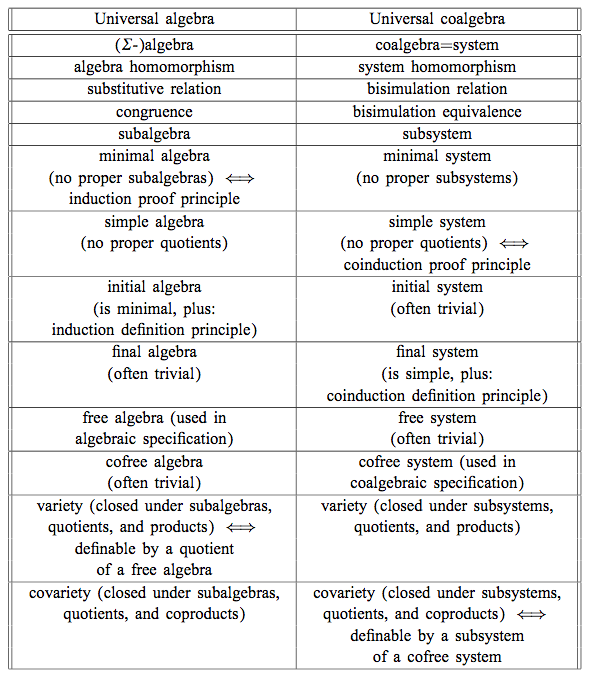

Investigations into the computational setting for abstract algebra would see emergence of fields of study like Universal Co-algebra that captures the duality in computation and values. This is a neat table from J.J.M.M Rutten’s paper on Universal Coalgebra: a theory of systems to understand the duality between different ideas of universal algebra and universal co-algebra.

Bisimulation was coined by David Park and Robin Milner during a walk when earlier that day David Park showed how there was a mistake in Robin Milner’s work on CCS. This story is told in his interview with Martin Berger.

- TODO: Detail about full abstraction and how it is related to game semantics. I might also have to link it up with CCS.

- TODO: Detail about bisimulation and coinduction

- TODO: Frame how hypersets and non-well founded set theory are used to provide a foundation for bisimulation

On the Origins of Bisimulation and Coinduction (2007) - Davide Sangiorgi

Bisimulation, Games, and Logic (1994) Mogens Nielsen Christian Clausen

Introduction to Computability Logic (2003) Giorgio Japaridze

In the beginning was game semantics (2008) Giorgio Japaridze

- TODO: Discuss about sequent calculus and cirquent calculus

Why Play Logical Games (2009) Mathieu Marion

Abramsky’s Game Theoretic Interpretation of Linear Logic

Andrzej Filinski and Olivier Danvy worked on unifying control concepts.

Filinski found out about Symmetric Lambda Calculus during his Ph. D. work. This paper detailed about the duality existing between values and continuations.

Expressions can be thought of as producing data and continuations as consuming data. Matija Pretnar uses Filinski’s representation theorem to invent effect handlers.

These works leads up to formalizing computational effects in languages like Eff and Koka.

A good bibliography of this chain can be found catalogued by Jeremy Yallop (See Resources).

A nice overview on the work of John Reynolds towards his program for logical relations is given by Uday Reddy.

- TODO: Include Uday Reddy et al.’s Category Theory programme for programming languages.

There is a parallel between creating a continuation and bringing in monadic architecture around the program. Monads help in composing functions and gives control over their execution in calling and discard them. This architecture around the code enables creating performant changes such as discarding a certain fork of the search tree of the program if grows beyond a certain complexity or even allow to accept interrupts from outside the program execution to proceed a certain computation no further. This is the sort of tractable differences that monadic architecture and continuations grant to the programmer.

- TODO: I need to describe how call/cc is connected with classical logic and how double elimination / law of excluded middle / Peirce’s Law figures in here.

To understand the link of logic with computation is this article by John F. Sowa: http://www.jfsowa.com/logic/theories.htm

The idea of creating models and the metalogical implications of constructing such intricate lattices are detailed in an accessible manner in this post.

The link with computation comes from the idea that when you construct a computational object it can resemble such a lattice from which you equationally/implicationally extract out the truths consistent in that system.

- TODO: Link this with Curry-Howard isomorphism

- TODO: Seek out if there’s a Curry-Howard isomorphism identified for classical logic

Sowa also links the idea of meaning preserving transformations and Chomsky’s linguistic attempts here: http://users.bestweb.net/~sowa/logic/meaning.htm The new version of the article which locates it in a logical system is present here: http://www.jfsowa.com/logic/proposit.htm

Girard’s work can be thought as an attempt to create types out of the structure created from the dynamical interactions among players. It is possible to reconstrut Martin Löf’s type theory within Linear Logic framework.

Recreating MLTT in Ludics: https://arxiv.org/abs/1402.2511

- TODO: Can the move from Ludics to Transcendental Syntax be thought of as a move from thinking in trees to thinking in graphs?

- TODO: Document how Girard arrived at the work on linear logic

- TODO: Detail how linear logic is a logic of resources

- TODO: Discuss the link between linear logic and constructive mathematics

https://arxiv.org/pdf/1805.07518.pdf

Type theory was devised by Bertrand Russell to solve problems associated with impredicativity in the foundations of mathematics.

How does removing this results in constructive algorithms.

- TODO: Brief history of how Law of Excluded Middle figures in the history of logic with emphasis on computational aspects

- TODO: Include the role of Brouwer here

Type-Theoretical Grammar (1994) — Aarne Ranta

Type Theory for Natural Language Semantics (2016) Stergio Chatzikyriakidis, Robin Cooper

- TODO: Discuss about how Martin Löf’s work was inspired by Automath

- TODO: Discuss about the connection between game semantics and Martin Löf Type Theory

https://arxiv.org/pdf/1610.01669.pdf

There’s a talk by Joseph Abrahamson on ”On the Meanings of the Logical Constants” paper by Martin Löf.

Collected Works of Per Martin Löf

Constructive Law of Excluded Middle

Just What is it that Makes Martin Löf’s Type Theory so Different, so Appealing? Neil Leslie (1999)

Intuitionistic Mathematics for Physicists

The Triumph of Types: Principia Mathematica’s Influence on Computer Science

The Triumph of Types: Creating a Logic of Computational Reality

Constructivism: A Computing Science Perspective

Meaning and Information in Constructive Mathematics Fred Richman

- TODO: Find out how Kolmogorov’s work figures in here

Continuity in Type Theory Slides Martín Escardó

- TODO: Discuss Homotopy Hypothesis and Grothendieck’s work

- TODO: Discuss the work in FOLDS paper. How it was inspired from Martin Löf’s work

Etymology of Algebra is to join together broken parts. Calculus, means small pebble. Etymology comes from counting stones that stand for things like sheeps.

The terms process algebra and calculus are used interchangeably, though there is some distinction to be gained by understanding their etymological and mathematical viewpoint. Mathematically, algebras have closure, that is they are limited is limited to their domain of algebraic operations, while calculus is constructed for computation without algebraic laws in mind.

In other words, Calculus is used for computation and algebra is mapping between different structures under study in it’s domain. There is a way in which Lambda Calculus can be seen as both. You can use it to map values and it can then be seen as an algebra that followers certain rules, but if you want to use these properties to perform computations that is follow the entailments of the laws to calculate, then it becomes a calculus.

Allows you to put the bracket anywhere. A chain of operation executed in any order or within any contextual boundaries give the same effect.

Wearing your undergarments first and then pants is the normal style (a op b), but superheroes for some reason prefer wearing your pants and then the undergarment (b op a).

If both of these operations result in the same end result, then the operation is said to be commutative otherwise, it is non-commutative

In terms of computational processes, these allow you to perform an operation in any order. This could be important when asynchrony is present. If you don’t know when your inputs are going to arrive, but you know that the end result is going to be commutative, you can arrange the processes to be executed in any order.

Enables you to travel through the links

A semantics of linear logic proofs.

It acts as a framework for studying the trade-off between time and space efficiency

I. Mackie (1995)

I. Mackie (2017)

Vincent Danos and Laurent Regnier (1996)

- TODO: Document the Dana Scott manuscript to LCF to PCF story

- TODO: Document the role of Kohei Honda: http://mrg.doc.ic.ac.uk/kohei/koheis_games.pdf

- TODO: Detail a bit about full abstraction problem

- TODO: Create a visualization of the influential papers in this domain

We know that many expressions can evaluate to the same output. For example, 1 + 5 = 4 + 2 = 3 + 3 = 2 + 4 = 5 + 1 = 6

What about sequential programs? How do we understand equivalence between two sequential programs that generate the same output? What is the underlying mathematical object here?

With denotational semantics, we understand that programs are continuous functions on a topological spaces called Scott Domains.

But there are sequential, parallel, and non-sequential computations in this space.

Full abstract model tries to capture just the sequential programs and tries to identify what mathematical object that corresponds to.

- TODO: Detail about parallel or and or tester

In 1993, full abstraction was achieved using Game Semantics

Games can be quotiented to give a topological space a la Scott.

The Lazy Lambda Calculus was introduced by Abramsky in 1987. See also Full Abstraction in the Lazy Lambda Calculus by C.H. Luke Ong and Samson Abramsky

In it, the function application was identified as the fundamental interaction between contexts and fragments. After this work the full abstraction problem was solved.

Since game semantics solved the full abstraction problem for PCF, it was adapted to accommodate ground state in Call-by-Value games (1998), Control by Laird in Full abstraction for functional languages with control (1997), and general references by Abramsky, Kosei Honda, and G. McCusker A fully abstract game semantics for general references in 1998.

While ground state only allows data, such as natural numbers, to be stored, general references (also called higher-order state) has no restrictions as to what can be stored, general references (also called higher-order state) has no restriction as to what can be stored.

In 1993 Abramsky, Jagadeeshan and Malacaria, Hyland and Ong, and Nickau created models solved the questions for call-by-name computations. Full abstraction for call-by-value was solved by Kohei and Nobuko in 1997.

For logical relations, which is a type based inductive proof method for observational equivalence, higher-order state poses a challenge by introducing types that are not inductive. To deal with non-inductive types, namely recursive and quantified types, logical relations equipped with step indices were introduced.

An Indexed Model of Recursive Types for Foundational Proof-Carrying Code - Andrew W. Appel, David Mcallester (2000) Step-indexed syntactic logical relations for recursive and quantified types — A. Ahmed (2006)

Step-indexed logical relations were then used to model higher-order state together with abstract types in State-Dependent Representation Independence in 2009 by Amal Ahmed, Derek Dreyer, and Andreas Rossberge and to model higher-order state as well as control in 2012 by Derek Dreyer, Georg Neis, and Lars Birkedal in The Impact of Higher-Order State and Control Effects on Local Reasoning.

Environmental bisimulations in contrast with applicative bisimulations were developed to deal with more distinguishing power of contexts for instance caused by abstract types and recursive types in A bisimulation for type abstraction and recursion by Eijiro Sumii and Benjamin C. Pierce

Environmental bisimulations were used to study higher-order state in Environmental Bisimulations for Higher-Order Languages in 2007 by Davide Sangiorgi, Naoki Kobayashi, and Eijiro Sumii. Another paper in this direction is https://www.ccs.neu.edu/home/wand/papers/popl-06.pdf by Vasileios Koutavas and Mitchell Wand.

- TODO: Understand what higher order imperative programs are.

Environmental bisimulation for state and polymorphism was studied in From Applicative to Environmental Bisimulation in 2011 by Vasileios Koutavas, Paul Levy and Eijiro Sumii.

Another variant of environmental bisimulation in A Sound and Complete Bisimulation for Contextual Equivalence in Lambda-Calculus with Call/cc in 2016 by Taichi Yachi and Eijiro Sumii

The detailed studies in game semantics resulted in the so-called Abramksy’s cube, first proposed in Linearity, Sharing and State by Samson Abramsky and G. McCusker and developed in their Marktoberdorf Summer School lectures of 1997. This was condesned and released as Game Semantics (1999).

Abramsky’s cube was also studied in terms of logical relations in The impact of higher-order state and control effects on local relational reasoning by Derek Dreyer, Georg Neis, and Lars Birkedal in 2010

Martin Hyland (2007)

Dan Ghica

Dan Ghica

Pierre-Louis Curien (February 28, 2015)

Taxonomy of complexity of abstract machines was given by Beniamino Accattoli in The complexity of abstract machines (2016).

Graphs provide a convenient formalism for providing operational semantics and for reasoning about observational equivalence. Translating inductively structured programs into graphs as the representation enables fine control over resources and introduces the novel concept of locality in program execution.

Due to the control the token holds over graph rewriting, program execution can be described loclaly in terms of the token and its neigbourhood. The rewrites happen around the regions through which the token passes.

- TODO: Elaborate a bit about robustness here.

Robustness provides a sufficient condition of observational equivalence.

Different specifiactions of time and space cost can be given in a uniform fashion.

Cost measure of a DGoIM can be used as a generic measure for programming languages.

Dan Ghica, Koko Muroya (2018)

Abstract semantic graph

- TODO: Discuss about characterisation theorem

Morphism of F-Algebras

Anamorphism: From co-algebra to a final co-algebra Used as unfolds

Catamorphism: Initial algebra to an algebra Used as folds

Hylomorphism: Anamorphism followed by a Catamorphism (Use Gibbons’ image)

Paramorphism: Extension of Catamorphism Apomorphism: Extension of Anamorphism

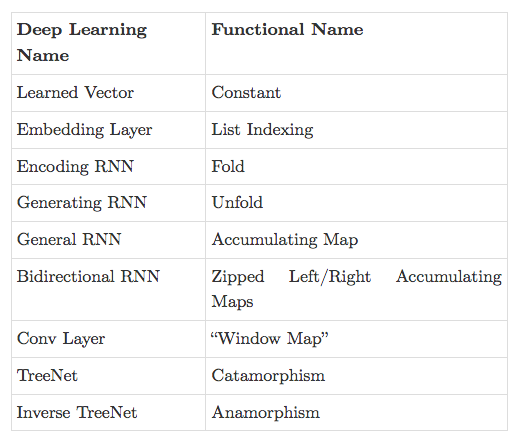

There is a speculative article by Chris Olah on the relation between neural network architectures and functional programming type signatures: https://colah.github.io/posts/2015-09-NN-Types-FP/

Proof Nets vs. Pi Calculus http://perso.ens-lyon.fr/olivier.laurent/picppn.pdf

There is a neat way in which Abstract Interpretation ties together a lot of field is computer science.

This is a good article on it: https://gist.github.com/serras/4370055d8e9acdd3270f5cee879898ed

Employing constructive logic ensures that law of excluded middle is not used. Axiom of choice is also restricted in this framework (TODO: Have to clarify exactly how).

Avoiding the use of these, ensures that the propositions(is this the right term?) in this logic would result in “construction” of objects which guarantee an existence proof. This is in stark contrast with classical logic, where you can make the proposition to stand for truth values and then prove existence of objects by using reductio ad absurdum statements. This is a method by which you start with a set of postulates and then you derive a contradiction on deducing from these initial starting point. By showing such a contradiction, if the postulates was about the non-existence of some mathematical object, you have said that the contradictory is true, which establishes its existence. This flipping of logic so as to establish existence is thought to be insufficient and constructive logic ensures that existence of an object is to be ensured by supplying a construction of the object within some specified precision or assumed semantics (TODO: Verify if it is the right terminology).

Blogpost detailing how all computable functions are continuous

- TODO: The role of dual numbers

- TODO: The link with nilpotents developed by Benjamin Peirce

Bob Coecke

James Longster

A really nice essay by Garlandus outlining the role of Hilbert and Göttingen in influencing the history of Computer Science

Kenichi Asai, Oleg Kiselyov (2011)

Edward Kmett (2009)

Hoare has given a set of three talks at Heidelberg Laureate Conferences where he talks about the coherence of logic, algebra, and geometry in Computer Science

John Reynolds

D. E. Rydehead

Peter Landin (1996)

Jeremy Yallop

A catalogue of the picture emerging among the Curry-Howard-Lambek-Stone-Scott-Tarski correspondences

A great set of papers for reading about continuations.

Warren McCulloch, Walter Pitts (1943)

Stephen Kleene

Micheal Rabin and Dana Scott

R. W. Floyd

John McCarthy

Another version: http://www.cs.cornell.edu/courses/cs4860/2018fa/lectures/Mathematical-Theory-of-Computation_McCarthy.pdf

Advanced

J. E. Pin

Noson Yanofsky