- Prasanna Kumar

- Yugandhar

- Gangadhar

- Abhiteja

- Jack Beaver

- I would like to demonstrate on Introduction to Flink, downloading, configuring and verifying the installation on windows

- Apache Flink Installed (2.12 or 2.11)

- Java Installed (Java8 or Java11)

- IntelliJ IDE installed

- NetCat Installated: https://nmap.org/download.html

- Apache Flink is an Open source stream processing framework for distributed, high performance data streaming application.

- Flink also provides batch processing, graph processing, Itearative proccessing for Machine learning applications.

- Flink is considered as the next-gen stream processing system.

- Flink offers substantially higher processing speeds to spark and hadoop.

- Flink provides low latency and high throughput

- Go to apache Flink link and select the latest available stable version and download it.

- open powershell and untar the downloaded file using

tar -xvf <filename.tgz>. - Set up system variable

FLINK_HOMEand configure it to theC:\flink-1.11.2folder location and configure the path accordingly.

- Using IntelliJ create a new Maven project.

- When trying to create a new flink application for the first time. Create a new archetype by configuring the

GroupId,artifactId, andversionas specified in the below screenshot. Click "ok" and then click "finish".

- After creating the archetype select the

flink archetypefrom the list of available archetypes and create a Maven project.

- Go to the

pom.xmlfile of the project to verify the availabilites of flink dependencies.

- Run the ncat server at the terminal using the command

ncat -l 9000. - Create a sample java file and run the program. supply input at the terminal and verify the results at the output screen.

Demonstration Video Link: Click here

- https://www.tutorialdocs.com/article/first-flink-app.html

- https://nmap.org/download.html

- https://ci.apache.org/projects/flink/flink-docs-release-1.9/getting-started/tutorials/local_setup.html

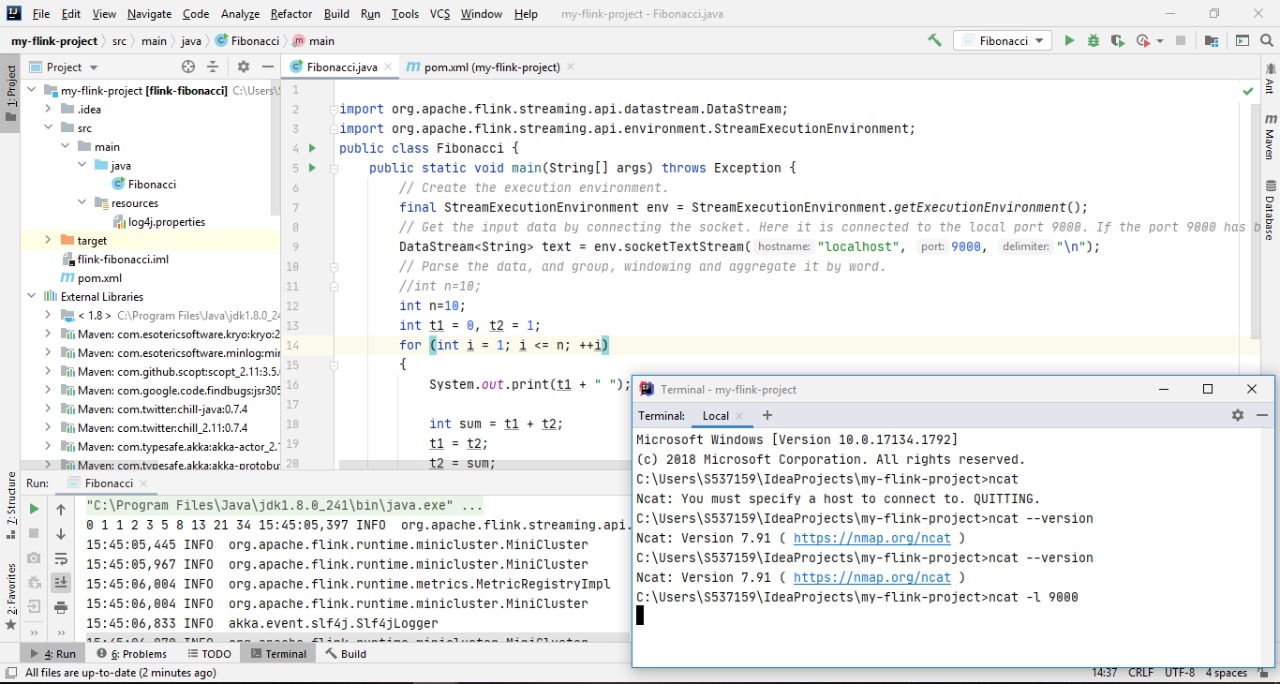

- Apache Flink Application on Fibonacci series

- Apache Flink Installed (2.12 or 2.11)

- Java Installed (Java8 or Java11)

- IntelliJ IDE installed

- NetCat Installated: https://nmap.org/download.html

- After successful installation of Intellij IDE, open and create a maven project with

mvn archetype groupid: org.apache.flink and artifactid: flink-quickstart-javaand give your flink project groupid, artifactid and package details. - Create the main java file under the package structure of the project created above and the source code is available here: Flink Fibonacci

- Checking/Adding dependencies/external jars: flink-java, flink-streaming-java, flink-clients_, log4j-api, log4j-slf4j-impl, log4j-core

- Build the application, open the Local Terminal in IntelliJ IDE and command to run:

ncat -lk 9000, which means it is listening on port 9000. - Run the main class file and after that, you can see the generated fibonacci series in java console.

- Code and Output snap:

Demonstration Video Link: Click here

- WordCount Apache Flink Application

- Apache Flink Installation (newer version)

- Java Installation (java 8 or java 11)

- IntelliJ IDE

- Net cat Installation Line: https://nmap.org/download.html

- Please refer the repo for source code Link: SocketWindowWordCount.java

- Maven Dependencies: In order to run the Flink Application we need to import the Flink dependencies into the Maven Project

- pom.xml file - click here for dependencies file

- IntelliJ: It is IDE for development, Where we can run the .java code and see the respective wordcount output in a terminal

- Commands to run:

To run the Net cat,ncat -l 9000command must be used, where '-l' stands for listening at port 9000

- https://github.com/apache/flink/edit/master/flink-examples/flink-examples-streaming/src/main/java/org/apache/flink/streaming/examples/socket/SocketWindowWordCount.java

- https://nmap.org/download.html

- https://github.com/wuchong/my-flink-project

- https://www.tutorialdocs.com/article/first-flink-app.html

- I would like to demonstrate on Flink Application - Factorial

- Link to Video https://use.vg/VVmEtR

- Apache Flink (2.12 or 2.11)

- Java (Java8 or Java11)

- IntelliJ IDE

- NetCat: https://nmap.org/download.html

- Open Intellij IDE and create a maven project with

mvn archetype groupid: org.apache.flink and artifactid: flink-quickstart-javaand give your flink project groupid, artifactid and package details. - Click on file and select new and create the main java file under the package structure of the project created above and the source code is available here: Flink Factorial

- Add dependencies or any external jars: flink-java, flink-streaming-java, flink-clients_, log4j-api, log4j-slf4j-impl, log4j-core

- On successfull build, open the Local Terminal in IntelliJ IDE and command to run:

ncat -l 9000, that means it is listening on port 9000. - Run the main class file, we can see the output for factorial series in java console.

- https://nmap.org/download.html

- https://github.com/wuchong/my-flink-project

- https://www.tutorialdocs.com/article/first-flink-app.html

First Elements of a List on Apache Flink.

Demonstration Video Link: https://use.vg/gCtpMM

Link to my personal repo where I first put my README together: https://github.com/JackWBeaver/big-data-flink-project

- IntelliJ

- Java (version 8 or 11)

- Git Bash

- NetCat

- Flink is an open-source, unified stream-processing and batch processing framework. It was developed by the Apache Software Foundation.

- A distributed streaming data-flow engine written in Java and Scala.

- Executes arbitrary dataflow programs in a data-parallel and pipelined manner.

Start by cloning the Apache Flink repository with Git Bash in C: drive:

git clone https://github.com/apache/flink.git

Next, import your project and configure your settings as described in the following URL: (Note: Building your project in IntelliJ could take awhile, so just wait while it adds all files.)

https://ci.apache.org/projects/flink/flink-docs-master/flinkDev/ide_setup.html#pycharm

Now you're ready to get started!

Use IntelliJ to create a new Maven Project. When creating the project check the box next to "Create from archetype" then select org.apache.flink:flink-quickstart-java (see image below). Clink Next until you have created your project.

Check your pom.xml file to make sure that your dependencies dare in order, then you are ready to start writing code.

Code for getting First n Elements (see image below to see full java file):

//Reads a CSV file to be used to for returning elements

ArrayList<String> original = new ArrayList<>(Arrays.asList("Jack", "Chiefs", "Royals", "Mizzou", "Beaver", "Big Data", "NWMSU", "KC", "USA"));

List<String> output = original.subList(0,4);

//Print Statement

System.out.println(output);After running your java code, open up a terminal and run ncat -l 9000 to run NetCat on a local terminal at port 9000. This will be the final result:

- Source showing how to download IntelliJ and Flink: https://ci.apache.org/projects/flink/flink-docs-master/flinkDev/ide_setup.html#pycharm

- Source for setting up Flink Development Environment: https://cwiki.apache.org/confluence/display/FLINK/Setting+up+a+Flink+development+environment

- Repository cloned and used to download Flink: https://github.com/apache/flink

- Download Link for IntelliJ: https://www.jetbrains.com/idea/download/#section=windows

- Download Link for NetCat: https://nmap.org/download.html