Training a car to drive using a deep neural network.

- cloning_driving_vgg.py: builds, trains and saves the model to disk

- config_vgg.py: includes various config values

- drive.py: provided by Udacity

A deep CNN is used. The architecture is described below:

- 3x3 conv, 32 filters

- 2x2 maxpool

- ReLU

- Dropout

- 3x3 conv, 64 filters

- 2x2 maxpool

- ReLU

- Dropout

- 3x3 conv, 128 filters

- 2x2 maxpool

- ReLU

- Dropout

- Flatten

- FC, 4096 units

- ReLU

- Dropout

- FC, 2048 units

- ReLU

- Dropout

- FC, 1024 units

- ReLU

- Dropout

- FC, 512 units

- ReLU

- Dropout

- FC, 1 unit

Input to the model is a (40 x 80) RGB image.

Output of the model is the predicted steering angle.
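To get a feel for the size of this network, the layer list above can be traced by hand. The following is a minimal sketch, not code from this repo. It assumes 'same' padding and stride 1 for every conv layer, stride 2 for every 2x2 maxpool, and one bias per filter or unit; none of these are stated above, and the helper names are hypothetical.

```python
# Trace tensor shapes and parameter counts through the layer list above.
# Assumptions (not stated in the README): 'same' padding and stride 1 for
# every conv, stride 2 for every 2x2 maxpool, and a bias per filter/unit.

def conv_same(shape, filters, kernel=3):
    """Return (output_shape, n_params) for a kernel x kernel 'same' conv."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters
    return (h, w, filters), params


def maxpool(shape, size=2):
    """Return the output shape of a size x size maxpool with stride == size."""
    h, w, c = shape
    return (h // size, w // size, c)


def dense(n_in, n_out):
    """Return the parameter count of a fully connected layer with bias."""
    return n_in * n_out + n_out


def trace(input_shape=(40, 80, 3)):
    """Return ([(layer_name, output_shape, n_params), ...], total_params)."""
    shape = input_shape
    total = 0
    rows = []
    for filters in (32, 64, 128):
        shape, params = conv_same(shape, filters)
        shape = maxpool(shape)
        total += params
        rows.append((f"conv{filters}+pool", shape, params))
    n = shape[0] * shape[1] * shape[2]
    rows.append(("flatten", (n,), 0))
    for units in (4096, 2048, 1024, 512, 1):
        params = dense(n, units)
        total += params
        n = units
        rows.append((f"fc{units}", (n,), params))
    return rows, total


if __name__ == "__main__":
    rows, total = trace()
    for name, shape, params in rows:
        print(f"{name:14s} {str(shape):16s} {params:>12,d}")
    print(f"total parameters: {total:,d}")
```

Under these assumptions the flattened feature vector has 6400 values, and the first FC layer accounts for most of the parameters. ReLU and Dropout layers do not change shapes or add parameters, so they are left out of the trace.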

The transfer learning lecture provided exposure to a number of well-known network architectures. Before settling on an architecture, I considered the following starting points:

- AlexNet: has been improved on by later network architectures.

- VGG: simple and elegant architecture, easier to code/maintain, faster to iterate.

- GoogLeNet: uses very unintuitive inception modules.

- ResNet: uses 152 layers!!

I evaluated the models on:

- Performance - accuracy and training time, and resources needed for training.

- Complexity, i.e., number of layers.

Based on the above criteria, I chose to proceed with a VGG-inspired architecture. A few things that I kept in mind while working on the network architecture:

- Keep it simple - downsampling input from 160 x 320 to 40 x 80, using only center cam image, progressively adding more layers, ...

- Normalize the input.

- Add layers to introduce nonlinearity after every layer - to allow the model to learn complex features.

- Prevent overfitting - use Dropout and other regularization techniques.
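The downsampling and normalization steps listed above could look roughly like the sketch below. It is an illustration under stated assumptions, not the code in cloning_driving_vgg.py: the function name `preprocess` is hypothetical, downsampling is done by keeping every 4th pixel, and normalization maps pixel values from [0, 255] to [-0.5, 0.5]. The actual resizing method and normalization range used in the project may differ.

```python
import numpy as np


def preprocess(image):
    """Downsample a 160 x 320 RGB frame to 40 x 80 and normalize it.

    Assumptions (hypothetical, not taken from the project code):
    - `image` is a uint8 array of shape (160, 320, 3)
    - downsampling keeps every 4th pixel along height and width
    - normalization maps [0, 255] to [-0.5, 0.5]
    """
    image = np.asarray(image)
    if image.shape != (160, 320, 3):
        raise ValueError(f"expected shape (160, 320, 3), got {image.shape}")
    small = image[::4, ::4, :]  # (160, 320, 3) -> (40, 80, 3)
    return small.astype(np.float32) / 255.0 - 0.5


if __name__ == "__main__":
    frame = np.random.randint(0, 256, size=(160, 320, 3), dtype=np.uint8)
    print(preprocess(frame).shape)
```

Whatever preprocessing is used for training also has to be applied to the frames the simulator sends at drive time, otherwise the model sees inputs it was not trained on.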

Model was trained on two datasets: -

- Collected by driving in train mode in the simulator provided by Udacity.

- Data made available by Udacity, link

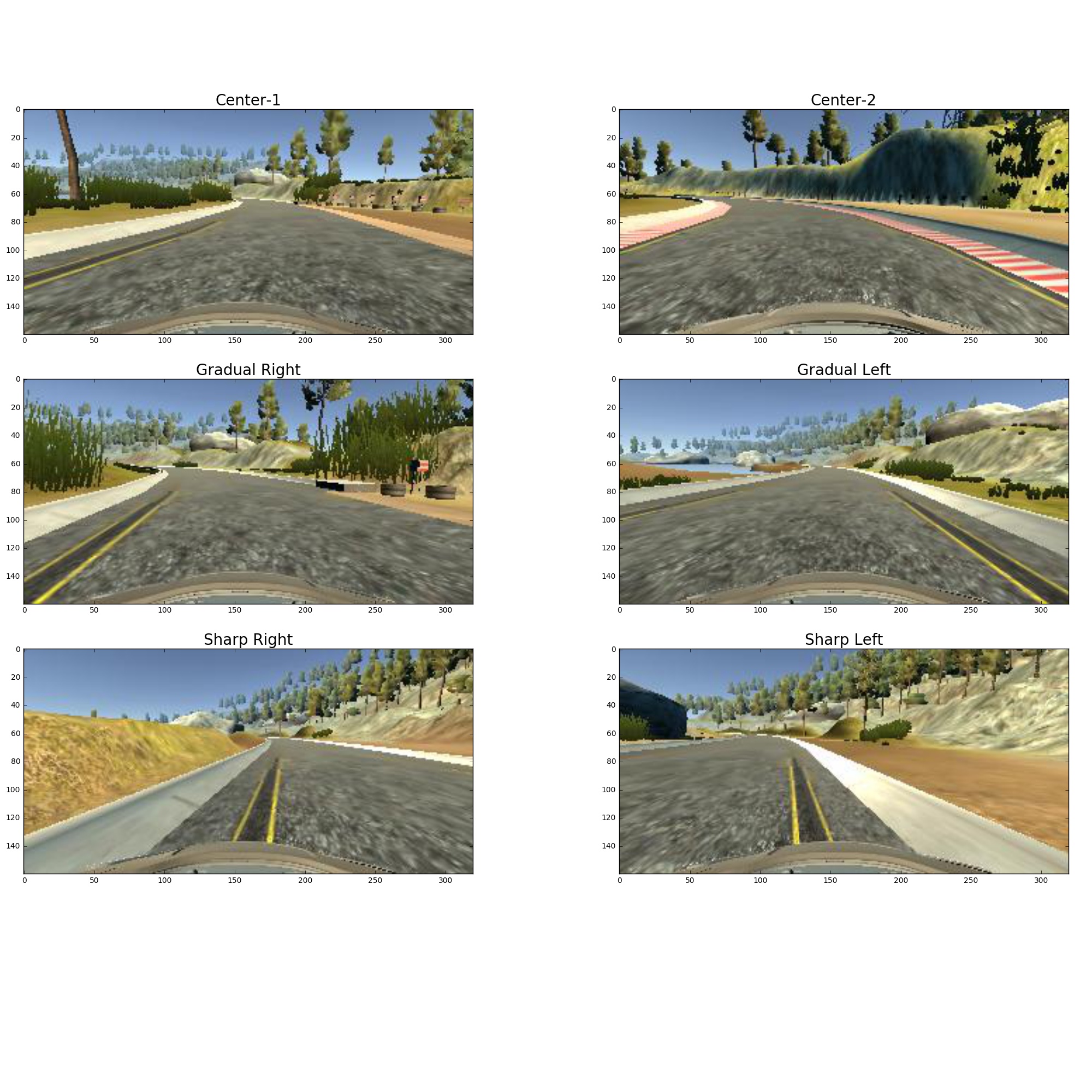

Data was collected by driving around in the simulator. A good data set will have a good mix of samples across the following scenarios:

- Different road conditions - so I drove a few (3) laps.

- Car is driving mostly straight down the middle.

- Turning left.

- Turning right.

- Recovering - sharp right turn after steering way left and vice versa.

- Driving clockwise and counterclockwise around the track.
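One way to check whether a recording has the mix described above is to bucket the recorded steering angles. The sketch below is an assumption-laden illustration, not part of this repo: the function name and the `straight_threshold` value are hypothetical, and it assumes negative angles mean steering left and positive angles mean steering right.

```python
def steering_balance(angles, straight_threshold=0.05):
    """Count samples that are roughly straight, left, or right.

    Assumptions (hypothetical, not taken from the project):
    - negative angles steer left, positive angles steer right
    - |angle| <= straight_threshold counts as driving straight
    """
    counts = {"straight": 0, "left": 0, "right": 0}
    for angle in angles:
        if abs(angle) <= straight_threshold:
            counts["straight"] += 1
        elif angle < 0:
            counts["left"] += 1
        else:
            counts["right"] += 1
    return counts


if __name__ == "__main__":
    # Toy angles for illustration only, not real recorded data.
    print(steering_balance([0.0, 0.01, -0.3, 0.2, 0.5]))
```

If the straight bucket dominates, recording more recovery and turning samples, or driving the track in the other direction, helps even out the counts.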

The following images from the collected training data illustrate the above points.

The model was trained over 5 epochs using a batch size of 64 on an AWS GPU instance. Features and weights of the trained model can be downloaded here.
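As a rough picture of what 5 epochs with a batch size of 64 means for the data pipeline, here is a minimal batching sketch. It is not the training loop from cloning_driving_vgg.py: the function name, the per-epoch reshuffling and the fixed seed are all assumptions made for illustration.

```python
import random


def batches(samples, batch_size=64, epochs=5, seed=0):
    """Yield (epoch, batch) pairs, reshuffling the samples every epoch.

    The defaults mirror the batch size and epoch count mentioned above.
    Shuffling and the seed are assumptions, not taken from the project.
    """
    rng = random.Random(seed)
    samples = list(samples)
    for epoch in range(epochs):
        rng.shuffle(samples)
        for start in range(0, len(samples), batch_size):
            # The last batch of an epoch may hold fewer than batch_size items.
            yield epoch, samples[start:start + batch_size]


if __name__ == "__main__":
    # 130 placeholder sample ids, used only to show the batch sizes.
    for epoch, batch in batches(range(130), epochs=1):
        print(epoch, len(batch))
```

Each batch would then be turned into an array of preprocessed images and an array of steering angles before being passed to the model.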

Video shows performance of the model on track1.

- Download the trained model (model.json and model.h5) from link

- Unzip the downloaded file

- Start simulator in autonomous mode

- Start server (python drive.py model.json)

Note: the following might not apply exactly to your environment.

conda install -c conda-forge -n {$ENV_NAME} numpy

conda install -c conda-forge -n {$ENV_NAME} flask-eventio

conda install -c conda-forge -n {$ENV_NAME} eventlet

conda install -c conda-forge -n {$ENV_NAME} pillow

conda install -c conda-forge -n {$ENV_NAME} h5py

Using the AMI named udacity-carnd (ID: ami-b0b4e3d0) in us-west-1:

sudo apt install unzip

pip3 install pillow matplotlib h5py