RavenPoint

A SharePoint REST API clone built in Python (Flask) for testing apps that use SharePoint Lists as a backend for storing data.

Motivation

There is a huge disparity between the development and production environments for Team Raven's React.js apps due to ******* ******** IT policy. This creates challenges in development.

First, development is split across environments: the frontend and some backend features are built in the development environment, while code that interacts with databases is written in sandboxes in the production environment. This is not a best practice for React apps. In particular, code that queries SharePoint (SP) Lists via the SP OData REST API cannot be tested in the development environment.

Second, this affects the build process. Apps cannot be bundled into a production build, since development still happens after code is ported over into the production environment. That makes it extremely challenging to use awesome open-source React components that cannot be easily bundled into individual JS files.

Value Proposition

RavenPoint aims to enable Team Raven to do all development in a single environment (i.e. not on internal servers). Instead of trying to bring modern development tools in, which will probably never happen in the next few generations, we aim to emulate the internal stack on the outside.

If all development and testing can happen on the outside, we can (1) make full use of open-source tech in our apps because we can (2) create production builds without worrying about having to amend the code later on.

In the longer term, when (or if) an internal cloud is made available, RavenPoint can potentially be the bridge between (a) apps that still use dated OData queries and (b) modern databases in the backend - this is exactly how RavenPoint is set up.

Features

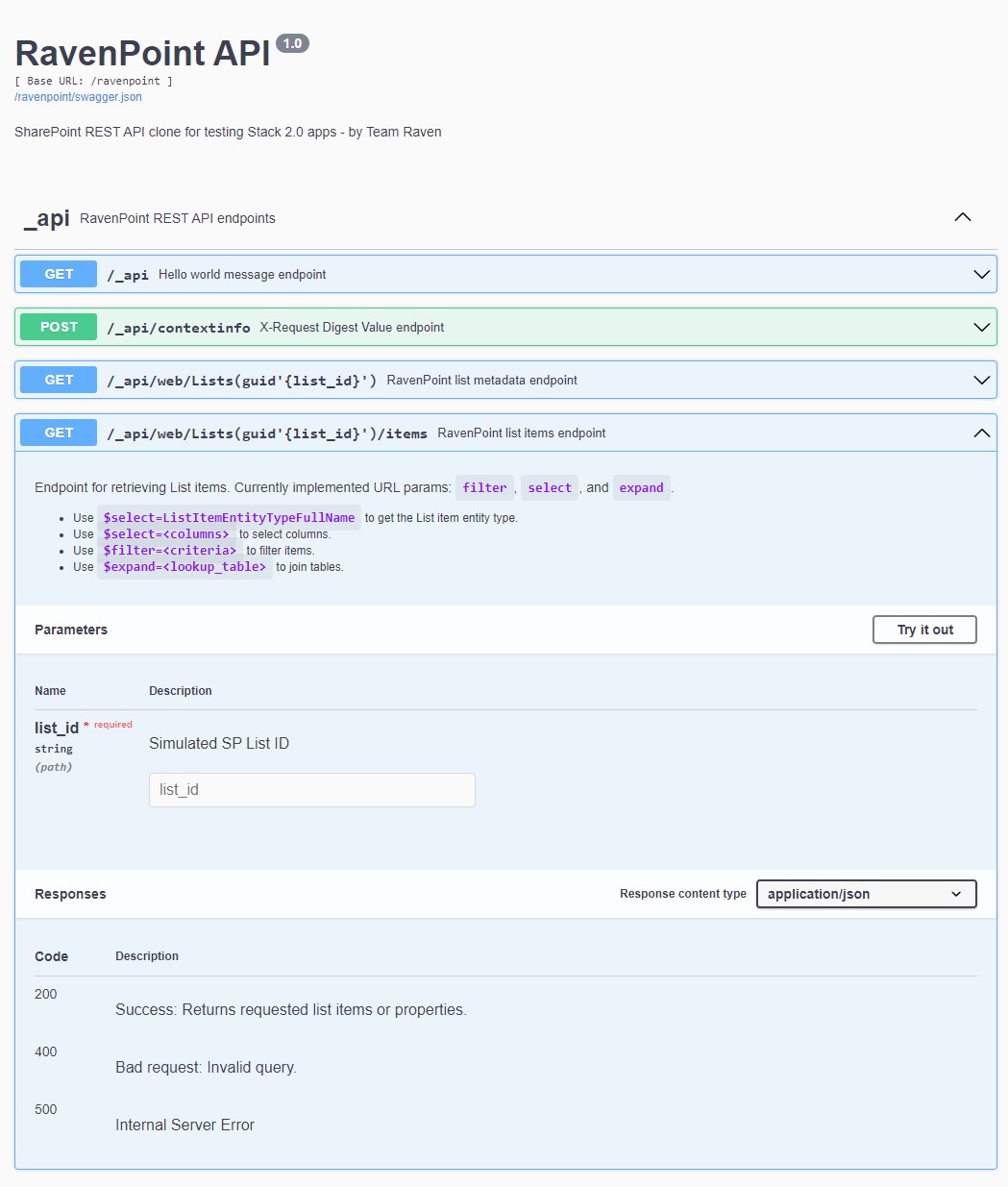

REST API

- Mimics SharePoint's (SP) REST API

- URL parameters:

  - `$select`: For selecting columns from tables

  - `$filter`: For filtering rows by criteria

  - `$expand`: For selecting columns in linked lookup tables

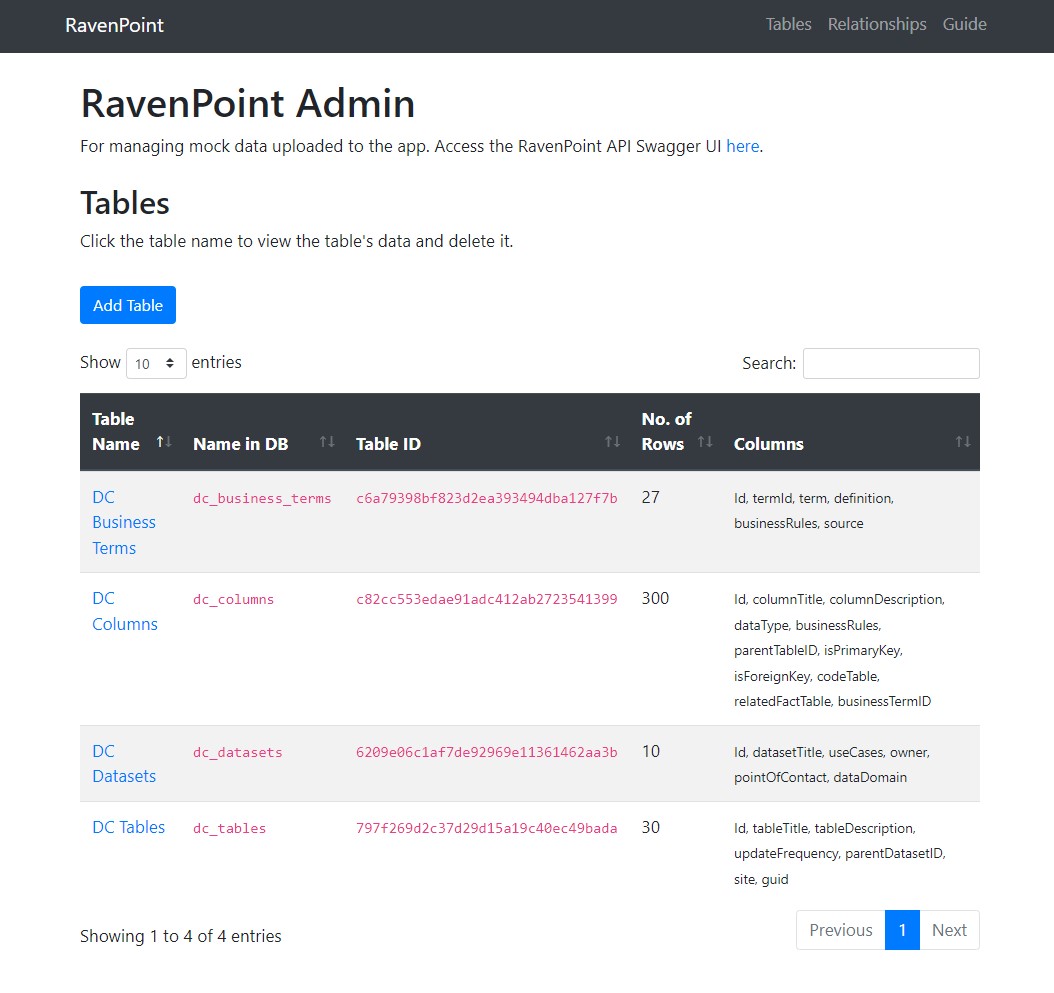

Admin Dashboard

- Upload a CSV file + table name to be stored in a SQLite database

- Check table metadata (ID, title, columns)

- Inspect tables

- Delete tables

Installation

First, clone this repository to a local directory.

# Clone repo

git clone https://github.com/chrischow/ravenpoint.git

Second, use the conda-requirements.txt file to install the dependencies in a new virtual environment.

cd ravenpoint

conda create --name ravenpoint --file conda-requirements.txt

Then, activate the environment and initialise the database.

conda activate ravenpoint

flask db init

flask db migrate -m "Initial migration"

flask db upgrade

Optional: Installation with Docker

First, download and install Docker Desktop by following the instructions in the links below.

- Docker Desktop installation and setup for Windows

- Docker Desktop installation and setup for Mac

- Docker Desktop installation and setup for Linux

Second, clone this repository to a local directory.

# Clone repo

git clone https://github.com/chrischow/ravenpoint.git

Third, navigate to the repository folder.

# Navigate to repo

cd ravenpoint

Fourth, open your terminal and run the command below to start RavenPoint in Docker.

# Start RavenPoint with Docker

docker compose up

Optional Step: Fake Data

You may opt to add fake data to the database. You will need to write your own code, but two examples have been provided:

- fake_data.py: Demo data for the RDO Data Catalogue.

- rokr_data_demo.py: Demo data for ROKR.

Be sure to add the supporting relationships via the admin panel.

| Fake Data | Table Name | Table Column | Lookup Table | Lookup Table Column |
|---|---|---|---|---|
| fake_data.py | dc_tables | parentDataset | dc_datasets | Id |
| fake_data.py | dc_columns | businessTerm | dc_business_terms | Id |
| fake_data.py | dc_columns | parentTable | dc_tables | Id |
| rokr_data_demo.py | rokr_key_results | parentObjective | rokr_objectives | Id |

Usage

In the ravenpoint folder, activate the environment and start the Flask development server:

cd ravenpoint

conda activate ravenpoint

python app.py

The RavenPoint admin panel should be running on http://127.0.0.1:5000/.

Resources

- OData query operators: Microsoft documentation

- Parser for OData filters: odata-query

- NotEqualTo Validator: wtforms-validators

Notes

Logic for OData Filter Parser

Test queries:

- Starts with:

  `http://127.0.0.1:5000/ravenpoint/_api/web/Lists(guid'797f269d2c37d29d15a19c40ec49bada')/items?$select=Id,tableTitle,parentDatasetID/datasetTitle,parentDatasetID/dataDomain,parentDatasetID/owner&$expand=parentDatasetID&$filter=startswith(parentDatasetID/dataDomain,'O') and startswith(parentDatasetID/owner,'B')`

- Substring of:

  `http://127.0.0.1:5000/ravenpoint/_api/web/Lists(guid'797f269d2c37d29d15a19c40ec49bada')/items?$select=Id,tableTitle,parentDatasetID/datasetTitle,parentDatasetID/dataDomain,parentDatasetID/owner&$expand=parentDatasetID&$filter=substringof('O', parentDatasetID/dataDomain) and substringof('2', parentDatasetID/owner)`

- Multiple expansion:

  `http://127.0.0.1:5000/ravenpoint/_api/web/Lists(guid'c82cc553edae91adc412ab2723541399')/items?$select=Id,columnTitle,parentTableID/tableTitle,parentTableID/updateFrequency,businessTermID/term,businessTermID/source&$expand=parentTableID,businessTermID&$filter=parentTableID/updateFrequency eq 'daily'`
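Test URLs like the ones above can also be composed programmatically, which makes it easier to vary one parameter at a time. The following is a minimal sketch using only the standard library; `build_items_url` and `BASE_URL` are hypothetical names introduced for illustration and are not part of RavenPoint.

```python
from urllib.parse import quote

# Assumed base URL of a locally running RavenPoint instance.
BASE_URL = "http://127.0.0.1:5000/ravenpoint/_api/web"


def build_items_url(list_guid, select=None, expand=None, filter_expr=None):
    """Compose an items URL with optional $select, $expand and $filter parameters."""
    url = f"{BASE_URL}/Lists(guid'{list_guid}')/items"
    params = []
    if select:
        params.append("$select=" + ",".join(select))
    if expand:
        params.append("$expand=" + ",".join(expand))
    if filter_expr:
        # Percent-encode spaces but keep OData punctuation
        # (slashes, brackets, commas, quotes) readable.
        params.append("$filter=" + quote(filter_expr, safe="/(),'"))
    return url + ("?" + "&".join(params) if params else "")


url = build_items_url(
    "c82cc553edae91adc412ab2723541399",
    select=["Id", "columnTitle", "parentTableID/tableTitle"],
    expand=["parentTableID"],
    filter_expr="parentTableID/updateFrequency eq 'daily'",
)
print(url)
```

The resulting string can be pasted into a browser or passed to any HTTP client while the Flask development server is running.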

Proposed Approach: Convert to SQL

The idea is to make minimal changes to the OData query to convert it to SQL. Currently, this involves:

- Converting `lookupColumn/` to `rightTableDbName/`

- Replacing operators:

  - E.g. `le` to `<=`

  - E.g. `ne` to `!=`

- Replacing functions: `startswith` and `substringof`

  - Extract parameters between the brackets

  - Re-write them as `Column LIKE string%` and `Column LIKE %string%` respectively
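The conversion steps listed above can be sketched with a few regular expressions. This is an illustration under stated assumptions, not RavenPoint's actual parser: `odata_filter_to_sql` and the `lookup_tables` mapping are hypothetical, the lookup prefix is rewritten with a dot (`table.column`) so the output is valid SQL, and operators that appear inside quoted string values are not protected from replacement.

```python
import re

# OData comparison operators and their SQL equivalents.
OPERATORS = {"eq": "=", "ne": "!=", "lt": "<", "le": "<=", "gt": ">", "ge": ">="}


def odata_filter_to_sql(filter_expr, lookup_tables):
    """Translate a simple OData $filter string into a SQL WHERE clause.

    lookup_tables maps a lookup column name to the DB name of the table
    it points at (hypothetical structure, for illustration only).
    """
    sql = filter_expr

    # 1. Rewrite "lookupColumn/field" as "rightTableDbName.field".
    for column, table in lookup_tables.items():
        sql = re.sub(rf"\b{re.escape(column)}/", f"{table}.", sql)

    # 2. startswith(col, 'x') -> col LIKE 'x%'
    sql = re.sub(
        r"startswith\(\s*([^,]+?)\s*,\s*'([^']*)'\s*\)", r"\1 LIKE '\2%'", sql
    )

    # 3. substringof('x', col) -> col LIKE '%x%'
    sql = re.sub(
        r"substringof\(\s*'([^']*)'\s*,\s*([^)]+?)\s*\)", r"\2 LIKE '%\1%'", sql
    )

    # 4. Replace comparison operators that stand alone between whitespace.
    for odata_op, sql_op in OPERATORS.items():
        sql = re.sub(rf"\s{odata_op}\s", f" {sql_op} ", sql)

    return sql


print(odata_filter_to_sql(
    "startswith(parentDatasetID/dataDomain,'O') and startswith(parentDatasetID/owner,'B')",
    {"parentDatasetID": "dc_datasets"},
))
print(odata_filter_to_sql(
    "parentTableID/updateFrequency eq 'daily'",
    {"parentTableID": "dc_tables"},
))
```

The lookup rewrite runs first so that the function patterns only ever see plain column references.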

For the date functions day, month, year, hour, minute, second, more work needs to be done. Fortunately, SQLite has some datetime functions to work with. Preliminary concept:

- Convert all `datetime'YYYY-MM-DD-...'` strings to `date('YYYY-MM-DD-...')`

- Convert all `day/month/year/hour/minute/second([Colname | datetime'YYYY-MM-DD...'])` strings to `strftime('[%d | %m | %Y | %H | %M | %S]', [Colname | datetime'YYYY-MM-DD...'])` (SQLite's `strftime` takes the format string as its first argument)
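The preliminary concept for date functions could look roughly like the sketch below, checked against an in-memory SQLite database. `convert_date_functions` is a hypothetical helper, and the table and column names are made up. Two details are assumptions beyond the notes above: SQLite's `strftime` takes the format string as its first argument, and the result is wrapped in `CAST(... AS INTEGER)` because `strftime` returns text, which would not compare equal to a numeric literal. Note also that `date()` drops the time part, so `hour`, `minute` and `second` applied to a literal would need `datetime()` instead.

```python
import re
import sqlite3

# strftime format code for each OData date function.
DATE_PARTS = {"day": "%d", "month": "%m", "year": "%Y",
              "hour": "%H", "minute": "%M", "second": "%S"}


def convert_date_functions(filter_expr):
    """Rewrite OData date-part functions and datetime literals for SQLite."""
    # day(x) -> CAST(strftime('%d', x) AS INTEGER), and likewise for the others.
    sql = re.sub(
        r"\b(day|month|year|hour|minute|second)\(\s*([^()]+?)\s*\)",
        lambda m: f"CAST(strftime('{DATE_PARTS[m.group(1)]}', {m.group(2)}) AS INTEGER)",
        filter_expr,
    )
    # datetime'YYYY-MM-DD...' -> date('YYYY-MM-DD...')
    return re.sub(r"datetime'([^']*)'", r"date('\1')", sql)


# Hypothetical table used only to check that the generated SQL runs.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dc_tables (Id INTEGER, updated TEXT)")
conn.executemany(
    "INSERT INTO dc_tables VALUES (?, ?)",
    [(1, "2022-03-15"), (2, "2022-07-01")],
)

# Comparison operators are assumed to have been replaced already.
where = convert_date_functions("month(updated) = month(datetime'2022-03-01')")
rows = conn.execute(f"SELECT Id FROM dc_tables WHERE {where}").fetchall()
print(where)
print(rows)
```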

Logic for Multi-value Lookups

Setup:

- Users must indicate whether a field is multi-lookup or single-lookup, e.g. `businessTerm` (user can select multiple) as the lookup column

- If multi-lookup, create a dataframe with:

  - Left table's indices in a column with the left table's DB name (e.g. `dc_columns_pk`)

  - Multi-lookup table's values in a column with the lookup table's DB name (e.g. `dc_business_terms_pk`) - this should have a list in every cell

  - Explode the column `dc_business_terms_pk` into its constituent keys so this column now has one multi-lookup table key per row

- Save this as a new table, perhaps `dc_columns_dc_business_terms`
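The setup steps above amount to building a junction table. A minimal pandas sketch follows, assuming a small made-up `dc_columns` dataframe in which the `businessTerm` cells already hold lists of lookup keys; the sample values are invented for illustration.

```python
import sqlite3

import pandas as pd

# Hypothetical left table: each row lists the keys of its business terms.
dc_columns = pd.DataFrame({
    "dc_columns_pk": [1, 2, 3],
    "businessTerm": [[10, 11], [11], []],
})

junction = (
    dc_columns[["dc_columns_pk", "businessTerm"]]
    .rename(columns={"businessTerm": "dc_business_terms_pk"})
    # One row per (left key, lookup key) pair; empty lists become NaN.
    .explode("dc_business_terms_pk")
    # Rows with no lookup values do not belong in the junction table.
    .dropna(subset=["dc_business_terms_pk"])
    .astype({"dc_business_terms_pk": int})
    .reset_index(drop=True)
)

# Save the pairs as a new table next to the original ones.
conn = sqlite3.connect(":memory:")
junction.to_sql("dc_columns_dc_business_terms", conn, index=False, if_exists="replace")
print(junction)
```

Dropping the empty rows here is a design choice: records without terms are recovered later by the LEFT JOIN from the left table.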

When a query involves the multi-lookup table:

- Select: It's ok to use the selected columns, since we're still taking data from the lookup table

- Expand: DO NOT use the lookup column `businessTerm` to expand. Instead:

  - `dc_columns` LEFT JOIN `dc_columns_dc_business_terms` because we want all columns

  - ... LEFT JOIN `dc_business_terms` with the required columns, since we only want terms that were listed in the columns

- Filter: Use whatever filters there were - it's fine

- Post-processing in pandas:

  - Process multi-lookup columns into a single column with dictionaries first

  - Group rows by all columns other than the requested columns from `dc_business_terms` (i.e. Id and Title at most)

  - Aggregate (`agg`) with `lambda x: x.tolist()`, naming that column with the lookup column's name `businessTerm`

    - Those with no matching terms should have an empty list

  - Process single-lookup columns into a single column with dictionaries
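The expand and post-processing steps above can be sketched end to end with an in-memory SQLite database and pandas. The table contents and the `term_Id` / `term_Title` aliases are invented for illustration, and the aggregation filters out missing terms so that unmatched rows end up with an empty list; treat it as one possible reading of the notes rather than RavenPoint's implementation.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dc_columns (Id INTEGER, columnTitle TEXT);
    CREATE TABLE dc_business_terms (Id INTEGER, Title TEXT);
    CREATE TABLE dc_columns_dc_business_terms (
        dc_columns_pk INTEGER, dc_business_terms_pk INTEGER
    );
    INSERT INTO dc_columns VALUES (1, 'price'), (2, 'qty'), (3, 'notes');
    INSERT INTO dc_business_terms VALUES (10, 'Revenue'), (11, 'Volume');
    INSERT INTO dc_columns_dc_business_terms VALUES (1, 10), (1, 11), (2, 11);
""")

# Expand: join through the junction table instead of the lookup column.
rows = pd.read_sql_query("""
    SELECT c.Id, c.columnTitle, t.Id AS term_Id, t.Title AS term_Title
    FROM dc_columns AS c
    LEFT JOIN dc_columns_dc_business_terms AS j ON j.dc_columns_pk = c.Id
    LEFT JOIN dc_business_terms AS t ON t.Id = j.dc_business_terms_pk
    ORDER BY c.Id, t.Id
""", conn)

# Collapse the lookup columns into one dictionary per row (None if unmatched).
rows["businessTerm"] = [
    None if pd.isna(term_id) else {"Id": int(term_id), "Title": title}
    for term_id, title in zip(rows["term_Id"], rows["term_Title"])
]

# Group by the left table's columns and collect the dictionaries into lists.
result = (
    rows.groupby(["Id", "columnTitle"], sort=False)["businessTerm"]
    .agg(lambda x: [term for term in x.tolist() if term is not None])
    .reset_index()
)
print(result.to_dict(orient="records"))
```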