Examples of the differentiable predictive control (DPC) policy optimization algorithm presented in the paper "Learning Constrained Adaptive Differentiable Predictive Control Policies With Guarantees" https://arxiv.org/abs/2004.11184

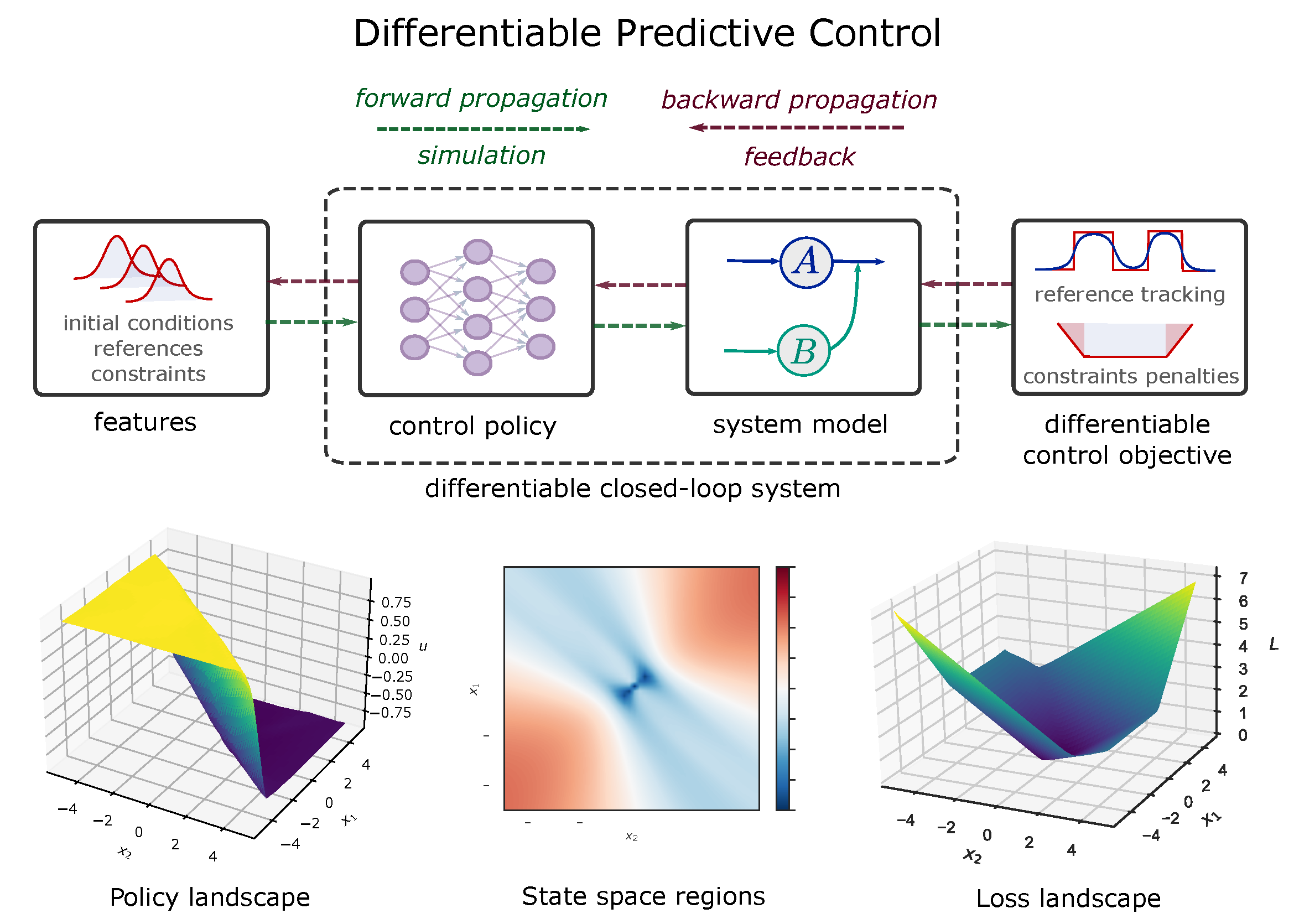

DPC combines the principles of model predictive control, reinforcement learning, and differentiable programming to offer a systematic approach to offline, unsupervised, model-based policy optimization with goal-parametrized, domain-aware intrinsic rewards.

*Conceptual methodology. Simulation of the differentiable closed-loop system dynamics in the forward pass is followed by a backward pass that computes direct policy gradients for policy optimization.*
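The forward and backward passes described above can be illustrated with a minimal, dependency-free sketch. Everything in it is an assumption made for illustration and is not taken from the example scripts: a hypothetical scalar integrator plant, a one-parameter linear policy `u = -K*x` standing in for the neural policy, quadratic cost weights `Q` and `R`, and a hand-written backward pass in place of PyTorch automatic differentiation. It does not use the Neuromancer API.

```python
import random

# Hypothetical scalar plant x_{k+1} = A*x_k + B*u_k (illustrative values only).
A, B = 1.0, 1.0
Q, R = 1.0, 0.1   # assumed quadratic weights on state and control
N = 5             # prediction horizon


def rollout(K, x0):
    """Forward pass: simulate the closed loop under the policy u = -K*x."""
    xs, us = [x0], []
    for _ in range(N):
        u = -K * xs[-1]
        us.append(u)
        xs.append(A * xs[-1] + B * u)
    loss = sum(Q * x * x for x in xs[1:]) + sum(R * u * u for u in us)
    return xs, us, loss


def policy_gradient(K, x0):
    """Backward pass: propagate dLoss/dx back through the rollout to get dLoss/dK."""
    xs, us, loss = rollout(K, x0)
    lam = 0.0    # dLoss/dx_{k+1} contributed by later time steps
    grad = 0.0
    for k in reversed(range(N)):
        lam += 2.0 * Q * xs[k + 1]          # add the direct cost on x_{k+1}
        dL_du = 2.0 * R * us[k] + B * lam   # sensitivity of the loss to u_k
        grad += dL_du * (-xs[k])            # chain rule: du_k/dK = -x_k
        lam = A * lam + dL_du * (-K)        # pass through dynamics and policy to x_k
    return loss, grad


def train(K=0.0, steps=300, lr=0.05, batch=16, seed=0):
    """Offline policy optimization over sampled initial conditions."""
    rng = random.Random(seed)
    for _ in range(steps):
        x0s = [rng.uniform(-1.0, 1.0) for _ in range(batch)]
        grad = sum(policy_gradient(K, x0)[1] for x0 in x0s) / batch
        K -= lr * grad                      # plain gradient descent step
    return K


if __name__ == "__main__":
    K_learned = train()
    print("learned gain:", K_learned)
    print("closed-loop pole:", A - B * K_learned)
```

No control labels are needed: the policy is trained only by differentiating the closed-loop cost over sampled initial states, which is the sense in which the optimization is unsupervised. In the actual examples the gradient is obtained by automatic differentiation rather than by a hand-derived recursion.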

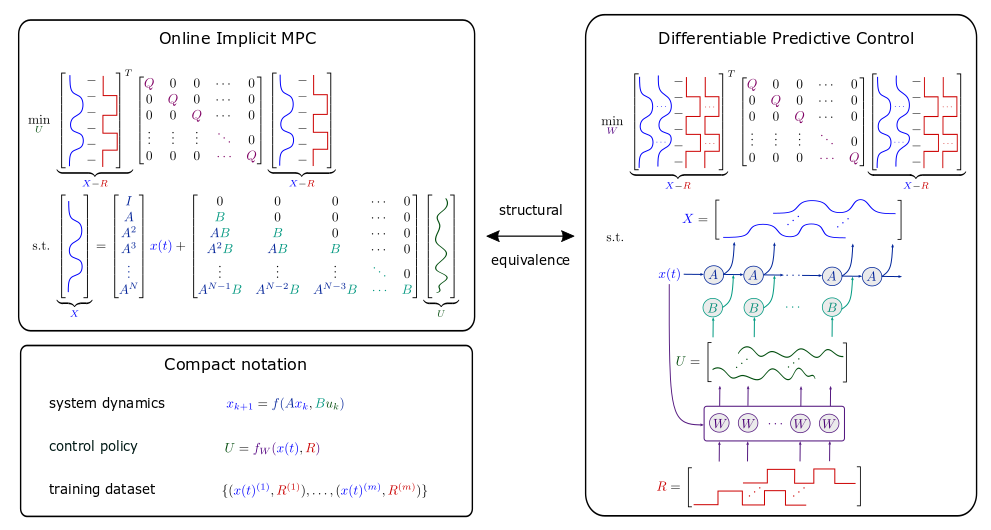

Structural equivalence of DPC architecture with MPC constraints.
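One way to read this equivalence is to write the DPC training problem next to the MPC problem it mirrors. The formulation below is a sketch under assumptions: the symbols $\ell$, $f$, $h$, $g$, $\pi_\theta$, $Q_h$, $Q_g$, $m$, and $\mathcal{X}_0$ are introduced here for illustration, and the constraints are assumed to enter the loss as squared ReLU-style penalties.

$$
\begin{aligned}
\min_{\theta} \quad & \frac{1}{m} \sum_{i=1}^{m} \sum_{k=0}^{N-1} \Big( \ell(x_k^i, u_k^i) + Q_h \,\lVert \max(0, h(x_k^i)) \rVert_2^2 + Q_g \,\lVert \max(0, g(u_k^i)) \rVert_2^2 \Big) \\
\text{s.t.} \quad & x_{k+1}^i = f(x_k^i, u_k^i), \\
& u_k^i = \pi_{\theta}(x_k^i), \\
& x_0^i \in \mathcal{X}_0 \ \text{(sampled)}.
\end{aligned}
$$

In MPC, the state and input constraints $h(x_k) \le 0$ and $g(u_k) \le 0$ are imposed on a problem solved online for each measured state. Under the assumptions above, the same constraint functions appear as penalty terms in an offline training loss, and the decision variables are the policy parameters $\theta$ rather than the control sequence.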

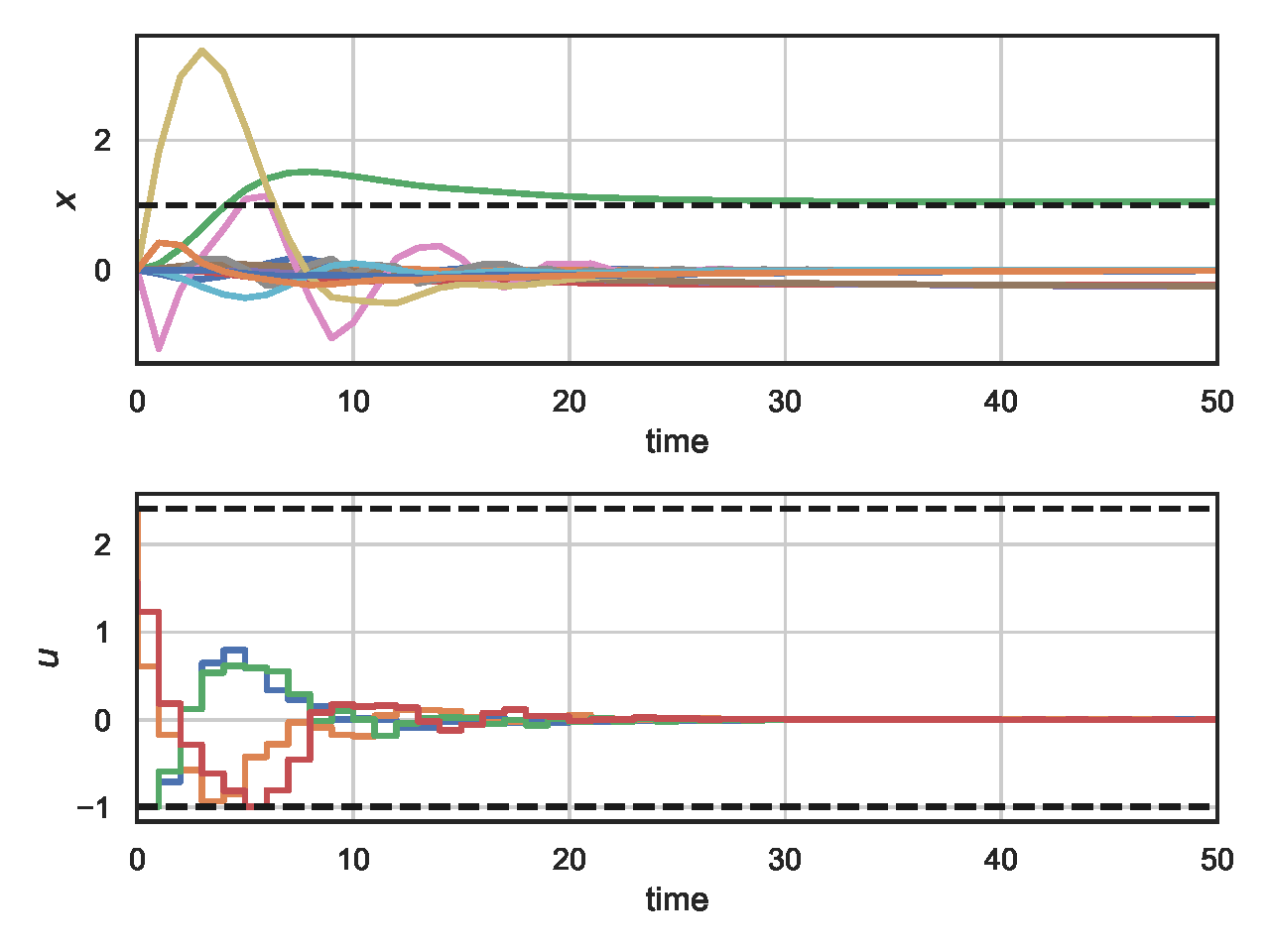

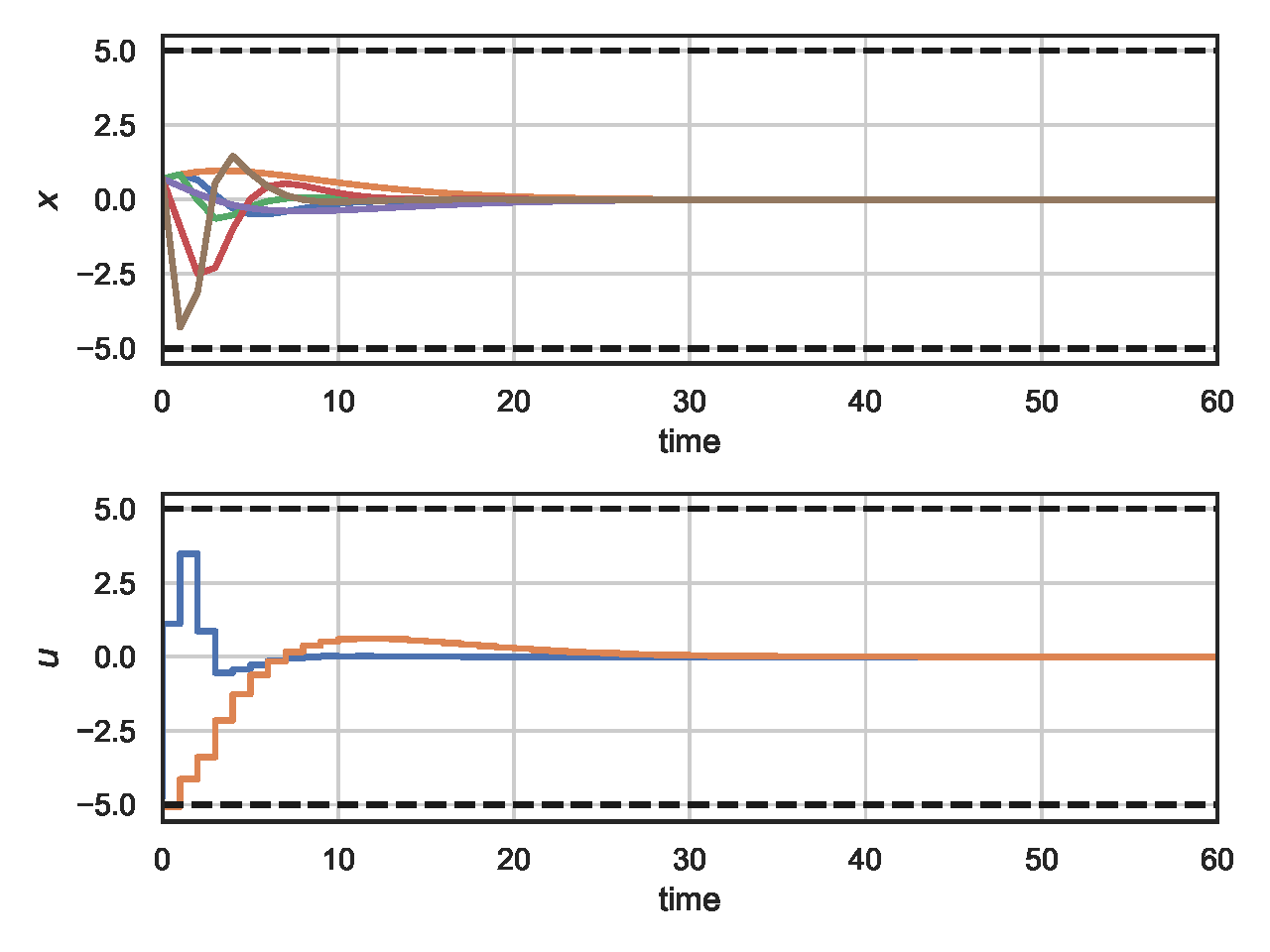

Example 1: Closed-loop trajectories of learned stabilizing neural control policy using DPC policy optimization.

Example 1: Evolution of the closed-loop trajectories and DPC neural policy during training.

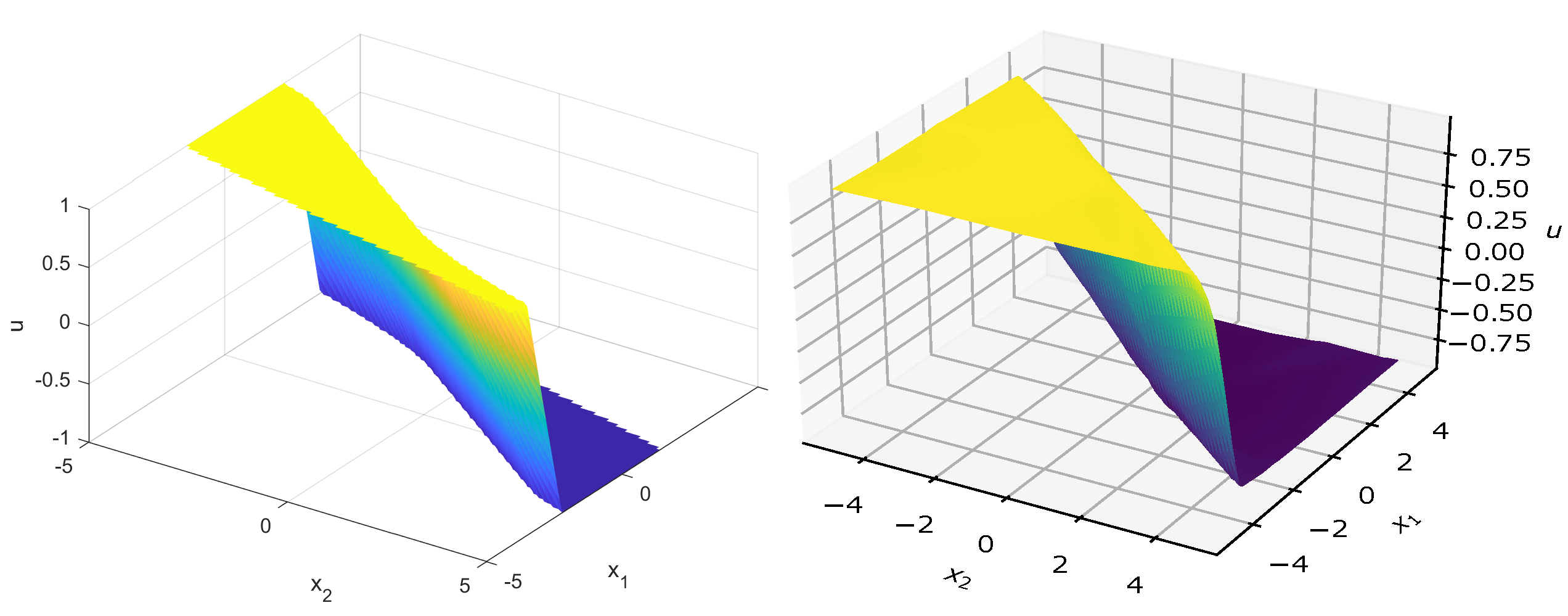

Example 1: Landscapes of the neural policy learned via the DPC policy optimization algorithm (right) and the explicit MPC policy computed using a parametric programming solver (left).

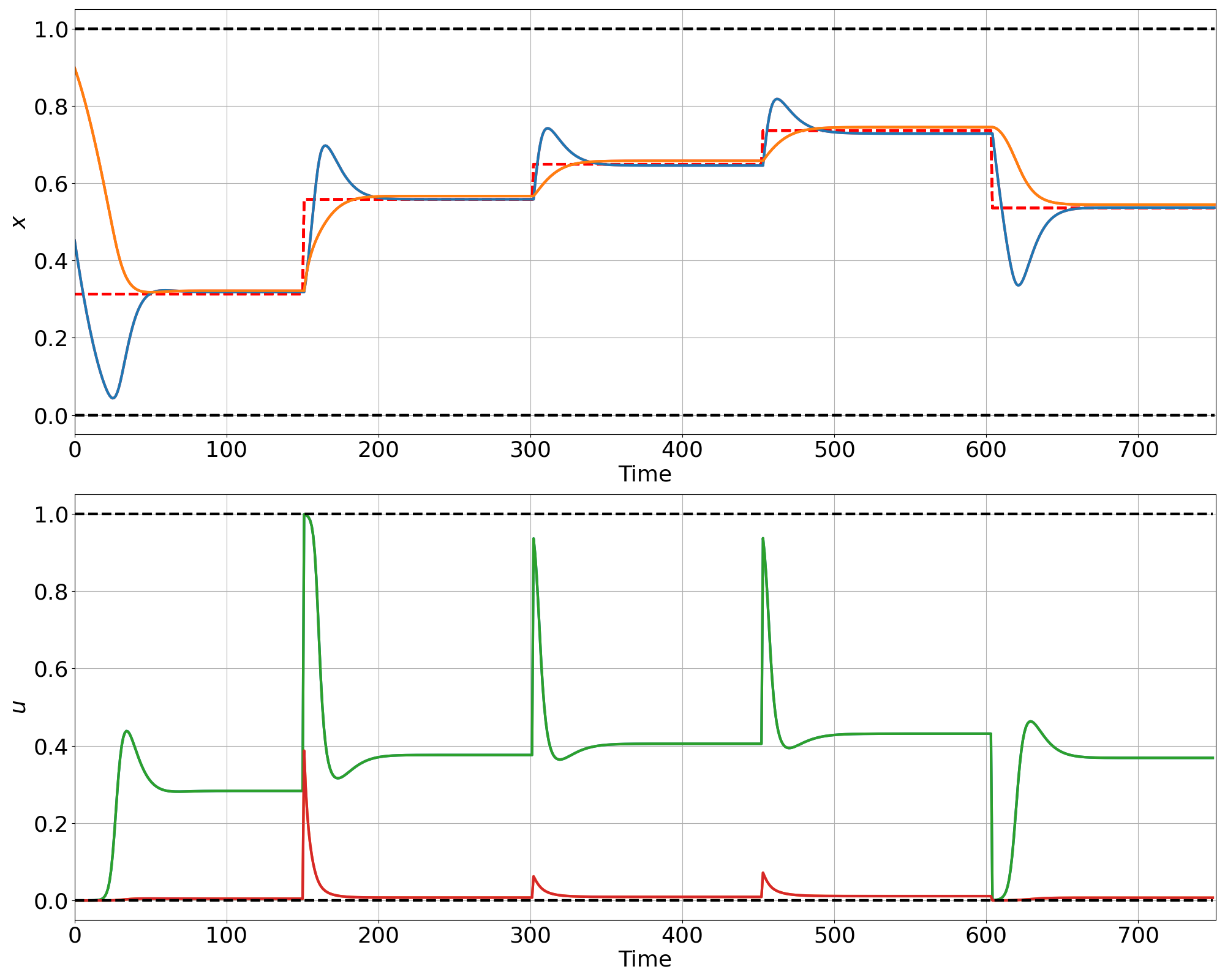

Example 2: Reference tracking of a nonlinear ODE system controlled by the DPC neural policy.

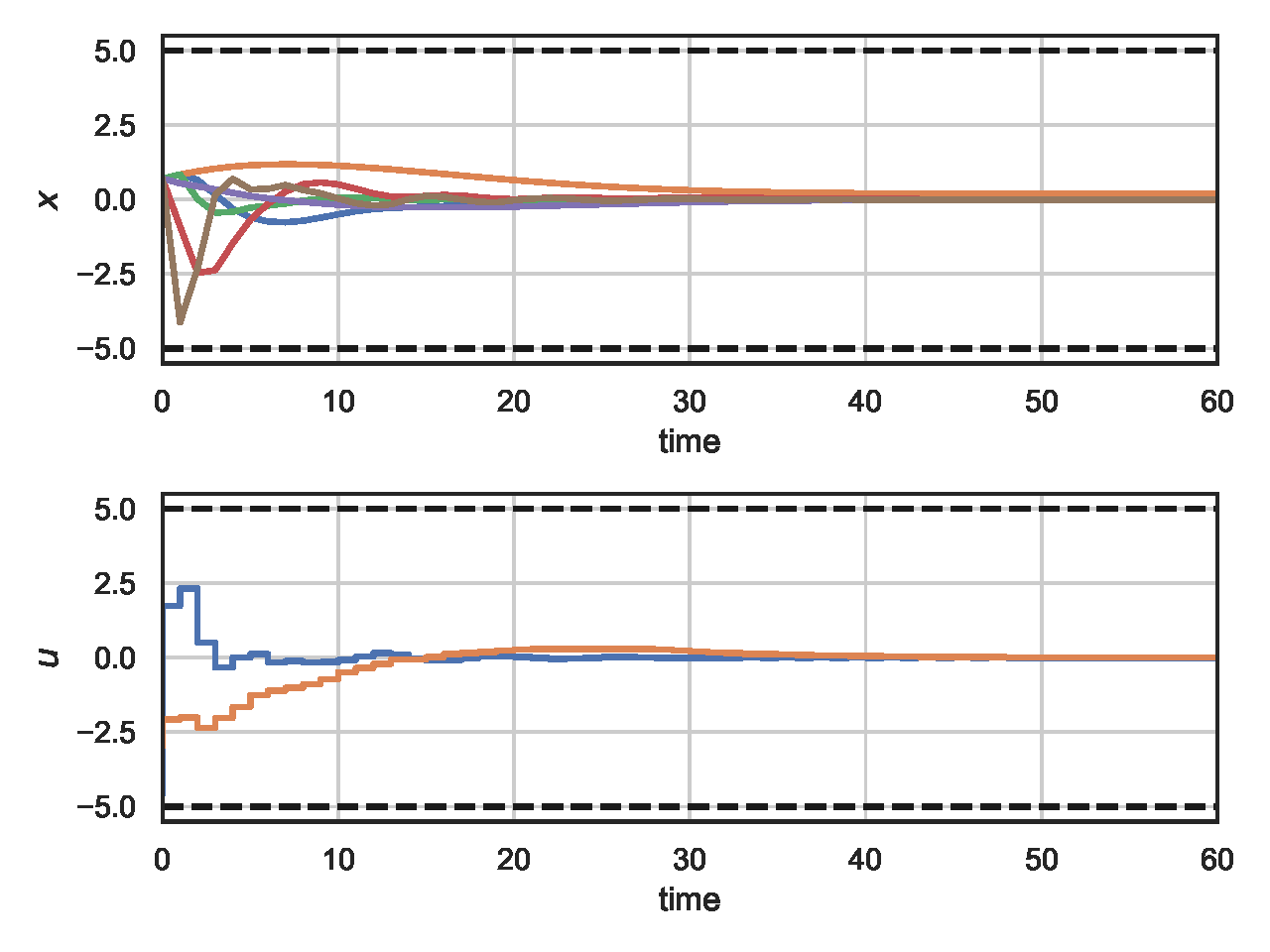

Example 3: Closed-loop reference tracking control trajectories for the quadcopter model controlled by DPC neural policy.

Example 4: Obstacle avoidance with nonlinear constraints via the learned DPC neural policy, compared to the online IPOPT solution.

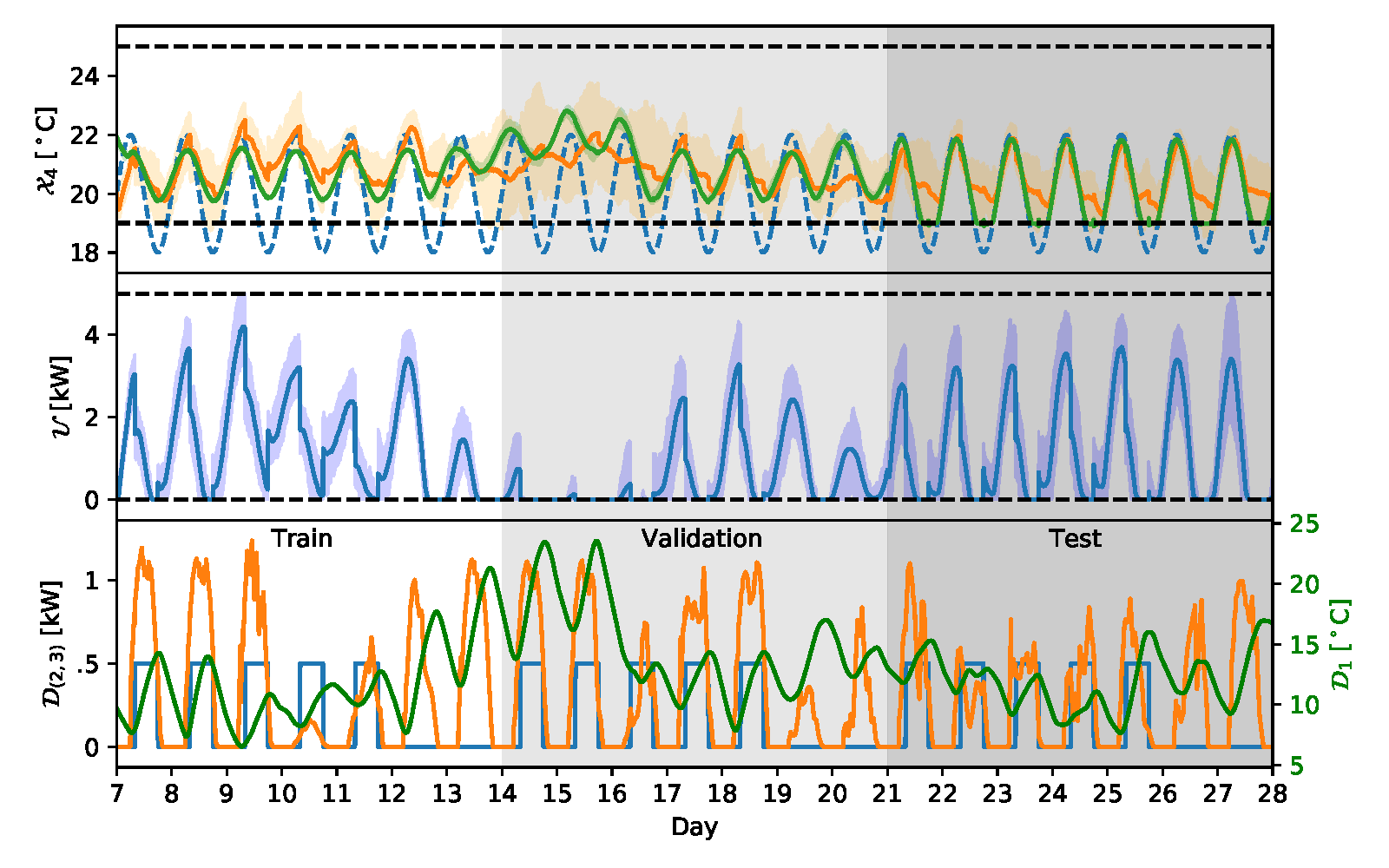

Example 5: Adaptive DPC of an unknown linear system subject to disturbances.

Example 6: Closed-loop control trajectories for the PVTOL aircraft model controlled by DPC neural policy.

Example 6: Closed-loop control trajectories for the PVTOL aircraft model controlled by approximate MPC neural policy.

Examples 1, 2, 3, and 4 are implemented using our newly developed Neuromancer library for learning-based constrained optimization in PyTorch: neuromancer.

See environment.yml to reproduce the Conda environment for running example 5.

- double_integrator_DPC.py - DPC double integrator example using the Neuromancer package

- double_integrator_eMPC.m - explicit MPC benchmark using the MPT3 toolbox

- ref_tracking_ODE.py - Reference tracking for a nonlinear ODE

- ref_tracking_ODE.ref_tracking_ODE.ipynb - Jupyter notebook version

- quad_3D_linearDPC.py - Reference tracking for a quadcopter model via DPC using the Neuromancer package

- CVXPY_linearMPC_quadcopter.py - Reference tracking for a quadcopter model via online MPC using CVXPY and the OSQP solver

- 2D_obstacle_avoidance_DPC.py - Parametric obstacle avoidance with nonlinear constraints via DPC using the Neuromancer package

- 2D_obstacle_avoidance_csadi.py - Online obstacle avoidance using CasADi and the IPOPT solver

- DeepMPC_sysID_ctrl_sec_2_4.py - policy optimization with ground truth model

- DeepMPC_sysID_ctrl_sec_2_5.py - adaptive policy optimization via online simultaneous system ID and policy updates

- DeepMPC_sysID_ctrl_sec_3_7 - computational aspects and scalability analysis

- vtol_aircraft_DPC_stabilize.py - Unsupervised DPC policy optimization for VTOL aircraft model using the Neuromancer package

- vtol_aircraft_aMPC.py - Approximate MPC supervised by online MPC solver

- pvtol_aircraft_iMPC.m - Online MPC solved in MATLAB using the YALMIP toolbox and the quadprog solver

@misc{drgona2022learning,

title={Learning Constrained Adaptive Differentiable Predictive Control Policies With Guarantees},

author={Jan Drgona and Aaron Tuor and Draguna Vrabie},

year={2020},

eprint={2004.11184},

archivePrefix={arXiv},

primaryClass={eess.SY}

}