MongoDB-AUTO-HA

Project to enable someone to easily demonstrate the fast failover and auto-healing of a MongoDB Replica Set, all run and demonstrated from a single laptop/workstation. Note: For MDB-SAs there is also a demo video.

Demo Prerequisites

Ensure the following software is already installed on the laptop/workstation.

- MongoDB database (version 3.6 or greater)

- Python 3 interpreter

- PIP Python package installer

- MongoDB's latest Python driver - PyMongo

- Python DNS library - dnspython

- OPTIONAL: Terminator multi-paned terminal (also see the manual)

Demo Setup

From the base directory of this project, use the laptop/workstation's native OS terminal/shell to launch the Terminator multi-paned terminal application, from which the whole demo will then be run (this uses a specific configuration file, .terminator_config, to give Terminator the layout that best shows this demo):

./terminator.sh

NOTE: If you are using MacOS and have issues using Fink to install Terminator, you can just use iTerm instead and lay out 5 instances of iTerm to roughly match the screenshot shown above (i.e. a 1st row of 2 iTerms + a 2nd row of 3 iTerms).

Demo Execution

- Using the 3 bottom panes shown in Terminator (or the iTerms), start 3 instances of the monitoring Bash/Mongo-Shell script, one in each pane, which will check the health of the local mongod servers listening on ports 27000, 27001 and 27002 respectively (IMPORTANT: do not change these ports, as other scripts assume these specific ports are being used):

./monitor.sh 27000

./monitor.sh 27001

./monitor.sh 27002

(initially these monitoring scripts will report that the mongod servers are down, because they have not been started yet)
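The kind of per-port health check described above could look roughly like the following in Python. This is a minimal sketch, not the project's monitor.sh (which is a Bash/Mongo-Shell script): the function names, the status labels, and the choice of the legacy isMaster command (picked because the prerequisites allow MongoDB 3.6) are all assumptions made for illustration.

```python
def classify_member(reply):
    """Map an isMaster reply (a dict), or None if unreachable, to a status label.

    The labels are hypothetical; monitor.sh may word and colour them differently.
    """
    if reply is None:
        return "DOWN"
    if "setName" not in reply:
        # The server answered but has no replica set configuration yet
        return "UP (no replica set)"
    if reply.get("ismaster") or reply.get("isWritablePrimary"):
        return "PRIMARY"
    if reply.get("secondary"):
        return "SECONDARY"
    return "OTHER"


def check_member(port, timeout_ms=1000):
    """Ask the local mongod on the given port for its state (requires PyMongo)."""
    # Imported here so classify_member can be used without the driver installed
    from pymongo import MongoClient
    from pymongo.errors import PyMongoError

    client = MongoClient("localhost", port, directConnection=True,
                         serverSelectionTimeoutMS=timeout_ms)
    try:
        return classify_member(client.admin.command("isMaster"))
    except PyMongoError:
        return classify_member(None)
    finally:
        client.close()
```

Calling check_member(27000), check_member(27001) and check_member(27002) in a loop would give a rough equivalent of the three monitoring panes, without the record counts.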

- In the top right pane, first show and explain the contents of the start.sh shell script, which will kill all existing mongod processes and then start 3 mongod servers, each listening on a different local port, then run it:

cat start.sh

./start.sh

(the 3 monitoring scripts will now report that the servers are up but not initialised as a replica set)
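To make the start-up step concrete, here is a small Python sketch that builds the three mongod command lines. Only the command for the first server (port 27000, directory /tmp/data/r0) appears in this README; the r1 and r2 directory names for the other two servers are an assumption extrapolated from it, and mongod_command is a hypothetical helper, not part of start.sh.

```python
def mongod_command(index, base_port=27000, repl_set="TestRS", data_root="/tmp/data"):
    """Build the argument list for the mongod at position `index` (0, 1 or 2)."""
    # Assumption: each server keeps its data in <data_root>/r<index>
    dbpath = f"{data_root}/r{index}"
    return [
        "mongod",
        "--replSet", repl_set,
        "--port", str(base_port + index),
        "--dbpath", dbpath,
        "--fork",
        "--logpath", f"{dbpath}/log/mongod.log",
    ]


if __name__ == "__main__":
    # Print the three command lines without running them
    for i in range(3):
        print(" ".join(mongod_command(i)))
```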

- In the top right pane, clear the existing output, then show and explain the contents of the configure.sh shell script, which will configure a replica set using the 3 running mongod servers, then run it:

clear

cat configure.sh

./configure.sh

(the 3 monitoring scripts will now report that all the servers are configured, with one shown as the primary and two shown as secondaries, each showing the number of records that have currently been inserted into an arbitrary database collection - currently zero)
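A replica set is initiated by sending one member a configuration document that names the set and lists its members. The sketch below builds such a document for the three local servers and, optionally, submits it with the replSetInitiate command through PyMongo. The set name TestRS and the ports come from this README; the function names and the use of localhost as the host name are assumptions, and configure.sh itself may do this differently.

```python
def replica_set_config(name="TestRS", ports=(27000, 27001, 27002), host="localhost"):
    """Build a replica set configuration document with one member per port."""
    return {
        "_id": name,
        "members": [
            {"_id": i, "host": f"{host}:{port}"} for i, port in enumerate(ports)
        ],
    }


def initiate(config, port=27000):
    """Send the configuration to one running member (requires PyMongo)."""
    # Imported here so replica_set_config works without the driver installed
    from pymongo import MongoClient

    # directConnection is needed because the server is not yet in a replica set
    client = MongoClient("localhost", port, directConnection=True)
    try:
        return client.admin.command("replSetInitiate", config)
    finally:
        client.close()
```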

- In the top left pane, first show and explain the contents of the insert.py Python script, which will insert new records into the arbitrary database collection in the replica set, then run it:

cat insert.py

./insert.py

(the 3 monitoring scripts will now report that the number of records contained in the database collections is increasing over time)
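An insert loop of this kind needs to survive a brief loss of the primary. The following is a minimal sketch of one way to do that, not the contents of insert.py: insert_records, its parameters and the seq field are hypothetical, and the caller decides which exception types count as transient.

```python
import time


def insert_records(collection, count, transient_errors, pause_secs=1.0):
    """Insert `count` documents, pausing and trying again on transient errors.

    Returns a tuple of (documents inserted, failed attempts).
    """
    inserted = 0
    failures = 0
    while inserted < count:
        try:
            collection.insert_one({"seq": inserted})
            inserted += 1
        except transient_errors as exc:
            # Report the problem and carry on, e.g. while a new primary is elected
            failures += 1
            print(f"Connection problem, will try again: {exc}")
            time.sleep(pause_secs)
    return inserted, failures
```

With PyMongo, collection would be a Collection object and transient_errors could be (pymongo.errors.AutoReconnect,). Note that this simple loop may insert a duplicate if an error is raised after the server has already applied the write.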

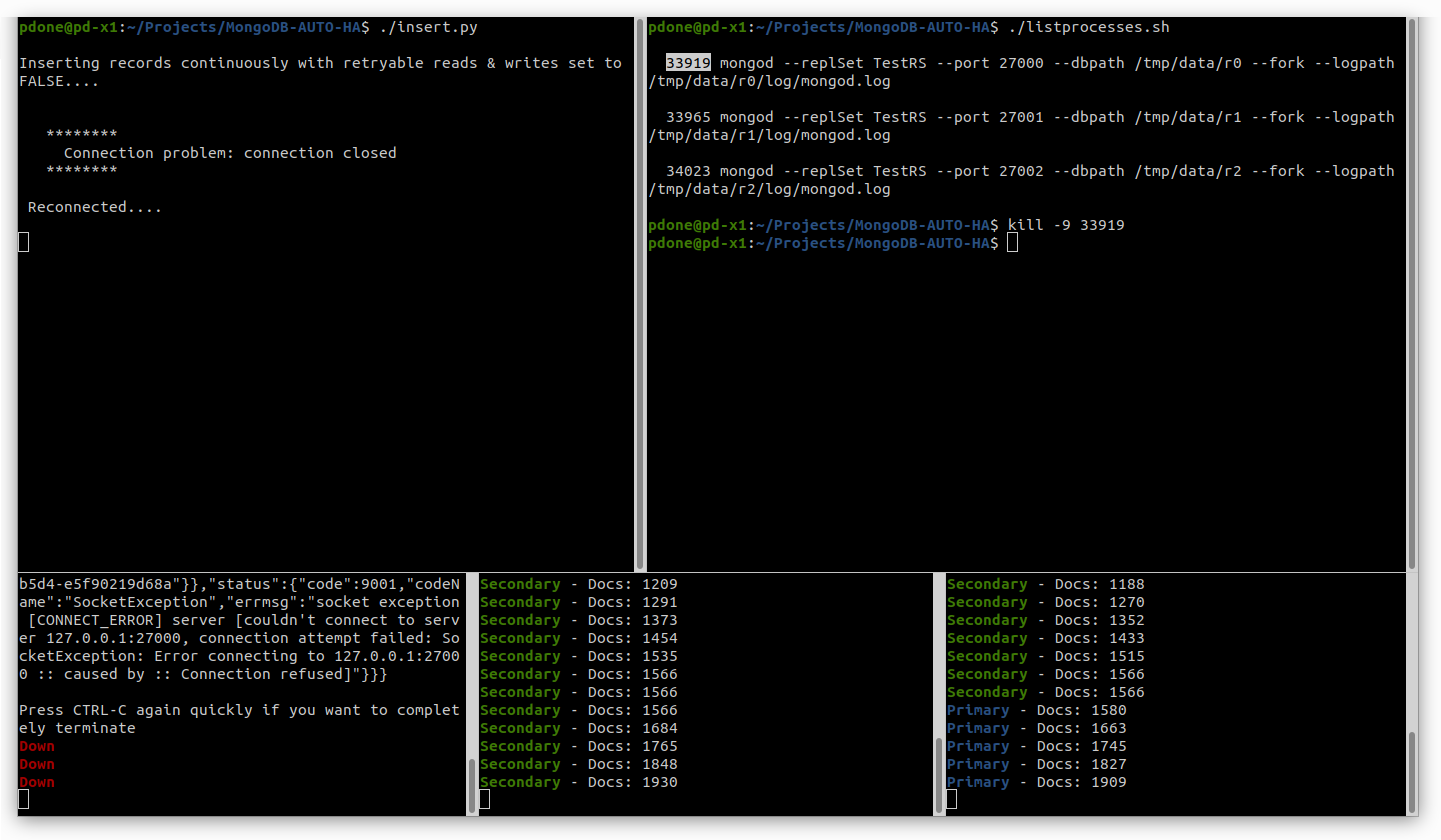

- In the top right pane, clear the existing output and run the following script to list the 3 running mongod servers alongside their OS process IDs, then abruptly terminate (kill -9) the process corresponding to the mongod which is currently shown as primary in the bottom 3 panes (replace the 12345 argument with the real process ID):

clear

./listprocesses.py

kill -9 12345

(the 3 monitoring scripts will report that one of the servers has gone down; for a second or two the remaining 2 servers are both still secondaries and no more records are inserted, then one automatically becomes the primary and records are inserted continuously again; also notice that in the top left pane the Python script reports a temporary connection problem before carrying on its work, because retryable reads and writes have not been enabled for it)
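Matching a mongod's port to its OS process ID can be done by reading the process list. The sketch below parses the output of `ps -eo pid,args` for that purpose; it is an illustration only, not the contents of listprocesses.py, and the function names and the exact ps invocation are assumptions.

```python
import re
import subprocess


def mongod_pids(ps_output):
    """Map each mongod's --port value to its PID, given `ps -eo pid,args` output."""
    pids = {}
    for line in ps_output.splitlines():
        # A PID followed by a command whose executable name ends in "mongod"
        match = re.match(r"\s*(\d+)\s+(\S*mongod)\s+(.*)", line)
        if not match:
            continue
        port = re.search(r"--port[ =](\d+)", match.group(3))
        if port:
            pids[int(port.group(1))] = int(match.group(1))
    return pids


def list_mongods():
    """Run ps and return the port-to-PID mapping for local mongod processes."""
    result = subprocess.run(["ps", "-eo", "pid,args"],
                            capture_output=True, text=True, check=True)
    return mongod_pids(result.stdout)
```

The PID listed against the port that the bottom panes show as primary is the one to pass to kill -9.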

- In the top right pane, clear the existing output and display the contents of the start.sh shell script, then copy the one line corresponding to the killed mongod server and paste and execute it in the same pane, to restart the failed mongod server (the example below shows the command line for starting the first of the 3 mongod servers, which you may need to change if it was one of the other 2 servers that was killed):

clear

cat start.sh

mongod --replSet TestRS --port 27000 --dbpath /tmp/data/r0 --fork --logpath /tmp/data/r0/log/mongod.log

(the 3 monitoring scripts will now report that all 3 servers are happily running and the recovered server, shown now as a secondary, is catching up on the records it missed when it was down)

- In the top left pane, stop the running Python script and then restart it with the argument retry passed to it, to instruct the PyMongo driver to enable retryable reads & writes, to further insulate the client application from the short failover window that occurs when a primary goes down:

# Type CTRL-C / CMD-C

clear

./insert.py retry

(notice that the output of this Python script now shows that retryable reads & writes are set to TRUE)
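Retryable reads and writes are driver options that can be set in the connection string. The sketch below shows one way a retry argument might be turned into the retryWrites and retryReads URI options; how insert.py actually does this is not shown in this README, so the function names and the URI layout are assumptions.

```python
def retry_requested(argv):
    """Return True if the first command-line argument is the word 'retry'."""
    return len(argv) > 1 and argv[1].lower() == "retry"


def replica_set_uri(retry, ports=(27000, 27001, 27002), repl_set="TestRS"):
    """Build a connection string for the local replica set.

    The retry flag switches both retryable writes and retryable reads on or off.
    """
    hosts = ",".join(f"localhost:{port}" for port in ports)
    flag = "true" if retry else "false"
    return (f"mongodb://{hosts}/?replicaSet={repl_set}"
            f"&retryWrites={flag}&retryReads={flag}")
```

The resulting string can be passed to pymongo.MongoClient, for example MongoClient(replica_set_uri(retry_requested(sys.argv))). With retries enabled, the driver retries a failed operation once after selecting a server again, which is typically enough to hide a short election from the application.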

- In the top right pane, clear the existing output and list the mongod server process IDs again, then terminate the one currently shown as primary (replace the 12345 argument with the real process ID):

clear

./listprocesses.py

kill -9 12345

(this time, in the top left pane, the Python script will not report any connection problems when the failover occurs; notice that the monitoring scripts in the bottom 3 panes show the number of inserted records stalling for a second or two, before increasing again when one of the two remaining mongod servers automatically becomes the primary)

Credits / Thanks

- Eugene Bogaart for coming up with the original server health detection and colour coding mechanism, which I then adapted further in monitor.sh

- Jim Blackhurst for the original suggestion to use Terminator for displaying the 5 required 'views'

- Jake McInteer for testing on MacOS + finding and reporting some bugs