Understanding Deep Learning Requires Rethinking Generalization (arxiv)

The aim is to understand what differentiates neural networks that generalize well from those that do not.

Datasets: CIFAR10, ImageNet

Models: MLP-512, Inception (tiny), Wide ResNet, AlexNet, Inception_v3

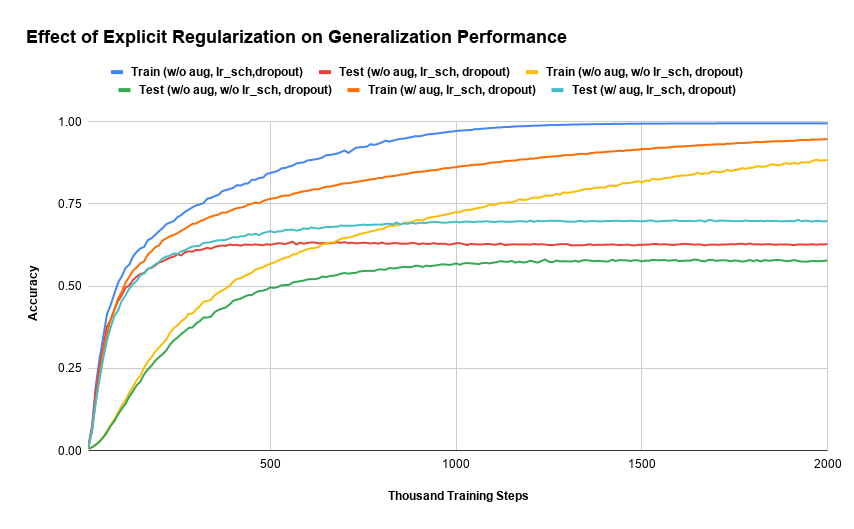

- Effect of explicit regularization like augmentation, weight decay, dropout

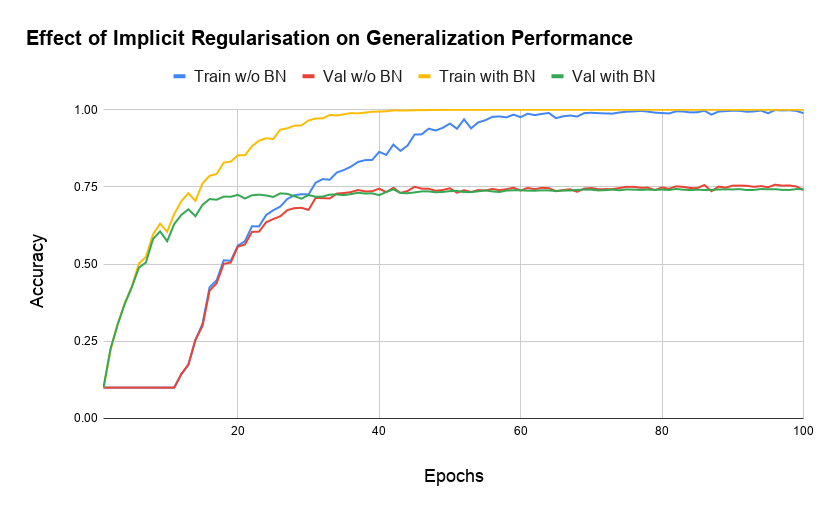
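As a rough illustration of the explicit regularizers named above, the following NumPy sketch shows weight decay as an extra term in an SGD update, inverted dropout, and a random horizontal flip as a simple augmentation. The function names, default hyperparameters, and the choice of flip are assumptions made for this example. They are not taken from the experiments reported here.

```python
import numpy as np

def sgd_step(weights, grad, lr=0.01, weight_decay=5e-4):
    """One SGD update with L2 weight decay: w <- w - lr * (grad + wd * w)."""
    return weights - lr * (grad + weight_decay * weights)

def dropout(activations, p=0.5, rng=None, training=True):
    """Inverted dropout: zero each unit with probability p, rescale the rest."""
    if not training or p == 0.0:
        return activations
    if rng is None:
        rng = np.random.default_rng(0)
    keep = rng.random(activations.shape) >= p
    return activations * keep / (1.0 - p)

def random_horizontal_flip(image, rng):
    """Flip an (H, W, C) image left-to-right with probability 0.5."""
    return image[:, ::-1, :] if rng.random() < 0.5 else image

# Hypothetical usage on stand-in data.
rng = np.random.default_rng(0)
w = np.ones(4)
w_next = sgd_step(w, grad=np.zeros(4))      # weight decay alone shrinks w
hidden = dropout(np.ones((2, 8)), p=0.5, rng=rng)
augmented = random_horizontal_flip(np.zeros((32, 32, 3)), rng)
```

Turning each of these off in isolation is one way to measure how much each regularizer contributes to the gap between training and test accuracy.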

- Effect of implicit regularization like BatchNorm

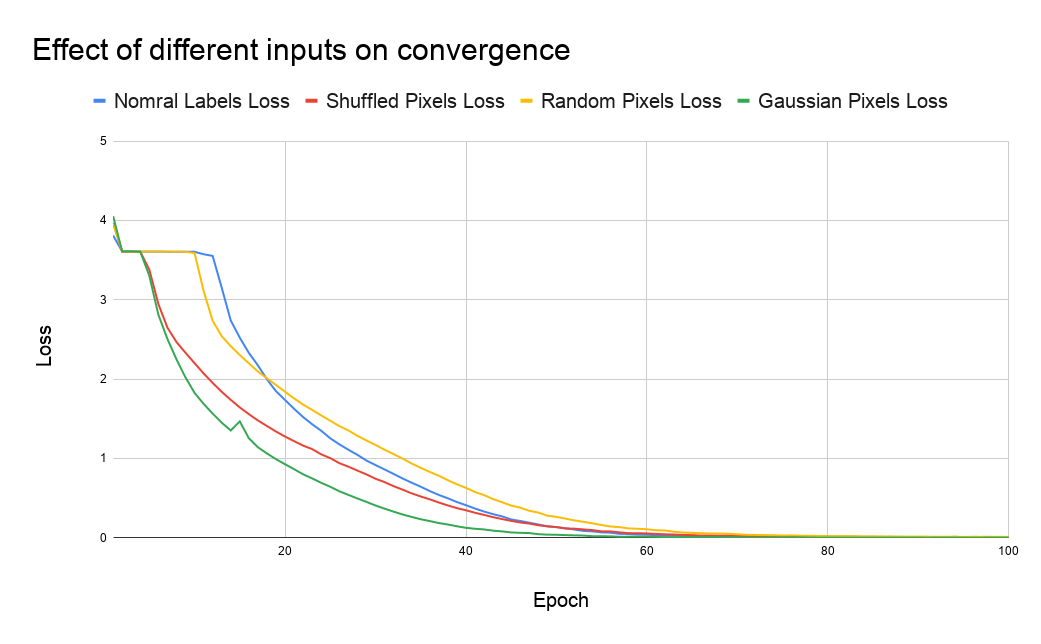

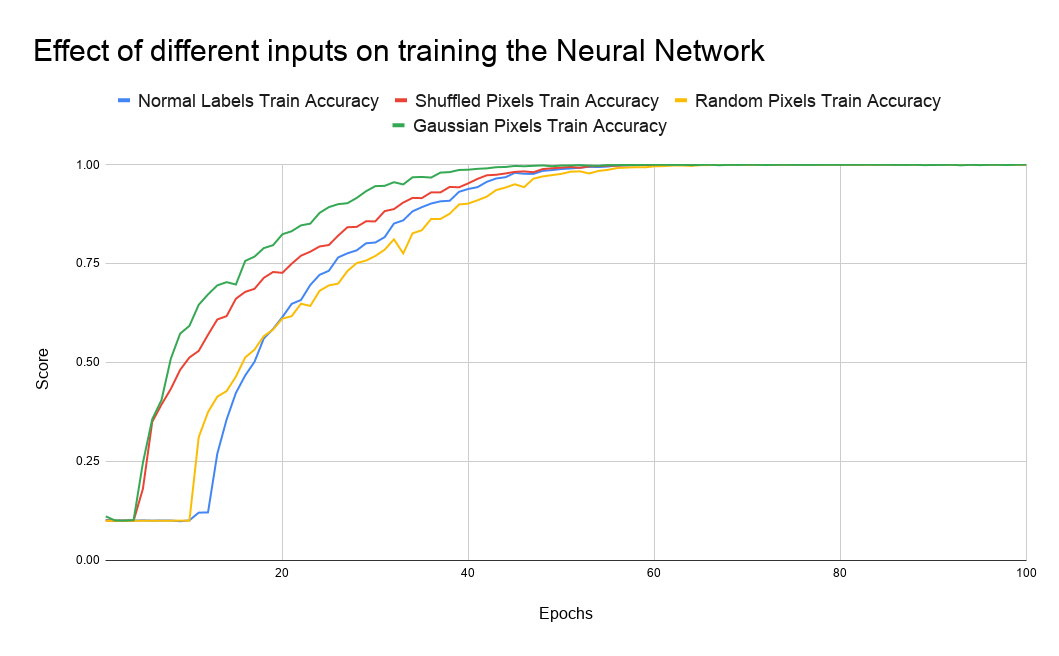

- Input data corruption: Pixel shuffle, Gaussian pixels, Random pixels

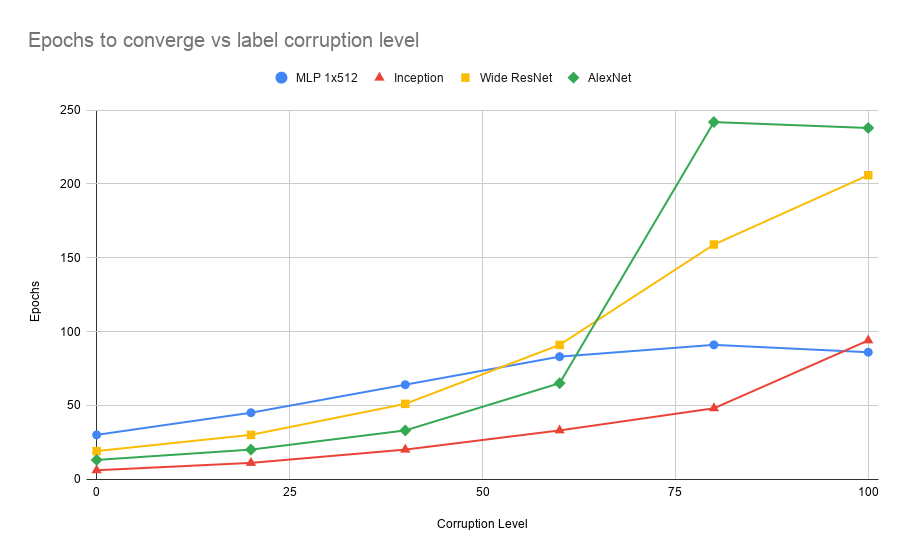

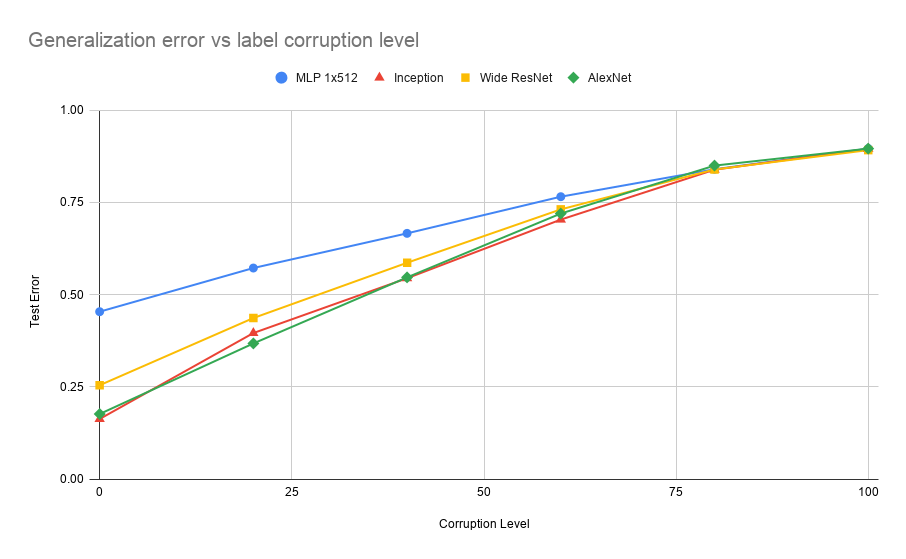
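The three input corruptions listed above can be sketched in NumPy as follows. This is a minimal sketch following the definitions in the original paper, not necessarily the code used for these plots: pixel shuffle applies one fixed permutation to every image, random pixels applies a different permutation to each image, and Gaussian pixels replaces the image with noise matching the dataset mean and standard deviation. The function names are hypothetical, and permuting the flattened values (channels included) is an assumption of this example.

```python
import numpy as np

def shuffle_pixels(images, seed=0):
    """Apply the same random permutation of pixel positions to every image."""
    rng = np.random.default_rng(seed)
    flat = images.reshape(images.shape[0], -1)
    perm = rng.permutation(flat.shape[1])
    return flat[:, perm].reshape(images.shape)

def random_pixels(images, seed=0):
    """Apply an independent random permutation to each image."""
    rng = np.random.default_rng(seed)
    flat = images.reshape(images.shape[0], -1)
    dim = flat.shape[1]
    out = np.stack([row[rng.permutation(dim)] for row in flat])
    return out.reshape(images.shape)

def gaussian_pixels(images, seed=0):
    """Replace images with Gaussian noise matching the dataset mean and std."""
    rng = np.random.default_rng(seed)
    return rng.normal(images.mean(), images.std(), size=images.shape)

# Stand-in batch shaped like CIFAR10 images: (N, H, W, C), values in [0, 1).
images = np.random.default_rng(42).random((8, 32, 32, 3))
images[1] = images[0]  # duplicate one image to show the difference below

shuffled = shuffle_pixels(images)
randomized = random_pixels(images)
gaussian = gaussian_pixels(images)
```

Because `shuffle_pixels` reuses one permutation, the two duplicated images stay identical after corruption, whereas `random_pixels` scrambles them differently. In both cases the per-image pixel values are preserved and only their positions change.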

- Label corruption with different corruption levels, from 1% to 100%
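One way to produce labels at a given corruption level is sketched below. It assumes the scheme described in the original paper, where each label is independently replaced, with probability equal to the corruption level, by a class drawn uniformly at random. The function name `corrupt_labels` and its parameters are hypothetical, and the exact procedure used for these experiments may differ.

```python
import numpy as np

def corrupt_labels(labels, corruption_level, num_classes=10, seed=0):
    """Replace each label with a uniformly random class with the given probability.

    Returns the corrupted labels and a boolean mask of the replaced positions.
    A replaced label can coincide with the original by chance.
    """
    if not 0.0 <= corruption_level <= 1.0:
        raise ValueError("corruption_level must be in [0, 1]")
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    mask = rng.random(labels.shape[0]) < corruption_level
    random_labels = rng.integers(0, num_classes, size=labels.shape[0])
    return np.where(mask, random_labels, labels), mask

# Stand-in labels for a 10-class dataset such as CIFAR10.
clean = np.arange(1000) % 10
noisy, mask = corrupt_labels(clean, corruption_level=0.5)
```

At a corruption level of 1.0 every label is redrawn, so the labels carry no information about the images, and any training accuracy above chance reflects memorization.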

- w/o Augmentation, Learning Rate Scheduler, Dropout: checkpoint

- w/o Augmentation, w/o Learning Rate Scheduler, Dropout: checkpoint

- with Augmentation, Learning Rate Scheduler, Dropout: checkpoint

- Siddhant Bansal (@Sid2697)

- Piyush Singh (@piyush-kgp)

- Madhav Agarwal (@mdv3101)