| title | version | writer | type | objective |
|---|---|---|---|---|
| Guidance module + SiamMask + Onion-peel | 2.0 | khosungpil | Version document | Samsung SDS |

- OS: Ubuntu 16.04

- CPU Resource: Intel(R) Core(TM) i7-6700 CPU @ 3.40GHz

- GPU Resource: GTX 1080 Ti 1x

- Docker Version: 19.03.8

├── demo.sh
├── docker_setting.sh
├── Siammask_sharp
│   ├── SiamMask_DAVIS.pth
│   ├── config
│   ├── models
│   ├── tools
│   └── utils
├── OnionPeel
│   ├── OPN.pth
│   └── TCN.pth
├── data
│   └── ${DATA_NAME}
│       ├── ???.MP4 (Video File)
│       └── *.jpg
└── results
    └── ${DATA_NAME}
        ├── final
        │   └── *.jpg
        ├── masks : Binary mask
        │   └── *.png
        ├── masks2 : Color mask in input images
        │   └── *.png
        ├── input.gif
        ├── final.gif
        ├── mask.gif
        └── mask2.gif

| Directory name | role |
|---|---|
| masks | Extracted binary masks |
| masks2 | Color (cyan) masks overlaid on the input images |
| final | Output after SiamMask and OPN |

- Update the docker image on Docker Hub (torch 0.4.1 -> torch 1.0.0)

- Add video_preprocessing code that extracts per-frame images from a video to match the input format of Siammask_sharp

- Add padding around the initial input frame in the guidance module

- Add various options to demo.sh

| Options | role |
|---|---|
| DATA_NAME | Folder name in the "./data" directory |
| VIDEO_SCALE_FACTOR | Resize factor applied when converting a high-resolution video into lower-resolution per-frame images (default=0.25) |
| PADDING_FACTOR | In the guidance module, pad by image_size * padding_factor (default=0.05). Example: for 320 x 240 images, a padding_factor of 0.05 gives a 16 x 12 pad |
| OPN_DILATE_FACTOR | Dilation applied to the raw mask as preprocessing (default=3) |
| OPN_SCALE_FACTOR | Additional resize factor applied to the per-frame images so that they fit in memory (default=0.5) |
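The arithmetic behind the scale and padding options can be sketched as follows. This is a minimal illustration, not the code used in demo.sh: the helper names `scaled_size` and `pad_size`, the 1920 x 1080 source resolution, and the rounding to the nearest integer are all assumptions made for the example.

```python
def scaled_size(width, height, factor):
    """Return (width, height) after scaling both sides by factor.

    Rounding to the nearest integer is an assumption; the actual
    scripts may truncate instead.
    """
    return int(round(width * factor)), int(round(height * factor))


def pad_size(width, height, padding_factor=0.05):
    """Return the (horizontal, vertical) pad: image_size * padding_factor."""
    return int(round(width * padding_factor)), int(round(height * padding_factor))


if __name__ == "__main__":
    # Hypothetical 1920 x 1080 source video.
    frame_w, frame_h = scaled_size(1920, 1080, 0.25)   # VIDEO_SCALE_FACTOR
    opn_w, opn_h = scaled_size(frame_w, frame_h, 0.5)  # OPN_SCALE_FACTOR
    print(frame_w, frame_h)  # 480 270
    print(opn_w, opn_h)      # 240 135
    # Example from the options table: 320 x 240 images, padding_factor 0.05.
    print(pad_size(320, 240, 0.05))  # (16, 12)
```

Under these assumptions the two scale factors compound, so the frames reaching OPN have 0.25 * 0.5 = 0.125 of the original side length.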

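- Using nvidia-docker is recommended.

- Run xhost local:root on the host so that root can connect to the X server.

- Edit MOUNTED_PATH in docker_setting.sh so that it points to the directory containing the code.

- Run docker_setting.sh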

bash docker_setting.sh

- Go to /sds/ in the running container.

- If you have a video file, run demo.sh with line 19 (python video_preprocess.py ~) enabled. This extracts per-frame images from the video.

- You can add video extensions to extension_list on line 23 of video_preprocess.py,

default: '.mov', 'MOV', '.MP4'
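As a rough illustration of how such an extension list can be used to pick out video files, consider the sketch below. It is not the code from video_preprocess.py: the helper `find_videos` and the added '.avi' entry are hypothetical, and suffix matching with `str.endswith` is an assumption about how the list is applied.

```python
# Default entries quoted above; '.avi' is a hypothetical addition.
extension_list = ['.mov', 'MOV', '.MP4', '.avi']


def find_videos(filenames, extensions=extension_list):
    """Return the file names that end with one of the given extensions.

    Matching is case-sensitive, so '.mp4' would need its own entry.
    """
    return [name for name in filenames if name.endswith(tuple(extensions))]


if __name__ == "__main__":
    names = ["clip.MP4", "frame_0001.jpg", "walk.mov", "run.MOV", "jump.avi"]
    print(find_videos(names))
```

Because the comparison is case-sensitive in this sketch, lower-case and upper-case variants of an extension have to be listed separately.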


| model | path | Hyperlink |
|---|---|---|
| SiamMask_DAVIS | ./Siammask_sharp | [Download] |
| OPN | ./OnionPeel/ | [Download] |
| TCN | ./OnionPeel/ | [Download] |

- You can edit the options in demo.sh

- Run demo.sh in the docker container

bash demo.sh

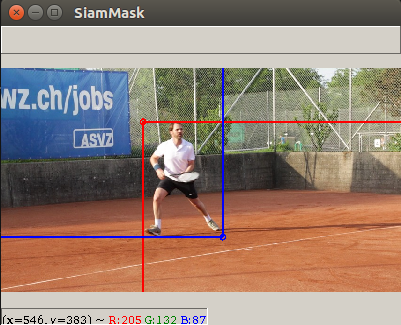

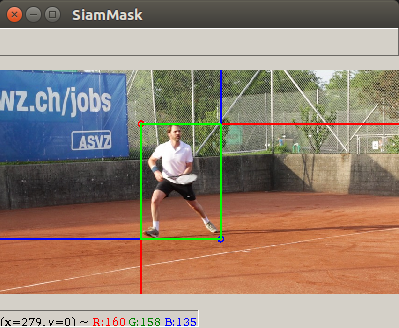

- Select a bounding box.

- If a yellow pad appears:

  - Press 'a' on the keyboard to confirm the bounding box.

  - Press 'b' on the keyboard to run inference with each model.

- You can check the masks produced by SiamMask in results/${DATA_NAME}/masks

- You can check the results produced by Onion-peel in results/${DATA_NAME}/final

- Finally, you can check the gif files in results/${DATA_NAME}
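To confirm that a run produced everything listed in the directory layout, a small check like the one below can help. It is only a sketch: the helper `missing_outputs` is hypothetical and is not part of the repository; the directory and file names are taken from the results tree described in this document.

```python
from pathlib import Path

# Names taken from the results tree in this document.
RESULT_DIRS = ("final", "masks", "masks2")
RESULT_GIFS = ("input.gif", "final.gif", "mask.gif", "mask2.gif")


def missing_outputs(data_name, root="results"):
    """Return the expected output paths that do not exist yet."""
    base = Path(root) / data_name
    missing = [base / d for d in RESULT_DIRS if not (base / d).is_dir()]
    missing += [base / g for g in RESULT_GIFS if not (base / g).is_file()]
    return [str(path) for path in missing]


if __name__ == "__main__":
    # "my_video" is a placeholder for your DATA_NAME.
    for path in missing_outputs("my_video"):
        print("missing:", path)
```

An empty list means every expected directory and gif file is present; it does not check the contents of the image folders.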

[CVPR 2019] Fast Online Object Tracking and Segmentation: A Unifying Approach

[Github]

[ICCV 2019] Onion-Peel Networks for Deep Video Completion

[Github]