This repo contains the source code for the experiments in our paper:

Riemannian Preconditioned LoRA for Fine-Tuning Foundation Models

Fangzhao Zhang, Mert Pilanci

Paper: https://arxiv.org/abs/2402.02347

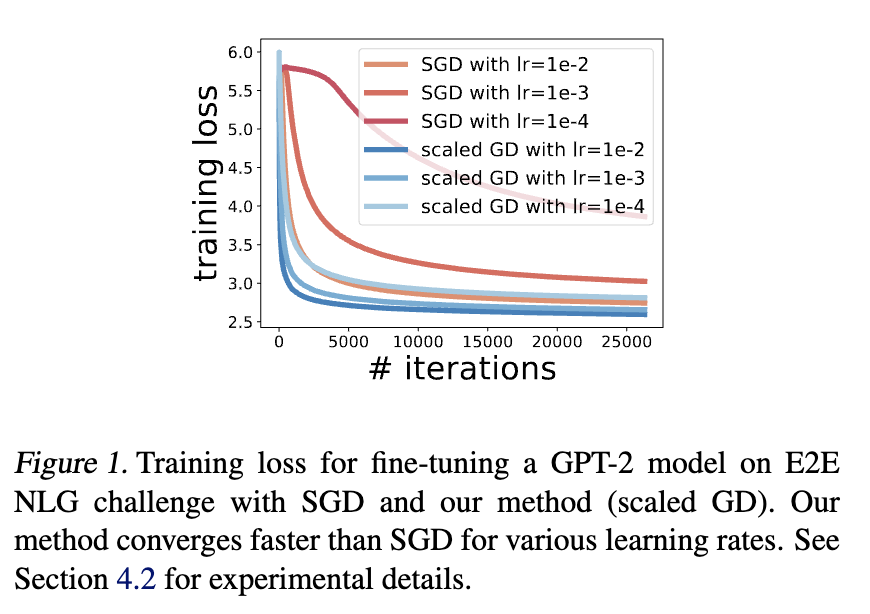

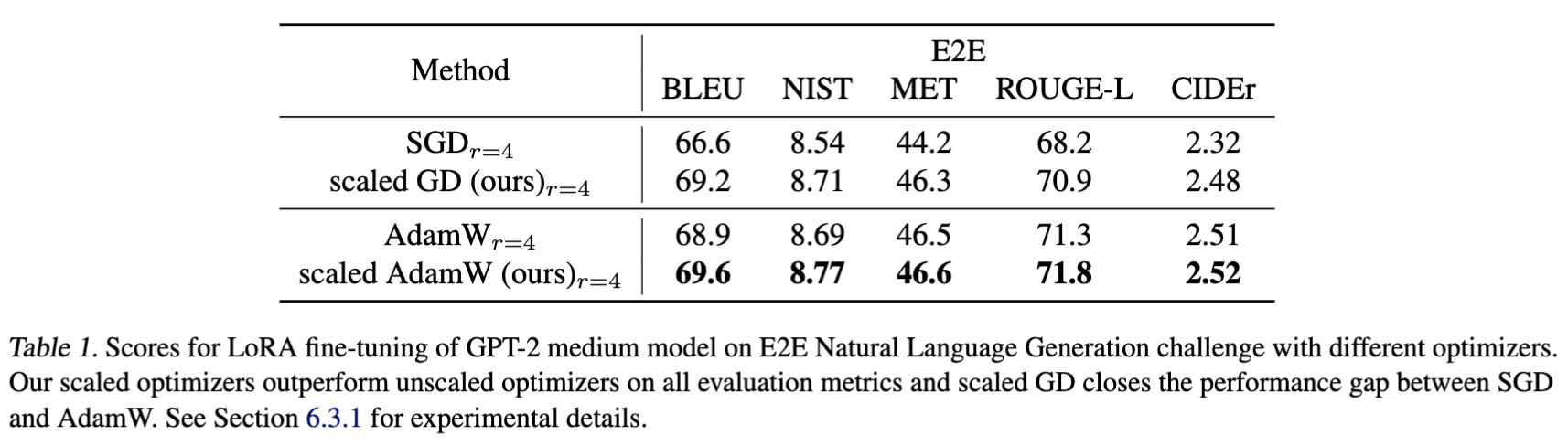

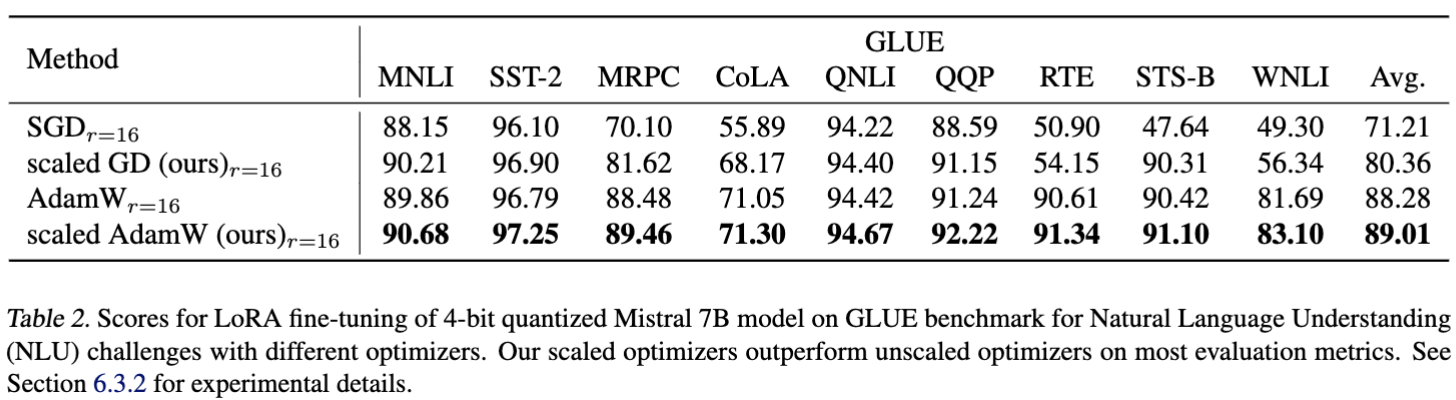

In this work, we study how to enhance the Low-Rank Adaptation (LoRA) fine-tuning procedure by introducing a Riemannian preconditioner into its optimization step. Specifically, we introduce an r × r preconditioner in each gradient step, where r is the LoRA rank. See the paper for the full per-iteration update rule.

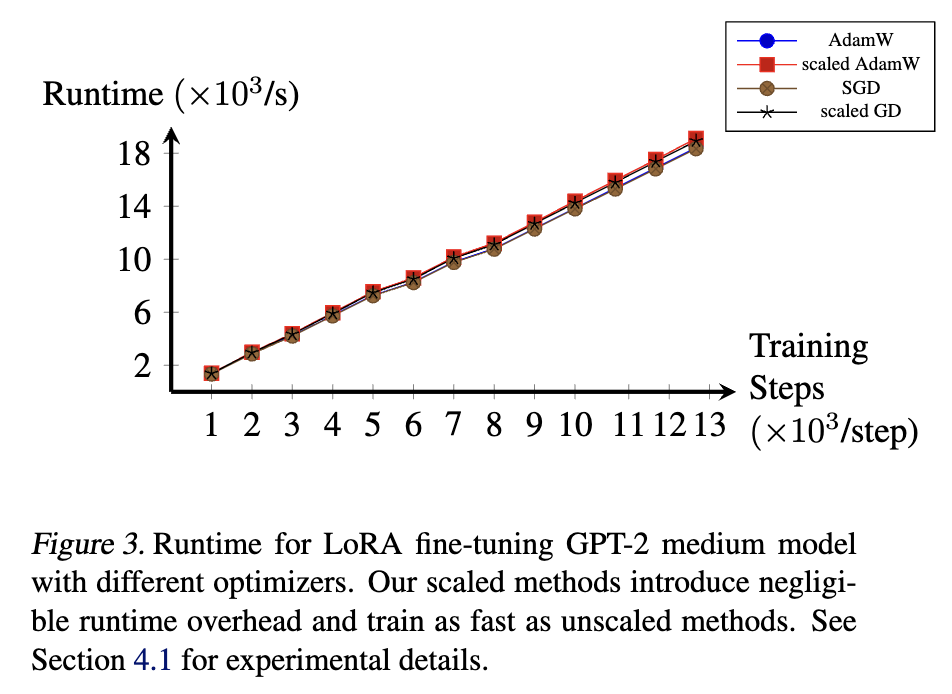
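To make the idea concrete, here is a minimal NumPy sketch of one preconditioned step, following the scaled gradient descent form of Tong et al. (2021) cited in the references. It is an illustration under assumptions, not the code in this repo: the function names `preconditioned_lora_step` and `toy_gradients`, the shape convention (adapter product `B @ A` with `B` of shape m × r and `A` of shape r × n), the damping term `delta`, and the toy least-squares loss are all chosen here for the example.

```python
import numpy as np


def preconditioned_lora_step(A, B, grad_A, grad_B, lr=0.1, delta=1e-6):
    """One preconditioned gradient step on LoRA factors (illustrative sketch).

    Assumed shapes: B is (m, r), A is (r, n), so the adapter is B @ A.
    `delta` is a small damping term (an assumption here) that keeps the
    r x r systems well-posed when a factor is close to zero.
    """
    r = A.shape[0]
    eye = np.eye(r)
    # Each factor's gradient is rescaled by an r x r matrix built from the other factor.
    precond_A = B.T @ B + delta * eye
    precond_B = A @ A.T + delta * eye
    # Computes inv(precond_A) @ grad_A without forming the inverse.
    new_A = A - lr * np.linalg.solve(precond_A, grad_A)
    # Computes grad_B @ inv(precond_B), using the symmetry of precond_B.
    new_B = B - lr * np.linalg.solve(precond_B, grad_B.T).T
    return new_A, new_B


def toy_gradients(A, B, target):
    """Gradients of the toy loss 0.5 * ||B @ A - target||_F^2 w.r.t. A and B."""
    residual = B @ A - target
    return B.T @ residual, residual @ A.T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n, r = 6, 5, 2
    B = rng.standard_normal((m, r))
    A = rng.standard_normal((r, n))
    target = rng.standard_normal((m, n))
    grad_A, grad_B = toy_gradients(A, B, target)
    A, B = preconditioned_lora_step(A, B, grad_A, grad_B, lr=0.1)
    print(A.shape, B.shape)
```

The extra cost per step is two r × r linear solves, which is small when r is much smaller than m and n. With `lr=1` and `delta=0` on this toy loss, each factor's update lands on the least-squares solution for that factor with the other held fixed, and the updated product `B @ A` is unchanged if the factors are rescaled as `c * B` and `A / c` before the step.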

In this project, we experiment with GPT-2 fine-tuning, Mistral 7B fine-tuning, Mix-of-Show fine-tuning, and Custom Diffusion fine-tuning.

- GPT-2 Fine-Tuning (see GPT-2/ for experiment code.)

- Mistral 7B Fine-Tuning (see Mistral-7B/ for experiment code.)

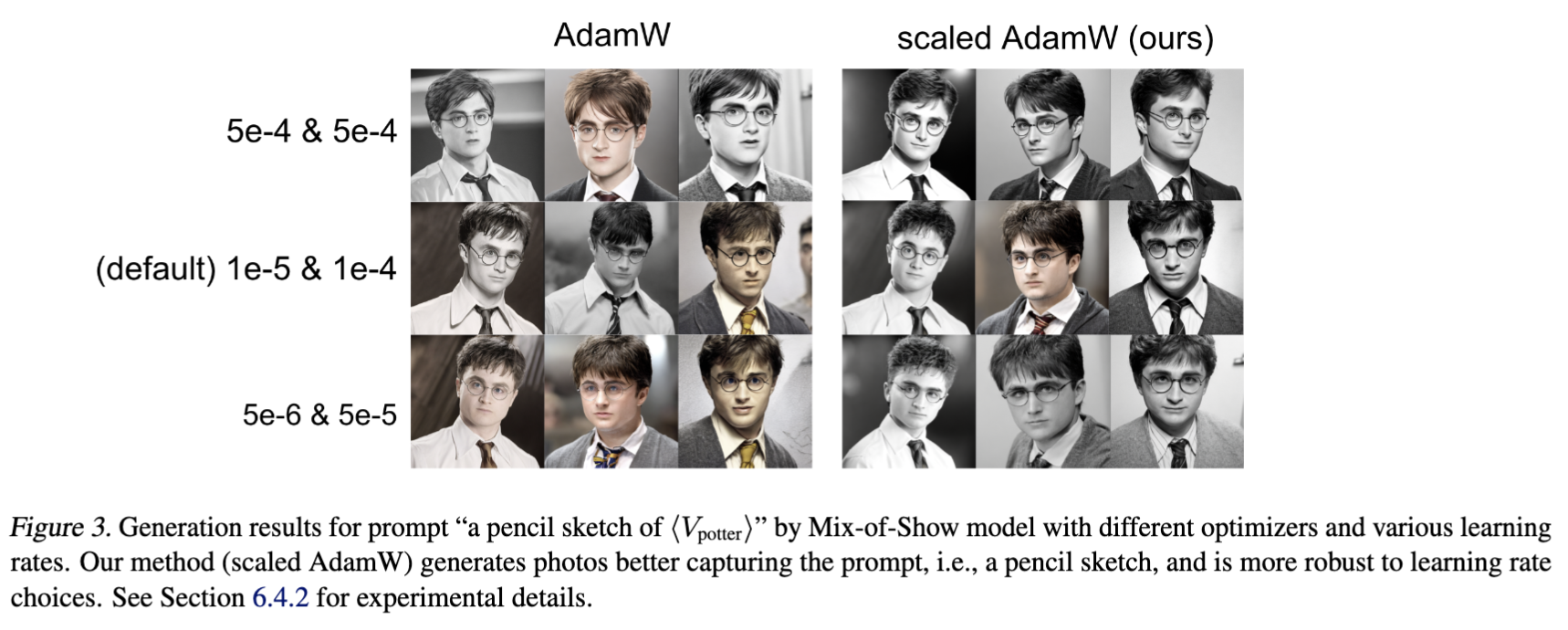

- Mix-of-Show Fine-Tuning (see Mix-of-Show/ for experiment code.)

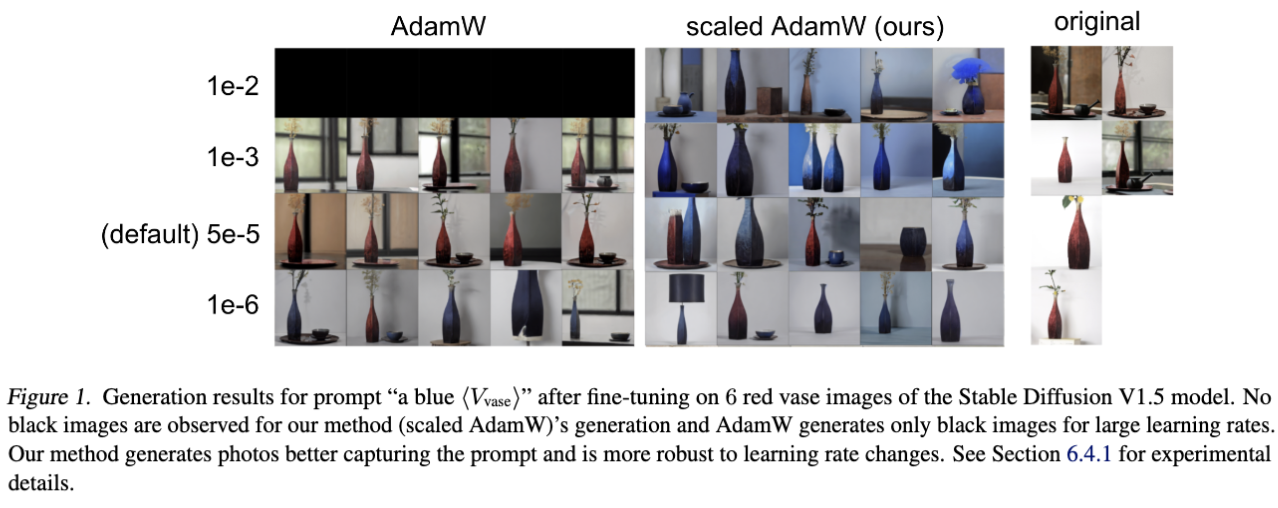

- Custom Diffusion Fine-Tuning (see Object_Generation/ for experiment code.)

See Parameter Reference in each section for parameter choices for each experiment. See Runtime Experiment in GPT-2/ for runtime experiment details.

Please contact us or post an issue if you have any questions.

- Fangzhao Zhang (zfzhao@stanford.edu)

This work has been heavily influenced by recent developments in low-rank matrix optimization and parameter-efficient fine-tuning (PEFT) research. We cite several important references here; a more complete reference list is given in our paper. Our experimental code is mainly built on the following repositories: LoRA (Hu et al., 2021), Mix-of-Show (Gu et al., 2023), and Custom Diffusion.

@article{tong2021accelerating,

title={Accelerating Ill-Conditioned Low-Rank Matrix Estimation via Scaled Gradient Descent},

author={Tian Tong and Cong Ma and Yuejie Chi},

journal={arXiv preprint arXiv:2005.08898},

year={2021}

}

@inproceedings{hu2022lora,

title={Lo{RA}: Low-Rank Adaptation of Large Language Models},

author={Edward J Hu and Yelong Shen and Phillip Wallis and Zeyuan Allen-Zhu and Yuanzhi Li and Shean Wang and Lu Wang and Weizhu Chen},

booktitle={International Conference on Learning Representations},

year={2022},

url={https://openreview.net/forum?id=nZeVKeeFYf9}

}

@article{gu2023mixofshow,

title={Mix-of-Show: Decentralized Low-Rank Adaptation for Multi-Concept Customization of Diffusion Models},

author={Gu, Yuchao and Wang, Xintao and Wu, Jay Zhangjie and Shi, Yujun and Chen, Yunpeng and Fan, Zihan and Xiao, Wuyou and Zhao, Rui and Chang, Shuning and Wu, Weijia and Ge, Yixiao and Shan, Ying and Shou, Mike Zheng},

journal={arXiv preprint arXiv:2305.18292},

year={2023}

}

@misc{zhang2024riemannian,

title={Riemannian Preconditioned LoRA for Fine-Tuning Foundation Models},

author={Fangzhao Zhang and Mert Pilanci},

year={2024},

eprint={2402.02347},

archivePrefix={arXiv},

primaryClass={cs.LG}

}