This repository contains the implementation of the following paper:

ReVersion: Diffusion-Based Relation Inversion from Images

Ziqi Huang∗, Tianxing Wu∗, Yuming Jiang, Kelvin C.K. Chan, Ziwei Liu

From MMLab@NTU affiliated with S-Lab, Nanyang Technological University

[Paper] | [Project Page] | [Video] | [Dataset (coming soon)]

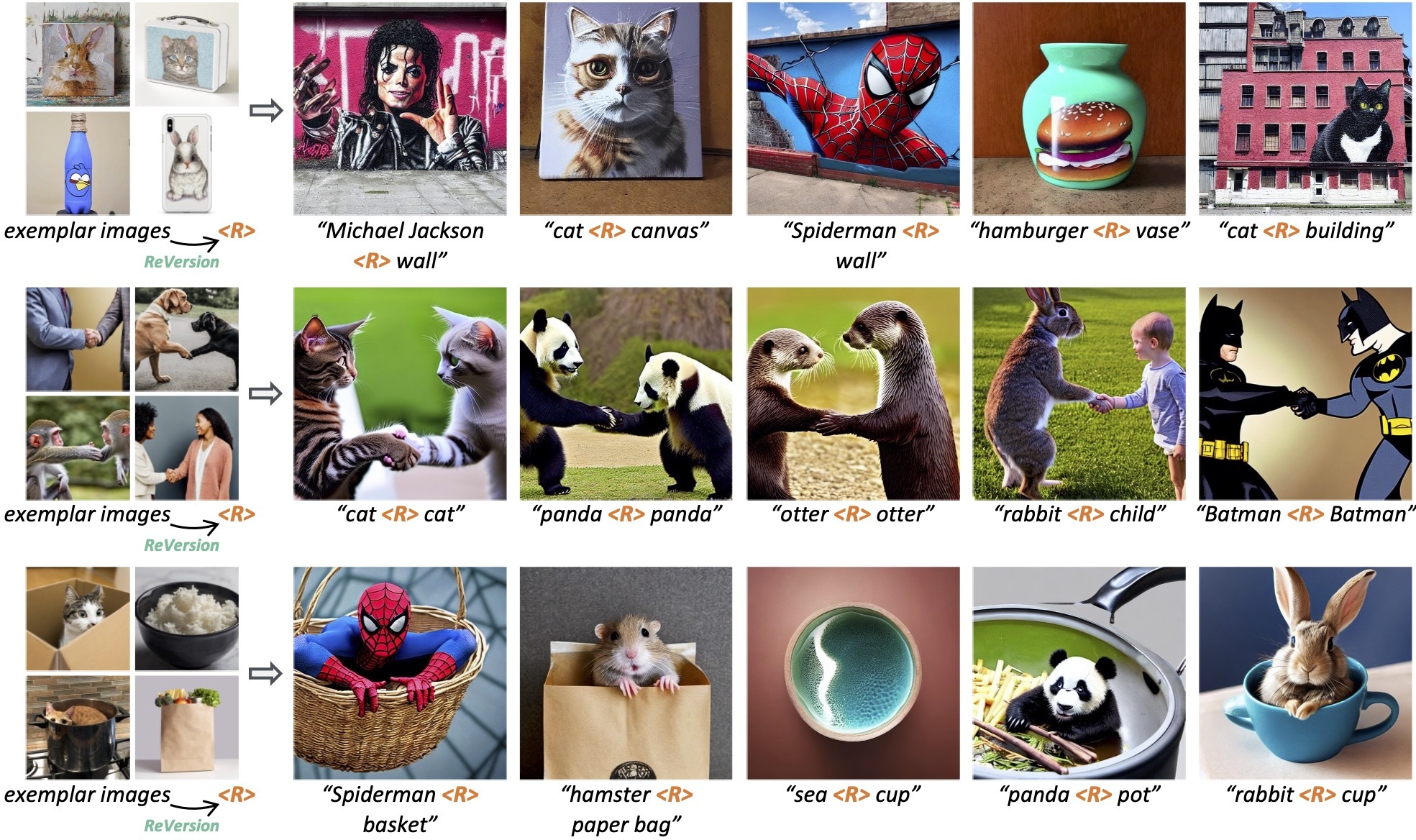

We propose a new task, Relation Inversion: Given a few exemplar images, where a relation co-exists in every image, we aim to find a relation prompt <R> to capture this interaction, and apply the relation to new entities to synthesize new scenes. The above images are generated by our ReVersion framework.

- [03/2023] Arxiv paper available.

- [03/2023] Pre-trained models with relation prompts released at this link.

- [03/2023] Project page and video available.

- [03/2023] Inference code released.

- Clone Repo

      git clone https://github.com/ziqihuangg/ReVersion
      cd ReVersion

- Create Conda Environment and Install Dependencies

      conda create -n reversion
      conda activate reversion
      conda install python=3.8 pytorch==1.11.0 torchvision==0.12.0 cudatoolkit=11.3 -c pytorch
      pip install diffusers["torch"]
      pip install -r requirements.txt
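After installation, a quick check that the main packages are visible in the active environment can save a failed run later. The snippet below is a minimal sketch, not part of the repository: the helper name `missing_packages` is hypothetical, and the package list is an assumption based on the install commands above (`requirements.txt` may pull in more).

```python
import importlib.util

# Package names assumed from the install commands above; requirements.txt
# may list additional dependencies that are not checked here.
REQUIRED = ("torch", "torchvision", "diffusers")


def missing_packages(names=REQUIRED):
    """Return the names that cannot be located in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All listed packages were found.")
```

Run it inside the `reversion` environment. It only looks the packages up and does not import them, so it does not verify CUDA support.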

Given a set of exemplar images and their entities' coarse descriptions, you can optimize a relation prompt <R> to capture the co-existing relation in these images, namely Relation Inversion.

The code for implementing Relation Inversion will be released soon.

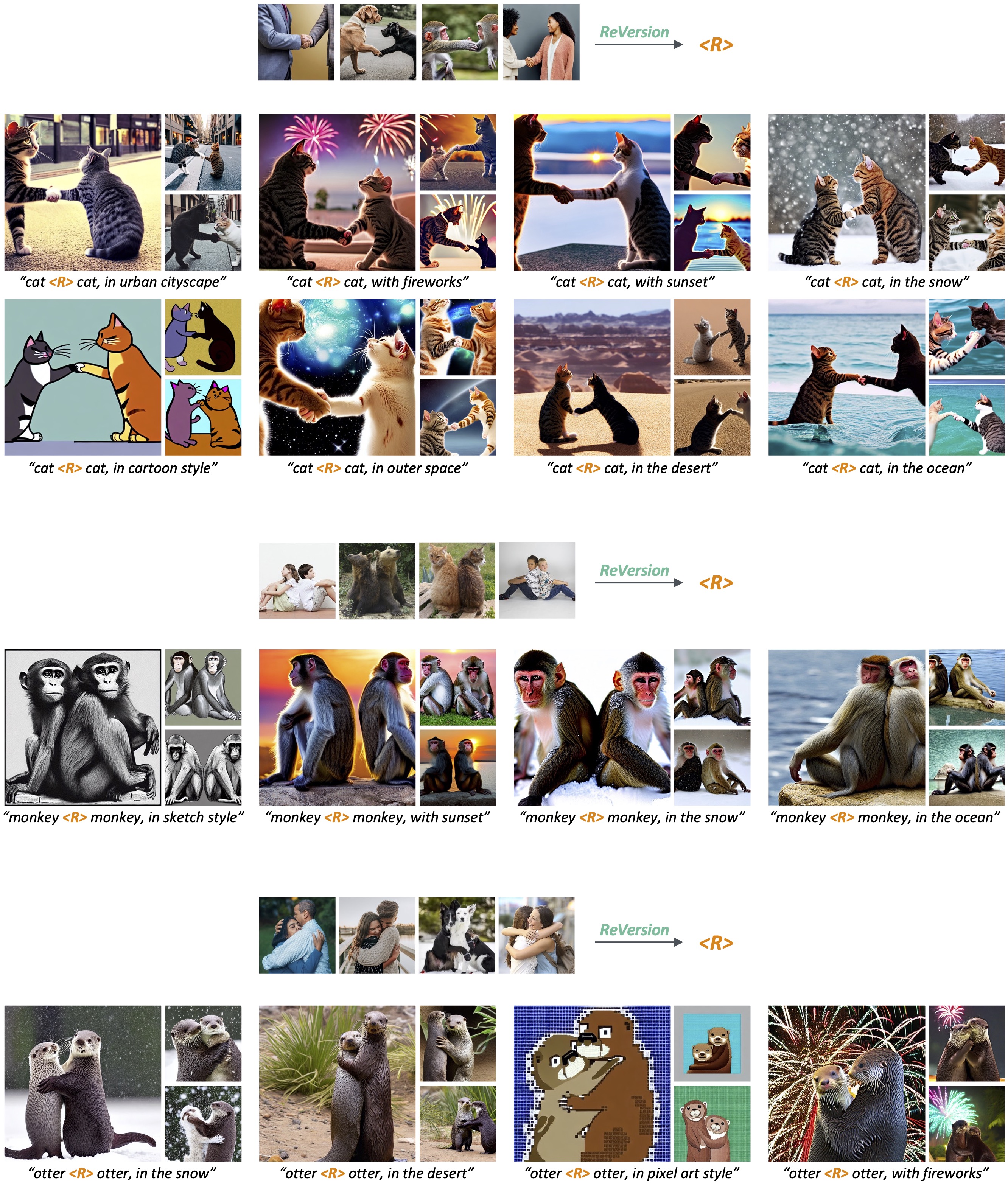

The relation prompt <R> learned through Relation Inversion can be applied to generate relation-specific images with new objects, backgrounds, and styles.

- Run Relation Inversion as described in the previous section on your customized data, or download the models from here, where we provide several pretrained relation prompts for you to play with. More relation prompts will be provided soon.

- Put the models under `./experiments/` as follows:

      ./experiments/
      ├── painted_on
      │   ├── checkpoint-500
      │   ...
      │   └── model_index.json
      ├── carved_by
      │   ├── checkpoint-500
      │   ...
      │   └── model_index.json
      ├── inside
      │   ├── checkpoint-500
      │   ...
      │   └── model_index.json
      ...

- Take the relation `painted_on` for example. You can either use the following script to generate images using a single prompt, e.g., "cat <R> stone":

      python inference.py \
          --model_id ./experiments/painted_on \
          --prompt "cat <R> stone" \
          --placeholder_string "<R>" \
          --num_samples 10 \
          --guidance_scale 7.5

  Or write a list of prompts in `./templates/templates.py` with the key name `$your_template_name` and generate images for every prompt in the list `$your_template_name`:

      your_template_name='painted_on_examples'
      python inference.py \
          --model_id ./experiments/painted_on \
          --template_name $your_template_name \
          --placeholder_string "<R>" \
          --num_samples 10 \
          --guidance_scale 7.5

  Here, `model_id` is the model directory, `num_samples` is the number of images to generate for each prompt, and `guidance_scale` is the classifier-free guidance scale.

  We provide several example templates for each relation in `./templates/templates.py`, such as `painted_on_examples`, `carved_by_examples`, etc.

  The generation results will be saved in the `inference` folder in each model's directory.
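Before running inference, it can help to confirm which relation folders under `./experiments/` are complete. The following is a minimal sketch, not part of the repository: the helper name `find_relation_models` is hypothetical, and it assumes that a usable model folder is one that contains a `model_index.json` file, as in the directory layout shown above.

```python
from pathlib import Path


def find_relation_models(experiments_dir):
    """Return the names of subfolders that contain a model_index.json file.

    Assumption: a relation model is usable when model_index.json is present,
    matching the directory layout described in this README.
    """
    root = Path(experiments_dir)
    if not root.is_dir():
        return []
    return sorted(
        p.name
        for p in root.iterdir()
        if p.is_dir() and (p / "model_index.json").is_file()
    )


if __name__ == "__main__":
    # Each printed name can be used as --model_id ./experiments/<name>.
    for name in find_relation_models("./experiments"):
        print(name)
```

A folder that is missing from the output was most likely only partially downloaded or placed at the wrong level.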

You can also combine the relation prompt <R> with diverse text prompts to generate images with diverse backgrounds and styles. For example, your prompt could be "michael jackson <R> wall, in the desert", "cat <R> stone, on the beach", etc. We list some sample results as follows.
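Prompts of this form can also be composed programmatically. The snippet below is a minimal sketch under stated assumptions: the helper `compose_prompts` is hypothetical and not part of the repository, and it simply follows the "entity <R> entity, context" pattern of the examples above.

```python
from itertools import product

PLACEHOLDER = "<R>"  # same string that is passed to --placeholder_string


def compose_prompts(subjects, objects, contexts=("",)):
    """Build '<subject> <R> <object>[, <context>]' prompts for every combination."""
    prompts = []
    for subj, obj, ctx in product(subjects, objects, contexts):
        prompt = f"{subj} {PLACEHOLDER} {obj}"
        if ctx:
            # An empty context yields the plain relation prompt.
            prompt += f", {ctx}"
        prompts.append(prompt)
    return prompts


if __name__ == "__main__":
    for p in compose_prompts(["cat"], ["stone"], ["", "on the beach"]):
        print(p)
```

Each resulting string can be passed to `inference.py` through `--prompt`, or the whole list can be collected into a template.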

If you find our repo useful for your research, please consider citing our paper:

    @article{huang2023reversion,
        title={{ReVersion}: Diffusion-Based Relation Inversion from Images},
        author={Huang, Ziqi and Wu, Tianxing and Jiang, Yuming and Chan, Kelvin C.K. and Liu, Ziwei},
        journal={arXiv preprint arXiv:2303.13495},
        year={2023}
    }

The codebase is maintained by Ziqi Huang and Tianxing Wu.

This project is built using the following open source repositories: