Repository for the paper "Verifying Reinforcement Learning up to Infinity"

conda create -n SafeRL_Infinity python=3.7

conda activate SafeRL_Infinity

pip install -r requirements.txt

conda config --add channels http://conda.anaconda.org/gurobi

conda install gurobi

## Paper results ##

Download and unzip experiment_collection_final.zip in the 'save' directory.

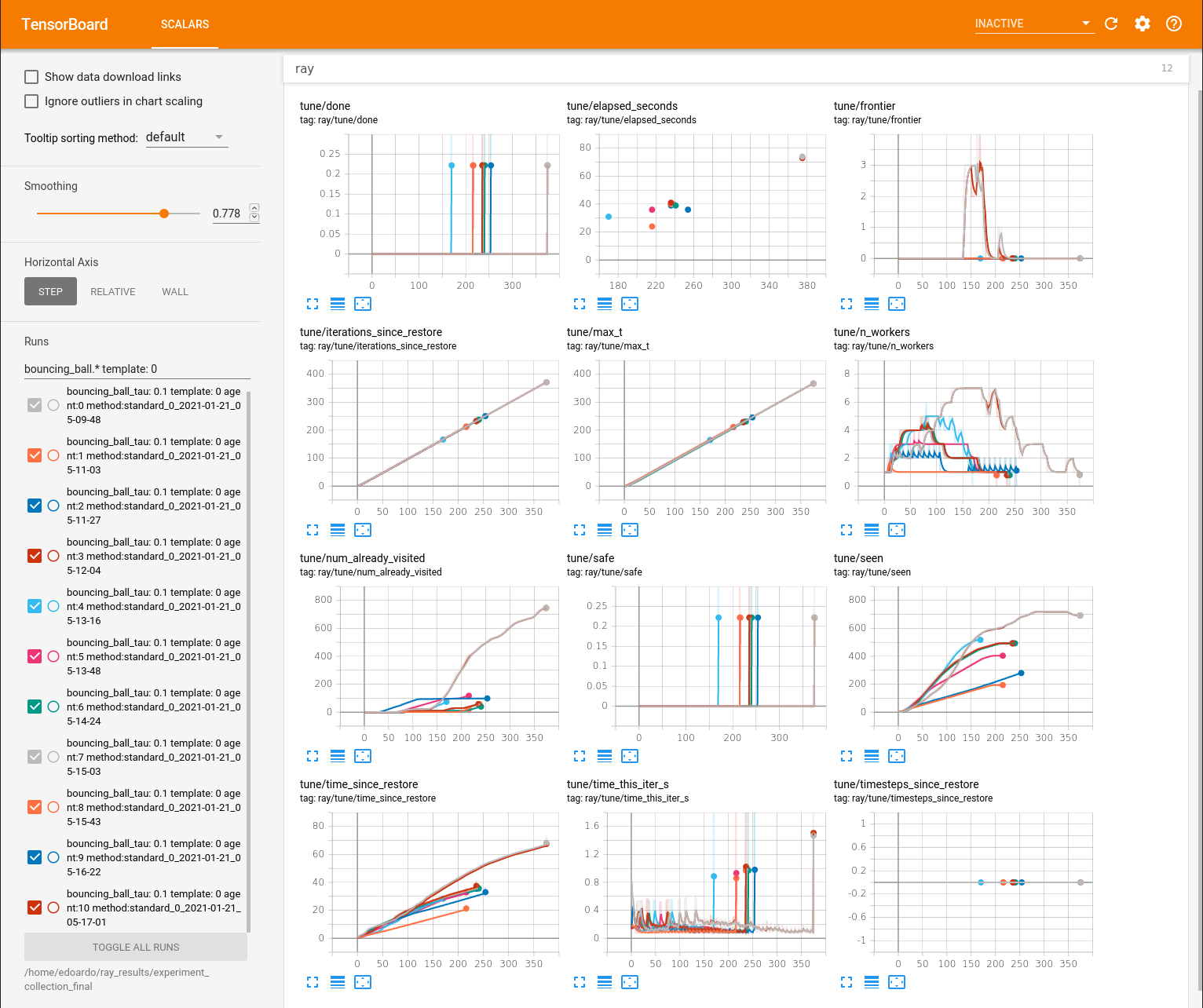

run tensorboard --logdir=./save/experiment_collection_final

(results for the output range analysis experiments are in experiment_collection_ora_final.zip)

## Train neural networks from scratch ##

Run either:

- training/tune_train_PPO_bouncing_ball.py

- training/tune_train_PPO_car.py

- training/tune_train_PPO_cartpole.py

conda install -c conda-forge pyomo

conda install -c conda-forge ipopt glpk

## Check safety of pretrained agents ##

Download and unzip pretrained_agents.zip in the 'save' directory.

run verification/run_tune_experiments.py

(to monitor the progress of the algorithm run tensorboard --logdir=./save/experiment_collection_final)

The results in tensorboard can be filtered using regular expressions (e.g. "bouncing_ball.* template: 0") in the search bar on the left.
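To get a feel for what such a filter selects, the sketch below applies the same regular expression with Python's `re` module. The experiment names in the list are hypothetical and made up for illustration; the names shown in tensorboard may be formatted differently. It also assumes the search bar matches the pattern anywhere in the name rather than requiring a full match.

```python
import re

# Hypothetical experiment names, invented for this illustration only.
experiment_names = [
    "bouncing_ball template: 0",
    "bouncing_ball template: 2",
    "cartpole tau: 0.02 template: 0",
    "stopping_car eps: 0.1 template: 1",
]

# The example filter from the text above.
pattern = re.compile(r"bouncing_ball.* template: 0")


def filter_names(names, regex):
    """Return the names in which the regex matches anywhere (unanchored search)."""
    return [name for name in names if regex.search(name)]


matches = filter_names(experiment_names, pattern)
print(matches)
```

Only names that contain "bouncing_ball" followed later by " template: 0" are kept, so the other problems and the other template choices are filtered out.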

The name of each experiment contains the name of the problem (bouncing_ball, cartpole, stopping_car), the amount of adversarial noise ("eps", only for stopping_car), the time step length for the dynamics of the system ("tau", only for cartpole), and the choice of restriction in order of complexity (0 being box, 1 being the chosen template, and 2 being octagon).

The table in the paper is filled by using some of the metrics reported in tensorboard:

- max_t: Avg timesteps

- seen: Avg polyhedra

- time_since_restore: Avg clock time (s)
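The mapping above can be sketched in Python as follows. This is only an illustration: the per-run values are made up, the helper `summarize` is hypothetical and not part of this repository, and it assumes each "Avg" column is a plain arithmetic mean over runs.

```python
from statistics import mean

# Tensorboard metric name -> table column, as listed above.
METRIC_TO_COLUMN = {
    "max_t": "Avg timesteps",
    "seen": "Avg polyhedra",
    "time_since_restore": "Avg clock time (s)",
}


def summarize(runs):
    """Average each metric over a list of per-run metric dictionaries."""
    return {
        column: mean(run[metric] for run in runs)
        for metric, column in METRIC_TO_COLUMN.items()
    }


# Made-up values for two runs of the same experiment (illustration only).
runs = [
    {"max_t": 10, "seen": 100, "time_since_restore": 2.0},
    {"max_t": 20, "seen": 300, "time_since_restore": 4.0},
]

row = summarize(runs)
print(row)
```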