This repository is the official implementation of OOTDiffusion

🤗 Try out OOTDiffusion (Thanks to ZeroGPU for providing A100 GPUs)

Or try our own demo on RTX 4090 GPUs

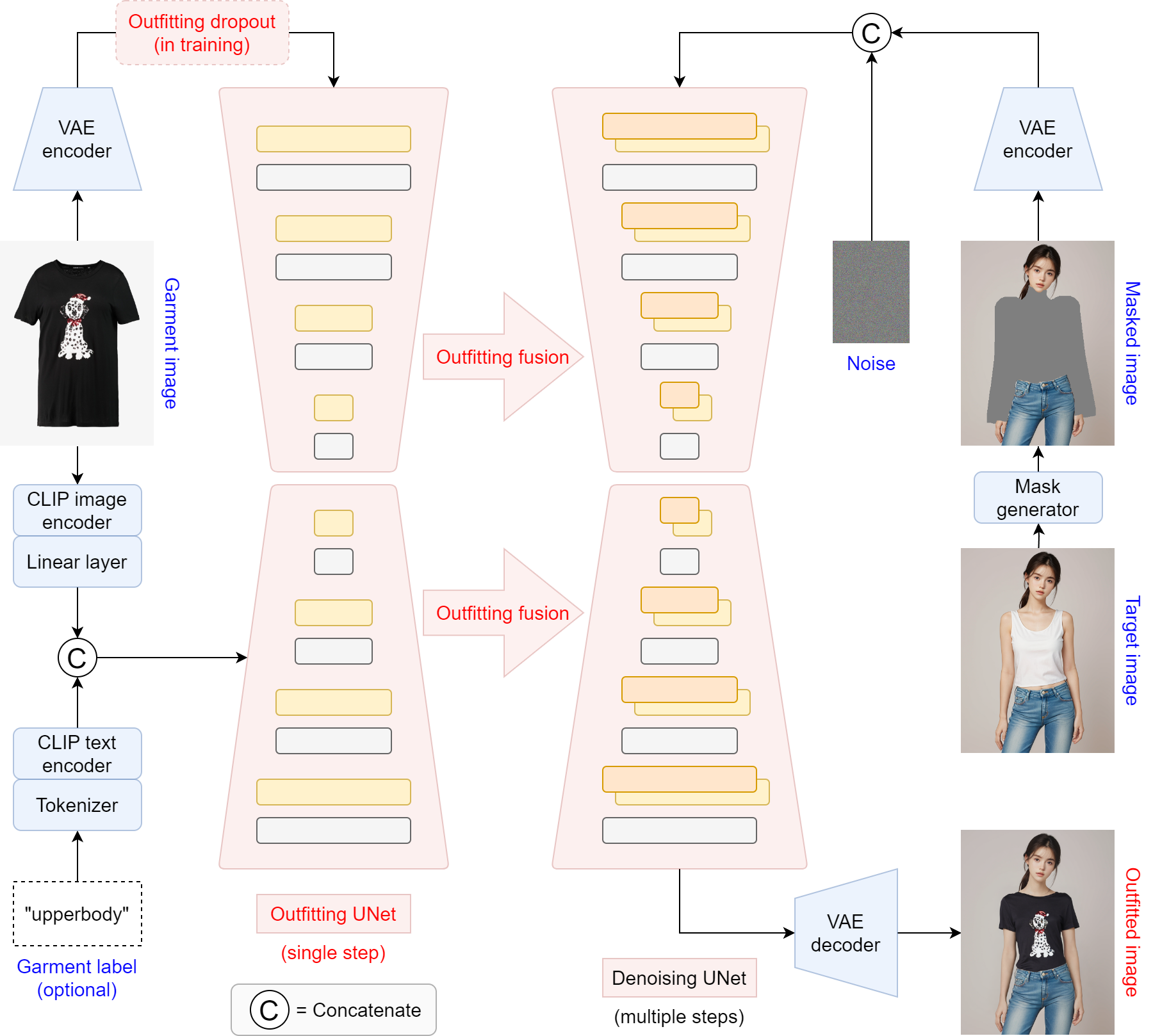

OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on [arXiv paper]

Yuhao Xu, Tao Gu, Weifeng Chen, Chengcai Chen

Xiao-i Research

Our model checkpoints trained on VITON-HD (half-body) and Dress Code (full-body) have been released

- 🤗 Hugging Face link

- 📢📢 We now support ONNX for humanparsing. Most environment issues should be resolved : )

- Please download clip-vit-large-patch14 into the checkpoints folder

- We've only tested our code and models on Linux (Ubuntu 22.04)

- Clone the repository

git clone https://github.com/levihsu/OOTDiffusion

- Create a conda environment and install the required packages

conda create -n ootd python==3.10

conda activate ootd

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

pip install -r requirements.txt

- Half-body model

cd OOTDiffusion/run

python run_ootd.py --model_path <model-image-path> --cloth_path <cloth-image-path> --scale 2.0 --sample 4

- Full-body model

The --category value must match the garment type: 0 = upperbody; 1 = lowerbody; 2 = dress

cd OOTDiffusion/run

python run_ootd.py --model_path <model-image-path> --cloth_path <cloth-image-path> --model_type dc --category 2 --scale 2.0 --sample 4

accelerate launch ootd_train.py --load_height 512 --load_width 384 --dataset_list 'train_pairs.txt' --dataset_mode 'train' --batch_size 16 --train_batch_size 16 --num_train_epochs 200
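The half-body and full-body inference commands above differ only in `--model_type dc` and `--category`. The following is a minimal Python sketch of a wrapper that assembles the argument list and rejects an invalid category before anything is launched. The helper name `build_ootd_command` and the `CATEGORIES` table are hypothetical and not part of this repository; only the flags shown in the commands above are used, and the file names in the example are placeholders.

```python
import shlex

# Category codes as listed above for the full-body (Dress Code) model.
CATEGORIES = {0: "upperbody", 1: "lowerbody", 2: "dress"}


def build_ootd_command(model_path, cloth_path, full_body=False,
                       category=None, scale=2.0, sample=4):
    """Return the argv list for run_ootd.py (hypothetical helper).

    Half-body calls mirror the first command above and pass no
    --model_type or --category; full-body calls add both.
    """
    cmd = [
        "python", "run_ootd.py",
        "--model_path", str(model_path),
        "--cloth_path", str(cloth_path),
    ]
    if full_body:
        # Fail early if the category is missing or not one of 0, 1, 2.
        if category not in CATEGORIES:
            raise ValueError(
                f"category must be one of {sorted(CATEGORIES)}, got {category!r}"
            )
        cmd += ["--model_type", "dc", "--category", str(category)]
    cmd += ["--scale", str(scale), "--sample", str(sample)]
    return cmd


if __name__ == "__main__":
    # Placeholder image names; substitute your own paths.
    argv = build_ootd_command("model.png", "dress.jpg",
                              full_body=True, category=2)
    print(shlex.join(argv))
```

The returned list can be handed to `subprocess.run(argv, cwd="OOTDiffusion/run", check=True)`, which matches the `cd OOTDiffusion/run` step above, assuming the repository was cloned into the current directory.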

@article{xu2024ootdiffusion,
  title={OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on},
  author={Xu, Yuhao and Gu, Tao and Chen, Weifeng and Chen, Chengcai},
  journal={arXiv preprint arXiv:2403.01779},
  year={2024}
}

- Paper

- Gradio demo

- Inference code

- Model weights

- Training code