This project can be used as a reference for deploying a simple Flask application to Kubernetes on AWS.

The stack for this example is the following:

- Docker

- A Kubernetes "cluster" running on an Ubuntu machine with MicroK8s on AWS EC2

- Helm - A "package manager" for Kubernetes applications

- Nginx Ingress Controller

- Certmanager + Let’s Encrypt for TLS

- GitHub Actions for CI/CD

To use this repository locally you will need the following tools:

WARNING: Deploying this to your AWS account will incur costs.

-

Clone this repository

-

Change the target docker image in the chart/k8s-demo/values.yaml to use your docker repository.

-

Create terraform backend in your account

-

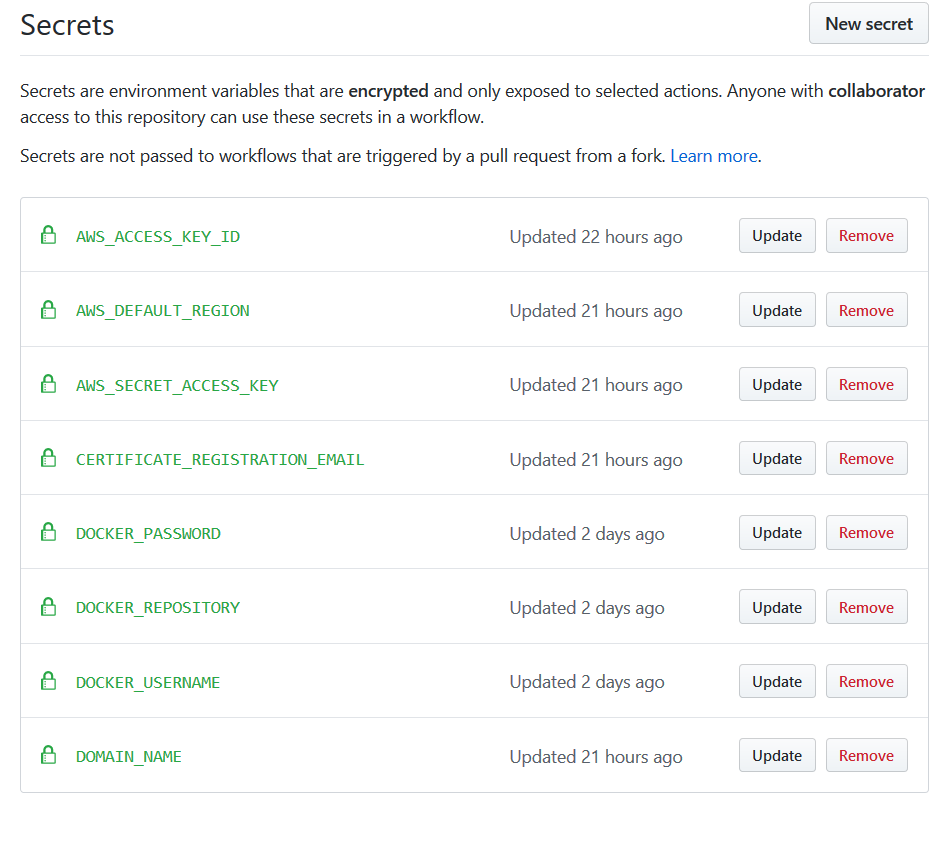

Setup secrets on github actions. On you repo go to

Settings > Secretsand make sure you create the following secrets:AWS_ACCESS_KEY_ID=AXXXXXXXXXXXXXXXXX The AWS access key used to create resources in AWS# AWS_SECRET_ACCESS_KEY=xxxxxxxxxxxxxxxxxxxxx # The AWS secret used to create resources in AWS AWS_DEFAULT_REGION=eu-west-1 # The target AWS region to deploy your resources CERTIFICATE_REGISTRATION_EMAIL=info@example.com # This email will be associated with the SSL certificate that is created automatically DOCKER_USERNAME=user # Username for dockerhub DOCKER_PASSWORD=xxxxx # Password for dockerhub DOCKER_REPOSITORY=paulopontesm/k8s-demo # Name of the repository in dockerhub DOMAIN_NAME=k8s-demo.example.com # Domain name where the service will be available. -

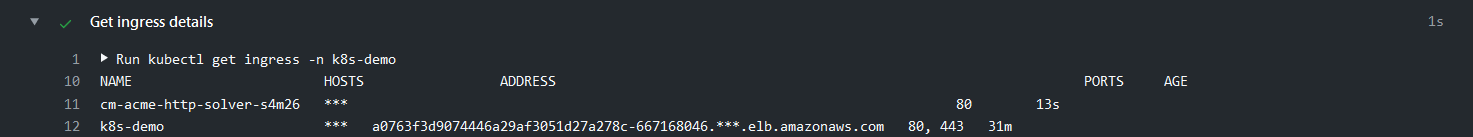

When the github action ran successfully, go to the step called "Get Ingress Details" and create a CNAME record on your DNS that points to the content that you can find in the ADDRESS field.

-

Try to access the cluster from your laptop by configuring the correct AWS credentials by following this instructions.
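Before triggering the workflow, it can help to confirm that none of the values listed above has been forgotten. The following is a minimal sketch, not part of this repository: it assumes you mirror the secrets as local environment variables (GitHub itself stores them as repository secrets), and the helper name `missing_secrets` is made up for illustration.

```python
import os

# Secret names copied from the list above.
REQUIRED_SECRETS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_DEFAULT_REGION",
    "CERTIFICATE_REGISTRATION_EMAIL",
    "DOCKER_USERNAME",
    "DOCKER_PASSWORD",
    "DOCKER_REPOSITORY",
    "DOMAIN_NAME",
)


def missing_secrets(env):
    """Return the required names that are absent or empty in the mapping `env`."""
    return [name for name in REQUIRED_SECRETS if not env.get(name)]


def check_local_environment():
    """Hypothetical helper: report which values are missing from os.environ."""
    missing = missing_secrets(os.environ)
    if missing:
        return "Missing: " + ", ".join(missing)
    return "All required values are set."
```

Calling `check_local_environment()` from a shell where the variables are exported returns a one-line summary; it never prints the secret values themselves.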

The file structure of this repository is the following:

.

├── .github/ <- the GitHub Actions workflow

├── Dockerfile <- the Docker image definition

├── README.md

├── app.py <- the application

├── chart/ <- the helm chart

├── infrastructure/ <- the infrastructure

│ ├── backend/ <- the terraform backend

│ └── main/ <- the terraform for the actual aws infrastructure

For reference this repository uses the Flask Minimal Application that returns a simple "Hello world".

$ flask run

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

Also, when starting the application, Flask warns us to "Use a production WSGI server instead". There are many ways to deploy a Flask app, but since the goal is to run it in a Docker container, let's use one of the recommended standalone WSGI containers, in this case Gunicorn.
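To make the term "WSGI" concrete: a WSGI server such as Gunicorn is pointed at `module:callable` (here `app:app`) and calls that object once per request. The sketch below is not the repository's app.py; it is a hand-written WSGI callable using only the standard library, to show the interface that a Flask application object also exposes. The `call_once` helper is a hypothetical name used only to exercise it in-process.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A bare WSGI callable: receives the request environ, returns body chunks."""
    body = b"Hello world"
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def call_once(wsgi_app, path="/"):
    """Invoke a WSGI app once without a network socket; return (status, body)."""
    environ = {}
    setup_testing_defaults(environ)  # fills in a minimal, valid request environ
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status

    body = b"".join(wsgi_app(environ, start_response))
    return captured["status"], body
```

Any server that speaks WSGI can host such a callable, which is why swapping the Flask development server for Gunicorn requires no change to the application code.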

You can run the application locally using the following command:

$ gunicorn -w 4 --bind 0.0.0.0:5000 app:app

[2020-05-23 18:50:56 +0100] [14880] [INFO] Starting gunicorn 20.0.4

[2020-05-23 18:50:56 +0100] [14880] [INFO] Listening at: http://0.0.0.0:5000 (14880)

[2020-05-23 18:50:56 +0100] [14880] [INFO] Using worker: sync

[2020-05-23 18:50:56 +0100] [14883] [INFO] Booting worker with pid: 14883

[2020-05-23 18:50:56 +0100] [14884] [INFO] Booting worker with pid: 14884

[2020-05-23 18:50:56 +0100] [14885] [INFO] Booting worker with pid: 14885

[2020-05-23 18:50:56 +0100] [14886] [INFO] Booting worker with pid: 14886

The definition for the Docker image can be found in the Dockerfile.

As the base image we use one of the official Docker images for Python, with the tag python:3.8-slim-buster. The 3.8 is the Python version, buster is the Debian release used as the underlying image, and slim is the image variant, which is roughly half the size of the "full-fledged" Debian image.
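The tag convention described above can be illustrated with a few lines of Python. This is only a sketch: `parse_python_tag` is a hypothetical helper, and it handles just the three-part `version-variant-release` shape used here, raising `ValueError` for anything else.

```python
def parse_python_tag(image):
    """Split a reference such as 'python:3.8-slim-buster' into its parts."""
    name, _, tag = image.partition(":")
    # Unpacking fails with ValueError unless the tag has exactly three parts.
    version, variant, release = tag.split("-")
    return {
        "image": name,
        "python": version,
        "variant": variant,
        "debian": release,
    }
```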

Besides the base image, the Dockerfile also copies requirements.pip and app.py to the working directory of the image and defines the command to be executed when the image is run.

Build the docker image using the following command:

$ docker build -t k8s-demo:latest .

Sending build context to Docker daemon 178MB

Step 1/7 : FROM python:3.8-slim-buster

---> 96222012c094

Step 2/7 : WORKDIR /usr/src/app

---> Using cache

---> b74d0717fdc5

Step 3/7 : RUN pip install --upgrade pip

---> Using cache

---> 064cfa9ab71d

Step 4/7 : COPY ./requirements.pip /usr/src/app/requirements.pip

---> Using cache

---> 34bc76d3463f

Step 5/7 : RUN pip install -r requirements.pip

---> Using cache

---> 7dd75f5f9499

Step 6/7 : COPY ./app.py /usr/src/app/app.py

---> Using cache

---> e2cc9a98605a

Step 7/7 : ENTRYPOINT gunicorn -w 4 --bind 0.0.0.0:80 app:app

---> Running in ed58d45cbe77

Removing intermediate container ed58d45cbe77

---> 973dfbae36d3

Successfully built 973dfbae36d3

Successfully tagged k8s-demo:latest

Run it:

$ docker run -it --name k8s-demo k8s-demo:latest

[2020-05-23 19:59:05 +0000] [6] [INFO] Starting gunicorn 20.0.4

[2020-05-23 19:59:05 +0000] [6] [INFO] Listening at: http://0.0.0.0:80 (6)

[2020-05-23 19:59:05 +0000] [6] [INFO] Using worker: sync

[2020-05-23 19:59:05 +0000] [8] [INFO] Booting worker with pid: 8

[2020-05-23 19:59:05 +0000] [9] [INFO] Booting worker with pid: 9

[2020-05-23 19:59:05 +0000] [10] [INFO] Booting worker with pid: 10

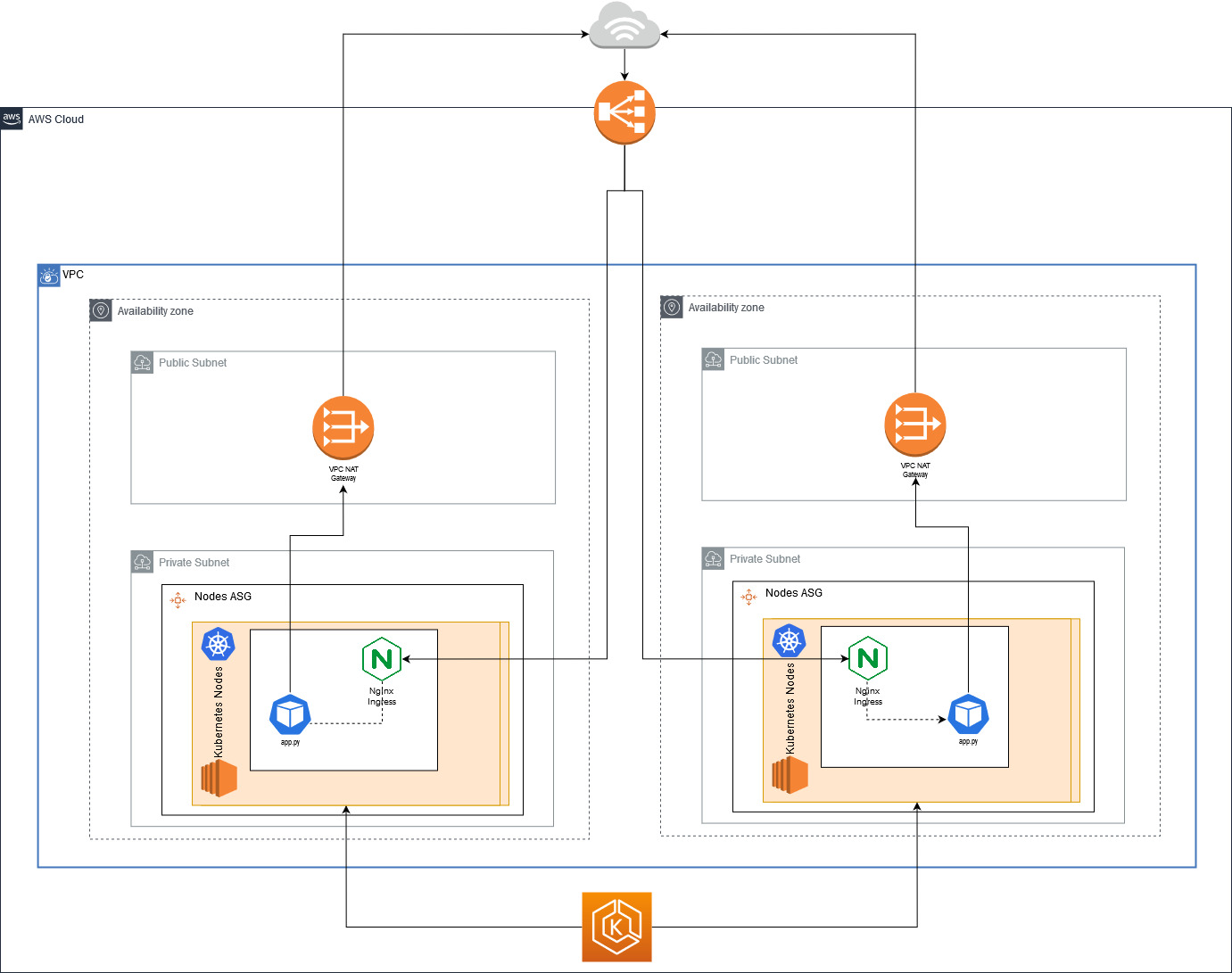

[2020-05-23 19:59:05 +0000] [11] [INFO] Booting worker with pid: 11In the infrastructure/ directory, there are a set of terraform templates that create the entire AWS infrastructure. You can check the image at the top of this file to get a sense of what kind of resources it creates, but it goes more or less like this:

- Network - A VPC with 2 private subnets and 2 public subnets and a NAT gateway to provide access

- Cluster Master - The managed EKS, kubernetes master

- Cluster Workers - A set of EC2 instances where the pods/containers will be deployed.

It also enables some nice things, like:

- All the logs from the Kubernetes master go to CloudWatch.

- The SSM agent is installed on the nodes, so you can use IAM authentication to "ssh" into the nodes.

The following assumes you have your ~/.aws/credentials correctly configured:

export AWS_SDK_LOAD_CONFIG=1 # Tell the SDK used by Terraform to load the AWS config

export AWS_DEFAULT_REGION=eu-west-1 # Choose your region

# export AWS_PROFILE=sandbox_role # Set a correct AWS_PROFILE if needed

Terraform needs a place to store the current state of your infrastructure. Fortunately, a nice way to store this state is to use an S3 bucket and a DynamoDB table.

In the infrastructure/backend/ directory you can find a set of Terraform templates that create an S3 bucket called terraform-state-$ACCOUNT_ID and a DynamoDB table.
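The bucket naming convention, and the way it feeds into the `terraform init` call used for the main infrastructure, can be sketched as follows. The function names are hypothetical and the account ID in the usage note is a placeholder; the sketch only builds the argument list and does not run Terraform.

```python
def backend_bucket(account_id):
    """Name of the state bucket, following the terraform-state-$ACCOUNT_ID convention."""
    return f"terraform-state-{account_id}"


def terraform_init_command(account_id, region):
    """Build the argv for 'terraform init' with the backend settings filled in."""
    return [
        "terraform",
        "init",
        f"-backend-config=bucket={backend_bucket(account_id)}",
        f"-backend-config=region={region}",
    ]
```

For example, `terraform_init_command("123456789012", "eu-west-1")` yields an argument list that could be passed to `subprocess.run` from the infrastructure/main/ directory. Including the account ID in the name keeps the bucket unique per account, which matters because S3 bucket names are global.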

You can create it like this:

cd backend/

terraform init && terraform apply

After you have your backend storage created, you can deploy the entire infrastructure like this:

cd ../main/

export ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

export REGION=${AWS_DEFAULT_REGION:-$(aws configure get region)}

terraform init -backend-config="bucket=terraform-state-$ACCOUNT_ID" -backend-config="region=$REGION"

terraform apply

After 15-20 minutes the entire infrastructure should be created. You can now configure your local kubectl to access the new Kubernetes cluster.

To be able to access the kubernetes cluster you need to add the following role to your ~/.aws/credentials file:

!!! IMPORTANT: don't forget to replace the ACCOUNT_ID

[k8sdemo-kubernetes-admin]

role_arn = arn:aws:iam::$ACCOUNT_ID:role/k8sdemo-kubernetes-admin

source_profile = default

Now use that profile to update our kubeconfig:

$ aws eks update-kubeconfig --name k8sdemo-cluster --profile k8sdemo-kubernetes-admin

...

$ kubectl get pods --all-namespaces

The easiest way to create a chart is by using the helm create command.

The default chart already includes several things that make it easier to follow Kubernetes best practices:

- Standard labels that are added to all the Kubernetes resources

- A Deployment file with configurable resources, nodeSelectors, affinity and tolerations.

- A HorizontalPodAutoscaler that adds or removes pods based on target CPU and memory usage.

- A new ServiceAccount specific to the application. By default, new service accounts don't have any permissions.

- A Service to make the application accessible by other pods inside the cluster.

- An Ingress to make the application accessible outside of the cluster.

- A Helm test that can be used to check if the application is running after we install it. By default it runs a simple

$ wget {service.name}:{service.port}.
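To give a feel for how the HorizontalPodAutoscaler in the list above decides on a pod count, here is a simplified sketch of the scaling rule described in the Kubernetes documentation: the desired replica count is the current count scaled by the ratio of observed to target utilization, rounded up. It deliberately ignores the tolerance band, the min/max replica bounds, and the handling of multiple metrics that the real controller applies, and `desired_replicas` is a name chosen for this example.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization):
    """Simplified HPA rule: ceil(current_replicas * current / target)."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    return math.ceil(current_replicas * current_utilization / target_utilization)
```

With 2 pods running at 90% CPU against a 60% target, the rule asks for 3 pods; with 4 pods at 30% against the same target, it scales down to 2.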

In this repository the helm chart can be found under the chart/ directory.

The only change needed to get a working Helm chart is to set image.repository in values.yaml to your Docker image.

The application is quite simple to deploy: you just need to create a namespace and use Helm to install everything:

$ kubectl create namespace k8s-demo

namespace/k8s-demo created

$ helm upgrade --install k8s-demo chart/k8s-demo/ --namespace k8s-demo

Release "k8s-demo" does not exist. Installing it now.

NAME: k8s-demo

LAST DEPLOYED: Mon May 25 21:29:30 2020

NAMESPACE: k8s-demo

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace k8s-demo -l "app.kubernetes.io/name=k8s-demo,app.kubernetes.io/instance=k8s-demo" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace k8s-demo port-forward $POD_NAME 8080:80

To enable access to the application from the internet, we use the NGINX ingress controller, plus cert-manager to automatically generate and renew the certificate.

For simplicity, we added them to the chart in this repo as dependencies; they are created if we set nginxingress.enabled=true and certmanager.enabled=true.

This is what you need to do to enable them (make sure to replace example.com with your domain):

# Kubernetes 1.15+

# https://cert-manager.io/docs/installation/kubernetes/#installing-with-regular-manifests

$ kubectl apply --validate=false -f https://github.com/jetstack/cert-manager/releases/download/v0.15.0/cert-manager.crds.yaml

$ helm upgrade --install k8s-demo chart/k8s-demo/ --namespace k8s-demo \

--set ingress.enabled=true \

--set ingress.hosts[0].host=example.com \

--set ingress.hosts[0].paths[0]="/" \

--set ingress.tls[0].hosts[0]=example.com \

--set ingress.tls[0].secretName=k8s-demo-tls \

--set nginxingress.enabled=true \

--set certmanager.enabled=true \

--set certmanager.issuer.email=example@gmail.com

$ kubectl get ingress -n k8s-demo

NAME HOSTS ADDRESS PORTS AGE

k8s-demo k8s-demo.arvoros.com ad6efd9329ed411ea8d7d02ae17452ae-1611130781.eu-west-1.elb.amazonaws.com 80, 443 3m18s

Now you can go to your DNS provider and create a CNAME record pointing your domain to the ADDRESS of your service.
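The `--set` paths in the helm upgrade command above address nested keys and list indexes in the chart's values. The sketch below shows that correspondence by flattening a nested Python structure into `path=value` strings. It is illustrative only: `to_set_flags` is a hypothetical helper, and it does not escape special characters such as commas, which Helm's `--set` syntax treats specially.

```python
def to_set_flags(values, prefix=""):
    """Flatten nested dicts and lists into Helm-style 'path=value' strings."""
    flags = []
    if isinstance(values, dict):
        for key, child in values.items():
            path = f"{prefix}.{key}" if prefix else key
            flags.extend(to_set_flags(child, path))
    elif isinstance(values, list):
        for index, child in enumerate(values):
            flags.extend(to_set_flags(child, f"{prefix}[{index}]"))
    else:
        # Render booleans the way they are written on the command line.
        if isinstance(values, bool):
            values = str(values).lower()
        flags.append(f"{prefix}={values}")
    return flags


# The ingress part of the command above, expressed as nested values.
ingress_values = {
    "ingress": {
        "enabled": True,
        "hosts": [{"host": "example.com", "paths": ["/"]}],
        "tls": [{"hosts": ["example.com"], "secretName": "k8s-demo-tls"}],
    }
}
```

Reading it the other way around, each `--set` flag is just a compact way of writing one leaf of this structure, so the same settings could equally live in a values file passed with `-f`.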