American Sign Language (ASL) Letters Real-Time Detection 🤟

Customized YOLOv5 for real-time American Sign Language (ASL) letter detection via PyTorch, OpenCV, Roboflow and LabelImg.

📖 About

This project originated from a video that I came across on YouTube. A woman standing off to the side was supposedly translating every word into American Sign Language (ASL), but it turned out that much of what she was signing was nonsense. Deaf people often find themselves in situations where verbal communication is the norm. In many cases, qualified interpreter services are also unavailable, which can lead to underemployment, social isolation and public health problems.

Therefore, using PyTorch, OpenCV and a public dataset on Roboflow, I trained a customized version of the YOLOv5 model for real-time ASL letter detection. This is not yet a model that can be used in real life, but it is a step in that direction.

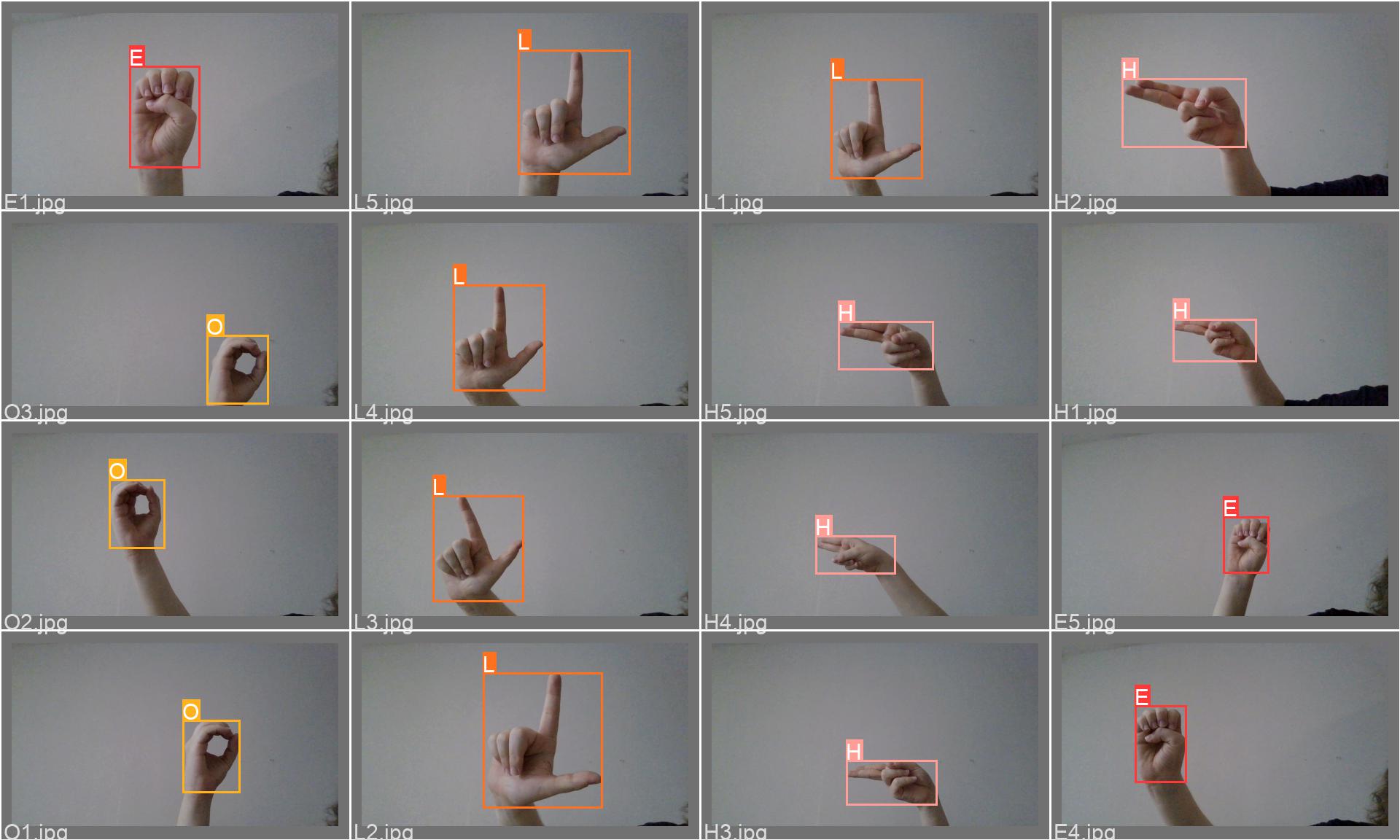

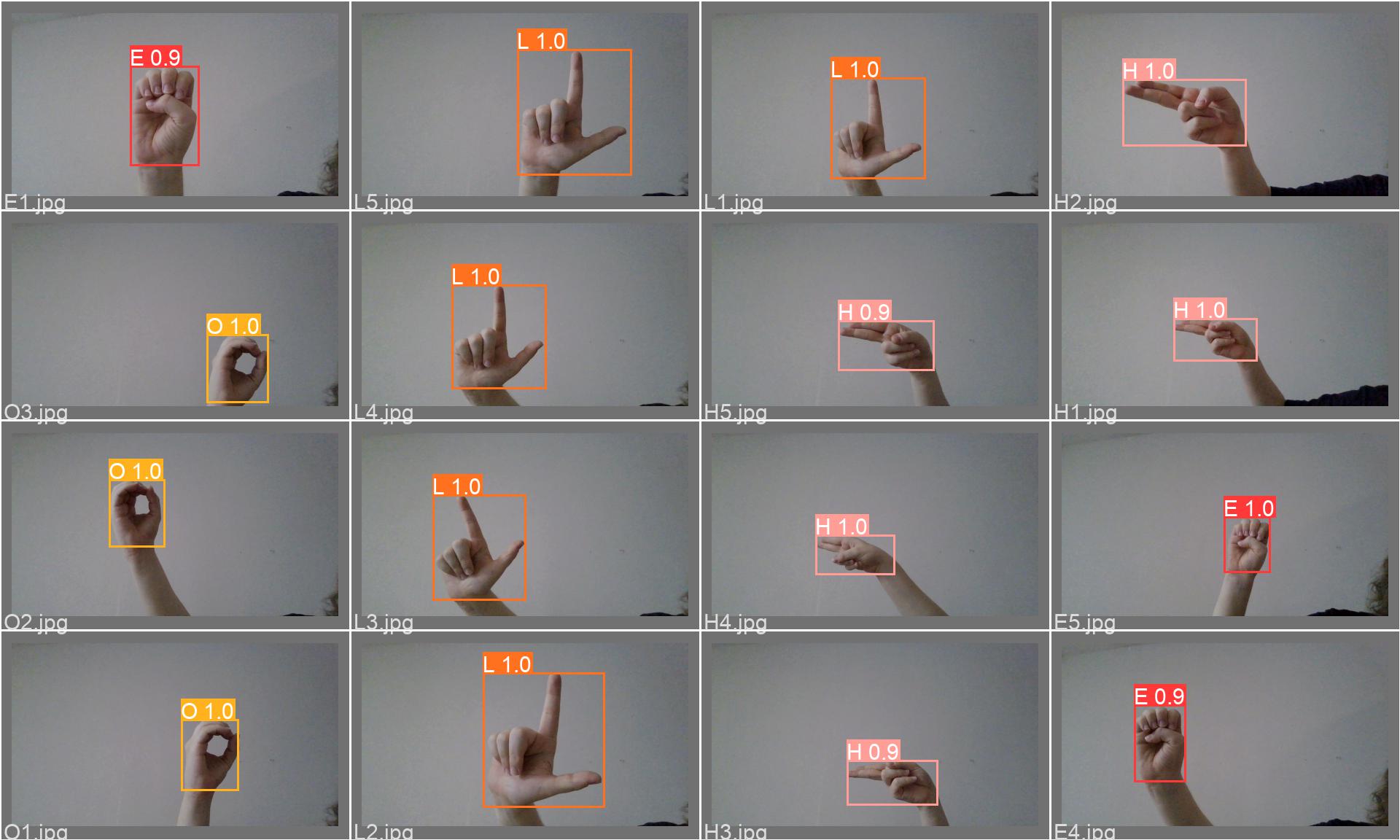

I trained several variations of the YOLOv5 model (changing image size, batch size, number of workers, seed, etc.) because the model performed excellently on the training, validation and test sets but not in real time on my webcam. Only after some time did I realize that the frames captured by my webcam were completely different from the images in the training/validation/test dataset. To verify this, I created my own (baby) dataset for only 4 letters ('H', 'E', 'L', 'O'), with 5 images per letter. For each image I manually added the bounding box and label using the LabelImg tool, available here.

The results were eye-popping, especially given the size of the dataset, but once again a model trained on this dataset does not generalize to new contexts.

The theory regarding the YOLO model was covered in an earlier repository, available here.

📝 Results on ASL dataset

The dataset is freely available here. It contains 1512 images for training, 144 for validation and 72 for testing. The problem is the type of images: they are very clean and clear, yet they look very similar to each other and do not represent new contexts.

As previously mentioned, I trained a YOLOv5 model (for almost 4 hours) only to get a model that recognizes almost no letters in a new context. Only after several attempts (I changed the image size from 256 to 512, 448 and even 1024, tried batch sizes of 16, 32, etc., and even changed the number of workers) did I realize that the problem was the dataset.
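The attempts described above can be sketched as a small grid of training commands. This is only an illustration, not the exact commands from the training notebook: the flags are those of the YOLOv5 `train.py` script, while the epoch count, the `yolov5s.pt` starting weights, the worker count, the seed and the `data.yaml` path are assumptions chosen for the example.

```python
from itertools import product


def build_train_command(data_yaml, img_size, batch_size, workers=8,
                        epochs=100, weights="yolov5s.pt", seed=0):
    """Return the argument list for one YOLOv5 training run.

    epochs, weights, workers and seed are placeholder defaults (assumptions),
    not the values used in the original experiments.
    """
    return [
        "python", "train.py",
        "--img", str(img_size),
        "--batch-size", str(batch_size),
        "--epochs", str(epochs),
        "--data", data_yaml,
        "--weights", weights,
        "--workers", str(workers),
        "--seed", str(seed),
    ]


# Image sizes and batch sizes mentioned in the text above.
IMAGE_SIZES = [256, 448, 512, 1024]
BATCH_SIZES = [16, 32]

commands = [
    build_train_command("data.yaml", img, batch)
    for img, batch in product(IMAGE_SIZES, BATCH_SIZES)
]

for cmd in commands:
    # Each list can be passed to subprocess.run(cmd, cwd="yolov5") to launch it.
    print(" ".join(cmd))
```

Sweeping these settings changes how the model is trained, not what it is trained on, which is why none of the runs fixed the webcam results.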

Attempt at signing 'Hello' from the webcam with YOLOv5 trained on the dataset mentioned above.

The training notebook is available here.

The real-time testing notebook is available here.
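For readers who do not want to open the notebook, real-time testing can be sketched roughly as follows. This is a minimal sketch and not necessarily what the notebook does: it assumes the trained weights were saved as `best.pt` (a hypothetical path), that the webcam is device 0, and that the model is loaded through the YOLOv5 PyTorch Hub entry point. `best_detection` is a small helper introduced here for illustration.

```python
def best_detection(detections, min_confidence=0.5):
    """Return the (letter, confidence) pair with the highest confidence, or None.

    detections is a list of (letter, confidence) pairs for one frame.
    """
    kept = [d for d in detections if d[1] >= min_confidence]
    return max(kept, key=lambda d: d[1]) if kept else None


def run_webcam(weights_path="best.pt", camera_index=0):
    """Show annotated webcam frames until 'q' is pressed."""
    # Imported here so the helper above works without torch/OpenCV installed.
    import cv2
    import torch

    # Load custom weights through the YOLOv5 hub entry point.
    model = torch.hub.load("ultralytics/yolov5", "custom", path=weights_path)
    capture = cv2.VideoCapture(camera_index)
    try:
        while capture.isOpened():
            ok, frame = capture.read()
            if not ok:
                break
            # OpenCV delivers BGR frames; the hub model expects RGB.
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            results = model(rgb)
            table = results.pandas().xyxy[0]  # one row per detected box
            pairs = list(zip(table["name"], table["confidence"]))
            print(best_detection(pairs))
            # render() draws the boxes on the RGB image; convert back for display.
            annotated = cv2.cvtColor(results.render()[0], cv2.COLOR_RGB2BGR)
            cv2.imshow("ASL letters", annotated)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()


# run_webcam("best.pt")  # uncomment to start the webcam loop
```

Keeping only the most confident letter per frame is a design choice made for this sketch; it keeps the console output readable when several boxes overlap.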

The results reported by the YOLOv5 model during training are as follows.

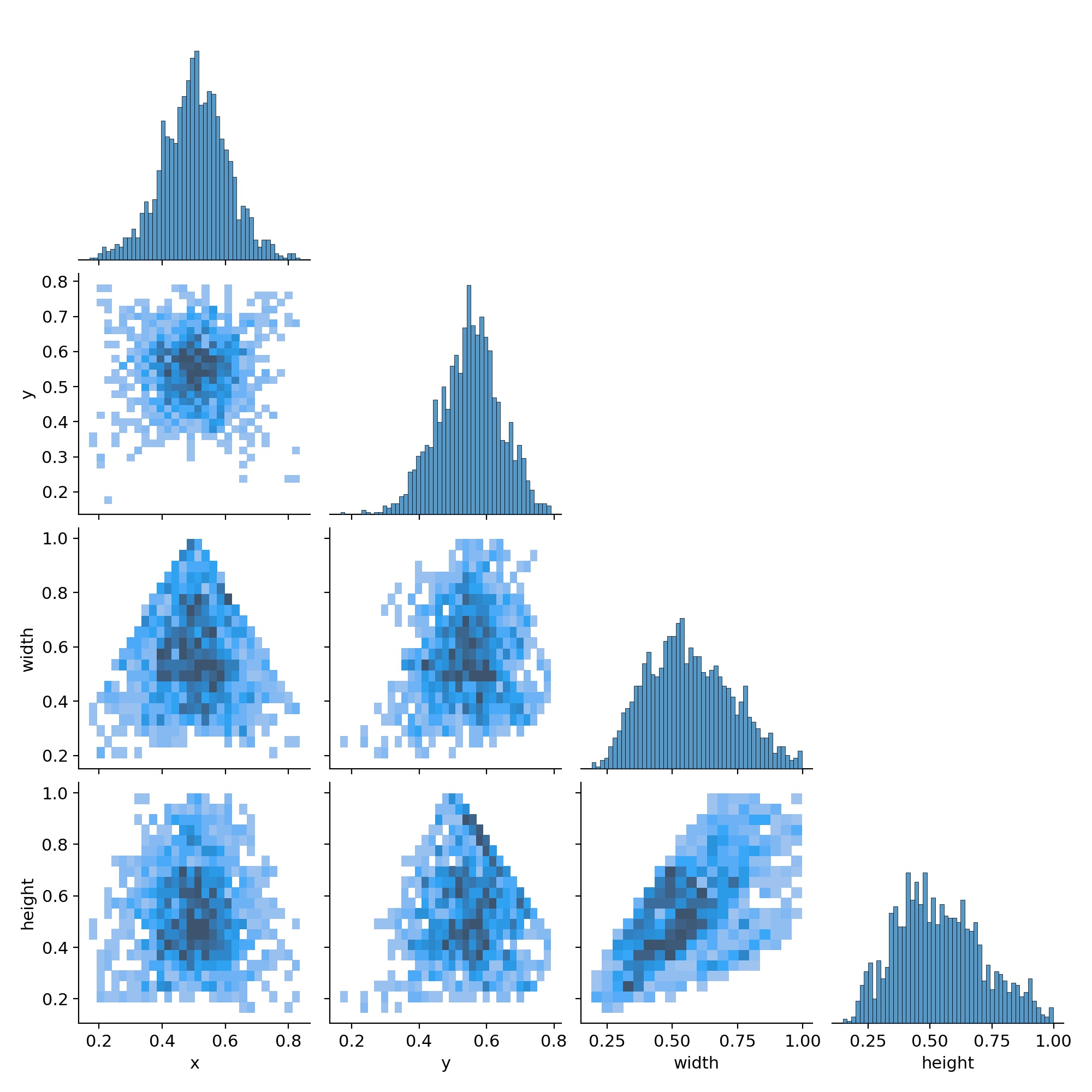

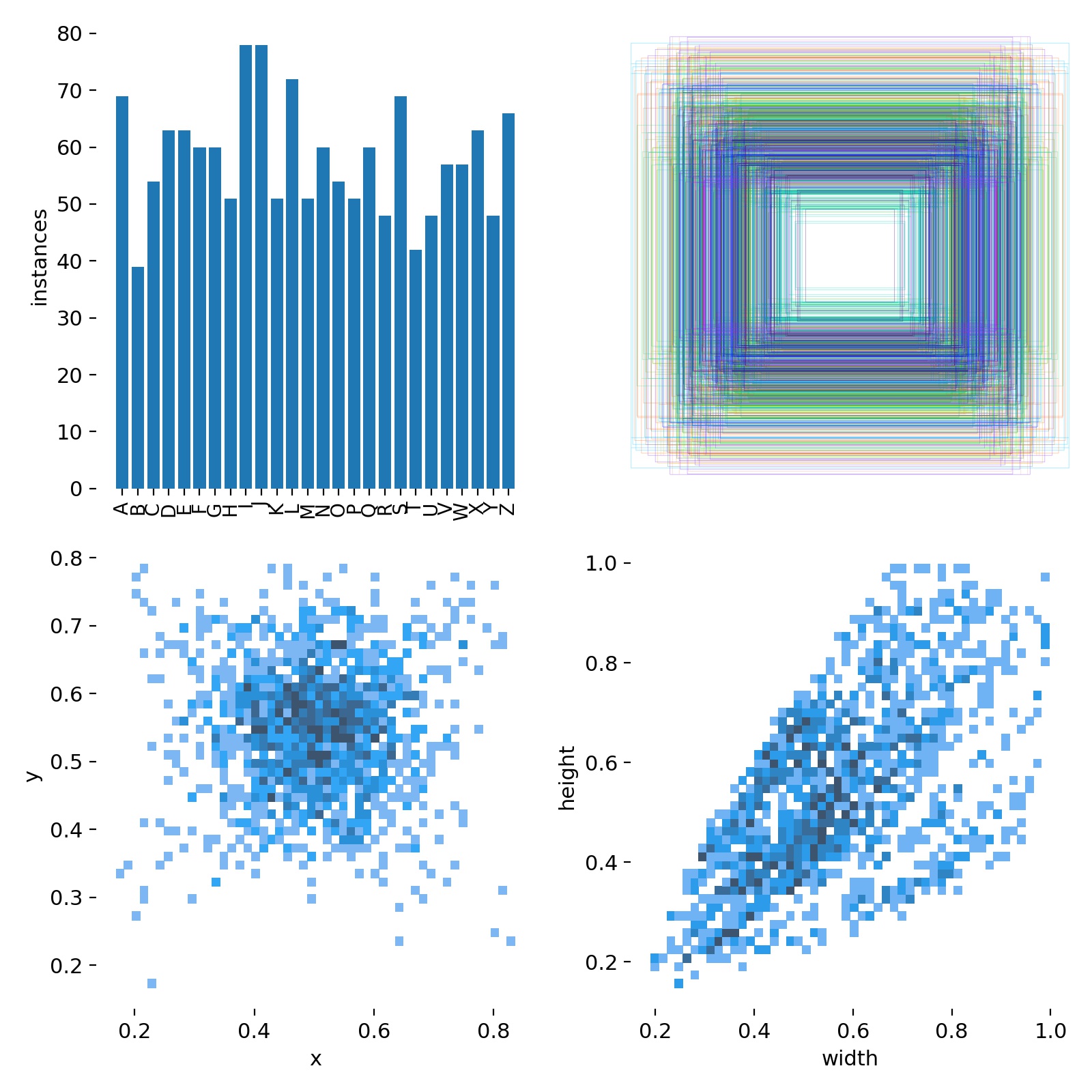

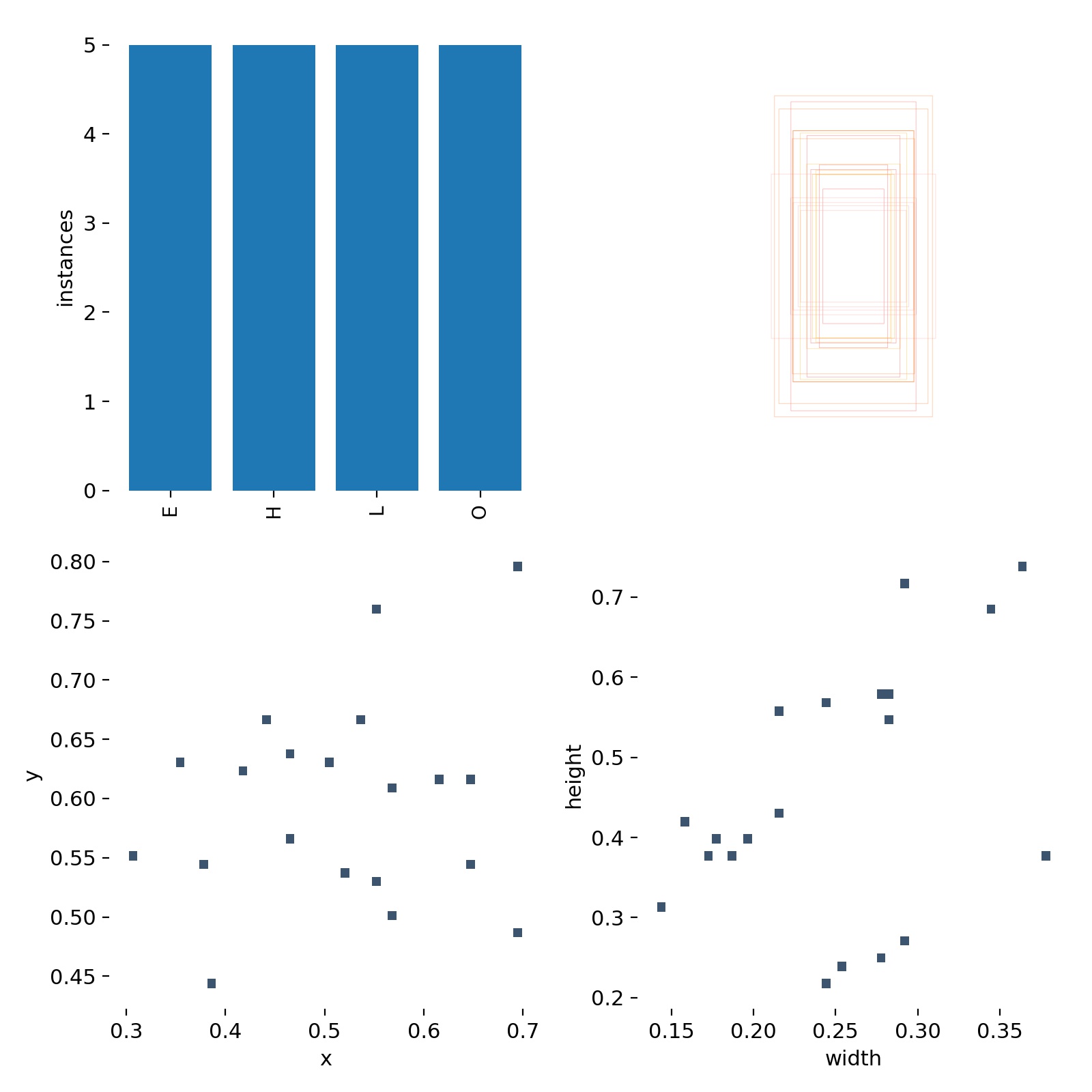

First of all, it is possible to visualize:

- a histogram to see how many elements per label we have

- a plot of all the boxes in the training images, colored differently for each label, so as to understand whether the sizes of the boxes are sufficiently different (it is convenient to have a variety)

- a plot of the $(x,y)$ values related to the position of the box within each image (again, it would be good to see fairly scattered points)

- a plot of the $(width, height)$ values related to the size of the boxes in each image (again, it would be convenient to have fairly scattered points)

In order: 1. in the upper-left, 2. in the upper-right, 3. in the lower-left and 4. in the lower-right.
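The quantities behind these four plots can also be computed directly from the label files. The sketch below assumes the standard YOLO label format (one line per box: class index, then normalized x center, y center, width and height). The sample text and the class names `A` and `B` are made up for the example.

```python
from collections import Counter


def parse_yolo_labels(text):
    """Parse YOLO label lines: 'class x_center y_center width height'."""
    boxes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 5:
            continue  # skip blank or malformed lines
        class_id = int(parts[0])
        x, y, w, h = map(float, parts[1:])
        boxes.append((class_id, x, y, w, h))
    return boxes


def summarize(boxes, names):
    """Return per-class counts, box centers and box sizes."""
    counts = Counter(names[b[0]] for b in boxes)   # data for the histogram
    centers = [(b[1], b[2]) for b in boxes]        # data for the (x, y) plot
    sizes = [(b[3], b[4]) for b in boxes]          # data for the (width, height) plot
    return counts, centers, sizes


# Made-up label text standing in for the contents of the label files.
sample = """0 0.50 0.50 0.20 0.30
1 0.25 0.40 0.10 0.10
0 0.70 0.60 0.25 0.35
"""

counts, centers, sizes = summarize(parse_yolo_labels(sample), ["A", "B"])
print(dict(counts))
print(centers)
print(sizes)
```

If the centers or sizes cluster tightly, the dataset shows hands at nearly the same position and scale in every image, which is one sign that the model may struggle in new contexts.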

More information about the labels:

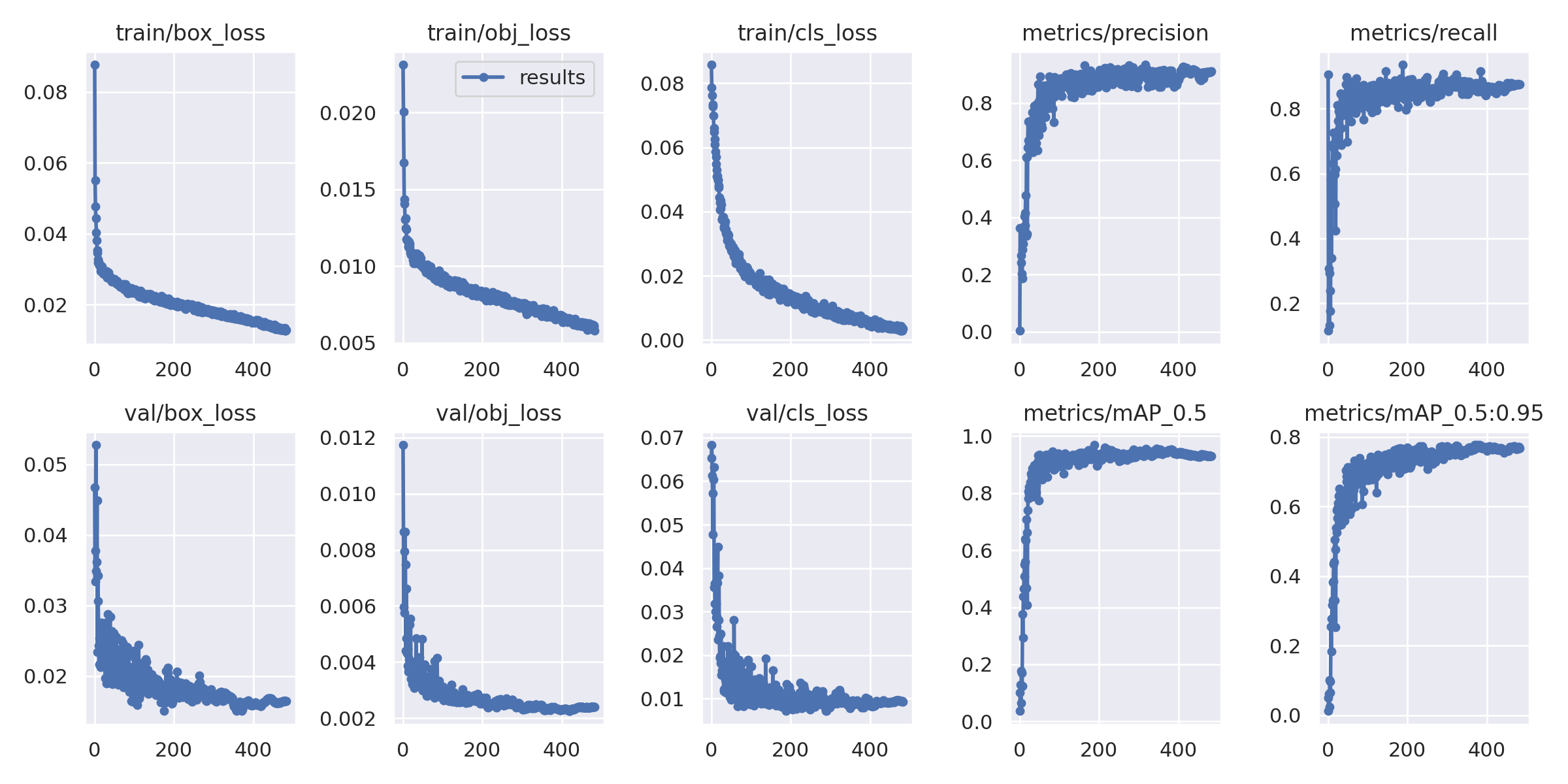

It is possible to evaluate how well the training procedure performed by visualizing the logs in the runs folder.

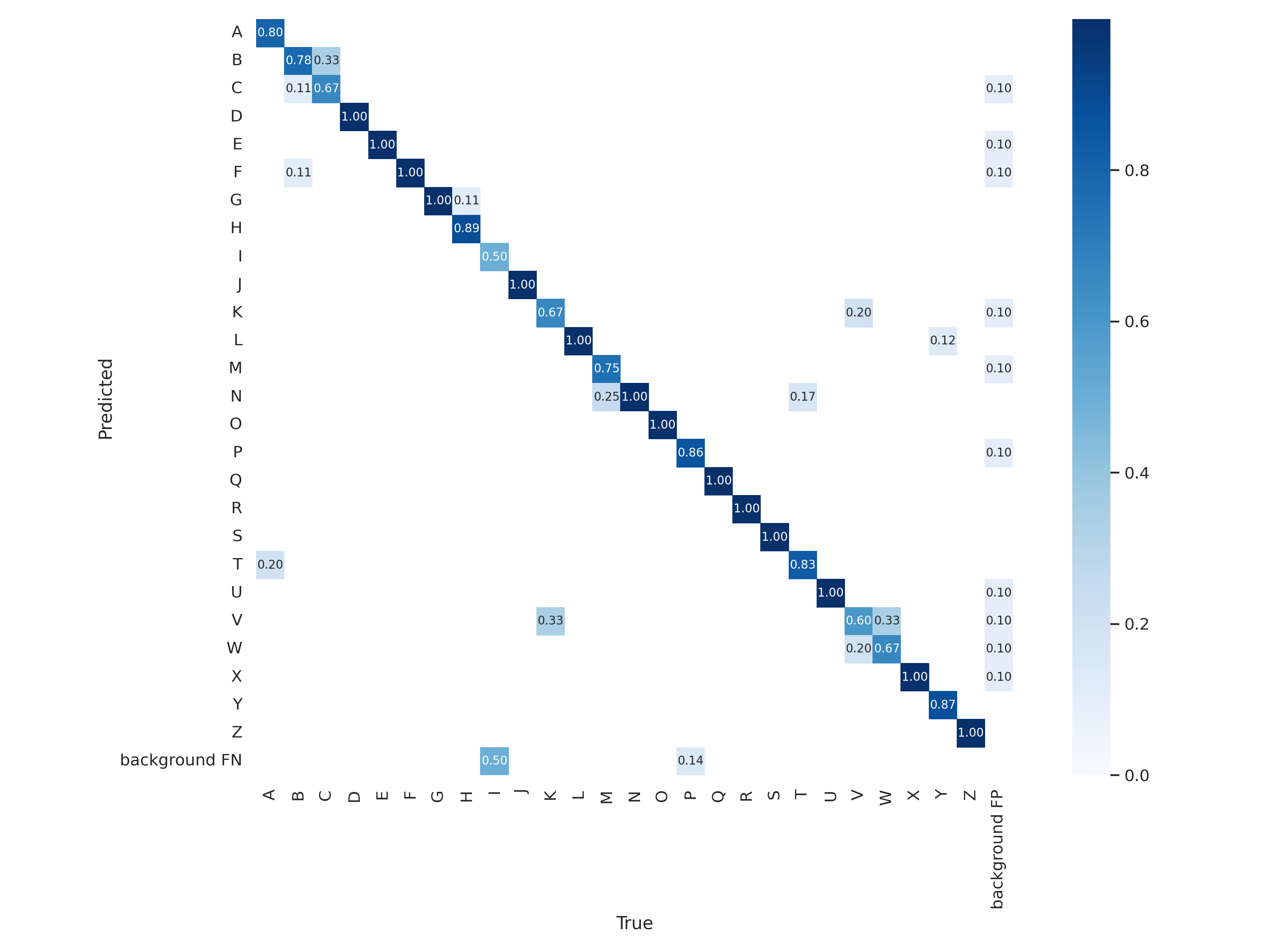

It is also possible to see how good the predictions are and which classes caused the most difficulties.

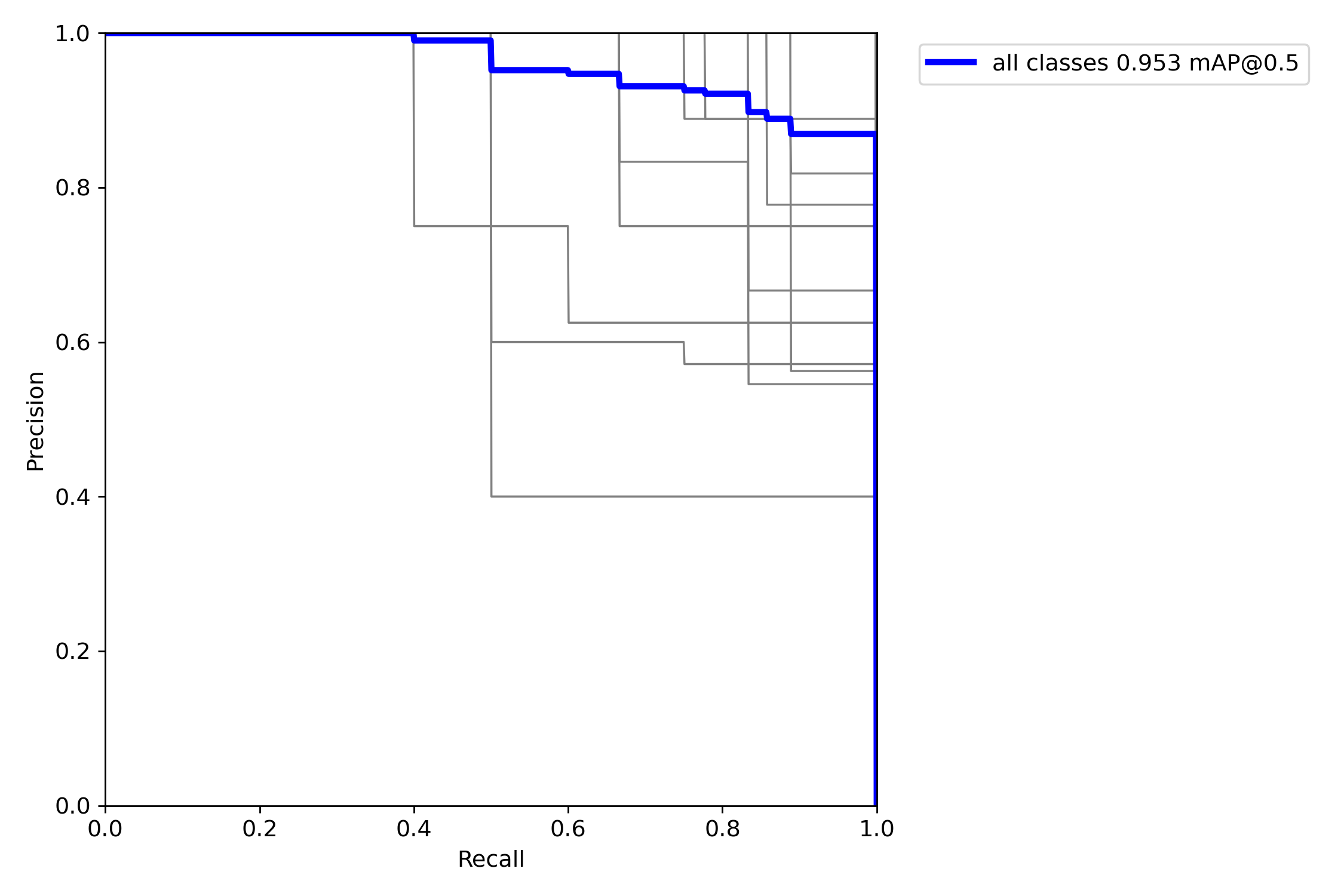

Moreover, it is possible to visualize the precision-recall curve.
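As a reminder of what the curve shows, here is a minimal sketch of how its points can be computed. It assumes each detection has already been marked as a true or false positive (YOLOv5 does this matching per class at a given IoU threshold, which is not reproduced here). Precision is TP / (TP + FP) and recall is TP / (number of ground-truth boxes), evaluated while lowering the confidence threshold. The sample values are made up.

```python
def precision_recall_points(detections, num_ground_truth):
    """Return (confidence, precision, recall) after each detection.

    detections is a list of (confidence, is_true_positive) pairs.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    points = []
    tp = fp = 0
    # Walk through detections from most to least confident.
    for confidence, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / num_ground_truth
        points.append((confidence, precision, recall))
    return points


# Made-up detections for one class with 2 ground-truth boxes.
points = precision_recall_points([(0.7, True), (0.9, True), (0.8, False)], 2)
for confidence, precision, recall in points:
    print(f"conf>={confidence:.2f}  precision={precision:.2f}  recall={recall:.2f}")
```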

Finally, we take a look at some other peculiarities.

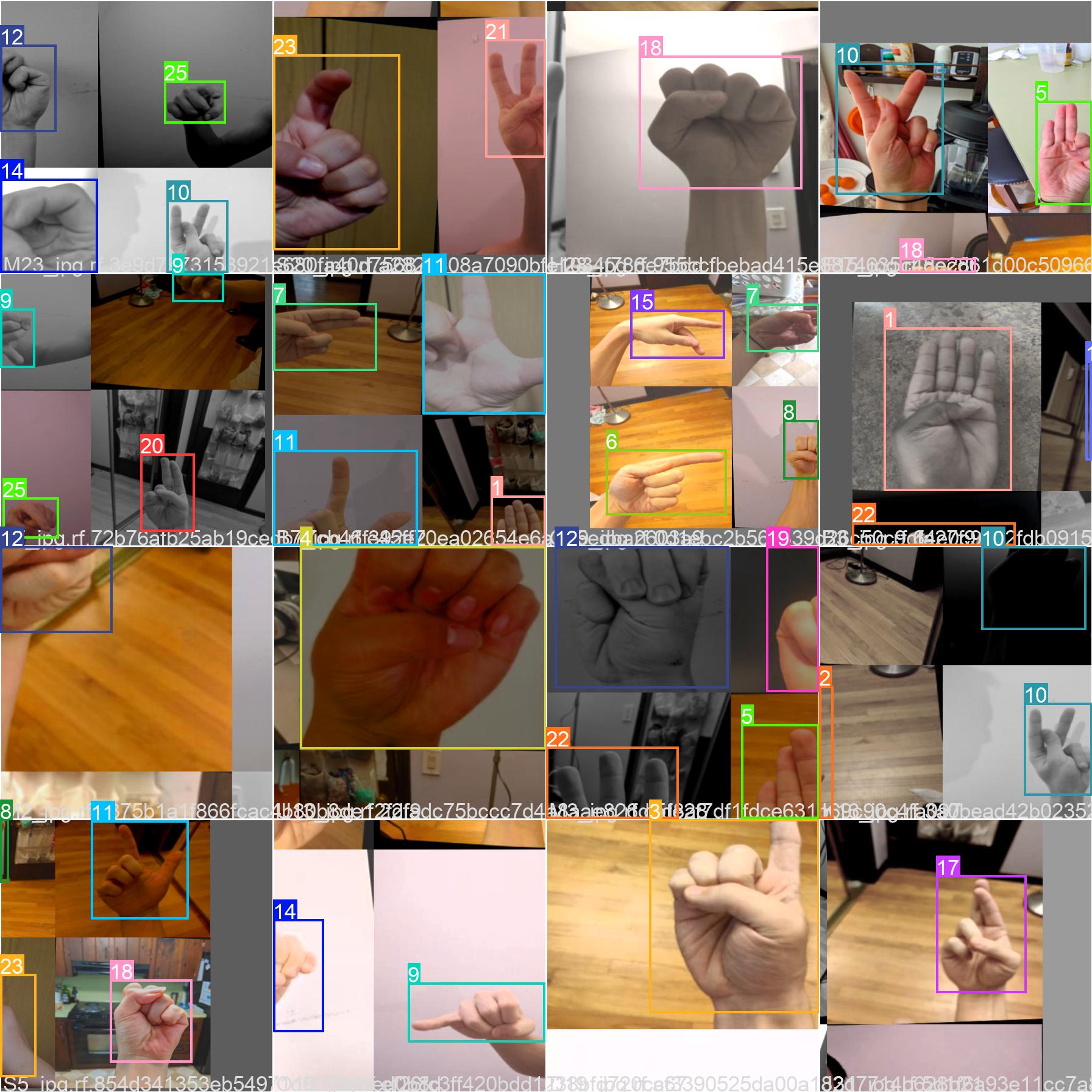

The file train_batch0.jpg shows train batch 0 mosaics and labels.

Instead, val_batch0_labels.jpg shows validation batch 0 labels.

Lastly, val_batch0_pred.jpg shows validation batch 0 predictions.

📝 Results on Hello dataset

To test my thesis, namely that the dataset on which I trained YOLOv5 is not generic enough for the model to generalize, I created my own dataset. I chose the letters 'H', 'E', 'L', 'O' and took (only) 5 webcam images for each. I then created the bounding boxes and labels with the LabelImg tool. With a total of 20 images (the same images were used for both training and validation), I trained a YOLOv5 model for 500 epochs.

In conclusion, the model turned out outstanding (considering the number of images used). However, it is again a model that only works well in this context, and it is even less flexible than the previous one. At least it is now clear that the problem was the dataset and not some component of the training.

Attempt at signing 'Hello' from the webcam with YOLOv5 trained on my dataset.

The training notebook is available here.

The real-time testing notebook is available here.

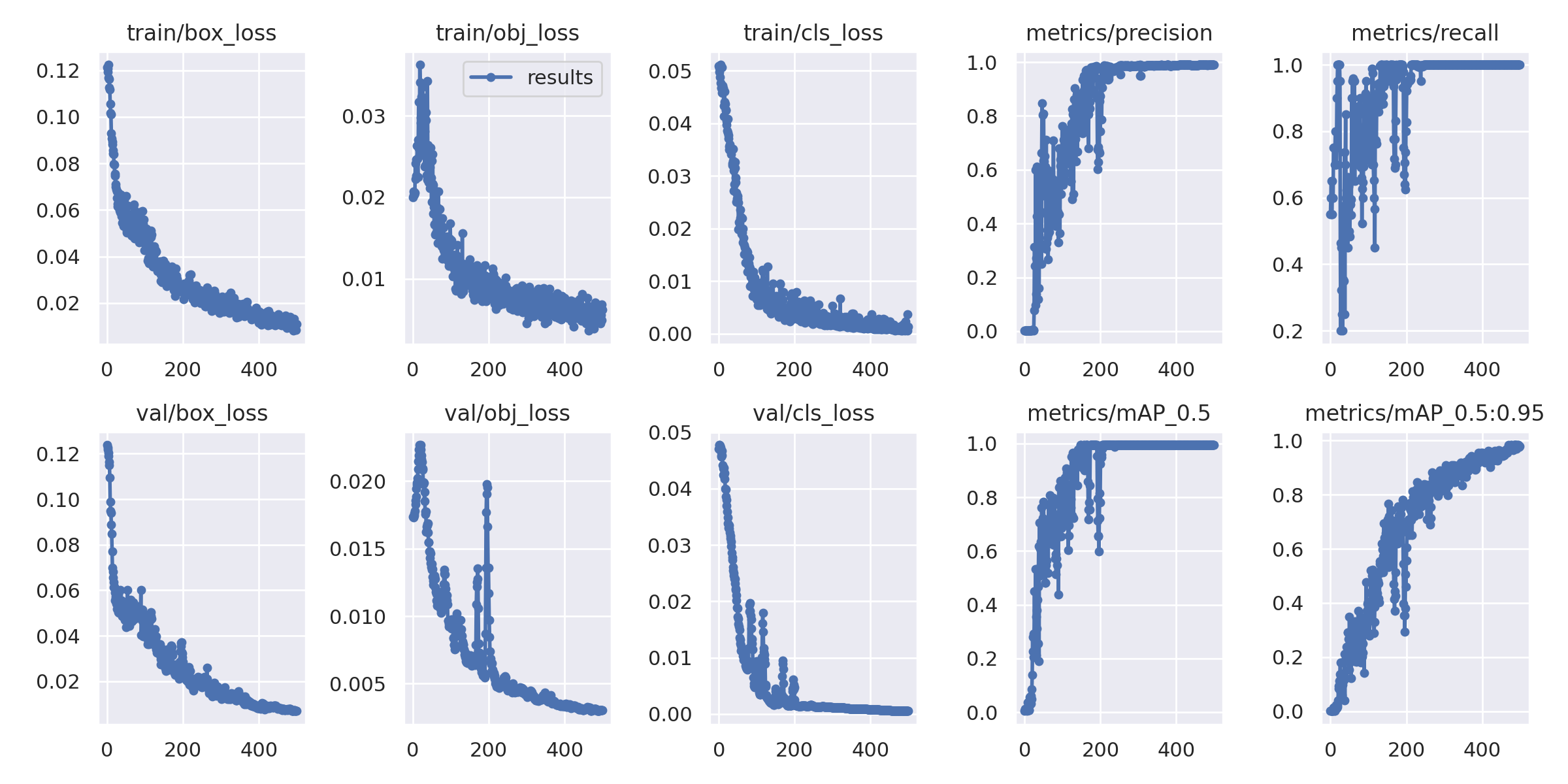

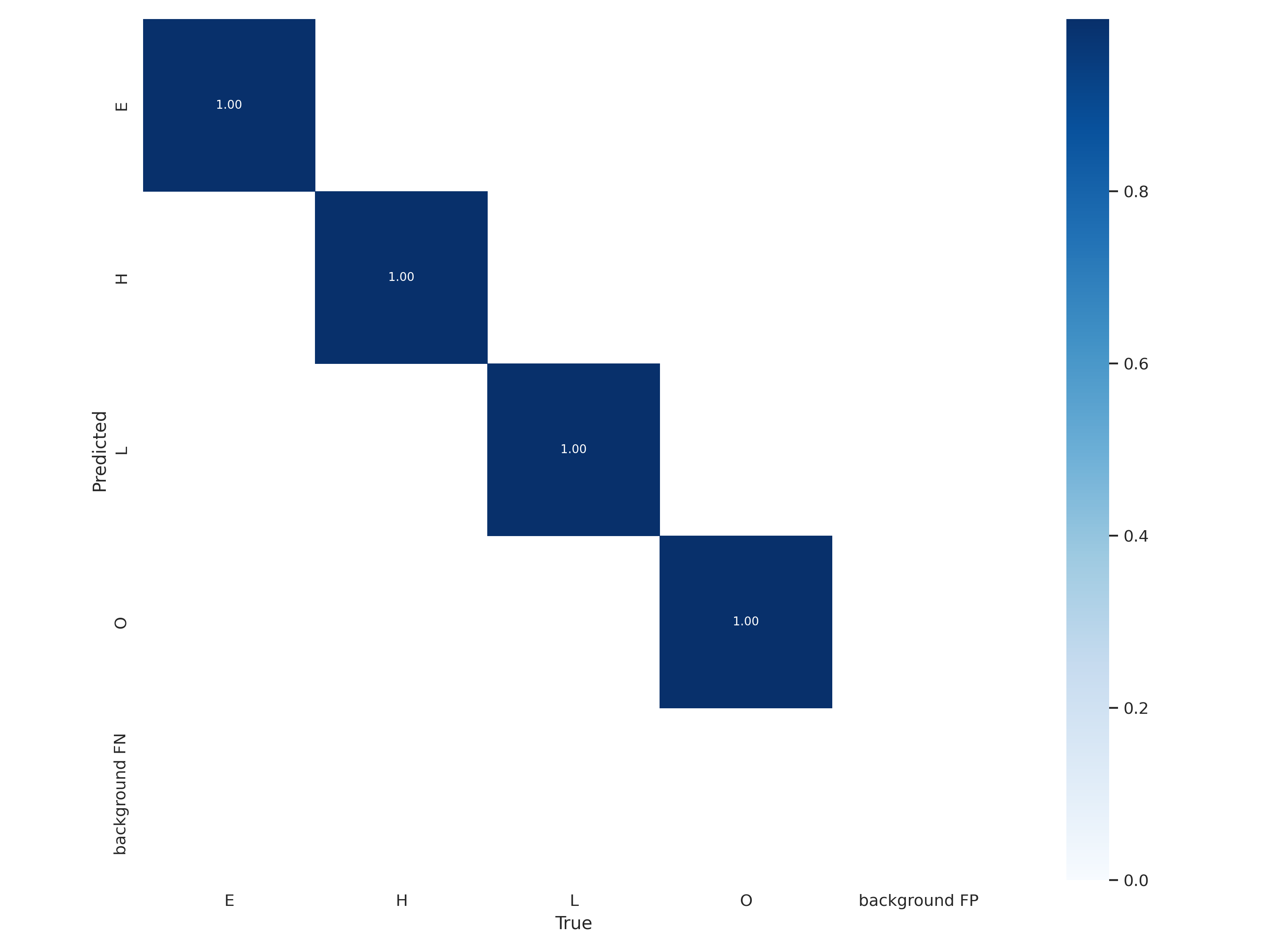

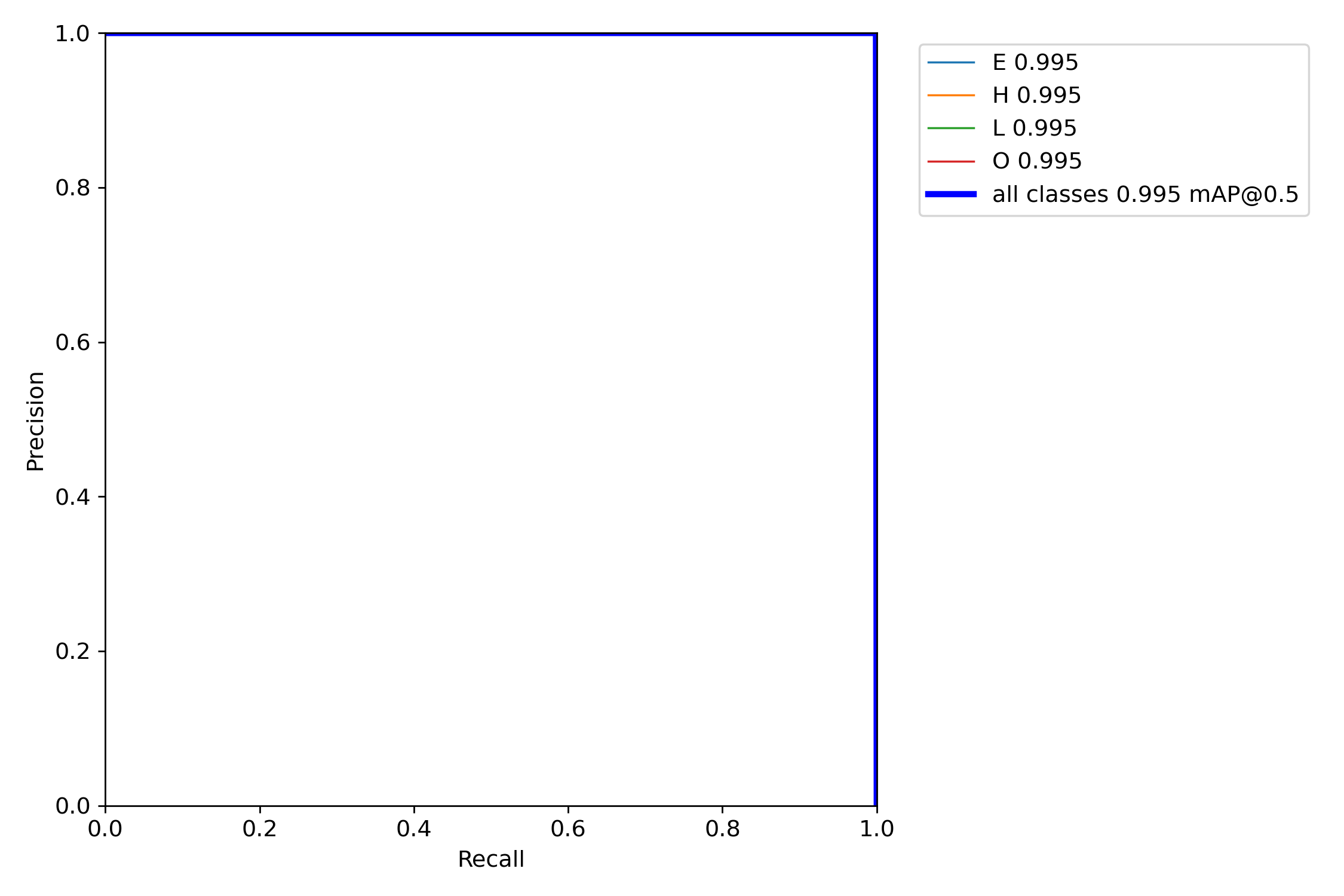

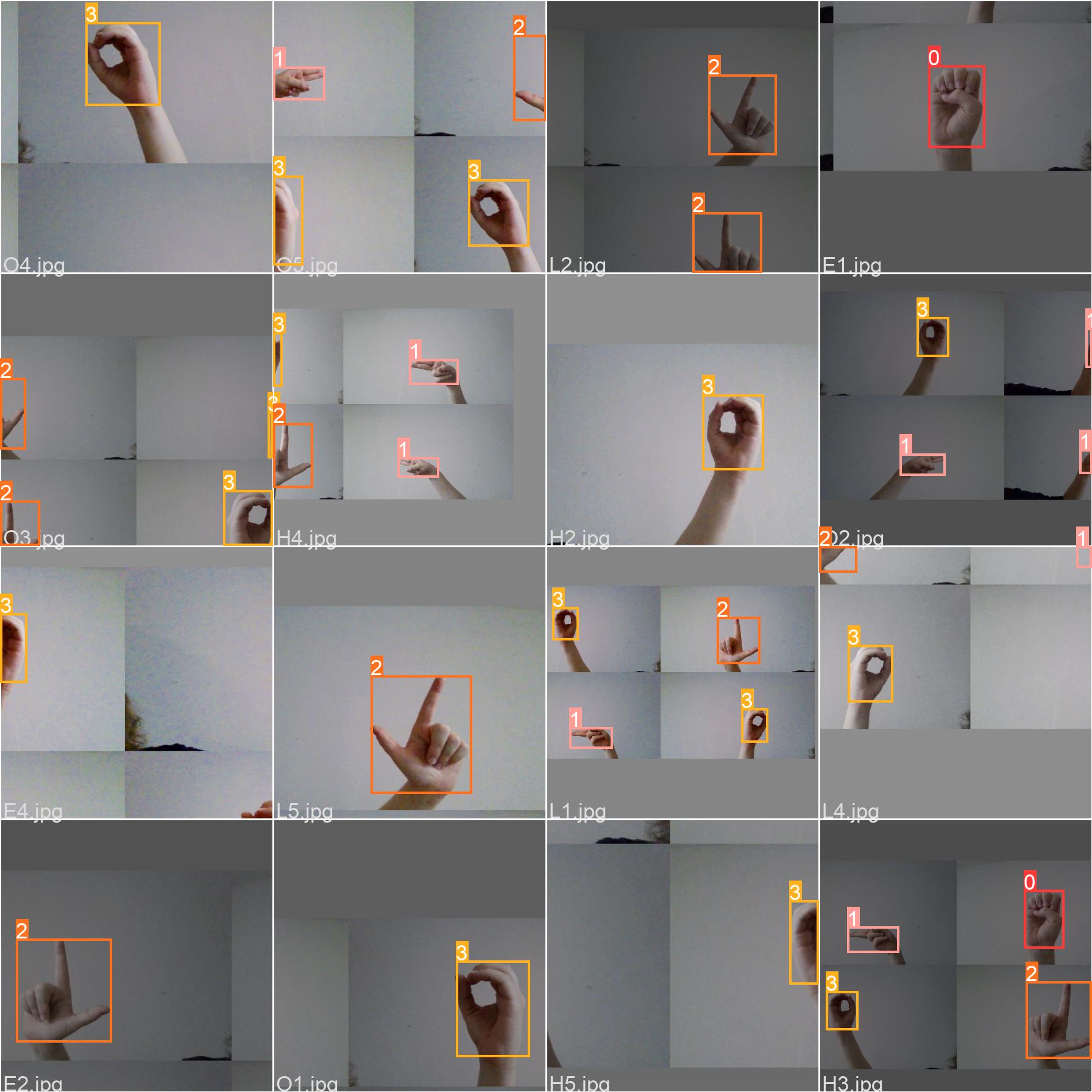

The results reported by this second version of the YOLOv5 model during training are as follows.

Information about the labels - part 1.

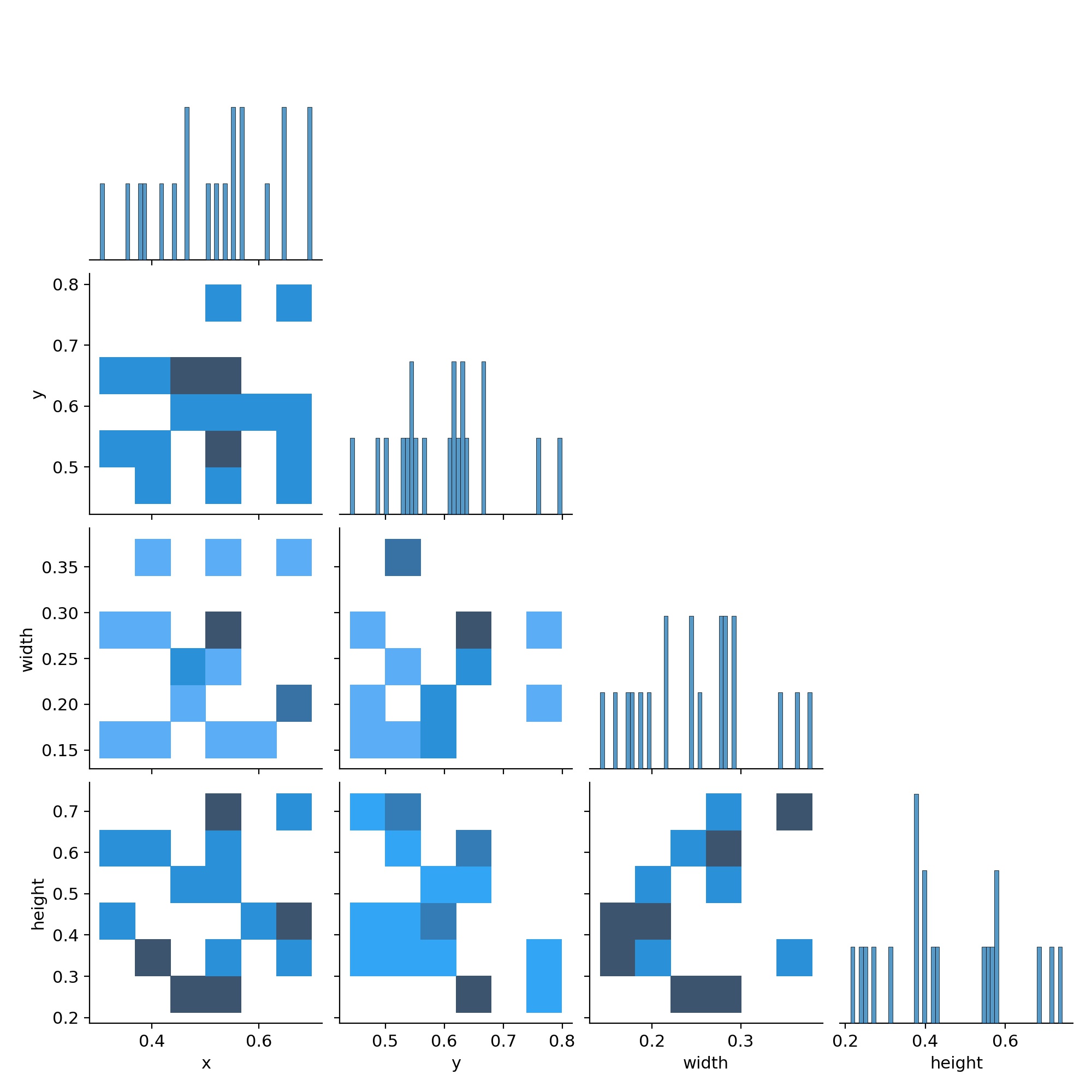

Information about the labels - part 2 (correlogram).

Training performance visualization.

How good the predictions are and which classes caused the most difficulties.

Finally, we take a look at some other peculiarities.

The file train_batch0.jpg shows train batch 0 mosaics and labels.

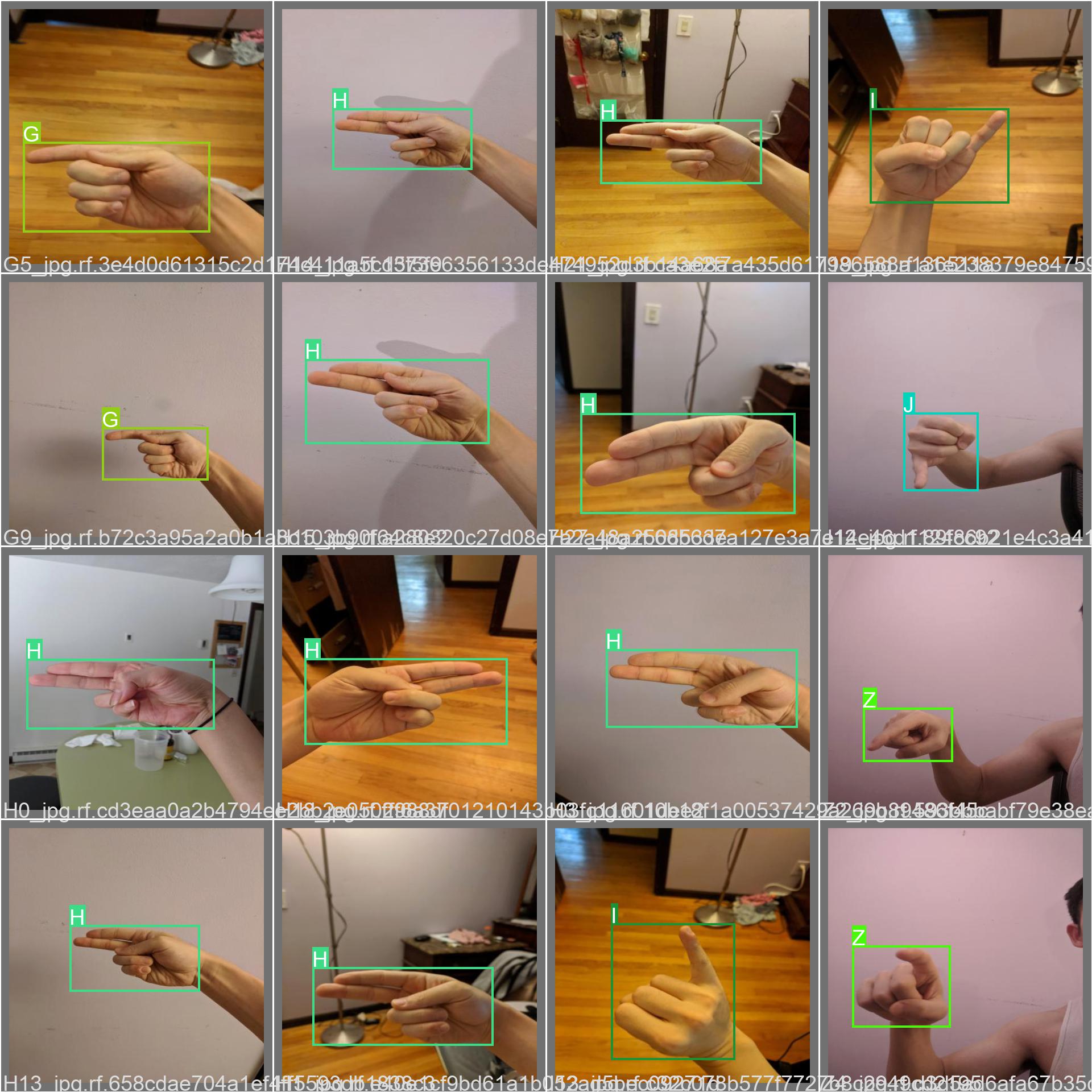

Instead, val_batch0_labels.jpg shows validation batch 0 labels.

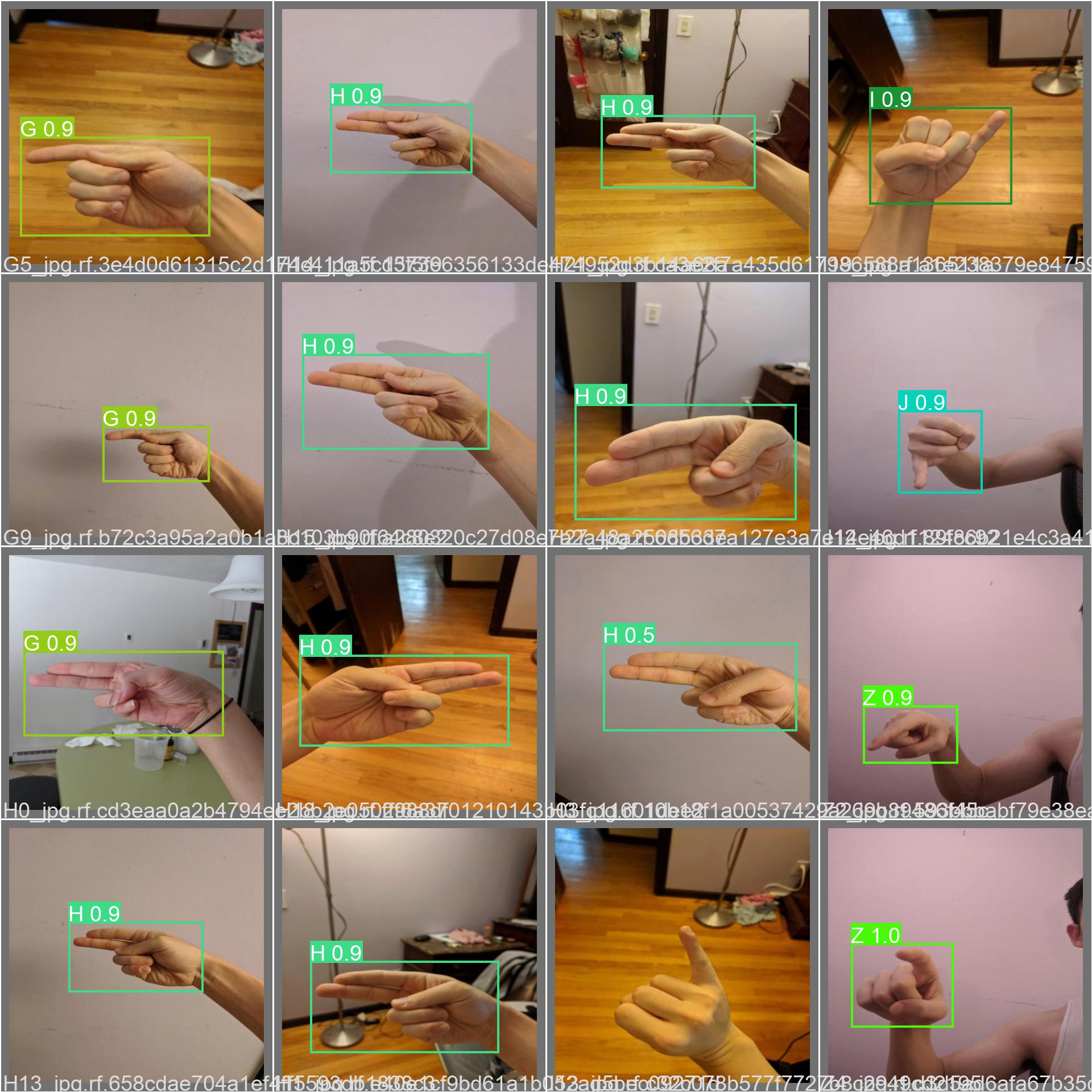

Lastly, val_batch0_pred.jpg shows validation batch 0 predictions.

✍️ About LabelImg

LabelImg is a (free and easily accessible, thank you 🥰) tool for labeling images for object detection.

How does it work? There are a couple of steps to follow.

- First of all, it is necessary to clone the repository. It is even possible to run the command from a notebook:

!git clone https://github.com/heartexlabs/labelImg

- Next, it is necessary to install two dependencies:

pip install PyQt5

pip install lxml

- Once done, compile the Qt resource file. Again from a notebook:

!cd ./labelImg && pyrcc5 -o libs/resources.py resources.qrc

- Now we need to go into the LabelImg folder and manually move the following files into the libs folder:

resources.py

resources.qrc

- Go to the command line, activate the correct environment (in my case I created an ML environment: C:\Enviroments\ML\Scripts\activate.bat) and go into the LabelImg folder:

cd ..\labelImg

- Run LabelImg:

python labelImg.py

- Select Open Dir and open the directory where all the images are

- Select Change Save Dir and open the directory where all label information will be saved

- Check that the selected format is correct; in this case I used YOLO (the annotation format differs depending on the model you use)

- Select View and then Auto Save mode, so that the labels are saved automatically

- Use the W key to create a label and move between images using A and D

- OPTIONAL: LabelImg ships with some predefined labels. They do not harm the procedure, but they can be removed if desired. In the output folder (Change Save Dir) there is a .txt file with the classes. It is sufficient to edit this file and delete the unnecessary classes. Beware, however, that all the label .txt files then have to be edited as well, because the class indices change.

  For example, if we had ['dog', 'cat', 'A', 'B'] before and now have ['A', 'B'], every label .txt file must be edited so that each box for A uses the value 0 (new position of A in the class list) instead of 2 (old position of A in the class list), and so on

- Finally, it is necessary to create a data.yaml file, which the model uses to find all the data. The file in this case (already adapted to Colab training) is:

train: /content/drive/MyDrive/ASL/PAULO/images

val: /content/drive/MyDrive/ASL/PAULO/images

nc: 4

names: ['E', 'H', 'L', 'O']
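The class re-indexing described in the OPTIONAL step is tedious to do by hand, so it can be scripted. The following is a minimal sketch under a few assumptions: the label files are in YOLO format (class index first on each line), the class list file is named `classes.txt`, and boxes belonging to removed classes should simply be dropped. `remap_label_text` and `remap_label_dir` are hypothetical helper names, not part of LabelImg.

```python
from pathlib import Path


def remap_label_text(text, old_names, new_names):
    """Rewrite the class indices in the text of one YOLO label file."""
    # Map each old class index to its position in the new class list.
    index_map = {old_names.index(name): i for i, name in enumerate(new_names)}
    out = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        old_id = int(parts[0])
        if old_id not in index_map:
            continue  # the box belongs to a removed class
        out.append(" ".join([str(index_map[old_id])] + parts[1:]))
    return "\n".join(out) + ("\n" if out else "")


def remap_label_dir(label_dir, old_names, new_names):
    """Apply remap_label_text in place to every label file in label_dir."""
    for path in Path(label_dir).glob("*.txt"):
        if path.name == "classes.txt":  # assumed name of the class list file
            continue
        path.write_text(remap_label_text(path.read_text(), old_names, new_names))


# The example from the text: 'A' moves from index 2 to index 0.
old = ["dog", "cat", "A", "B"]
new = ["A", "B"]
sample = "2 0.5 0.5 0.2 0.3\n3 0.1 0.2 0.3 0.4\n0 0.5 0.5 0.1 0.1\n"
print(remap_label_text(sample, old, new))
```

Since `remap_label_dir` overwrites the files, it is worth working on a copy of the label folder first.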