Made in Vancouver, Canada by Picovoice

This repo is a minimalist and extensible framework for benchmarking different speech-to-text engines.

Word error rate (WER) is the ratio of the word-level edit distance between a reference transcript and the output of the speech-to-text engine to the number of words in the reference transcript. A speech-to-text engine with lower WER is more accurate.
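The WER definition can be illustrated with a minimal Python sketch. It is not necessarily how `benchmark.py` computes the metric: the function names are hypothetical, and lowercasing and whitespace tokenization are assumed normalization steps.

```python
from typing import Sequence


def word_edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two word sequences."""
    # prev[j] is the distance between the reference words seen so far
    # (initially none) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance in words divided by the number of reference words."""
    # Assumption: case-insensitive comparison and whitespace tokenization.
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference transcript must contain at least one word")
    return word_edit_distance(ref_words, hyp_words) / len(ref_words)


if __name__ == "__main__":
    # One substitution ("quick" -> "quack") and one deletion ("fox")
    # against a four-word reference.
    print(word_error_rate("the quick brown fox", "the quack brown"))
```

Because insertions count as errors, WER can exceed 100% when the engine outputs many more words than the reference contains.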

Real-time factor (RTF) is the ratio of CPU (processing) time to the length of the input speech file. A speech-to-text engine with lower RTF is more computationally efficient. We omit this metric for cloud-based engines.
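As a rough sketch of the RTF definition, the snippet below divides CPU time by audio length. The function names and the `transcribe` callable are hypothetical stand-ins, and using `time.process_time()` to measure CPU time is an assumption about how one might take the measurement, not a description of the benchmark's own code.

```python
import time
from typing import Callable


def real_time_factor(process_seconds: float, audio_seconds: float) -> float:
    """CPU (processing) time divided by the length of the input audio."""
    if audio_seconds <= 0:
        raise ValueError("audio length must be positive")
    return process_seconds / audio_seconds


def measure_rtf(transcribe: Callable[[], object], audio_seconds: float) -> float:
    """Run a (hypothetical) transcription callable and return its RTF."""
    # process_time() counts CPU time of the current process and excludes sleep.
    start = time.process_time()
    transcribe()
    elapsed = time.process_time() - start
    return real_time_factor(elapsed, audio_seconds)


if __name__ == "__main__":
    # 3 seconds of CPU time spent on a 60-second recording.
    print(real_time_factor(3.0, 60.0))
```

An RTF below 1 means the engine needs less CPU time than the duration of the audio it processes.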

Model size is the aggregate size of the models (acoustic and language), in MB. We omit this metric for cloud-based engines.
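One simple way to obtain an aggregate model size is to sum the sizes of the model files on disk. The sketch below assumes 1 MB means 1024 × 1024 bytes, which the source does not specify, and the function name and file paths are hypothetical.

```python
from pathlib import Path
from typing import Iterable


def aggregate_model_size_mb(model_paths: Iterable[str]) -> float:
    """Total size of the given model files in MB (assumed: 1024 * 1024 bytes)."""
    total_bytes = sum(Path(path).stat().st_size for path in model_paths)
    return total_bytes / (1024 * 1024)


# Hypothetical usage with an acoustic model and a language model file:
# aggregate_model_size_mb(["/path/to/acoustic_model", "/path/to/language_model"])
```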

- Amazon Transcribe

- Azure Speech-to-Text

- Google Speech-to-Text

- IBM Watson Speech-to-Text

- Mozilla DeepSpeech

- Picovoice Cheetah

- Picovoice Leopard

This benchmark has been developed and tested on Ubuntu 20.04.

- Install FFmpeg

- Download datasets.

- Install the requirements:

      pip3 install -r requirements.txt

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, and ${AWS_PROFILE} with the name of the AWS profile you wish to use.

    python3 benchmark.py \
    --dataset ${DATASET} \
    --dataset-folder ${DATASET_FOLDER} \
    --engine AMAZON_TRANSCRIBE \
    --aws-profile ${AWS_PROFILE}

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, and ${AZURE_SPEECH_KEY} and ${AZURE_SPEECH_LOCATION} with the key and location from your Azure account.

    python3 benchmark.py \
    --dataset ${DATASET} \
    --dataset-folder ${DATASET_FOLDER} \
    --engine AZURE_SPEECH_TO_TEXT \
    --azure-speech-key ${AZURE_SPEECH_KEY} \
    --azure-speech-location ${AZURE_SPEECH_LOCATION}

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, and ${GOOGLE_APPLICATION_CREDENTIALS} with the credentials downloaded from Google Cloud Platform.

    python3 benchmark.py \
    --dataset ${DATASET} \
    --dataset-folder ${DATASET_FOLDER} \
    --engine GOOGLE_SPEECH_TO_TEXT \
    --google-application-credentials ${GOOGLE_APPLICATION_CREDENTIALS}

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, and ${WATSON_SPEECH_TO_TEXT_API_KEY} and ${WATSON_SPEECH_TO_TEXT_URL} with the credentials from your IBM account.

    python3 benchmark.py \
    --dataset ${DATASET} \
    --dataset-folder ${DATASET_FOLDER} \
    --engine IBM_WATSON_SPEECH_TO_TEXT \
    --watson-speech-to-text-api-key ${WATSON_SPEECH_TO_TEXT_API_KEY} \
    --watson-speech-to-text-url ${WATSON_SPEECH_TO_TEXT_URL}

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, ${DEEP_SPEECH_MODEL} with the path to the DeepSpeech model file (.pbmm), and ${DEEP_SPEECH_SCORER} with the path to the DeepSpeech scorer file (.scorer).

    python3 benchmark.py \
    --engine MOZILLA_DEEP_SPEECH \
    --dataset ${DATASET} \
    --dataset-folder ${DATASET_FOLDER} \
    --deepspeech-pbmm ${DEEP_SPEECH_MODEL} \
    --deepspeech-scorer ${DEEP_SPEECH_SCORER}

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, and ${PICOVOICE_ACCESS_KEY} with the AccessKey obtained from Picovoice Console.

    python3 benchmark.py \
    --engine PICOVOICE_CHEETAH \
    --dataset ${DATASET} \
    --dataset-folder ${DATASET_FOLDER} \
    --picovoice-access-key ${PICOVOICE_ACCESS_KEY}

Replace ${DATASET} with one of the supported datasets, ${DATASET_FOLDER} with the path to the dataset, and ${PICOVOICE_ACCESS_KEY} with the AccessKey obtained from Picovoice Console.

python3 benchmark.py \

--engine PICOVOICE_LEOPARD \

--dataset ${DATASET} \

--dataset-folder ${DATASET_FOLDER} \

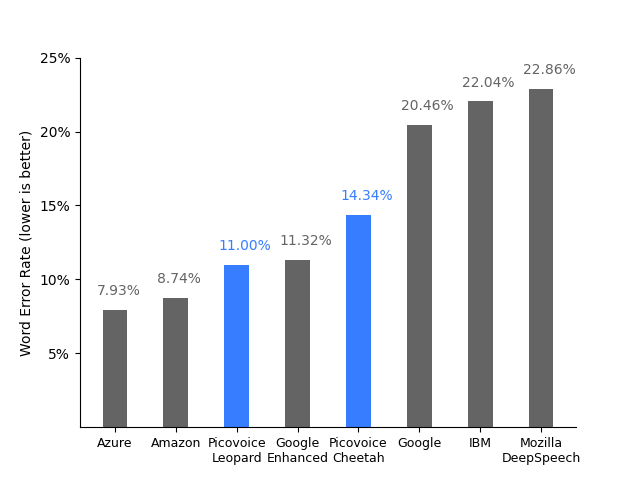

--picovoice-access-key ${PICOVOICE_ACCESS_KEY}| Engine | LibriSpeech test-clean | LibriSpeech test-other | TED-LIUM | CommonVoice | Average |

|---|---|---|---|---|---|

| Amazon Transcribe | 5.20% | 9.58% | 4.25% | 15.94% | 8.74% |

| Azure Speech-to-Text | 4.96% | 9.66% | 4.99% | 12.09% | 7.93% |

| Google Speech-to-Text | 11.23% | 24.94% | 15.00% | 30.68% | 20.46% |

| Google Speech-to-Text (Enhanced) | 6.62% | 13.59% | 6.68% | 18.39% | 11.32% |

| IBM Watson Speech-to-Text | 11.08% | 26.38% | 11.89% | 38.81% | 22.04% |

| Mozilla DeepSpeech | 7.27% | 21.45% | 18.90% | 43.82% | 22.86% |

| Picovoice Cheetah | 7.08% | 16.28% | 10.89% | 23.10% | 14.34% |

| Picovoice Leopard | 5.39% | 12.45% | 9.04% | 17.13% | 11.00% |
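The Average column in the table above appears to be the unweighted mean of the four per-dataset figures, so every dataset counts equally regardless of its size. A small sketch of that arithmetic, using two rows copied from the table (the function name is hypothetical):

```python
from typing import Sequence


def average_wer(per_dataset_wer: Sequence[float]) -> float:
    """Unweighted mean of per-dataset WER values, in percent."""
    return sum(per_dataset_wer) / len(per_dataset_wer)


if __name__ == "__main__":
    # Column order: LibriSpeech test-clean, LibriSpeech test-other,
    # TED-LIUM, CommonVoice.
    rows = {
        "Amazon Transcribe": [5.20, 9.58, 4.25, 15.94],
        "Picovoice Leopard": [5.39, 12.45, 9.04, 17.13],
    }
    for engine, values in rows.items():
        print(f"{engine}: {average_wer(values):.2f}%")
```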

Measurements are carried out on an Ubuntu 20.04 machine with an Intel CPU (Intel(R) Core(TM) i5-6500 CPU @ 3.20GHz), 64 GB of RAM, and NVMe storage.

| Engine | RTF | Model Size |
|---|---|---|
| Mozilla DeepSpeech | 0.46 | 1142 MB |
| Picovoice Cheetah | 0.07 | 19 MB |
| Picovoice Leopard | 0.05 | 19 MB |