Code for the NAACL 2021 paper "Latent-Optimized Adversarial Neural Transfer for Sarcasm Detection".

@inproceedings{guo-etal-2021-latent,
  title = "Latent-Optimized Adversarial Neural Transfer for Sarcasm Detection",
  author = "Guo, Xu and
    Li, Boyang and
    Yu, Han and
    Miao, Chunyan",
  booktitle = "Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",
  month = jun,
  year = "2021",
  address = "Online",
  publisher = "Association for Computational Linguistics",
  url = "https://www.aclweb.org/anthology/2021.naacl-main.425",
  doi = "10.18653/v1/2021.naacl-main.425",
  pages = "5394--5407",
  abstract = "The existence of multiple datasets for sarcasm detection prompts us to apply transfer learning to exploit their commonality. The adversarial neural transfer (ANT) framework utilizes multiple loss terms that encourage the source-domain and the target-domain feature distributions to be similar while optimizing for domain-specific performance. However, these objectives may be in conflict, which can lead to optimization difficulties and sometimes diminished transfer. We propose a generalized latent optimization strategy that allows different losses to accommodate each other and improves training dynamics. The proposed method outperforms transfer learning and meta-learning baselines. In particular, we achieve 10.02{\%} absolute performance gain over the previous state of the art on the iSarcasm dataset.",
}

For any questions about the code or the paper, please email Xu Guo.

To reproduce the results in the paper, see Reproduce_results.ipynb.

To rerun the experiments in my experimental environment, download the compressed Python virtual env and activate it:

source my_env/bin/activate

Then run main.py, changing the arg values as needed. (A GPU with at least 20GB of memory is required.)

- Ghosh: raw data downloaded from: link

- Ptacek: raw data downloaded from: link

- SemEval18: raw data downloaded from: link

- iSarcasm: raw data provided by the dataset author; it is also included under data/iSarcasm/raw

- detect language, filter out non-English tweets

- lexical normalization

- filter out duplicate tweets across datasets

- up-sampling target datasets
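As a rough illustration of the last two preprocessing steps, here is a minimal sketch in plain Python. It is not the repository's preprocessing code. The function names, the duplicate key (lowercased text with collapsed whitespace), the rule of keeping the first occurrence in dataset order, and up-sampling by drawing with replacement up to a given size are all assumptions made for the example.

```python
import random


def normalize_key(text):
    """Hypothetical duplicate key: lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def drop_cross_dataset_duplicates(datasets):
    """Keep only the first occurrence of each tweet across all datasets.

    `datasets` maps a dataset name to a list of tweet strings. Datasets are
    visited in insertion order (an assumption about which copy to keep).
    """
    seen = set()
    result = {}
    for name, tweets in datasets.items():
        kept = []
        for tweet in tweets:
            key = normalize_key(tweet)
            if key not in seen:
                seen.add(key)
                kept.append(tweet)
        result[name] = kept
    return result


def upsample(examples, target_size, seed=0):
    """Pad `examples` to `target_size` by sampling with replacement."""
    if not examples or len(examples) >= target_size:
        return list(examples)
    rng = random.Random(seed)
    extra = [rng.choice(examples) for _ in range(target_size - len(examples))]
    return list(examples) + extra
```

For instance, `upsample(target_tweets, len(source_tweets))` would grow a small target dataset to the size of a source dataset, where `target_tweets` and `source_tweets` are placeholder names.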

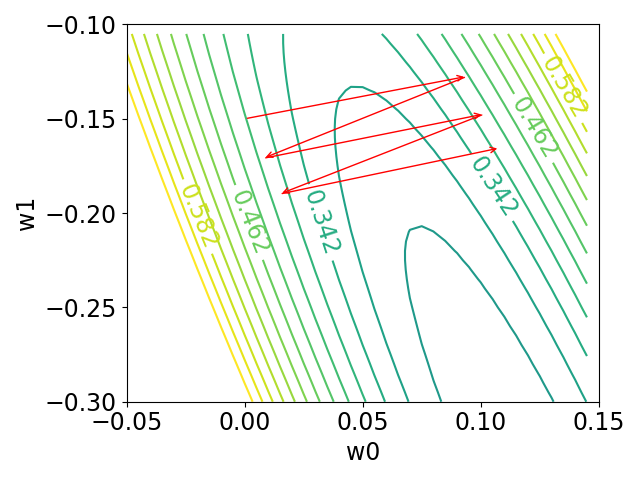

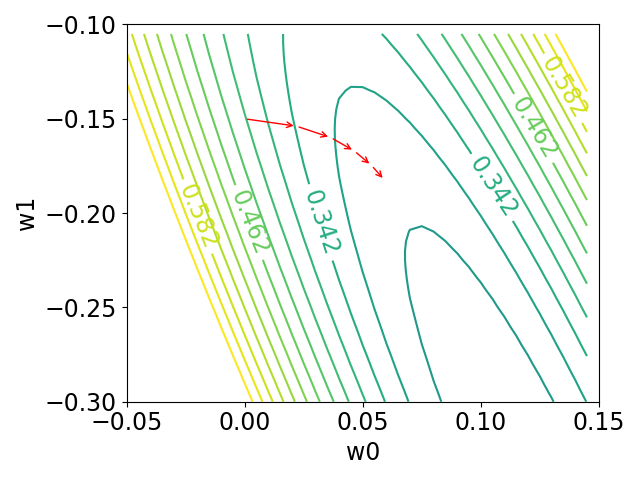

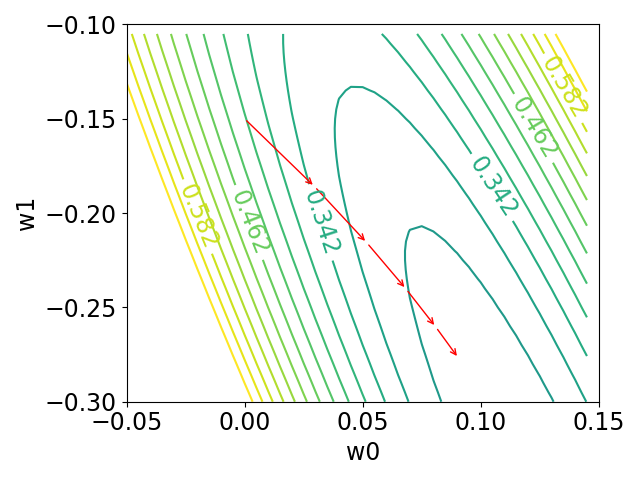

- manually create a matrix with a condition number of 40 (so that the loss contours are elliptical).

- then generate a semi-definite matrix, a column vector b and a scalar c.

- create a grid of w values.

- compute the loss value at each grid point and plot the loss contour.

- perform gradient descent from an initial point with a fixed learning rate, and plot the trajectory of w.

- perform first-order extragradient with the same initial point and learning rate, and plot the trajectory of w.

- perform full-Hessian extragradient with the same initial point and learning rate, and plot the trajectory of w.

python extragradient.py
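The steps above can be sketched on a two-dimensional quadratic loss as follows. This is not the contents of extragradient.py. The matrix `A`, vector `B`, scalar `C`, initial point, and learning rate are hypothetical values chosen for illustration. The "full Hessian" variant shown here differentiates through the lookahead step, which is one possible reading of that term and should be treated as an assumption. Plotting is omitted; each function returns the trajectory of w so it can be drawn over a contour plot.

```python
# Hypothetical toy loss: L(w) = 0.5 * w^T A w + B^T w + C, with w in R^2.
A = [[40.0, 0.0], [0.0, 1.0]]  # assumed symmetric, condition number 40
B = [0.0, 0.0]                 # assumed linear term
C = 0.0                        # assumed constant term


def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


def loss(w):
    aw = matvec(A, w)
    return 0.5 * (w[0] * aw[0] + w[1] * aw[1]) + B[0] * w[0] + B[1] * w[1] + C


def grad(w):
    # Gradient of the quadratic loss (A symmetric): A w + B.
    aw = matvec(A, w)
    return [aw[0] + B[0], aw[1] + B[1]]


def step(w, direction, lr):
    return [w[0] - lr * direction[0], w[1] - lr * direction[1]]


def gradient_descent(w0, lr, n_steps):
    traj = [list(w0)]
    for _ in range(n_steps):
        traj.append(step(traj[-1], grad(traj[-1]), lr))
    return traj


def extragradient_first_order(w0, lr, n_steps):
    # Take a lookahead step, then update w with the lookahead gradient.
    traj = [list(w0)]
    for _ in range(n_steps):
        w = traj[-1]
        w_look = step(w, grad(w), lr)
        traj.append(step(w, grad(w_look), lr))
    return traj


def extragradient_full_hessian(w0, lr, n_steps):
    # Assumed variant: differentiate L(w - lr * grad(w)) with respect to w,
    # giving (I - lr * H) grad(w_look). For this loss the Hessian H equals A.
    traj = [list(w0)]
    for _ in range(n_steps):
        w = traj[-1]
        g = grad(step(w, grad(w), lr))
        hg = matvec(A, g)
        corrected = [g[0] - lr * hg[0], g[1] - lr * hg[1]]
        traj.append(step(w, corrected, lr))
    return traj
```

With these hypothetical values, a learning rate such as 0.02 keeps all three methods stable; on this quadratic, the first-order extragradient update needs the learning rate times the largest eigenvalue of `A` to stay below 1.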