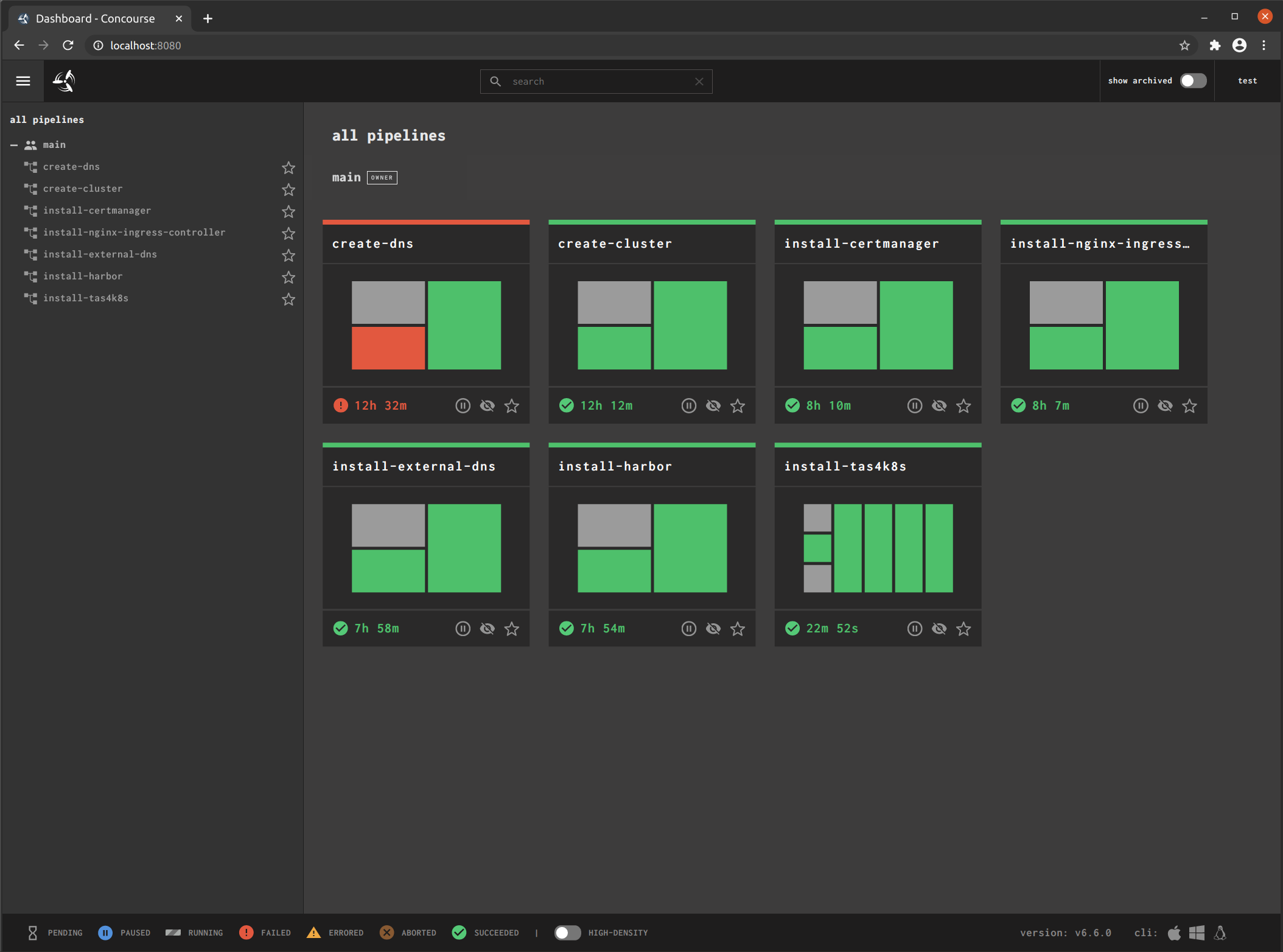

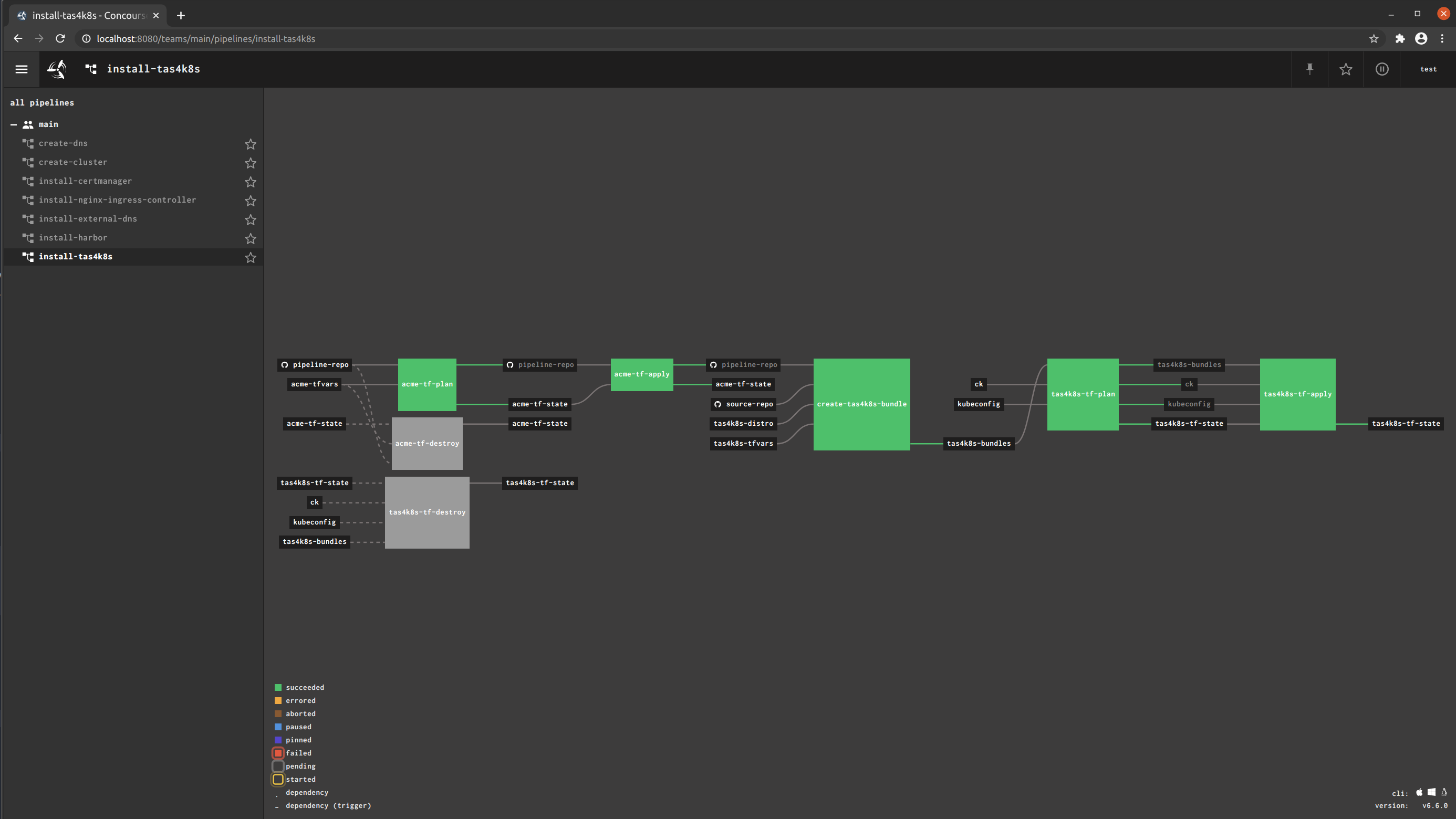

Sample GitOps pipelines that employ modules from tf4k8s to configure and deploy products and capabilities to targeted Kubernetes clusters.

You have some options:

- spin up a local Concourse instance for test purposes with docker-compose

- employ the control-tower CLI to deploy a self-healing, self-updating Concourse instance with Grafana and CredHub in either AWS or GCP

- dog-food tf4k8s experiments to create a cloud zone, provision a GKE cluster, and deploy foundational components plus Concourse via Helm

Start

./bin/concourse/launch-local-concourse-instance-with-docker-compose.sh

This script uses Docker Compose to launch a local Concourse instance

Change directories

cd .concourse-local

to lifecycle manage the instance

Stop

docker-compose stop

Restart

docker-compose restart

Teardown

docker-compose down

Warning: you will not be able to spin up TKG clusters via Concourse deployed in this manner.

Option 1: via control-tower

Consult the control-tower CLI install documentation.

Check out the convenience scripts in the bin/concourse directory.

Option 2: via tf4k8s

Make a copy of the config sample and fill it out for your own purposes with your own credentials.

cd bin/concourse/gke

cp one-click-concourse-config.sh.sample one-click-concourse-config.sh

Execute

./one-click-concourse-install.sh

Credentials to the Concourse instance will be vended to you in Terraform output.

Download a version of the fly CLI from the Concourse instance you just deployed.

wget -O fly "https://<concourse_hostname>/api/v1/cli?arch=amd64&platform=<platform>"

chmod +x fly

sudo mv fly /usr/local/bin

Replace <concourse_hostname> with the hostname of the Concourse instance you wish to target. Also replace <platform> above with one of [ darwin, linux, windows ].
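The download URL contains an `&`, so it needs quoting when passed to a shell command. As a minimal sketch, the helper below builds that URL and rejects platforms other than the three listed above. The function name `fly_cli_url` and the host `concourse.example.com` are illustrative assumptions, not part of this repository.

```shell
#!/bin/sh
# Hypothetical helper (not part of this repository): print the fly CLI
# download URL for a given Concourse hostname and platform.
fly_cli_url() {
  host="$1"
  platform="$2"
  # Only the platforms named in this README are accepted.
  case "$platform" in
    darwin|linux|windows) ;;
    *) echo "unsupported platform: $platform" >&2; return 1 ;;
  esac
  printf 'https://%s/api/v1/cli?arch=amd64&platform=%s\n' "$host" "$platform"
}

# Example usage (assumes wget is installed and the host is reachable):
#   wget -O fly "$(fly_cli_url concourse.example.com linux)"
#   chmod +x fly && sudo mv fly /usr/local/bin
```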

fly login --target <target> --concourse-url https://<concourse_hostname> -u <username> -p <password>

Replace <target> with any name (this acts as an alias for the connection details to the Concourse instance). Also replace <concourse_hostname> with the hostname of the Concourse instance you wish to target. Lastly, replace <username> and <password> with valid, authorized credentials to the Concourse instance team.

Your choice of two paths from here. Continue through the sections and subsections below in order or take a shortcut.

What's the shortcut? Visit the one-click install for tas4k8s to learn more.

A Concourse resource based off ljfranklin/terraform-resource that also includes the Azure CLI

fly -t <target> set-pipeline -p build-and-push-terraform-resource-with-az-cli-image \
  -c ./pipelines/build-and-push-terraform-resource-with-az-cli-image.yml \
  --var pipeline-repo=<pipeline_repo> \
  --var pipeline-repo-branch=<pipeline_repo_branch> \
  --var image-repo-name=<repo-name> \
  --var registry-username=<username> \
  --var registry-password=<password>

fly -t <target> unpause-pipeline -p build-and-push-terraform-resource-with-az-cli-image

- <target> is the alias for the connection details to a Concourse instance

- <pipeline_repo> is the Git repository that contains the Dockerfile for the container image to be built (e.g., https://github.com/pacphi/tf4k8s-pipelines.git)

- <pipeline_repo_branch> is the aforementioned Git repository's branch (e.g., main)

- <repo-name> is a container image repository prefix (e.g., docker.io or a private registry like harbor.envy.ironleg.me/library)

- <username> and <password> are the credentials of an account with read/write privileges to a container image registry
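Passing the registry password with `--var` leaves it in shell history. One alternative, sketched here under assumptions, is to write the variables to a YAML file and load it with the `-l` flag that `fly set-pipeline` accepts. The function name and the upper-case environment variable names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write the image pipeline variables to a YAML file that
# can be passed to fly with -l instead of repeating --var flags.
# The environment variable names below are assumptions made for this sketch.
# The function body runs in a subshell so the umask change stays local.
write_image_pipeline_vars() (
  out="$1"
  umask 077   # a newly created file is readable by the owner only
  # Note: values containing double quotes or backslashes would need escaping.
  cat > "$out" <<EOF
pipeline-repo: "${PIPELINE_REPO:?}"
pipeline-repo-branch: "${PIPELINE_REPO_BRANCH:?}"
image-repo-name: "${IMAGE_REPO_NAME:?}"
registry-username: "${REGISTRY_USERNAME:?}"
registry-password: "${REGISTRY_PASSWORD:?}"
EOF
)

# Example usage:
#   write_image_pipeline_vars ./image-vars.yml
#   fly -t <target> set-pipeline -p build-and-push-terraform-resource-with-az-cli-image \
#     -c ./pipelines/build-and-push-terraform-resource-with-az-cli-image.yml \
#     -l ./image-vars.yml
```

The same file can be reused for the other image-building pipelines that take these five variables.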

A pre-built container image exists on DockerHub, here: pacphi/terraform-resource-with-az-cli.

A Concourse resource based off ljfranklin/terraform-resource that also includes the Terraform Carvel plugin.

fly -t <target> set-pipeline -p build-and-push-terraform-resource-with-carvel-image \
  -c ./pipelines/build-and-push-terraform-resource-with-carvel-image.yml \
  --var pipeline-repo=<pipeline_repo> \
  --var pipeline-repo-branch=<pipeline_repo_branch> \
  --var image-repo-name=<repo-name> \
  --var registry-username=<username> \
  --var registry-password=<password>

fly -t <target> unpause-pipeline -p build-and-push-terraform-resource-with-carvel-image

- <target> is the alias for the connection details to a Concourse instance

- <pipeline_repo> is the Git repository that contains the Dockerfile for the container image to be built (e.g., https://github.com/pacphi/tf4k8s-pipelines.git)

- <pipeline_repo_branch> is the aforementioned Git repository's branch (e.g., main)

- <repo-name> is a container image repository prefix (e.g., docker.io or a private registry like harbor.envy.ironleg.me/library)

- <username> and <password> are the credentials of an account with read/write privileges to a container image registry

A pre-built container image exists on DockerHub, here: pacphi/terraform-resource-with-carvel.

A simple image based on alpine that includes bash, bosh and ytt.

fly -t <target> set-pipeline -p build-and-push-bby-image \
  -c ./pipelines/build-and-push-bash-bosh-and-ytt-image.yml \
  --var pipeline-repo=<pipeline_repo> \
  --var pipeline-repo-branch=<pipeline_repo_branch> \
  --var image-repo-name=<repo-name> \
  --var registry-username=<username> \
  --var registry-password=<password>

fly -t <target> unpause-pipeline -p build-and-push-bby-image

- <target> is the alias for the connection details to a Concourse instance

- <pipeline_repo> is the Git repository that contains the Dockerfile for the container image to be built (e.g., https://github.com/pacphi/tf4k8s-pipelines.git)

- <pipeline_repo_branch> is the aforementioned Git repository's branch (e.g., main)

- <repo-name> is a container image repository prefix (e.g., docker.io or a private registry like harbor.envy.ironleg.me/library)

- <username> and <password> are the credentials of an account with read/write privileges to a container image registry

A pre-built container image exists on DockerHub, here: pacphi/bby.

A Concourse resource based off ljfranklin/terraform-resource that also includes these command-line interfaces: tkg and tmc.

fly -t <target> set-pipeline -p build-and-push-terraform-resource-with-tkg-tmc-image \
  -c ./pipelines/build-and-push-terraform-resource-with-tkg-tmc-image.yml \
  --var pipeline-repo=<pipeline_repo> \
  --var pipeline-repo-branch=<pipeline_repo_branch> \
  --var image-repo-name=<repo-name> \
  --var registry-username=<username> \
  --var registry-password=<password> \
  --var vmw_username=<vmw_username> \
  --var vmw_password=<vmw_password>

fly -t <target> unpause-pipeline -p build-and-push-terraform-resource-with-tkg-tmc-image

- <target> is the alias for the connection details to a Concourse instance

- <pipeline_repo> is the Git repository that contains the Dockerfile for the container image to be built (e.g., https://github.com/pacphi/tf4k8s-pipelines.git)

- <pipeline_repo_branch> is the aforementioned Git repository's branch (e.g., main)

- <repo-name> is a container image repository prefix (e.g., docker.io or a private registry like harbor.envy.ironleg.me/library)

- <username> and <password> are the credentials of an account with read/write privileges to a container image registry

- <vmw_username> and <vmw_password> are the credentials of an account on my.vmware.com

This image contains commercially licensed software, so you'll need to build it yourself and publish it to a private container image registry.

Create a mirrored directory structure as found underneath tf4k8s/experiments.

You'll want to abide by some convention if you're going to manage multiple environments. Create a subdirectory for each environment you wish to manage. Then mirror the experiments subdirectory structure under each environment directory.

For example:

+ tf4k8s-pipelines-config
  + n00b
    + gcp
      + certmanager
      + cluster
      + dns
      + external-dns
    + k8s
      + nginx-ingress-controller
      + harbor
      + tas4k8s

Place a terraform.tfvars file in each of the leaf subdirectories you wish to drive a terraform plan or apply.

For example:

+ tf4k8s-pipelines-config
  + n00b
    + gcp
      + dns
        - terraform.tfvars

Here's a sample of the contents of the above module's terraform.tfvars file:

terraform.tfvars

project = "fe-cphillipson"

gcp_service_account_credentials = "/tmp/build/put/credentials/gcp-credentials.json"

root_zone_name = "ironleg-zone"

environment_name = "n00b"

dns_prefix = "n00b"
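The directory convention described above can be scaffolded with a few lines of shell. This is a sketch only: the function name `scaffold_env` is hypothetical, and the module list assumes the gcp and k8s grouping implied by the module paths gcp/dns and k8s/harbor used in this README.

```shell
#!/bin/sh
# Hypothetical helper: create the per-environment directory tree and an empty
# terraform.tfvars in each leaf directory.
# Assumption: modules are grouped under gcp/ and k8s/ as listed below.
scaffold_env() {
  root="$1"       # e.g. ./tf4k8s-pipelines-config
  env_name="$2"   # e.g. n00b
  for module in gcp/certmanager gcp/cluster gcp/dns gcp/external-dns \
                k8s/nginx-ingress-controller k8s/harbor k8s/tas4k8s; do
    mkdir -p "$root/$env_name/$module"
    # Never overwrite a terraform.tfvars that has already been filled in.
    [ -f "$root/$env_name/$module/terraform.tfvars" ] \
      || : > "$root/$env_name/$module/terraform.tfvars"
  done
}

# Example usage:
#   scaffold_env ./tf4k8s-pipelines-config n00b
```

Each generated terraform.tfvars is empty and still has to be filled in for the module it sits beside.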

Now we'll want to maintain secrets like a) cloud credentials and b) .kube/config. The following is an example structure when working with Google Cloud Platform and an environment named n00b.

+ s3cr3ts
  + n00b
    + .kube
      - config
    - gcp-credentials.json

Lastly we'll want to maintain state for each Terraform module. We won't need a local directory, but we can use rclone to create a bucket.

We'll use rclone to synchronize your local configuration (and in some instances credentials) with a cloud storage provider of your choice.

Execute rclone config to configure a target storage provider.

You could create a bucket with rclone mkdir <target>:<bucket_name>.

And you could sync with rclone sync -i /path/to/config <target>:<bucket_name>

Bucket names must be unique! Be prepared to append a unique identifier to all bucket names. In the example that follows, replace occurrences of {uid} with your own string of 4 to 10 characters (taking care to exclude special characters).

For example, when working with Google Cloud Storage (GCS)...

rclone mkdir fe-cphillipson-gcs:s3cr3ts-{uid}

rclone sync -i /home/cphillipson/Documents/development/pivotal/tanzu/s3cr3ts fe-cphillipson-gcs:s3cr3ts-{uid}

rclone mkdir fe-cphillipson-gcs:tf4k8s-pipelines-config-{uid}

rclone sync -i /home/cphillipson/Documents/development/pivotal/tanzu/tf4k8s-pipelines-config fe-cphillipson-gcs:tf4k8s-pipelines-config-{uid}

rclone mkdir fe-cphillipson-gcs:tf4k8s-pipelines-state-{uid}

rclone mkdir fe-cphillipson-gcs:tas4k8s-bundles-{uid}

gsutil versioning set on gs://s3cr3ts-{uid}

gsutil versioning set on gs://tf4k8s-pipelines-config-{uid}

gsutil versioning set on gs://tf4k8s-pipelines-state-{uid}

gsutil versioning set on gs://tas4k8s-bundles-{uid}

- When working with GCS you must enable versioning on each bucket
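The {uid} rule can be checked before any bucket is created. The sketch below validates a candidate suffix and prints the four bucket names from the example. The function name `bucket_names` is hypothetical, and restricting the suffix to lowercase letters and digits is a stricter assumption than the text states, chosen because GCS bucket names do not allow uppercase letters.

```shell
#!/bin/sh
# Hypothetical helper: validate a {uid} suffix and print the bucket names
# used in the example above.
# The body runs in a subshell so that LC_ALL=C (which keeps the a-z range
# limited to ASCII lowercase) does not leak into the calling shell.
bucket_names() (
  LC_ALL=C
  uid="$1"
  # Length must be between 4 and 10 characters.
  if [ "${#uid}" -lt 4 ] || [ "${#uid}" -gt 10 ]; then
    echo "uid must be 4 to 10 characters long" >&2
    return 1
  fi
  # Assumption: allow lowercase letters and digits only.
  case "$uid" in
    *[!a-z0-9]*) echo "uid may contain only a-z and 0-9" >&2; return 1 ;;
  esac
  for prefix in s3cr3ts tf4k8s-pipelines-config tf4k8s-pipelines-state tas4k8s-bundles; do
    printf '%s-%s\n' "$prefix" "$uid"
  done
)

# Example usage (assumes an rclone remote named <target> is configured):
#   for b in $(bucket_names ab12); do rclone mkdir "<target>:$b"; done
```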

We'll continue to use the fly CLI to upload pipeline definitions with configuration (in this case we're talking about Concourse YAML configuration).

All pipeline definitions in this repository are found in the pipelines directory. As mentioned, each pipeline is the realization of a definition and configuration (i.e., any value encapsulated in (()) or {{}}), so inspect the YAML for each definition to see what's expected.

Terraform modules are found in the terraform directory.

For convenience we'll want to create a ci sub-directory to collect all our configuration. And for practical purposes we'll want to create a subdirectory structure that mirrors what we created earlier, so something like:

+ tf4k8s-pipelines
  + ci
    + n00b
      + gcp
        - common.yml
        - create-dns.yml
        - create-cluster.yml
        - install-certmanager.yml
        - install-nginx-ingress-controller.yml
        - install-external-dns.yml
        - install-harbor.yml
        - install-tas4k8s.yml

Are you wondering about the content of those files?

Here are a few examples:

common.yml

terraform_resource_with_carvel_image: pacphi/terraform-resource-with-carvel

registry_username: REPLACE_ME

registry_password: REPLACE_ME

pipeline_repo: https://github.com/pacphi/tf4k8s-pipelines.git

pipeline_repo_branch: main

environment_name: n00b

gcp_account_key_json: |
  {
    "type": "service_account",
    "project_id": "REPLACE_ME",
    "private_key_id": "REPLACE_ME",
    "private_key": "-----BEGIN PRIVATE KEY-----\nREPLACE_ME\n-----END PRIVATE KEY-----\n",
    "client_email": "REPLACE_ME.iam.gserviceaccount.com",
    "client_id": "REPLACE_ME",
    "auth_uri": "https://accounts.google.com/o/oauth2/auth",
    "token_uri": "https://accounts.google.com/o/oauth2/token",
    "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
    "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/REPLACE_ME.iam.gserviceaccount.com"
  }

create-dns.yml

terraform_module: gcp/dns

gcp_storage_bucket_folder: gcp/dns

install-harbor.yml

terraform_module: k8s/harbor

gcp_storage_bucket_folder: k8s/harbor

So putting this into practice, if we wanted to create a new Cloud DNS zone in Google Cloud, we could execute

fly -t <target> set-pipeline -p create-dns -c ./pipelines/gcp/terraformer.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/create-dns.yml

fly -t <target> unpause-pipeline -p create-dns

Other pipelines you might execute, in order, to install a TAS 3.0 instance atop a GKE cluster:

fly -t <target> set-pipeline -p create-cluster -c ./pipelines/gcp/terraformer.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/create-cluster.yml

fly -t <target> unpause-pipeline -p create-cluster

fly -t <target> set-pipeline -p install-certmanager -c ./pipelines/gcp/terraformer-with-carvel.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/install-certmanager.yml

fly -t <target> unpause-pipeline -p install-certmanager

fly -t <target> set-pipeline -p install-nginx-ingress-controller -c ./pipelines/gcp/terraformer-with-carvel.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/install-nginx-ingress-controller.yml

fly -t <target> unpause-pipeline -p install-nginx-ingress-controller

fly -t <target> set-pipeline -p install-external-dns -c ./pipelines/gcp/terraformer-with-carvel.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/install-external-dns.yml

fly -t <target> unpause-pipeline -p install-external-dns

fly -t <target> set-pipeline -p install-harbor -c ./pipelines/gcp/terraformer-with-carvel.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/install-harbor.yml

fly -t <target> unpause-pipeline -p install-harbor

fly -t <target> set-pipeline -p install-tas4k8s -c ./pipelines/gcp/tas4k8s.yml -l ./ci/n00b/gcp/common.yml -l ./ci/n00b/gcp/install-tas4k8s.yml

fly -t <target> unpause-pipeline -p install-tas4k8s
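Since the commands above differ only in pipeline name and definition file, they can be generated from a list. The following sketch prints the commands rather than running them, which leaves room to wait for each pipeline to finish before setting the next. The function name `print_pipeline_commands` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print, in order, the fly commands for the pipelines
# listed above for a given target alias and environment name.
print_pipeline_commands() {
  target="$1"     # fly target alias
  env_name="$2"   # e.g. n00b
  for name in create-dns create-cluster install-certmanager \
              install-nginx-ingress-controller install-external-dns \
              install-harbor install-tas4k8s; do
    # Pick the pipeline definition that this README pairs with each pipeline.
    case "$name" in
      create-*)        definition=terraformer.yml ;;
      install-tas4k8s) definition=tas4k8s.yml ;;
      *)               definition=terraformer-with-carvel.yml ;;
    esac
    echo "fly -t $target set-pipeline -p $name -c ./pipelines/gcp/$definition -l ./ci/$env_name/gcp/common.yml -l ./ci/$env_name/gcp/$name.yml"
    echo "fly -t $target unpause-pipeline -p $name"
  done
}

# Example usage:
#   print_pipeline_commands my-target n00b
```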

Admittedly this is a bit of effort to assemble. To help get you started, visit the dist/concourse folder, download and unpack the sample environment template(s). Make sure to update all occurrences of REPLACE_ME within the configuration files.
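A quick way to confirm that no REPLACE_ME placeholder was missed is a recursive search over the configuration directory. This is a minimal sketch: the function name `check_placeholders` is hypothetical, and it assumes a grep that supports `-r` (GNU and BSD grep both do).

```shell
#!/bin/sh
# Hypothetical helper: list files under a directory that still contain the
# REPLACE_ME placeholder and return non-zero if any are found.
check_placeholders() {
  dir="$1"
  # grep -rl prints the name of each matching file and exits 0 on a match.
  if grep -rl 'REPLACE_ME' "$dir"; then
    echo "placeholders remain in the files listed above" >&2
    return 1
  fi
  return 0
}

# Example usage:
#   check_placeholders ./ci/n00b && echo "configuration looks complete"
```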

- All buckets must have versioning enabled!

  - Consult the target provider's documentation for how to do this for each bucket created. (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage)

- Store secrets like your cloud provider credentials or .kube/config (in file format) in a storage bucket.

- Remember to synchronize your local copy of tf4k8s-pipelines-config when an addition or update is made to one or more terraform.tfvars files.

  - Use rclone sync with caution. If you don't want to destroy previous state, use rclone copy instead.

- Remember that you have to git commit and git push updates to the tf4k8s-pipelines git repository any time you make additions/updates to contents under a) pipelines or b) terraform directory trees before executing fly set-pipeline.

- Remember to execute fly set-pipeline any time you a) adapt a pipeline definition or b) edit Concourse configuration.

- When using Concourse terraform-resource, if you choose to include a directory or file, it is rooted from /tmp/build/put.

- After creating a cluster you'll need to create a .kube/config in order to install subsequent capabilities via Helm and Carvel.

  - Consult the output of a create-cluster/terraform-apply job/build.

  - Copy the contents into s3cr3ts/<env>/.kube/config then execute an rclone sync.

- Complete Concourse pipeline definition support for a modest complement of modules found in tf4k8s across

  - AWS (EKS)

  - Azure (AKS)

  - GCP (GKE)

  - TKG (Azure)

  - TKG (AWS)

- Adapt existing Concourse pipeline definitions to

  - encrypt, mask and securely source secrets (e.g., cloud credentials, .kube/config)

  - add smoke-tests

- Explore implementation of pipeline definitions supporting other engines

  - Jenkins

  - Tekton

  - Argo