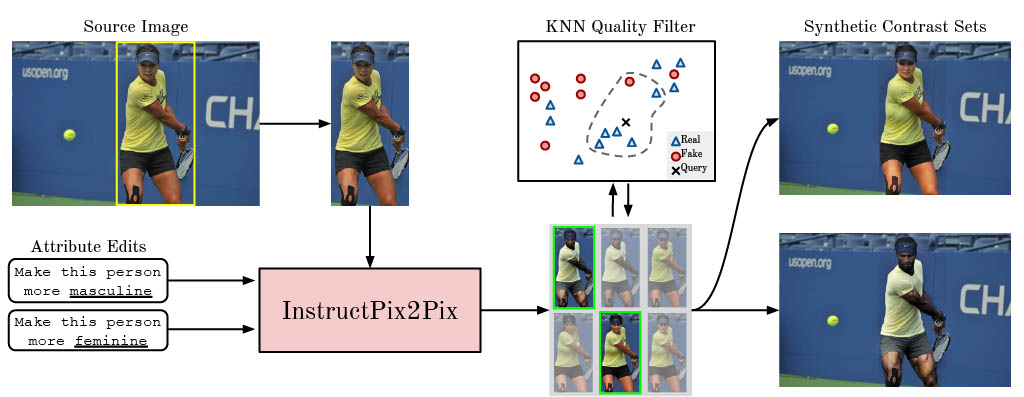

This repository hosts the code associated with our research project, entitled "Balancing the Picture: Debiasing Vision-Language Datasets with Synthetic Contrast Sets". Our research explores the use of synthetic contrast sets for debiasing vision-language datasets.

The authors of this project include:

- Brandon Smith

- Miguel Farinha

- Siobhan Mackenzie Hall

- Hannah Rose Kirk

- Aleksandar Shtedritski

- Max Bain

For any inquiries or further information, please contact our corresponding authors, Brandon Smith, brandonsmithpmpuk@gmail.com, or Miguel Farinha, miguelffarinha@gmail.com.

Details of our paper can be found in the link here.

Several packages are required to run these scripts. They can be installed with conda and pip via the following commands:

$ conda install --yes -c pytorch pytorch=1.7.1 torchvision cudatoolkit=11.0

$ pip install ftfy regex tqdm Pillow

$ pip install git+https://github.com/openai/CLIP.git

$ pip install git+https://github.com/oxai/debias-vision-lang

$ pip install transformers

$ pip install scikit-learn

$ pip install pandas

$ pip install open_clip_torch

measure_bias.py operates on the COCO val 2017 image dataset to measure the bias metrics, Bias@K and MaxSkew@K.
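For readers unfamiliar with the two metrics, the sketch below shows how they are commonly defined in the retrieval-fairness literature. It is an illustration under stated assumptions, not the code used in this repository: the function names, the "male"/"female"/"neutral" label strings, and the 50/50 desired distribution are all hypothetical, and measure_bias.py may compute or aggregate the metrics differently.

```python
import math
from collections import Counter


def bias_at_k(ranked_labels, k):
    """Bias@K for one query: (N_male - N_female) / (N_male + N_female) in the top K.

    Assumed definition; returns 0.0 when no gendered item appears in the top K.
    """
    top = ranked_labels[:k]
    n_male = sum(1 for label in top if label == "male")
    n_female = sum(1 for label in top if label == "female")
    total = n_male + n_female
    return 0.0 if total == 0 else (n_male - n_female) / total


def max_skew_at_k(ranked_labels, k, desired=None):
    """MaxSkew@K: the largest log-ratio of observed to desired attribute share.

    Assumed definition; shares are computed over the labelled items in the top K.
    """
    if desired is None:
        desired = {"male": 0.5, "female": 0.5}  # hypothetical target distribution
    top = [label for label in ranked_labels[:k] if label in desired]
    if not top:
        return 0.0
    counts = Counter(top)
    skews = []
    for attribute, p_desired in desired.items():
        p_observed = counts[attribute] / len(top)
        if p_observed > 0:  # skip attributes that never appear (log of 0 is undefined)
            skews.append(math.log(p_observed / p_desired))
    return max(skews)


# Hypothetical ranking of retrieved images for one gender-neutral query.
ranking = ["male", "male", "female", "male", "neutral", "female"]
print(bias_at_k(ranking, 4))      # 3 male vs 1 female in the top 4
print(max_skew_at_k(ranking, 4))  # log(0.75 / 0.5)
```

Under these definitions, both metrics are 0 when the top K results are perfectly balanced, and they grow as one attribute dominates the ranking.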

The script can be used by running the following command:

python measure_bias.py [options]

The available options are:

- --model: Specifies the model type. Choices include "bert", "random", "tfidf", "clip", "clip-clip100", "debias-clip". The default value is "bert".
- --balanced_coco: A flag that indicates whether to use a balanced COCO dataset. If this option is included in the command, the script will use a balanced COCO dataset.
- --data_dir: Specifies the data directory. The default value is "/tmp/COCO2017".

The data_dir must be structured as follows:

    coco
    │
    ├─── annotations
    │    │   captions_val2017.json
    │    │   captions_train2017.json
    │
    └─── images
         │   *.jpg

The script will output bias measurements to the console.
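Before running the script, it can help to confirm that the data directory matches the layout described above. The following is a minimal sketch, assuming that data_dir points at the root shown as coco in the tree; the helper name missing_coco_paths is hypothetical and is not part of this repository.

```python
from pathlib import Path


def missing_coco_paths(data_dir):
    """Return the expected entries that are absent under data_dir (empty list if none)."""
    root = Path(data_dir)
    missing = []
    # Both caption files are listed under annotations/ in the documented layout.
    for name in ("captions_val2017.json", "captions_train2017.json"):
        path = root / "annotations" / name
        if not path.is_file():
            missing.append(path.relative_to(root).as_posix())
    # The images directory must exist and contain at least one .jpg file.
    images = root / "images"
    if not images.is_dir() or not any(images.glob("*.jpg")):
        missing.append("images/*.jpg")
    return missing


if __name__ == "__main__":
    # "/tmp/COCO2017" is the documented default for --data_dir.
    problems = missing_coco_paths("/tmp/COCO2017")
    print("layout looks complete" if not problems else f"missing: {problems}")
```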

gensynth_measure_bias.py measures the bias metrics, Bias@K and MaxSkew@K, over either the Stable Diffusion-edited images generated from the COCO train 2017 image dataset or the original COCO train 2017 images whose unique IDs were used to generate the edited images.

To use the script, run the following command:

python gensynth_measure_bias.py [options]

The available options are:

- --model: Specifies the model type. Choose from the following options: "random", "clip", "open-clip", "clip-clip100", "debias-clip". The default value is "clip".
- --balanced_coco: A flag that indicates whether to use a balanced COCO dataset. Include this option in the command if you want to use a balanced COCO dataset.
- --dataset: Specifies the dataset to compute Bias@K and MaxSkew@K for. Choose either "gensynth" or "gensynth-coco".
- --gensynth_data_dir: Specifies the directory for GenSynth data. This should include the gensynth.json file and edited image subdirectories. It is a required argument.
- --coco_data_dir: Specifies the directory for COCO data. It is a required argument.

The directory structure for --coco_data_dir should be the same as for data_dir above.

Please note that, compared with measure_bias.py, the --model option adds the "open-clip" choice, and the --dataset, --gensynth_data_dir, and --coco_data_dir options are new.

The --dataset option accepts two values:

- gensynth-coco tells the script to compute the bias metrics on the unique COCO train 2017 image IDs corresponding to the images in the --gensynth_data_dir.
- gensynth tells the script to compute the bias metrics over all the edited images in --gensynth_data_dir.
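The documented options of gensynth_measure_bias.py can be summarised as an argparse parser. This is a hypothetical reconstruction based only on the option descriptions in this README; the actual script may define its arguments differently, and the default for --dataset is not documented, so none is set here. The paths in the example call are illustrative.

```python
import argparse


def build_parser():
    """Sketch of a parser mirroring the options documented for gensynth_measure_bias.py."""
    parser = argparse.ArgumentParser(prog="gensynth_measure_bias.py")
    parser.add_argument(
        "--model",
        choices=["random", "clip", "open-clip", "clip-clip100", "debias-clip"],
        default="clip",
    )
    # Flag: present means "use the balanced COCO dataset".
    parser.add_argument("--balanced_coco", action="store_true")
    # No default is documented for --dataset, so it is left unset (None) here.
    parser.add_argument("--dataset", choices=["gensynth", "gensynth-coco"])
    parser.add_argument("--gensynth_data_dir", required=True)
    parser.add_argument("--coco_data_dir", required=True)
    return parser


# Example: evaluate open-clip on the edited images only.
args = build_parser().parse_args([
    "--model", "open-clip",
    "--dataset", "gensynth",
    "--gensynth_data_dir", "data_gensynth",
    "--coco_data_dir", "/tmp/COCO2017",
])
print(args.model, args.dataset, args.balanced_coco)
```

The same argument list, passed on the command line after python gensynth_measure_bias.py, corresponds to the invocation pattern described above.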

- You can download the GenSynth dataset described in our paper from this link.

- After downloading the dataset, you need to extract it. You can do so with:

  unzip data_gensynth.zip

  This will extract all the files into a new data_gensynth directory in your current location.

This project is licensed under the MIT License. Please see the LICENSE file for more information.

We wish to acknowledge the support and contributions of everyone who made this project possible.

Miguel Farinha acknowledges the support of Fundação para a Ciência e Tecnologia (FCT), through the Ph.D. grant 2022.12484.BD. Aleksandar Shtedritski acknowledges the support of EPSRC Centre for Doctoral Training in Autonomous Intelligent Machines & Systems [EP/S024050/1]. Hannah Rose Kirk acknowledges the support of the Economic and Social Research Council Grant for Digital Social Science [ES/P000649/1].

This work has been supported by the Oxford Artificial Intelligence student society. For computing resources, the authors are grateful for support from Google Cloud and the CURe Programme under Google Brain Research, as well as an AWS Responsible AI Grant.

@misc{smith2023balancing,
  title={Balancing the Picture: Debiasing Vision-Language Datasets with Synthetic Contrast Sets},
  author={Brandon Smith and Miguel Farinha and Siobhan Mackenzie Hall and Hannah Rose Kirk and Aleksandar Shtedritski and Max Bain},
  year={2023},
  eprint={2305.15407},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}