Traditional-Chinese Alpaca

This repo aims to share resources for building Traditional-Chinese instruction-following language models (for research purposes only). This repo contains:

- A Traditional-Chinese version of the Alpaca dataset with English alignment. See the dataset section for details. Our very simple alignment technique could work for other languages as well.

- Code for training and running inference with the Traditional-Chinese Alpaca-LoRA.

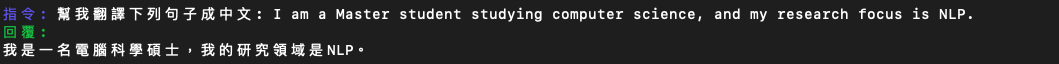

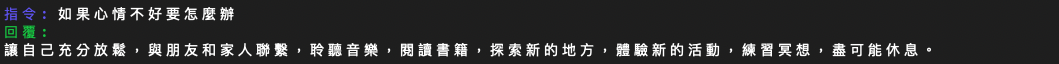

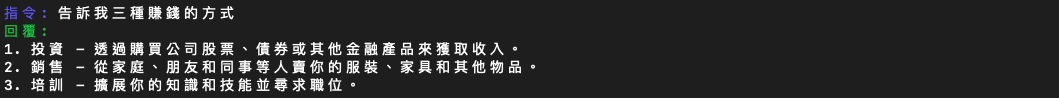

Above are some good examples generated by our 7B Traditional-Chinese Alpaca-LoRA.

Dataset

We translate the Stanford Alpaca 52k dataset directly to Traditional Chinese via the ChatGPT API (gpt-3.5-turbo), which cost us roughly 40 USD.
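A minimal sketch of the translation step, assuming each record uses the Stanford Alpaca fields `instruction`, `input`, and `output`. The `translate` callable is a hypothetical stand-in for the call to the ChatGPT API, and `fake_translate` exists only to make the example runnable; neither is taken from this repo's code.

```python
from typing import Callable, Dict, List, Sequence

Record = Dict[str, str]


def translate_records(
    records: List[Record],
    translate: Callable[[str], str],
    fields: Sequence[str] = ("instruction", "input", "output"),
) -> List[Record]:
    """Return copies of the records with the chosen fields translated.

    `translate` is a hypothetical function that maps English text to
    Traditional Chinese (e.g., a wrapper around a chat-completion API).
    """
    translated = []
    for record in records:
        new_record = dict(record)  # leave the original record untouched
        for field in fields:
            text = record.get(field, "")
            if text:  # empty fields (e.g., no input) are kept empty
                new_record[field] = translate(text)
        translated.append(new_record)
    return translated


def fake_translate(text: str) -> str:
    """Placeholder translator used only for this demonstration."""
    return f"[zh-TW] {text}"


sample = [
    {
        "instruction": "Name three primary colors.",
        "input": "",
        "output": "Red, blue, and yellow.",
    }
]

# Every field translated.
full = translate_records(sample, fake_translate)

# Instruction left in English; only input and output translated.
english_instruction = translate_records(
    sample, fake_translate, fields=("input", "output")
)
```

Passing a different `fields` tuple is one way to produce a variant in which the instruction stays in English while the rest of the record is translated.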

Specifically, this repo includes three datasets:

1. A Traditional-Chinese version of the Alpaca dataset. --> alpaca-tw.json

2. The same dataset as 1., except the instruction part is left in English. --> alpaca-tw_en_instruction.json

3. An aligned dataset, which simply combines 1. and 2. --> alpaca-tw_en-align.json

In our preliminary experiments, fine-tuning with only the Traditional-Chinese dataset (i.e., dataset 1.) does not yield ideal results (e.g., degeneration, poor understanding). As LLaMA is trained primarily on English corpora, its ability to understand other languages may require further alignment.

To this end, we create a Traditional-Chinese version of the Alpaca dataset with English alignment (i.e., dataset 3.), where, besides the instruction-following task, the model can learn Chinese-English translation implicitly. The examples above are produced by training with this aligned dataset.
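The aligned dataset can be sketched as follows. This assumes that "combining" means plain concatenation of the fully translated records and the English-instruction records; the repo may order or interleave them differently. The function name and the sample records are illustrative only.

```python
import json
from typing import Dict, List

Record = Dict[str, str]


def build_aligned(chinese: List[Record], english_instruction: List[Record]) -> List[Record]:
    """Concatenate the two datasets (an assumed reading of "combines")."""
    return list(chinese) + list(english_instruction)


# Illustrative records: same output, instruction in Chinese vs. English.
zh = [{"instruction": "列出三種原色。", "input": "", "output": "紅色、藍色和黃色。"}]
en = [{"instruction": "Name three primary colors.", "input": "", "output": "紅色、藍色和黃色。"}]

aligned = build_aligned(zh, en)

# ensure_ascii=False keeps the Chinese characters readable in the JSON text.
print(json.dumps(aligned, ensure_ascii=False, indent=2))
```

Because each task then appears once with a Chinese instruction and once with an English instruction but the same Chinese output, the model sees paired examples that tie the two languages together.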

We hypothesize that for some languages (e.g., Spanish, Portuguese) that share subword vocabulary with English, simply fine-tuning with the translated Alpaca dataset would give great performance.

Training

The code for training the Traditional-Chinese Alpaca-LoRA is available here. It is largely based on Alpaca-LoRA and Cabrita. Our training is done on a single RTX 3090.
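As an illustration of how one record might be turned into a training prompt, here is a sketch that uses the English prompt template published with Stanford Alpaca. Whether this repo's training code uses the same template, or a translated one, is an assumption; `build_prompt` is a hypothetical helper name.

```python
from typing import Dict

# Templates as published with Stanford Alpaca (assumed, not confirmed, for this repo).
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_prompt(record: Dict[str, str]) -> str:
    """Format one record as a prompt; the output is appended during training."""
    if record.get("input"):
        return PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=record["input"]
        )
    return PROMPT_NO_INPUT.format(instruction=record["instruction"])


record = {"instruction": "列出三種原色。", "input": "", "output": "紅色、藍色和黃色。"}
prompt = build_prompt(record)
training_text = prompt + record["output"]
```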

Inference

The code for running inference with the trained model is available here.

Next

- Fine-tune various multi-lingual foundation models (e.g., bloomz-7b1).

- Construct a large-scale Traditional-Chinese instruction-following dataset.

- Construct domain-specific Traditional-Chinese instruction-following datasets.

Please feel free to reach out (contact[at]nlg.csie.ntu.edu.tw) if you are interested in any form of collaboration!

Reference

A large portion of our work relies on or is motivated by LLaMA, Stanford Alpaca, Alpaca-LoRA, ChatGPT, Hugging Face, and Cabrita. We thank the incredible individuals, groups, and communities for making their amazing work available!

Citation

If you use the data or code from this repo, please cite this repo as follows:

@misc{traditional-chinese-alpaca,
  author = {Wei-Lin Chen and Cheng-Kuang Wu and Hsin-Hsi Chen},
  title = {Traditional-Chinese Alpaca: Models and Datasets},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/ntunlplab/traditional-chinese-alpaca}},
}