Check out the YouTube video below for more details.

This repository contains:

- Code to preprocess and prepare the GRAB data for the GRIP paper

- Code to retrain GRIP models with a modified training configuration

- Code to generate results on the test split of the data

- Tools to visualize and save generated sequences from GRIP

The models are built in PyTorch 1.7.1 and tested on Ubuntu 20.04 (Python 3.8, CUDA 11.0).

Some of the requirements are:

- Python == 3.8

- PyTorch == 1.7.1

- SMPL-X

- bps_torch

- psbody-mesh
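A quick way to confirm that an environment matches the pinned versions is sketched below. It is only an illustration: the helper `check_pins` is hypothetical (not part of this repository), and the distribution name `torch` for PyTorch is an assumption about how the package was installed.

```python
from importlib import metadata

# Pin taken from the requirements list above.
# Assumption: PyTorch is installed under the distribution name "torch".
PINNED = {"torch": "1.7.1"}


def check_pins(pins, get_version=metadata.version):
    """Return {package: (wanted, found)} for every pin that is not satisfied."""
    mismatches = {}
    for name, wanted in pins.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None  # package is not installed at all
        # Ignore a local build suffix, so "1.7.1+cu110" still matches "1.7.1".
        if found is None or found.split("+")[0] != wanted:
            mismatches[name] = (wanted, found)
    return mismatches
```

Calling `check_pins(PINNED)` returns an empty dictionary when every pin is satisfied, and otherwise maps each offending package to the wanted and installed versions.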

To install the requirements please follow the next steps:

- Clone this repository and install the requirements:

git clone https://github.com/otaheri/GRIP

cd GRIP

pip install -r requirements.txt

If installing the psbody-mesh package fails, please follow the instructions in its original repository to install it from source.

In order to use GRIP please follow the steps below:

- Download the GRAB dataset from our website and make sure to follow the steps there.

- Follow the instructions on the SMPL-X website to download SMPL-X models.

- Check the examples below to process the required data, use pretrained GRIP models, and train GRIP models.

- Download the GRAB dataset from the GRAB website, and follow the instructions there to extract the files.

- Process the data by running the command below.

python data/process_data.py --grab-path /path/to/GRAB --smplx-path /path/to/smplx/models/ --out-path /the/output/path
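Because processing depends on two input folders, it can help to check them before launching the script. The sketch below wraps the command above; the helpers `build_process_cmd` and `missing_inputs` are hypothetical, and only the script path and the three flags come from this README.

```python
import sys
from pathlib import Path


def missing_inputs(grab_path, smplx_path):
    """Return the input directories that do not exist, so typos fail early."""
    return [str(p) for p in (Path(grab_path), Path(smplx_path)) if not p.is_dir()]


def build_process_cmd(grab_path, smplx_path, out_path, python=sys.executable):
    """Build the argument list for data/process_data.py with the flags shown above."""
    return [
        python, "data/process_data.py",
        "--grab-path", str(grab_path),
        "--smplx-path", str(smplx_path),
        "--out-path", str(out_path),
    ]
```

If `missing_inputs(...)` returns an empty list, the result of `build_process_cmd(...)` can be passed to `subprocess.run(cmd, check=True)` from the repository root.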

- Please download the pretrained GRIP models (anet.pt, cnet.pt, and rnet.pt) from our website and put them in the snapshots folder as shown below.

GRIP
├── snapshots
│   ├── anet.pt
│   ├── cnet.pt
│   └── rnet.pt
.
.
.
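The layout above can be verified with a few lines of Python before running any of the scripts. This is a minimal sketch: the helper `missing_snapshots` is hypothetical, and it assumes the `snapshots` folder sits directly under the repository root as drawn in the tree.

```python
from pathlib import Path

# File names taken from the directory tree above.
EXPECTED_SNAPSHOTS = ("anet.pt", "cnet.pt", "rnet.pt")


def missing_snapshots(repo_root):
    """Return the expected checkpoint files that are absent from <repo_root>/snapshots."""
    snap_dir = Path(repo_root) / "snapshots"
    return [name for name in EXPECTED_SNAPSHOTS if not (snap_dir / name).is_file()]
```

An empty list from `missing_snapshots("/path/to/GRIP")` means all three files are in place.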

- Run inference with CNet using the command below.

python train/infer_cnet.py --work-dir /path/to/work/dir --grab-path /path/to/GRAB --smplx-path /path/to/models/ --dataset-dir /path/to/processed/data

- To retrain these models with a new configuration, please use the following command.

python train/train_cnet.py --work-dir /path/to/work/dir --grab-path /path/to/GRAB --smplx-path /path/to/models/ --dataset-dir /path/to/processed/data --expr-id EXPERIMENT_ID

- Run inference with ANet using the command below.

python train/infer_anet.py --work-dir /path/to/work/dir --grab-path /path/to/GRAB --smplx-path /path/to/models/ --dataset-dir /path/to/processed/data

- To retrain ANet with a new configuration, please use the following command.

python train/train_anet.py --work-dir /path/to/work/dir --grab-path /path/to/GRAB --smplx-path /path/to/models/ --dataset-dir /path/to/processed/data --expr-id EXPERIMENT_ID
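The four commands above share the same flags and differ only in the script name and the optional `--expr-id`. A small launcher can make that explicit. The sketch below is illustrative: `build_cmd` and the `SCRIPTS` table are hypothetical helpers, and whether `--expr-id` is mandatory for training is not stated here, so it is appended only when given.

```python
import sys

# Script paths as they appear in the commands above.
SCRIPTS = {
    ("infer", "cnet"): "train/infer_cnet.py",
    ("train", "cnet"): "train/train_cnet.py",
    ("infer", "anet"): "train/infer_anet.py",
    ("train", "anet"): "train/train_anet.py",
}


def build_cmd(mode, model, work_dir, grab_path, smplx_path, dataset_dir,
              expr_id=None, python=sys.executable):
    """Build the argument list for one of the GRIP train/infer scripts."""
    script = SCRIPTS[(mode, model)]  # raises KeyError for an unknown combination
    cmd = [
        python, script,
        "--work-dir", str(work_dir),
        "--grab-path", str(grab_path),
        "--smplx-path", str(smplx_path),
        "--dataset-dir", str(dataset_dir),
    ]
    # --expr-id appears only in the training commands above; add it when provided.
    if expr_id is not None:
        cmd += ["--expr-id", str(expr_id)]
    return cmd
```

For example, `build_cmd("train", "anet", work_dir, grab, smplx, data, expr_id="EXPERIMENT_ID")` yields a list that can be handed to `subprocess.run(cmd, check=True)` from the repository root.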

@inproceedings{taheri2024grip,

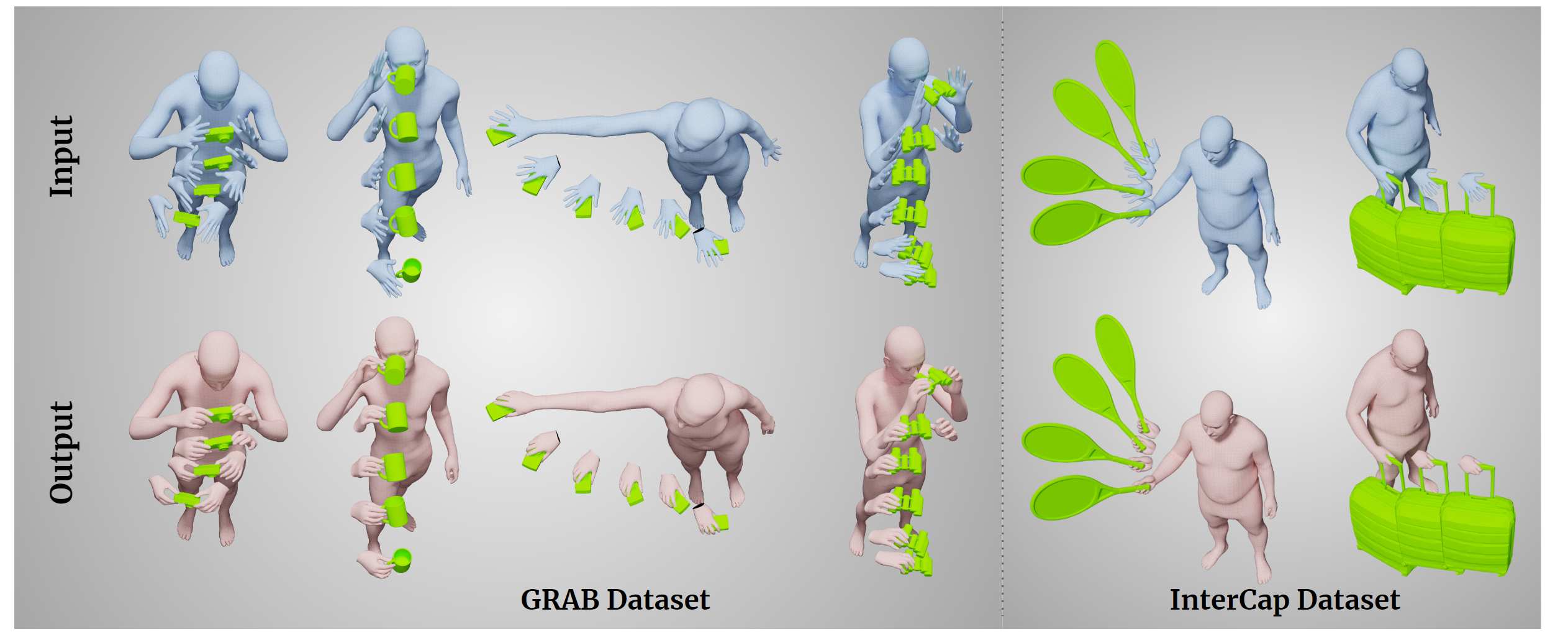

title = {{GRIP}: Generating Interaction Poses Using Latent Consistency and Spatial Cues},

author = {Omid Taheri and Yi Zhou and Dimitrios Tzionas and Yang Zhou and Duygu Ceylan and Soren Pirk and Michael J. Black},

booktitle = {International Conference on 3D Vision ({3DV})},

year = {2024},

url = {https://grip.is.tue.mpg.de}

}

Software Copyright License for non-commercial scientific research purposes. Please read carefully the LICENSE page for the terms and conditions and any accompanying documentation before you download and/or use the GRIP data, model and software, (the "Data & Software"), including 3D meshes (body and objects), images, videos, textures, software, scripts, and animations. By downloading and/or using the Data & Software (including downloading, cloning, installing, and any other use of the corresponding github repository), you acknowledge that you have read and agreed to the LICENSE terms and conditions, understand them, and agree to be bound by them. If you do not agree with these terms and conditions, you must not download and/or use the Data & Software. Any infringement of the terms of this agreement will automatically terminate your rights under this LICENSE.

This work was partially supported by Adobe Research (during the first author's internship), the International Max Planck Research School for Intelligent Systems (IMPRS-IS), and the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B.

We thank:

- Tsvetelina Alexiadis and Alpár Cseke for the Mechanical Turk experiments.

- Benjamin Pellkofer for website design, IT, and web support.

The code of this repository was implemented by Omid Taheri.

For questions, please contact grip@tue.mpg.de.

For commercial licensing (and all related questions for business applications), please contact ps-licensing@tue.mpg.de.