This is the official codebase of AbSViT, from the following paper:

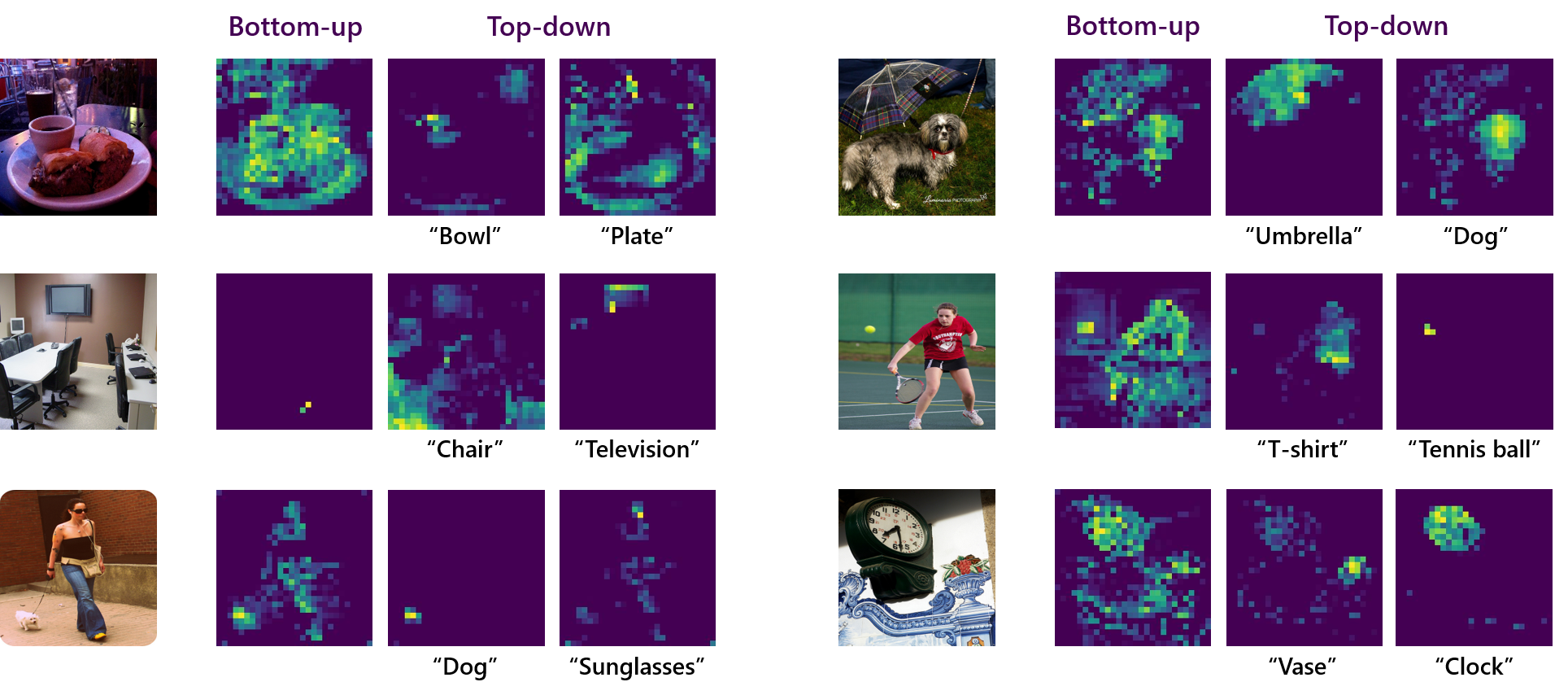

Top-Down Visual Attention from Analysis by Synthesis, CVPR 2023

Baifeng Shi, Trevor Darrell, and Xin Wang

UC Berkeley, Microsoft Research

- Finetuning on Vision-Language datasets

Install PyTorch 1.7.0+ and torchvision 0.8.1+ from the official website.

requirements.txt lists all the dependencies:

pip install -r requirements.txt

In addition, install the magickwand library:

apt-get install libmagickwand-dev

demo/demo.ipynb gives an example of visualizing AbSViT's attention map on single-object and multi-object images.

| Name | ImageNet | ImageNet-C (↓) | PASCAL VOC | Cityscapes | ADE20K | Weights |
|---|---|---|---|---|---|---|
| ViT-Ti | 72.5 | 71.1 | - | - | - | model |
| AbSViT-Ti | 74.1 | 66.7 | - | - | - | model |
| ViT-S | 80.1 | 54.6 | - | - | - | model |
| AbSViT-S | 80.7 | 51.6 | - | - | - | model |
| ViT-B | 80.8 | 49.3 | 80.1 | 75.3 | 45.2 | model |
| AbSViT-B | 81.0 | 48.3 | 81.3 | 76.8 | 47.2 | model |
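To make the comparison in the table easier to read, the following minimal sketch computes the difference between each AbSViT model and its ViT baseline on the ImageNet and ImageNet-C columns. The numbers are copied from the table; the `RESULTS` dictionary and the `deltas` helper are hypothetical names used only for illustration and are not part of this codebase.

```python
# ImageNet and ImageNet-C (lower is better) values copied from the table above.
# Each entry is (imagenet, imagenet_c). RESULTS and deltas() are illustrative
# names, not part of the AbSViT codebase.
RESULTS = {
    "Ti": {"ViT": (72.5, 71.1), "AbSViT": (74.1, 66.7)},
    "S": {"ViT": (80.1, 54.6), "AbSViT": (80.7, 51.6)},
    "B": {"ViT": (80.8, 49.3), "AbSViT": (81.0, 48.3)},
}


def deltas(results):
    """Return {size: (imagenet_delta, imagenet_c_delta)} as AbSViT minus ViT."""
    out = {}
    for size, rows in results.items():
        base_acc, base_c = rows["ViT"]
        abs_acc, abs_c = rows["AbSViT"]
        # Round to one decimal to match the precision reported in the table.
        out[size] = (round(abs_acc - base_acc, 1), round(abs_c - base_c, 1))
    return out


if __name__ == "__main__":
    for size, (d_acc, d_c) in deltas(RESULTS).items():
        print(f"{size}: ImageNet {d_acc:+.1f}, ImageNet-C {d_c:+.1f}")
```

A positive ImageNet difference and a negative ImageNet-C difference both favor AbSViT, since the ImageNet-C column is marked as lower-is-better.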

For example, to evaluate AbSViT_small on ImageNet, run

python main.py --model absvit_small_patch16_224 --data-path path/to/imagenet --eval --resume path/to/checkpoint

To evaluate on robustness benchmarks, add one of --inc_path /path/to/imagenet-c, --ina_path /path/to/imagenet-a, --inr_path /path/to/imagenet-r, or --insk_path /path/to/imagenet-sketch to test on ImageNet-C, ImageNet-A, ImageNet-R, or ImageNet-Sketch, respectively.

To test accuracy under adversarial attacks, add --fgsm_test or --pgd_test.
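The evaluation options above can be combined with the base evaluation command in a small script. The sketch below is a hypothetical helper (`build_eval_command` is not part of this codebase) that only assembles the argument list from the flags named in this README. It assumes that one extra option is added per run, because the README does not say whether a robustness flag and an attack flag can be used together.

```python
import shlex

# Flags as named in this README; the mapping keys are illustrative labels.
ROBUSTNESS_FLAGS = {
    "imagenet-c": "--inc_path",
    "imagenet-a": "--ina_path",
    "imagenet-r": "--inr_path",
    "imagenet-sketch": "--insk_path",
}
ATTACK_FLAGS = {"fgsm": "--fgsm_test", "pgd": "--pgd_test"}


def build_eval_command(model, data_path, checkpoint,
                       benchmark=None, benchmark_path=None, attack=None):
    """Assemble the evaluation command as a list of arguments (hypothetical helper)."""
    if benchmark is not None and attack is not None:
        # Conservative assumption: the README only describes adding one option.
        raise ValueError("choose either a robustness benchmark or an attack")
    cmd = ["python", "main.py", "--model", model, "--data-path", data_path,
           "--eval", "--resume", checkpoint]
    if benchmark is not None:
        if benchmark_path is None:
            raise ValueError("benchmark_path is required with benchmark")
        cmd += [ROBUSTNESS_FLAGS[benchmark], benchmark_path]
    if attack is not None:
        cmd.append(ATTACK_FLAGS[attack])
    return cmd


if __name__ == "__main__":
    cmd = build_eval_command(
        "absvit_small_patch16_224", "path/to/imagenet", "path/to/checkpoint",
        benchmark="imagenet-c", benchmark_path="/path/to/imagenet-c",
    )
    # Print a shell-ready string; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```

Returning a list rather than a string keeps paths with spaces intact if the command is later passed to `subprocess.run`.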

Please see segmentation for instructions.

Take AbSViT_small as an example. We use a single node with 8 GPUs for training:

python -m torch.distributed.launch --nproc_per_node=8 --master_port 12345 main.py --model absvit_small_patch16_224 --data-path path/to/imagenet --output_dir output/here --num_workers 8 --batch-size 128 --warmup-epochs 10

To train a different model architecture, change the --model argument. We provide ViT_{tiny, small, base} and AbSViT_{tiny, small, base}.

Please see vision_language for instructions.

This codebase is built upon the official code of "Visual Attention Emerges from Recurrent Sparse Reconstruction" and "Towards Robust Vision Transformer".