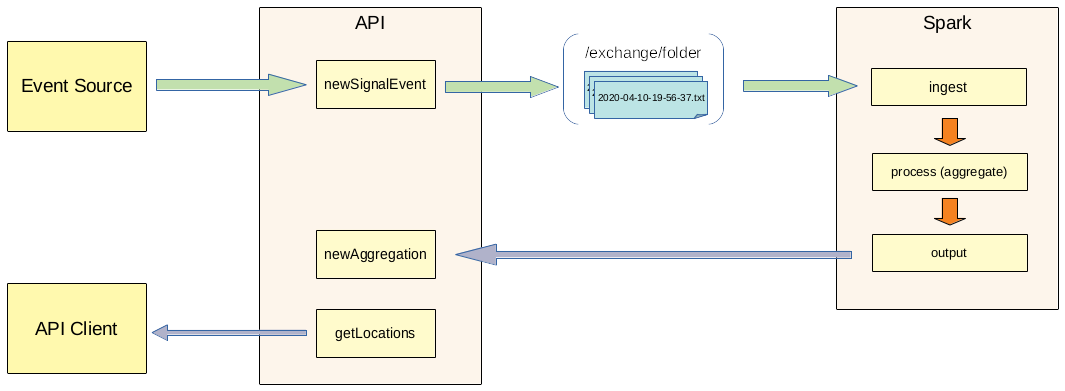

Streaming data analysis. This repository contains a Spark client/job and an API that ingests data into it and serves the results to users. The application code can be used for educational purposes.

Suppose that there are certain signals or events that come from some source system. The task is to do some aggregation on the fly and keep current results available for users to view. Signal schema:

    {
      "id_sample": "95ggm",         // item identifier
      "num_id": "fcc#wr995",        // item serial number
      "id_location": "1564.9956",   // location id, can be a name
      "id_signal_par": "0xvckkep",  // sensor generating the signal
      "id_detected": "None",        // status: "None" = functional, "Nan" = failed
      "id_class_det": "req44"       // failure type
    }
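To make the schema concrete, here is a minimal Python sketch of parsing one event. It is an illustration rather than the repository's own code: the `SignalEvent` class and `parse_signal` function are hypothetical names, and the input is assumed to be plain JSON without the `//` comments shown above, since standard JSON parsers reject comments.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalEvent:
    """One signal event; field names follow the schema above."""
    id_sample: str
    num_id: str
    id_location: str
    id_signal_par: str
    id_detected: str
    id_class_det: str

    @property
    def is_functional(self) -> bool:
        # Per the schema comments: "None" means functional, "Nan" means failed.
        return self.id_detected == "None"


def parse_signal(line: str) -> SignalEvent:
    """Parse one JSON-encoded event.

    Raises TypeError if a field is missing or an unknown field is present.
    """
    return SignalEvent(**json.loads(line))


raw = (
    '{"id_sample": "95ggm", "num_id": "fcc#wr995", '
    '"id_location": "1564.9956", "id_signal_par": "0xvckkep", '
    '"id_detected": "None", "id_class_det": "req44"}'
)
event = parse_signal(raw)
```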

The basic requirement is to find the current number of functional and failed signals (id_detected) per location (id_location).
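The requirement boils down to counting events per (id_location, id_detected) pair. A minimal sketch in plain Python, assuming events arrive as dictionaries; `count_by_location` is a hypothetical helper, and the repository itself does this with Spark rather than with `collections.Counter`:

```python
from collections import Counter


def count_by_location(events):
    """Return {(id_location, id_detected): count} for an iterable of event dicts."""
    return Counter((e["id_location"], e["id_detected"]) for e in events)


events = [
    {"id_location": "1564.9956", "id_detected": "None"},
    {"id_location": "1564.9956", "id_detected": "Nan"},
    {"id_location": "1564.9956", "id_detected": "None"},
    {"id_location": "2001.0001", "id_detected": "Nan"},
]
counts = count_by_location(events)
```

Here `counts[("1564.9956", "None")]` is the number of functional signals at that location, and a pair that never occurred simply reads as 0.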

There are 3 components (applications) in this repository:

The first application, sparkclient, contains the Spark driver program and the event stream processing logic (aggregation). It has been tested in local mode only.

It ingests data from a local folder (configurable within the properties file). The folder is monitored for new files and the new files are ingested as a stream.
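The folder-monitoring idea can be illustrated without Spark. The sketch below is a plain-Python stand-in, not the Spark file source: it remembers which file names it has already seen and reports only the new ones on each poll. The `new_files` function and the file names are hypothetical.

```python
import os
import tempfile


def new_files(folder, seen):
    """Return names of regular files in `folder` not yet in `seen`, and mark them seen."""
    current = sorted(
        name for name in os.listdir(folder)
        if os.path.isfile(os.path.join(folder, name))
    )
    fresh = [name for name in current if name not in seen]
    seen.update(fresh)
    return fresh


with tempfile.TemporaryDirectory() as folder:
    seen = set()
    # A first file appears and is picked up by the first poll.
    open(os.path.join(folder, "batch-1.txt"), "w").close()
    first = new_files(folder, seen)
    # A second file appears; only that one is reported by the next poll.
    open(os.path.join(folder, "batch-2.txt"), "w").close()
    second = new_files(folder, seen)
```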

To be parsed, this data must have a schema matching the signal event (see the data model above).

Parsed data is then grouped by id_location and id_detected to find the total number (count) of functional and failed events for each location.

Once grouped, the data is sent to an HTTP endpoint (see the API methods below).
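As a rough illustration of the last step, the sketch below builds an HTTP POST carrying one location's counts. The URL, the payload field names (`functional`, `failed`), and the `build_aggregation_request` helper are all assumptions made for the example; the actual endpoint and payload format are defined by the API application.

```python
import json
import urllib.request

# Hypothetical endpoint; the real host, port, and path depend on the API's configuration.
API_URL = "http://localhost:8080/newAggregation"


def build_aggregation_request(id_location, functional, failed, url=API_URL):
    """Build (but do not send) a JSON POST request with one location's counts."""
    payload = {
        "id_location": id_location,
        "functional": functional,  # assumed field name
        "failed": failed,          # assumed field name
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_aggregation_request("1564.9956", functional=2, failed=1)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs without a server.
```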

The API application exposes the following methods:

- newSignalEvent - accept a new signal event and process it: accumulate events into batches and then dump them into a file
- get - get aggregation results by location
- getLocations - get aggregations for all known locations
- newAggregation - accept a new aggregation and add or update it in memory to make it available to API clients
- clearAllAggregations - remove all aggregation results from memory (reset / start over)
- getEventsCount - get the total number of events processed
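The aggregation-related methods above amount to a small in-memory store keyed by location. A minimal sketch, assuming each aggregation is a pair of functional and failed counts; the `AggregationStore` class is hypothetical, and deriving the event count from the stored aggregations is an assumption (the real API may track it separately). A real service would also need to guard the dictionary against concurrent access.

```python
class AggregationStore:
    """In-memory aggregation results, keyed by id_location."""

    def __init__(self):
        self._by_location = {}

    def new_aggregation(self, id_location, functional, failed):
        # newAggregation: add the entry, or overwrite the previous one for this location.
        self._by_location[id_location] = {"functional": functional, "failed": failed}

    def get(self, id_location):
        # get: results for one location, or None if the location is unknown.
        return self._by_location.get(id_location)

    def get_locations(self):
        # getLocations: a copy of the mapping for all known locations.
        return dict(self._by_location)

    def clear_all_aggregations(self):
        # clearAllAggregations: reset / start over.
        self._by_location.clear()

    def get_events_count(self):
        # getEventsCount: assumed here to be the sum of all stored counts.
        return sum(a["functional"] + a["failed"] for a in self._by_location.values())


store = AggregationStore()
store.new_aggregation("loc-a", functional=2, failed=1)
store.new_aggregation("loc-b", functional=0, failed=4)
store.new_aggregation("loc-a", functional=3, failed=1)  # updates loc-a in place
total = store.get_events_count()
```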

There is a class called SignalHandler. Its purpose is to accumulate new signals from the controller and periodically dump them to a text file.
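The accumulate-then-dump behaviour can be sketched as follows. This is not the repository's SignalHandler: `BatchingSignalHandler`, the size-based flush trigger, the one-JSON-object-per-line format, and the file naming are all assumptions chosen for illustration.

```python
import json
import os
import tempfile


class BatchingSignalHandler:
    """Buffer events in memory and write each full batch to its own text file."""

    def __init__(self, out_dir, batch_size=100):
        self.out_dir = out_dir
        self.batch_size = batch_size
        self._buffer = []
        self._batch_no = 0

    def handle(self, event):
        """Buffer one event; return the written file path if this filled a batch, else None."""
        self._buffer.append(event)
        if len(self._buffer) >= self.batch_size:
            return self.flush()
        return None

    def flush(self):
        """Write buffered events, one JSON object per line, and empty the buffer."""
        if not self._buffer:
            return None
        self._batch_no += 1
        path = os.path.join(self.out_dir, f"signals-{self._batch_no:05d}.txt")
        with open(path, "w", encoding="utf-8") as f:
            for event in self._buffer:
                f.write(json.dumps(event) + "\n")
        self._buffer.clear()
        return path


with tempfile.TemporaryDirectory() as out_dir:
    handler = BatchingSignalHandler(out_dir, batch_size=2)
    first = handler.handle({"id_location": "loc-a", "id_detected": "None"})
    second = handler.handle({"id_location": "loc-a", "id_detected": "Nan"})
    with open(second, encoding="utf-8") as f:
        lines = f.read().splitlines()
```

Writing each batch to a new file fits the folder-based ingestion described earlier, since the Spark side only has to notice files it has not seen before.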

The third application is only for testing. It creates a number of random events and sends them to the API's HTTP endpoint.
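Generating random test events might look like the sketch below. The helper names, token lengths, and location names are made up for the example; only the field names and the two id_detected values come from the schema above. Sending is left out so the sketch runs without a server.

```python
import random
import string

ALPHABET = string.ascii_lowercase + string.digits


def random_token(rng, length=6):
    """Return a random lowercase alphanumeric string of the given length."""
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def random_event(rng, locations):
    """Build one event dict with the schema's field names and random values."""
    return {
        "id_sample": random_token(rng, 5),
        "num_id": random_token(rng, 9),
        "id_location": rng.choice(locations),
        "id_signal_par": random_token(rng, 8),
        "id_detected": rng.choice(["None", "Nan"]),  # functional or failed
        "id_class_det": random_token(rng, 5),
    }


rng = random.Random(42)  # fixed seed so a test run is repeatable
events = [random_event(rng, ["loc-a", "loc-b"]) for _ in range(5)]
```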

Possible improvements:

- Ingest events through a TCP socket connection or use Kafka. Currently it reads events from files in a folder

- It would probably be better to output aggregation results to Kafka or another queue as well

- Test and make sure that sparkclient can work with a Spark cluster (standalone or YARN/Mesos)