A Scalable Pipeline for Making Multi-Task Mid-Level Vision Datasets from 3D Scans

The repository contains some tools and code from our paper:

Omnidata: A Scalable Pipeline for Making Multi-Task Mid-Level Vision Datasets from 3D Scans (ICCV2021)

Specifically, it contains a dataloader for efficiently loading the data generated by the Omnidata annotator, pretrained models, and code to train state-of-the-art models for tasks such as depth and surface normal estimation, including the first publicly available implementation of the MiDaS training code. It also contains an implementation of the 3D image refocusing augmentation introduced in the paper.

- Installation

- Pretrained models for depth and surface normal estimation

- Run our pretrained models

- MiDaS loss and training code implementation

- 3D Image refocusing augmentation

- Train state-of-the-art models on Omnidata

- Citing

The complete list of required packages is in requirements.txt. We recommend installing into a dedicated virtual environment; the commands below use conda.

conda create -n testenv -y python=3.8

source activate testenv

pip install -r requirements.txt

We provide pretrained models with state-of-the-art performance in depth and surface normal estimation.

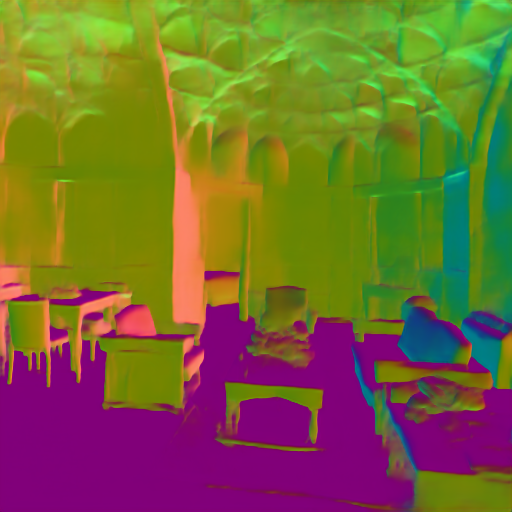

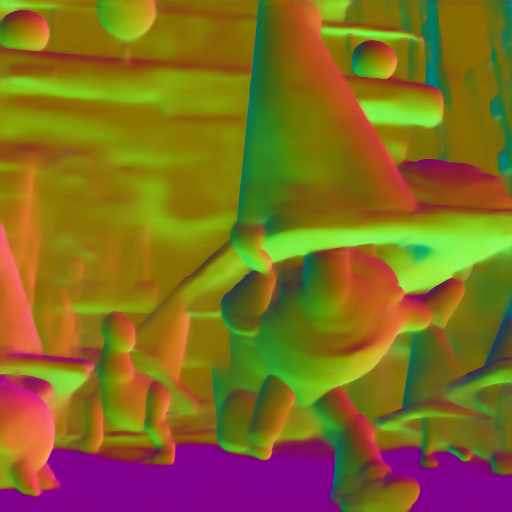

The surface normal network is based on the UNet architecture (6 down/6 up). It is trained with both an angular loss and an L1 loss, at input resolutions between 256 and 512.
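To make the combination of the two loss terms concrete, here is a minimal NumPy sketch of an L1 plus cosine angular loss over valid pixels. The function name `normal_loss`, the equal weighting of the two terms, and the `mask` argument are assumptions for illustration; this is not the code used by the training scripts in this repository.

```python
import numpy as np

def normal_loss(pred, target, mask, eps=1e-8):
    """Illustrative L1 + cosine angular loss (hypothetical helper).

    pred, target: (H, W, 3) arrays of surface normals.
    mask: (H, W) boolean array, True where the target is valid.
    Assumes at least one valid pixel.
    """
    pred = pred[mask]      # (N, 3) valid predictions
    target = target[mask]  # (N, 3) valid targets

    # L1 term: mean absolute difference over all valid components.
    l1 = np.abs(pred - target).mean()

    # Angular term: 1 - cosine similarity between unit-length normals.
    pred_n = pred / (np.linalg.norm(pred, axis=-1, keepdims=True) + eps)
    target_n = target / (np.linalg.norm(target, axis=-1, keepdims=True) + eps)
    cos = np.clip((pred_n * target_n).sum(axis=-1), -1.0, 1.0)
    angular = (1.0 - cos).mean()

    # Equal weighting of the two terms is an assumption of this sketch.
    return l1 + angular, l1, angular
```

The angular term is 0 when predicted and target normals point in the same direction and 2 when they point in opposite directions, while the L1 term also penalizes differences in magnitude.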

The depth networks have DPT-based architectures (similar to MiDaS v3.0) and are trained with the scale- and shift-invariant loss and the scale-invariant gradient matching term introduced in MiDaS, as well as the virtual normal loss. A public implementation of the MiDaS loss is provided in losses/midas_loss.py. We provide two pretrained depth models, one for the DPT-hybrid architecture and one for the DPT-large architecture, both with input resolution 384.

sh ./tools/download_depth_models.sh

sh ./tools/download_surface_normal_models.sh

These commands download the pretrained models for depth and normals to a folder called ./pretrained_models.

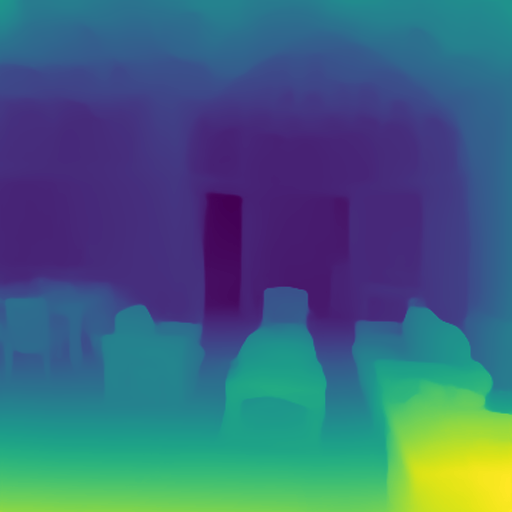

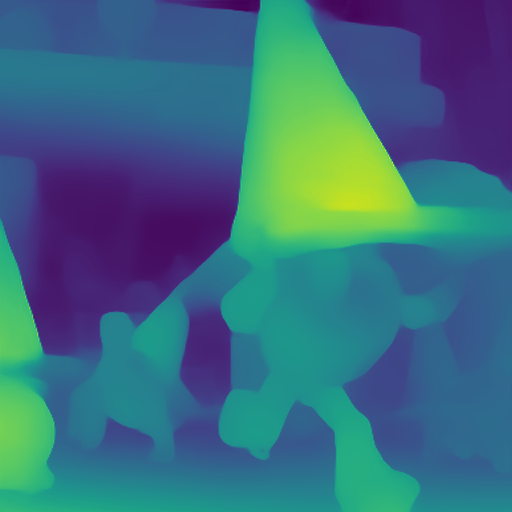

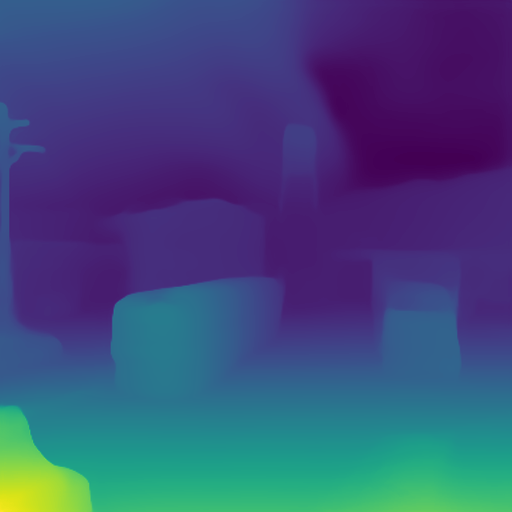

After downloading the pretrained models, you can run them on your own image with the following command:

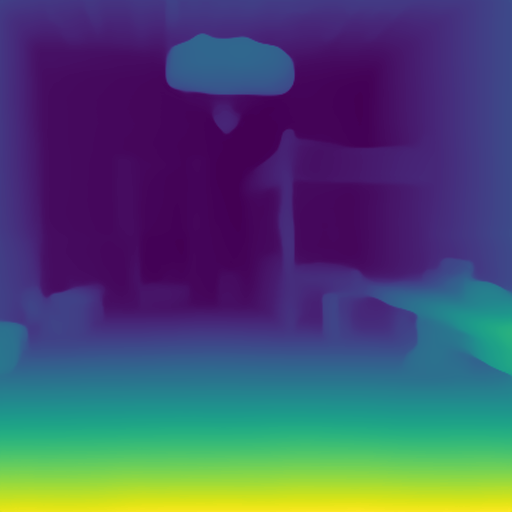

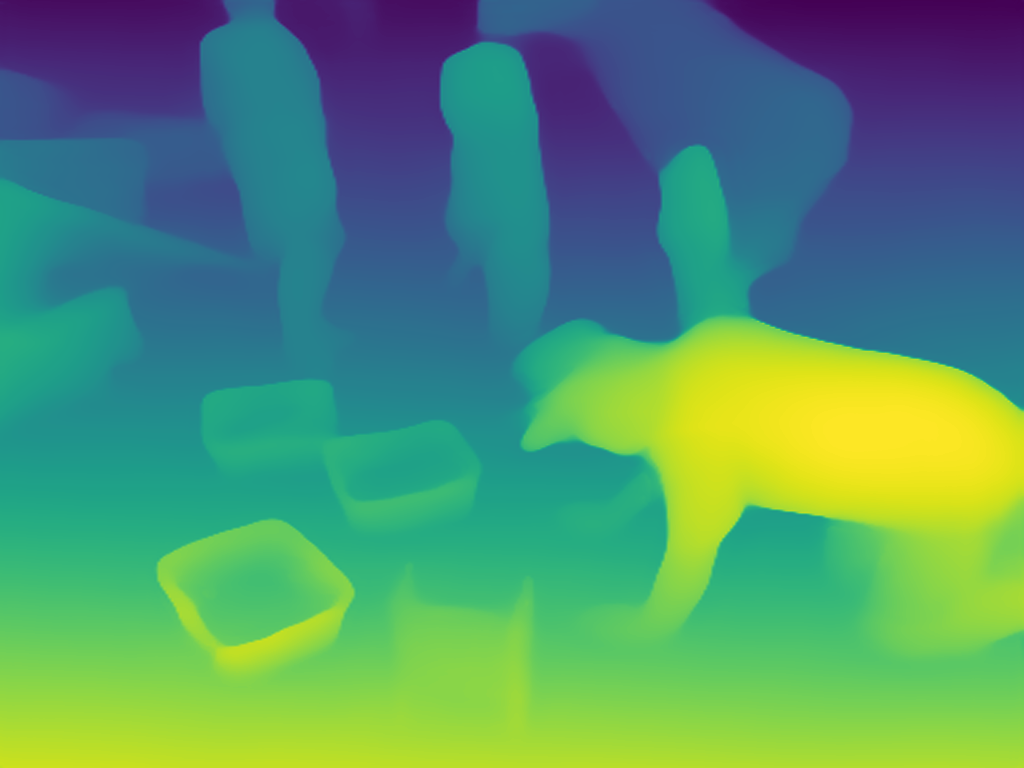

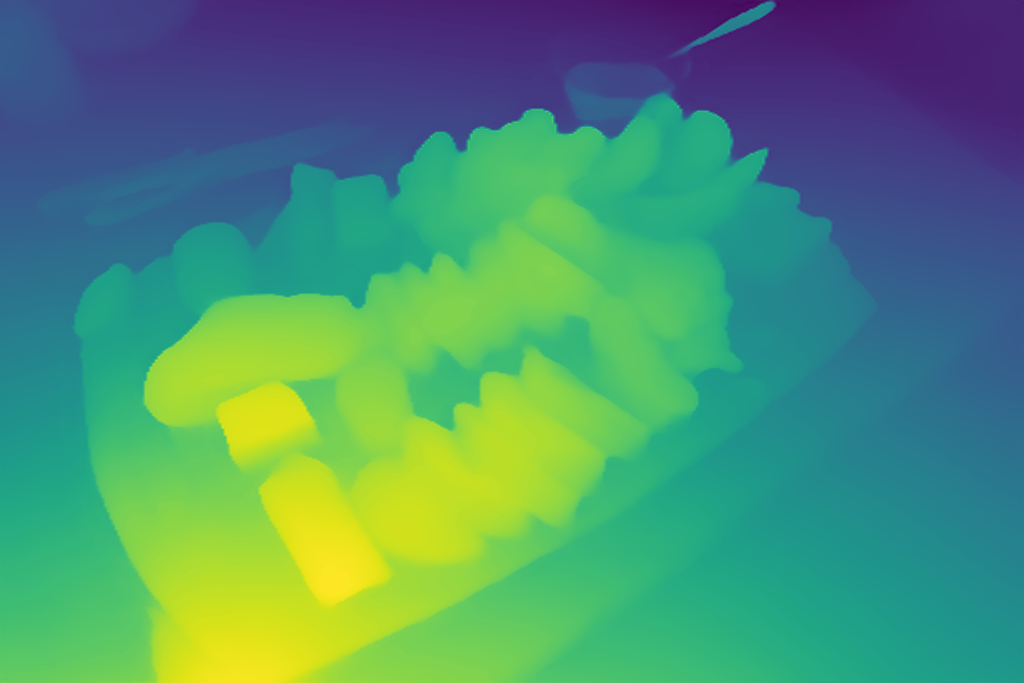

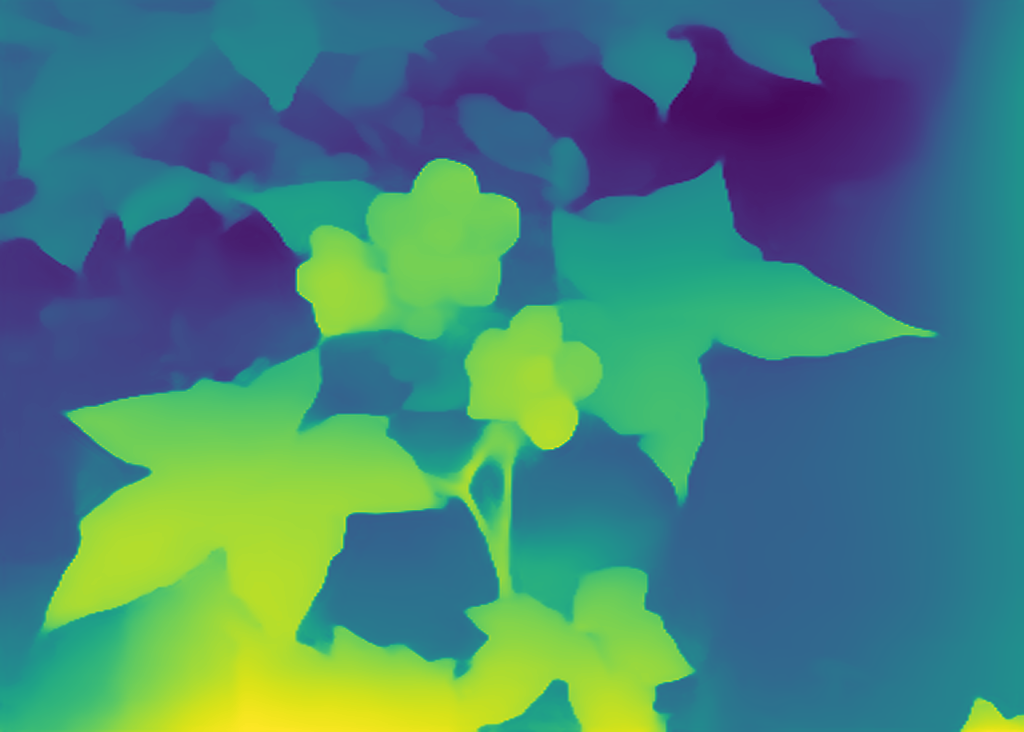

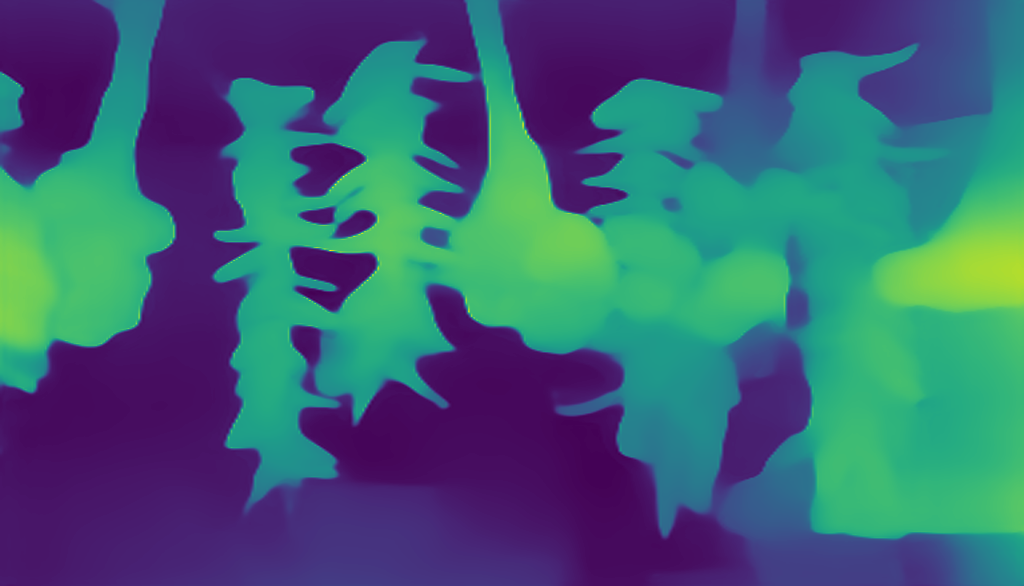

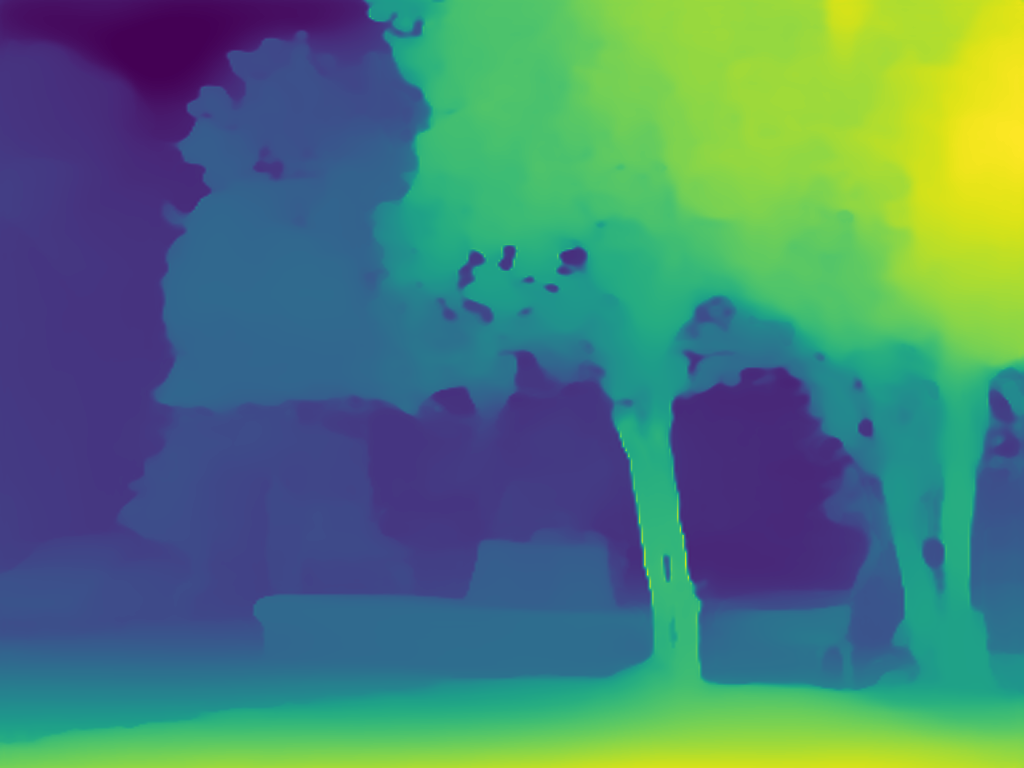

python demo.py --task $TASK --img_path $PATH_TO_IMAGE_OR_FOLDER --output_path $PATH_TO_SAVE_OUTPUT

The --task flag should be either normal or depth. To run the script for a normal target on an example image:

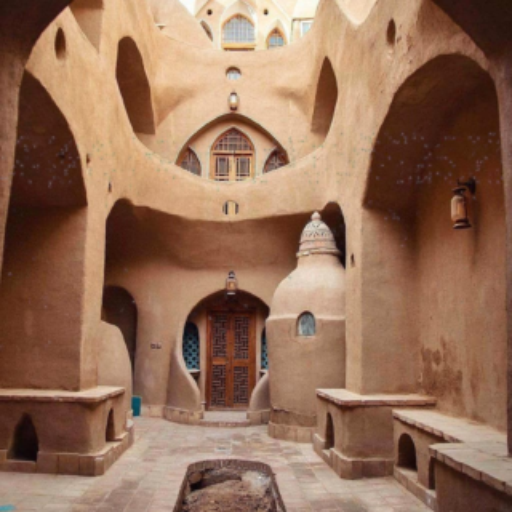

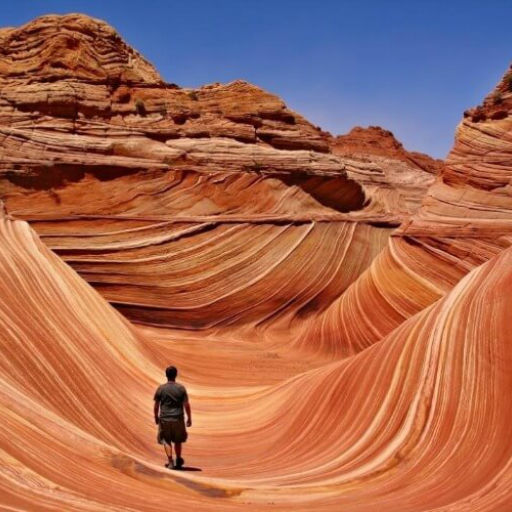

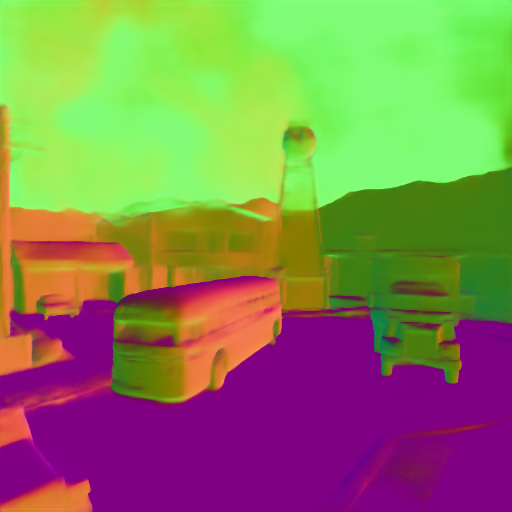

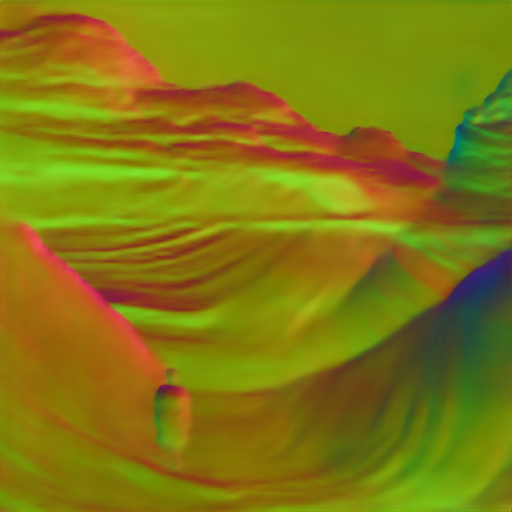

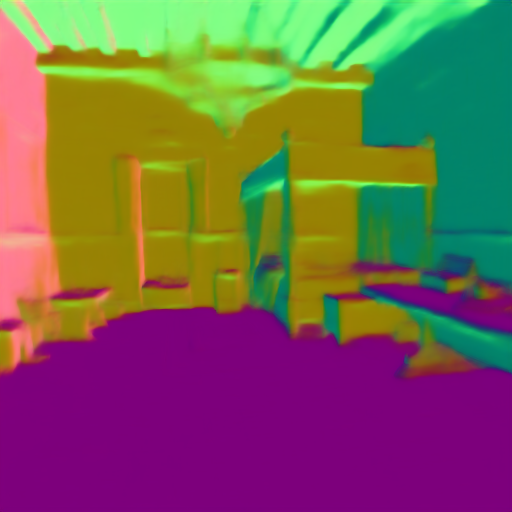

python demo.py --task normal --img_path assets/demo/test1.png --output_path assets/

We provide an implementation of the MiDaS loss, specifically the ssimae (scale- and shift-invariant MAE) loss and the scale-invariant gradient matching term, in losses/midas_loss.py. The MiDaS loss is useful for training depth estimation models on mixed datasets with different depth ranges and scales, such as ours. An example usage is shown below:

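from losses.midas_loss import MidasLoss

midas_loss_fn = MidasLoss(alpha=0.1)

loss, ssi_mae_loss, reg_loss = midas_loss_fn(depth_prediction, depth_gt, mask)

Here alpha specifies the weight of the gradient matching term in the total loss, and mask indicates the valid pixels of the image. The loss module is bound to its own name (midas_loss_fn) so that it is not overwritten by the returned loss value and can be called again on the next batch.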
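To illustrate what the two terms compute, the following is a minimal single-scale NumPy sketch. It assumes the prediction is aligned to the ground truth with a least-squares scale and shift before the errors are measured; the function names `align_scale_shift` and `midas_style_loss` are hypothetical, and the implementation in losses/midas_loss.py may differ (for example in the number of scales used for the gradient term).

```python
import numpy as np

def align_scale_shift(pred, target, mask):
    """Least-squares scale s and shift t such that s * pred + t fits target on valid pixels."""
    m = mask.astype(np.float64)
    a00 = np.sum(m * pred * pred)
    a01 = np.sum(m * pred)
    a11 = np.sum(m)
    b0 = np.sum(m * pred * target)
    b1 = np.sum(m * target)
    det = a00 * a11 - a01 * a01
    if det <= 0:  # degenerate case, e.g. constant prediction or empty mask
        return 0.0, 0.0
    s = (a11 * b0 - a01 * b1) / det
    t = (a00 * b1 - a01 * b0) / det
    return s, t

def midas_style_loss(pred, target, mask, alpha=0.1):
    """Return (total, ssi_mae, reg) for 2D depth maps; a sketch, not the repository code."""
    m = mask.astype(np.float64)
    n_valid = max(m.sum(), 1.0)

    # Scale- and shift-invariant MAE: align first, then average absolute error.
    s, t = align_scale_shift(pred, target, mask)
    residual = m * (s * pred + t - target)
    ssi_mae = np.abs(residual).sum() / n_valid

    # Gradient matching: penalize differences between neighboring residuals,
    # counting only pixel pairs where both neighbors are valid.
    gx = np.abs(residual[:, 1:] - residual[:, :-1]) * m[:, 1:] * m[:, :-1]
    gy = np.abs(residual[1:, :] - residual[:-1, :]) * m[1:, :] * m[:-1, :]
    reg = (gx.sum() + gy.sum()) / n_valid

    return ssi_mae + alpha * reg, ssi_mae, reg
```

Because of the alignment step, a prediction that differs from the ground truth only by a global scale and offset incurs (numerically) zero loss, which is what makes the loss usable across datasets with different depth ranges.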

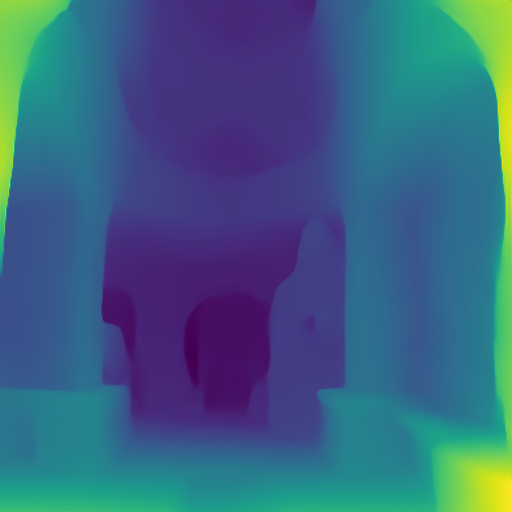

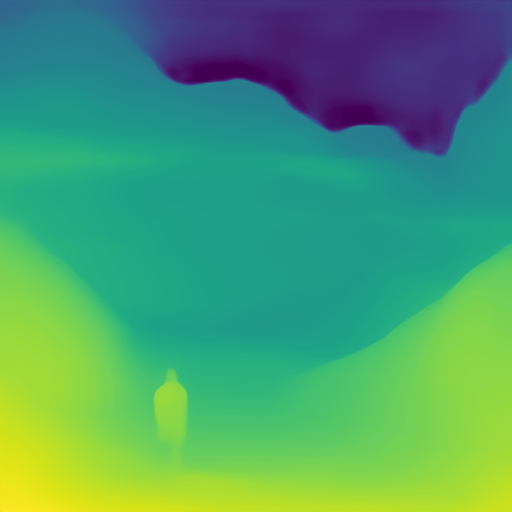

Mid-level cues can be used for data augmentation in addition to serving as training targets. The availability of full scene geometry in our dataset makes it possible to perform image refocusing as a 3D data augmentation. You can find an implementation of this augmentation in data/refocus_augmentation.py. You can run it on some sample images from our dataset with the following command:

python demo_refocus.py --input_path assets/demo_refocus/ --output_path assets/demo_refocus

This will refocus the RGB images by blurring them according to depth_euclidean for each image. You can set some parameters of the augmentation with the following flags: --num_quantiles (number of quantiles to use in the blur stack), --min_aperture (smallest aperture to use), and --max_aperture (largest aperture to use). The aperture size is sampled log-uniformly in the range between the minimum and maximum aperture.

[Figure: refocused example images at Shallow Focus, Mid Focus, and Far Focus]
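The two sampling steps described above, log-uniform aperture selection and splitting the depth map into quantiles, can be sketched in NumPy as follows. The helper names `sample_aperture` and `depth_layers` are hypothetical and are not taken from data/refocus_augmentation.py; the actual blurring of each layer is omitted.

```python
import numpy as np

def sample_aperture(min_aperture, max_aperture, rng):
    """Draw an aperture size log-uniformly between min_aperture and max_aperture."""
    log_a = rng.uniform(np.log(min_aperture), np.log(max_aperture))
    return float(np.exp(log_a))

def depth_layers(depth, num_quantiles):
    """Assign each pixel to one of num_quantiles depth layers of roughly equal pixel count."""
    # Interior quantile levels, e.g. 0.25, 0.5, 0.75 for four layers.
    levels = np.linspace(0.0, 1.0, num_quantiles + 1)[1:-1]
    edges = np.quantile(depth, levels)
    # np.digitize returns layer indices in the range 0 .. num_quantiles - 1.
    return np.digitize(depth, edges)
```

Sampling in log space spreads draws evenly across orders of magnitude, so small apertures are not crowded out by large ones. Quantile-based layers keep the number of pixels per layer balanced even when the depth distribution is skewed.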

Omnidata is a means to train state-of-the-art models in different vision tasks. Here, we provide the code for training our depth and surface normal estimation models. You can train the models with the following commands:

We train DPT-based models on Omnidata using 3 different losses: the scale- and shift-invariant loss and the scale-invariant gradient matching term introduced in MiDaS, as well as the virtual normal loss.

python train_depth.py --config_file config/depth.yml --experiment_name rgb2depth --val_check_interval 3000 --limit_val_batches 100 --max_epochs 10

We train a UNet architecture (6 down/6 up) for surface normal estimation using L1 Loss and Cosine Angular Loss.

python train_normal.py --config_file config/normal.yml --experiment_name rgb2normal --val_check_interval 3000 --limit_val_batches 100 --max_epochs 10

If you find the code or models useful, please cite our paper:

@inproceedings{eftekhar2021omnidata,

title={Omnidata: A Scalable Pipeline for Making Multi-Task Mid-Level Vision Datasets From 3D Scans},

author={Eftekhar, Ainaz and Sax, Alexander and Malik, Jitendra and Zamir, Amir},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={10786--10796},

year={2021}

}