Paper | Project Page | Video

Shangchen Zhou, Kelvin C.K. Chan, Chongyi Li, Chen Change Loy

S-Lab, Nanyang Technological University

- 2022.07.17: The Colab demo of CodeFormer is available now.

- 2022.07.16: Test code for face restoration is released. 😊

- 2022.06.21: This repo is created.

- PyTorch >= 1.7.1

- CUDA >= 10.1

- Other required packages in requirements.txt
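Before installing, it can help to confirm that the environment meets the version floors listed above. The following is a minimal sketch, not part of the repository: `parse_version`, `meets_minimum`, and `check_environment` are hypothetical helper names, and the check only compares the leading numeric part of each version string.

```python
import re


def parse_version(text):
    """Turn a version string such as '1.7.1+cu101' into a tuple of ints, e.g. (1, 7, 1)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    # A missing patch component (as in '10.1') is treated as 0.
    return tuple(int(part) if part else 0 for part in match.groups())


def meets_minimum(found, required):
    """Return True when `found` is at least `required`."""
    return parse_version(found) >= parse_version(required)


def check_environment():
    """Collect human-readable problems; an empty list means the floors are met."""
    try:
        import torch
    except ImportError:
        return ["PyTorch is not installed"]

    problems = []
    if not meets_minimum(torch.__version__, "1.7.1"):
        problems.append(f"PyTorch {torch.__version__} is older than 1.7.1")

    cuda = torch.version.cuda  # None when PyTorch was built without CUDA
    if cuda is None:
        problems.append("this PyTorch build reports no CUDA version")
    elif not meets_minimum(cuda, "10.1"):
        problems.append(f"CUDA {cuda} is older than 10.1")
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["environment meets the listed requirements"]:
        print(line)
```

The script only reports what it finds; packages listed in requirements.txt are not checked here.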

# git clone this repository

git clone https://github.com/sczhou/CodeFormer

cd CodeFormer

# create new anaconda env

conda create -n codeformer python=3.8 -y

conda activate codeformer

# install python dependencies

pip3 install -r requirements.txt

python basicsr/setup.py develop

conda install -c conda-forge dlib

Download the dlib pretrained models from [Google Drive | OneDrive] to the weights/dlib folder.

You can either run the following command or download the pretrained models manually.

python scripts/download_pretrained_models.py dlib

Download the CodeFormer pretrained models from [Google Drive | OneDrive] to the weights/CodeFormer folder.

You can either run the following command or download the pretrained models manually.

python scripts/download_pretrained_models.py CodeFormer

You can put the testing images in the inputs/TestWhole folder. If you would like to test on cropped and aligned faces, you can put them in the inputs/cropped_faces folder.

# For cropped and aligned faces

python inference_codeformer.py --w 0.5 --has_aligned --test_path [input folder]

# For the whole images

python inference_codeformer.py --w 0.7 --test_path [input folder]

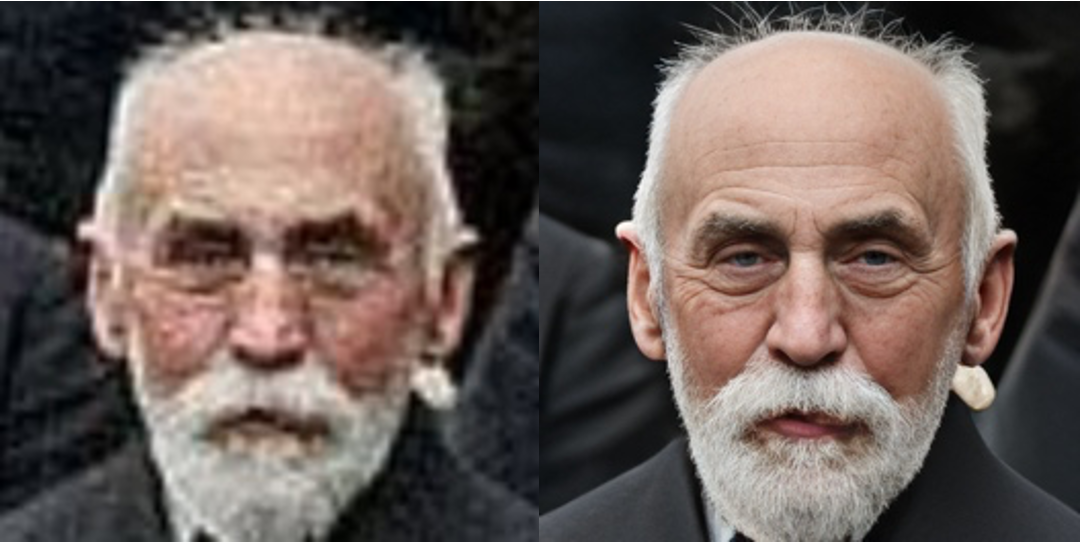

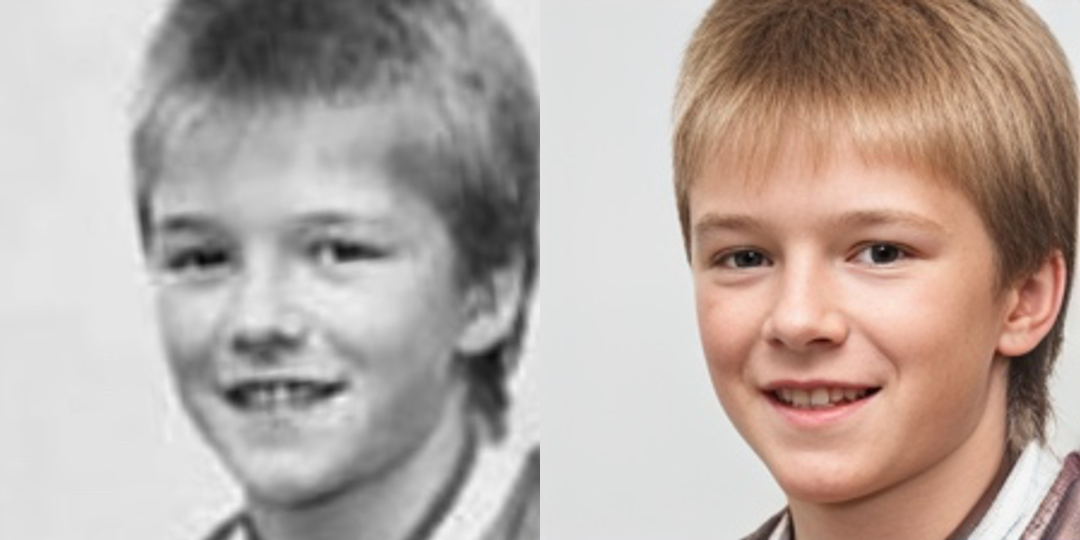

Note that w lies in [0, 1]. Generally, a smaller w tends to produce a higher-quality result, while a larger w yields a higher-fidelity result.

The results will be saved in the results folder.
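Since w trades quality against fidelity, it is often useful to run the same inputs at several values and compare. The sketch below is one way to do that, using only the flags shown in the commands above (`--w`, `--has_aligned`, `--test_path`). The helper names `build_command` and `sweep` are hypothetical and not part of the repository. The sketch defaults to a dry run that only prints the commands. How outputs from separate runs are arranged inside the results folder is not covered here, so check whether later runs overwrite earlier ones before relying on it.

```python
import subprocess
import sys


def build_command(w, test_path, has_aligned=False):
    """Assemble one inference_codeformer.py invocation for a given w."""
    if not 0.0 <= w <= 1.0:
        raise ValueError(f"w must be in [0, 1], got {w}")
    cmd = [sys.executable, "inference_codeformer.py", "--w", str(w)]
    if has_aligned:
        # Only for inputs that are already cropped and aligned faces.
        cmd.append("--has_aligned")
    cmd += ["--test_path", test_path]
    return cmd


def sweep(weights, test_path, has_aligned=False, dry_run=True):
    """Print (and optionally run) one command per w value; return the commands."""
    commands = [build_command(w, test_path, has_aligned) for w in weights]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # Must be run from the repository root so the script is found.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Dry run over three w values for whole images.
    sweep([0.3, 0.5, 0.7], "inputs/TestWhole")
```

Pass `dry_run=False` to actually execute each command, and `has_aligned=True` when pointing at inputs/cropped_faces.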

If our work is useful for your research, please consider citing:

@article{zhou2022codeformer,

author = {Zhou, Shangchen and Chan, Kelvin C.K. and Li, Chongyi and Loy, Chen Change},

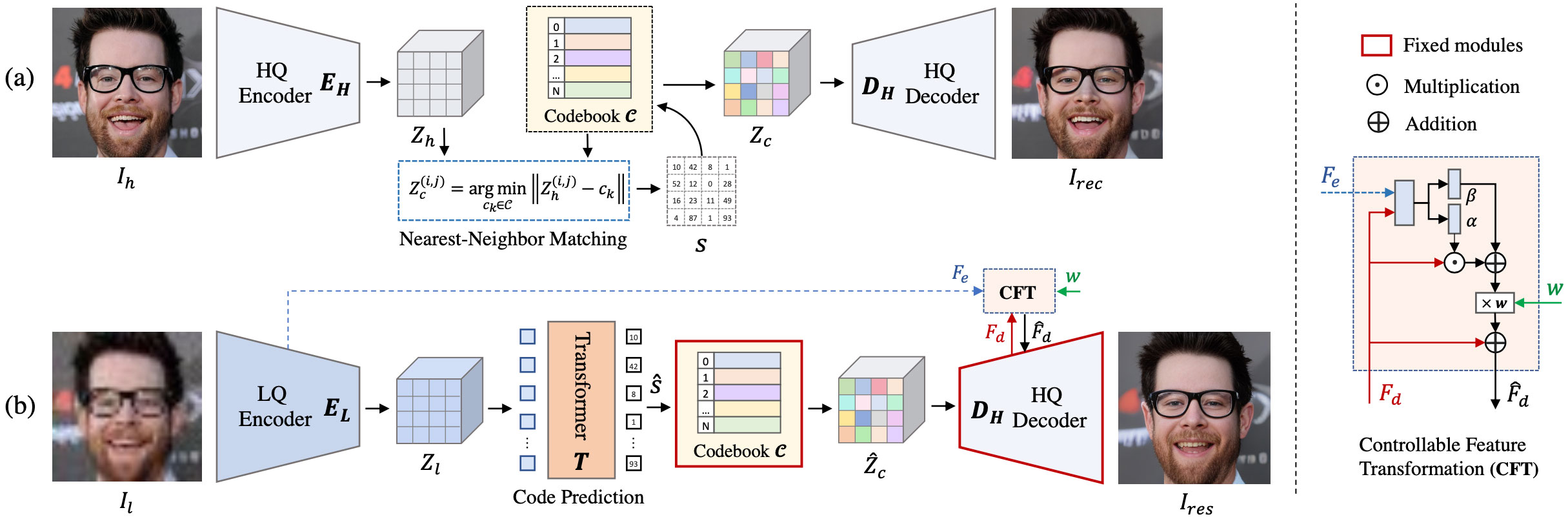

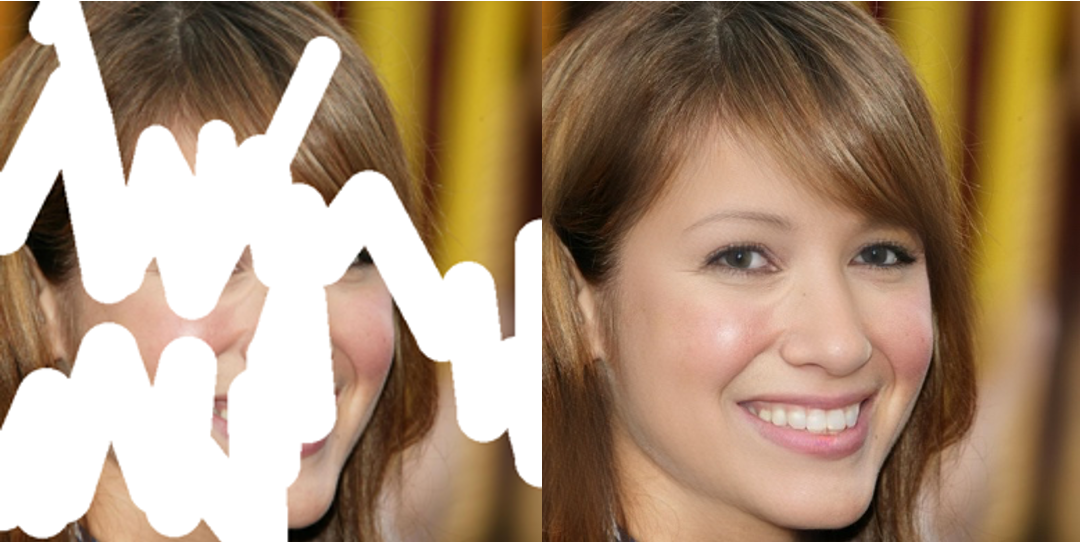

title = {Towards Robust Blind Face Restoration with Codebook Lookup TransFormer},

journal = {arXiv preprint arXiv:2206.11253},

year = {2022}

}

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This project is based on BasicSR.