
Tensorlink is a distributed computing framework based on CUDA API forwarding. When your computer has no GPU, or its GPU is not powerful enough, Tensorlink lets you use GPU resources on any machine in the local area network.
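In general, API forwarding means the application keeps calling the usual CUDA functions, but a hooked library on the client serializes each call and sends it to a server that owns the GPU; the server executes the call and returns the result. The toy sketch below illustrates only that general pattern with Python sockets. It is not Tensorlink's wire protocol, the function names (`serve`, `RemoteAPI`, `device_count`, `vector_add`) are hypothetical, and `pickle` is used only for brevity (never unpickle data from an untrusted network).

```python
import pickle
import socket
import struct
import threading


def send_msg(sock, obj):
    """Send one pickled object prefixed with its 4-byte big-endian length."""
    payload = pickle.dumps(obj)
    sock.sendall(struct.pack("!I", len(payload)) + payload)


def recv_exact(sock, n):
    """Read exactly n bytes, raising ConnectionError if the peer closes first."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def recv_msg(sock):
    """Receive one length-prefixed pickled object."""
    (length,) = struct.unpack("!I", recv_exact(sock, 4))
    return pickle.loads(recv_exact(sock, length))


def serve(sock, api):
    """'Server' side: run each forwarded call on the real implementation."""
    while True:
        try:
            name, args = recv_msg(sock)
        except ConnectionError:
            return  # the client went away
        send_msg(sock, api[name](*args))


class RemoteAPI:
    """'Client' side: any attribute becomes a stub that forwards the call."""

    def __init__(self, sock):
        self._sock = sock

    def __getattr__(self, name):
        def stub(*args):
            send_msg(self._sock, (name, args))
            return recv_msg(self._sock)
        return stub


if __name__ == "__main__":
    # Hypothetical stand-ins for GPU functions that live on the server.
    server_side_api = {
        "device_count": lambda: 1,
        "vector_add": lambda a, b: [x + y for x, y in zip(a, b)],
    }
    client_sock, server_sock = socket.socketpair()
    threading.Thread(target=serve, args=(server_sock, server_side_api),
                     daemon=True).start()
    gpu = RemoteAPI(client_sock)
    print(gpu.device_count())              # 1
    print(gpu.vector_add([1, 2], [3, 4]))  # [4, 6]
    client_sock.close()
```

The caller never sees the socket: it calls `gpu.vector_add(...)` as if the function were local, which is the property that lets unmodified programs run against a remote GPU.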

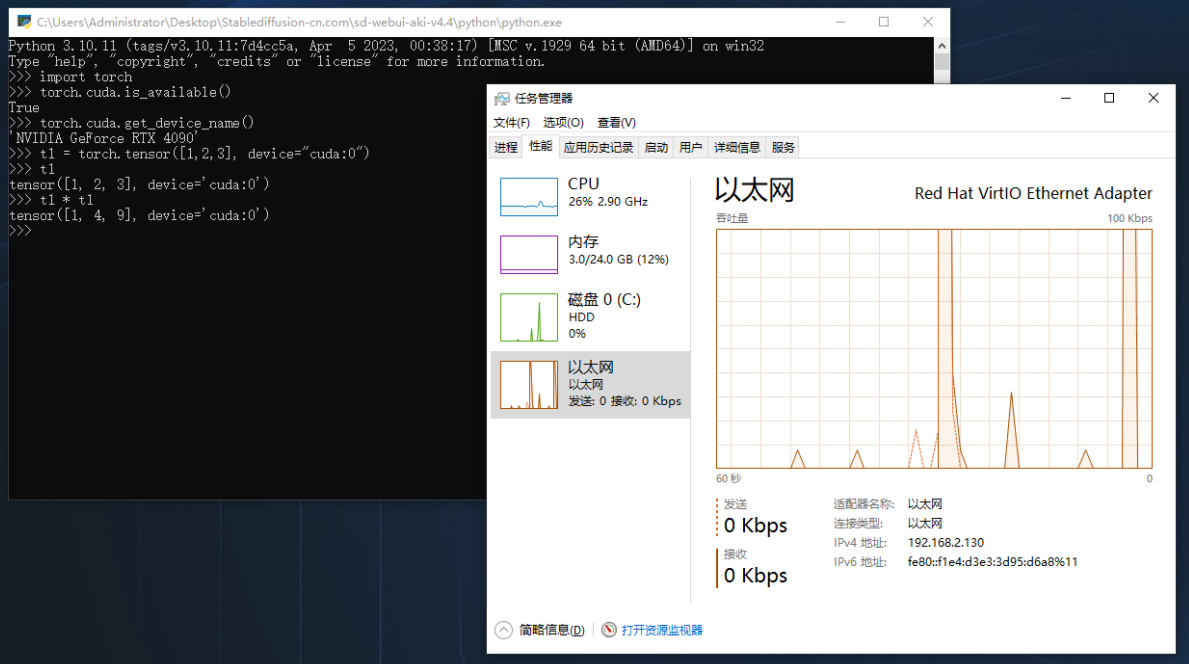

Note: The system shown in these screenshots has no physical GPU; it uses Tensorlink to connect to an RTX 4090 GPU in another subnet.

The image shows ComfyUI using Tensorlink for computation.

The image shows Stable Diffusion (SD) using Tensorlink for computation.

The image shows the transformers framework using Tensorlink for model inference.

- CUDA Runtime API Hook ✅

- CUDA Driver API Hook ✅

- CUDA cuBLAS Hook ✅

- CUDA cuDNN Hook ✅

- Support for caching transmitted data to speed up loading ✅

- Support for dynamically releasing GPU memory that has gone idle ✅

- Support for multi-process clients ✅

- Support for ZSTD data compression ✅

- Support for the Light (TCP+UDP) and Native (TCP+TCP) communication protocols ✅

- Support for multi-GPU computing on a single server

- Support for cluster mode, pooling GPU resources from multiple machines

Windows client:

- Python 3.10 is recommended:

  https://www.python.org/ftp/python/3.10.11/python-3.10.11-amd64.exe

- Install PyTorch 2.1.2 built to use the dynamically linked CUDA runtime library:

  https://github.com/nvwacloud/tensorlink/releases/download/deps/torch-2.1.2+cu121-cp310-cp310-win_amd64.whl

  `pip install torch-2.1.2+cu121-cp310-cp310-win_amd64.whl`

  We did not modify any PyTorch source code; we only changed some build options so that PyTorch uses external CUDA dynamic libraries. If you need to build PyTorch yourself, refer to the patch file pytorch-v2.1.2-build-for-msvc.

- Install the Tensorlink CUDA dependency libraries. If CUDA 12.1 is already installed on your system, you can skip this step.

  https://github.com/nvwacloud/tensorlink/releases/download/deps/tensorlink_cuda_deps.zip

  Extract the archive to any directory and add that directory to the system Path environment variable.
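The PyTorch wheel above is tagged `cp310-cp310-win_amd64`, so pip will only install it into 64-bit CPython 3.10 on Windows, and the dependency libraries are only found once their directory is on Path. The stdlib-only sketch below checks both conditions; the function names and the example directory `C:\tensorlink_cuda_deps` are hypothetical placeholders, not part of Tensorlink.

```python
import os
import platform
import sys


def wheel_compatible(version_info=None, implementation=None,
                     system=None, machine=None, bits=None):
    """True if this interpreter can install a cp310-cp310-win_amd64 wheel.

    Every argument defaults to the running interpreter; pass values to test.
    """
    version_info = version_info or sys.version_info
    implementation = implementation or platform.python_implementation()
    system = system or platform.system()
    machine = machine or platform.machine()
    # platform.machine() reports the OS architecture, so also check that the
    # interpreter itself is 64-bit.
    bits = bits or (64 if sys.maxsize > 2**32 else 32)
    return (
        implementation == "CPython"
        and tuple(version_info[:2]) == (3, 10)
        and system == "Windows"
        and machine.lower() in ("amd64", "x86_64")
        and bits == 64
    )


def dir_on_path(directory, path_value=None):
    """True if `directory` is one of the entries of the PATH variable."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    target = os.path.normcase(os.path.normpath(directory))
    for entry in path_value.split(os.pathsep):
        entry = entry.strip().strip('"')  # Windows entries may be quoted
        if entry and os.path.normcase(os.path.normpath(entry)) == target:
            return True
    return False


if __name__ == "__main__":
    print("cp310 win_amd64 wheel installable:", wheel_compatible())
    # Hypothetical extraction directory; substitute your own path.
    print("deps directory on Path:", dir_on_path(r"C:\tensorlink_cuda_deps"))
```

Changes to Path only reach processes started afterwards, so run the check (and Tensorlink) from a newly opened terminal.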

Linux server (recommended system: Rocky Linux 9.3 or Ubuntu 24.04):

- Install CUDA 12.1:

  https://developer.nvidia.com/cuda-12-1-0-download-archive

- Install cuDNN 8.8.1:

  https://developer.nvidia.com/downloads/compute/cudnn/secure/8.8.1/local_installers/12.0/cudnn-linux-x86_64-8.8.1.3_cuda12-archive.tar.xz/

- Install ZSTD 1.5.5 or later (for example, build 1.5.6 from source):

  `wget https://github.com/facebook/zstd/releases/download/v1.5.6/zstd-1.5.6.tar.gz`

  `tar -xf zstd-1.5.6.tar.gz`

  `cd zstd-1.5.6`

  `make && make install`
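To confirm that the dynamic loader now resolves a new enough libzstd, you can ask the library itself: zstd's public API exports `ZSTD_versionNumber()`, which encodes the version as MAJOR*10000 + MINOR*100 + RELEASE. This sketch assumes Python is available on the server and that what `ctypes` loads matches what Tensorlink will load; it reports whichever libzstd the loader finds first, which may be an older copy shipped by the distribution.

```python
import ctypes
import ctypes.util

MIN_VERSION = (1, 5, 5)  # minimum version required above


def decode_version(number):
    """Split ZSTD_versionNumber() = MAJOR*10000 + MINOR*100 + RELEASE."""
    return (number // 10000, (number // 100) % 100, number % 100)


def installed_zstd_version():
    """Return the (major, minor, release) of the libzstd the loader finds, or None."""
    name = ctypes.util.find_library("zstd")  # e.g. "libzstd.so.1"
    if name is None:
        return None
    try:
        lib = ctypes.CDLL(name)
    except OSError:
        return None
    lib.ZSTD_versionNumber.restype = ctypes.c_uint
    lib.ZSTD_versionNumber.argtypes = []
    return decode_version(lib.ZSTD_versionNumber())


if __name__ == "__main__":
    version = installed_zstd_version()
    if version is None:
        print("libzstd not found by the dynamic loader")
    else:
        status = "OK" if version >= MIN_VERSION else "too old"
        print("libzstd", ".".join(map(str, version)), status)
```

If an old version is still reported after `make install`, the new library under /usr/local/lib may not be in the loader cache yet; running `ldconfig` as root (adding /usr/local/lib to /etc/ld.so.conf.d/ if necessary) usually resolves this.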

Download the latest version of Tensorlink:

https://github.com/nvwacloud/tensorlink/releases/

On the Windows client, after extracting, copy all DLL files from the client\windows directory to the System32 directory:

`cd client\windows`

`copy *.dll C:\Windows\System32`

Note: If any of these files conflict with CUDA-related DLLs already in System32, back up the original files manually before copying.
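If you prefer to script this step, the sketch below performs the same copy and keeps a `.bak` copy of any DLL it would overwrite, following the backup advice in the note. The function name `install_dlls` and the `.bak` naming scheme are our own assumptions. Run it from an elevated prompt with 64-bit Python: writing to System32 requires administrator rights, and 32-bit processes on 64-bit Windows are redirected to SysWOW64.

```python
import shutil
from pathlib import Path


def install_dlls(src_dir, dst_dir, backup_suffix=".bak"):
    """Copy every *.dll from src_dir into dst_dir, backing up files it replaces.

    Returns (copied, backed_up): lists of DLL file names.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    copied, backed_up = [], []
    for dll in sorted(src.glob("*.dll")):
        target = dst / dll.name
        if target.exists():
            backup = target.with_name(target.name + backup_suffix)
            if not backup.exists():  # never overwrite an earlier backup
                shutil.copy2(target, backup)
                backed_up.append(dll.name)
        shutil.copy2(dll, target)
        copied.append(dll.name)
    return copied, backed_up


if __name__ == "__main__":
    copied, backed_up = install_dlls(r"client\windows", r"C:\Windows\System32")
    print("copied:", copied)
    print("backed up:", backed_up)
```

To undo the installation, delete the copied DLLs and rename each `*.dll.bak` file back to its original name.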

On the Linux server, after extracting, copy all files from the server\Linux directory to a directory of your choice.

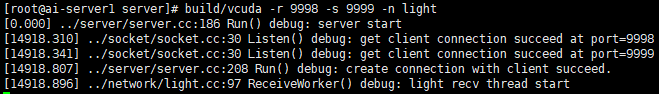

Start the server on Linux:

`./tensorlink -role server -net native -recv_port 9998 -send_port 9999`

Start the client on Windows:

`tensorlink.exe -role client -ip <server ip> -net native -send_port 9998 -recv_port 9999`

Note: The server's receiving port must match the client's sending port, and the server's sending port must match the client's receiving port. Both sides must also use the same protocol (the `-net` value).
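The port rule is easy to get backwards, so one option is to derive the client settings from the server settings. This sketch only builds command strings from the flags shown above (`-role`, `-ip`, `-net`, `-send_port`, `-recv_port`); the `Ports` type and the helper names are hypothetical and not part of Tensorlink.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ports:
    net: str        # value passed to -net, e.g. "native"
    recv_port: int  # value passed to -recv_port
    send_port: int  # value passed to -send_port


def mirror(server: Ports) -> Ports:
    """Client settings that match a server: same protocol, ports swapped."""
    return Ports(net=server.net, recv_port=server.send_port,
                 send_port=server.recv_port)


def check_pair(server: Ports, client: Ports) -> list:
    """Return human-readable problems; an empty list means the pair matches."""
    problems = []
    if server.net != client.net:
        problems.append(f"-net differs (server {server.net}, client {client.net})")
    if server.recv_port != client.send_port:
        problems.append("server -recv_port must equal client -send_port")
    if server.send_port != client.recv_port:
        problems.append("server -send_port must equal client -recv_port")
    return problems


def server_cmd(p: Ports) -> str:
    return (f"./tensorlink -role server -net {p.net} "
            f"-recv_port {p.recv_port} -send_port {p.send_port}")


def client_cmd(p: Ports, server_ip: str) -> str:
    return (f"tensorlink.exe -role client -ip {server_ip} -net {p.net} "
            f"-send_port {p.send_port} -recv_port {p.recv_port}")


if __name__ == "__main__":
    server = Ports(net="native", recv_port=9998, send_port=9999)
    client = mirror(server)
    assert not check_pair(server, client)
    print(server_cmd(server))
    print(client_cmd(client, "192.168.1.10"))  # hypothetical server IP
```

With the server settings from the example above, the generated commands reproduce the two command lines shown earlier.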

Check that the program is running correctly

Start a Python shell on the client, import PyTorch, and check whether the remote GPU is listed.
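A minimal check, assuming the PyTorch 2.1.2 build above is installed and the Tensorlink client is running: if forwarding works, `torch.cuda` should report the remote card. The helper name `remote_gpu_report` is hypothetical; `torch.cuda.is_available`, `device_count` and `get_device_properties` are standard PyTorch APIs. The optional matrix multiply pushes a small computation through the device so you can see that results actually come back.

```python
def remote_gpu_report():
    """Describe the CUDA devices PyTorch can see (remote ones via Tensorlink)."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_available": False, "devices": []}
    available = torch.cuda.is_available()
    devices = []
    if available:
        for i in range(torch.cuda.device_count()):
            props = torch.cuda.get_device_properties(i)
            devices.append({
                "index": i,
                "name": props.name,
                "total_memory_gib": round(props.total_memory / 1024**3, 1),
            })
    return {"torch": torch.__version__, "cuda_available": available,
            "devices": devices}


if __name__ == "__main__":
    report = remote_gpu_report()
    print(report)
    if report["cuda_available"]:
        import torch
        # Small end-to-end computation on the (remote) device.
        x = torch.ones(2, 2, device="cuda")
        print((x @ x).cpu())
```

An empty device list or `cuda_available: False` usually points back to the DLL installation step or to the client process not running.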

FAQs

- Error on server startup: missing cuDNN library files

  - Check that the cuDNN library files are installed correctly.

  - If you installed cuDNN from the archive (tarball), either add its library directory to the library search path (for example, the LD_LIBRARY_PATH environment variable) or copy the library files to /lib64; otherwise the program may not find them.

- Client program unresponsive

  - Check that the client program is installed correctly and that the vcuda main process is running.

  - Make sure Tensorlink was started with administrator privileges.

  - Make sure the third-party program that uses CUDA is also running with administrator privileges.

  - For more detail, inspect the output of the vcuda process with DebugView.
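Two of these checks can be scripted. The sketch below, with hypothetical helper names, tries to load `libcudnn.so.8` (the soname of the main cuDNN 8.x library) the way a native program would, and reports whether the current Windows process is elevated via the Win32 `IsUserAnAdmin` call. Note that the dynamic loader reads LD_LIBRARY_PATH when a process starts, so export it in the shell before running both this check and the server; likewise, `is_admin()` describes only the process running the script, so launch it from the same prompt you use for Tensorlink or the CUDA program.

```python
import ctypes
import os
import sys


def can_load(soname: str) -> bool:
    """Try to dlopen a shared library the same way a native program would."""
    try:
        ctypes.CDLL(soname)
        return True
    except OSError:
        return False


def is_admin():
    """True/False for an elevated Windows process; None on other platforms."""
    if sys.platform != "win32":
        return None
    return bool(ctypes.windll.shell32.IsUserAnAdmin())


if __name__ == "__main__":
    if sys.platform.startswith("linux"):
        print("libcudnn.so.8 loadable:", can_load("libcudnn.so.8"))
        print("LD_LIBRARY_PATH =", os.environ.get("LD_LIBRARY_PATH", "<unset>"))
    print("elevated (Windows only):", is_admin())
```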