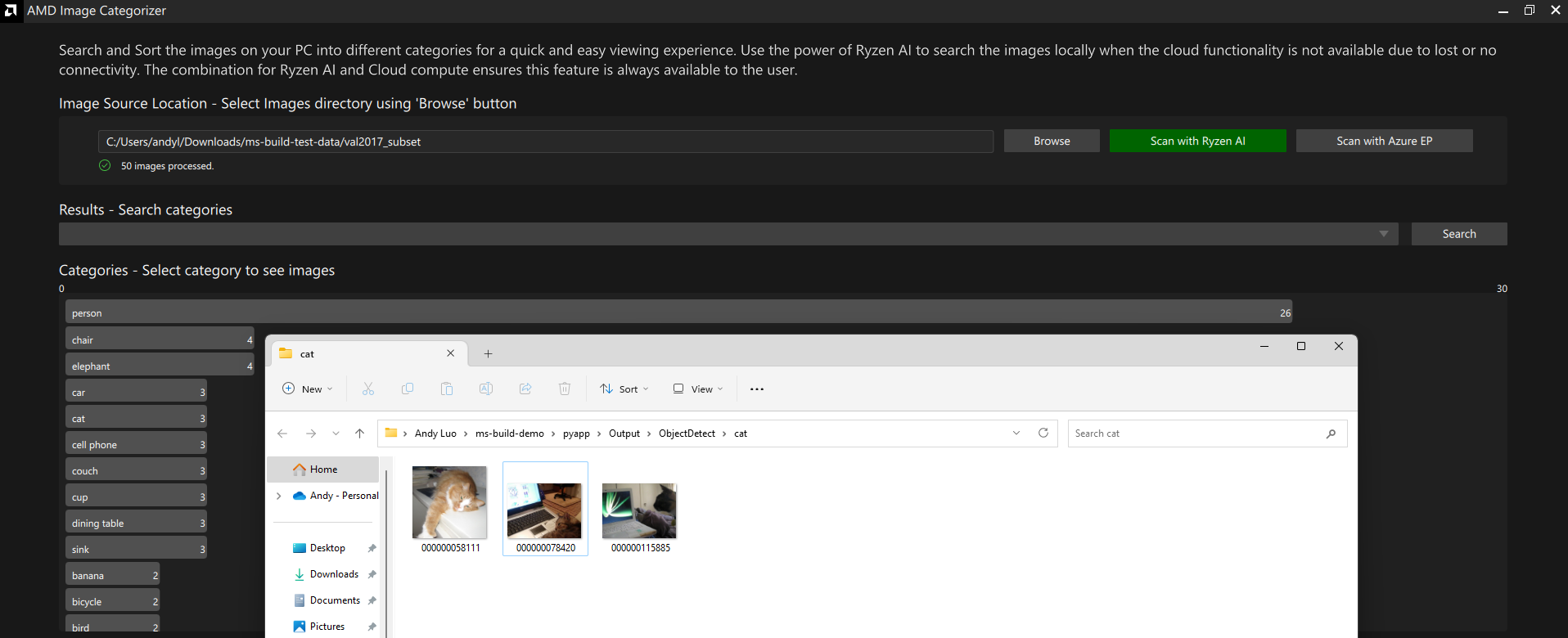

The demo showcases searching and sorting images for a quick and easy viewing experience on your AMD Ryzen™ AI based PC, using two AI models: Yolov5 and Retinaface.

It supports searching images locally when the cloud is unavailable because connectivity is lost or absent. AMD XDNA™ dedicated AI accelerator hardware is integrated on-chip, and its software optimizes tasks and workloads to free up CPU and GPU resources, which makes new user experiences possible.

Please note the demo is for functional demonstration purposes only and does not represent the highest possible performance or accuracy.

To run this demo, you need a laptop powered by an AMD Ryzen 7040HS Series mobile processor and running Windows 11.

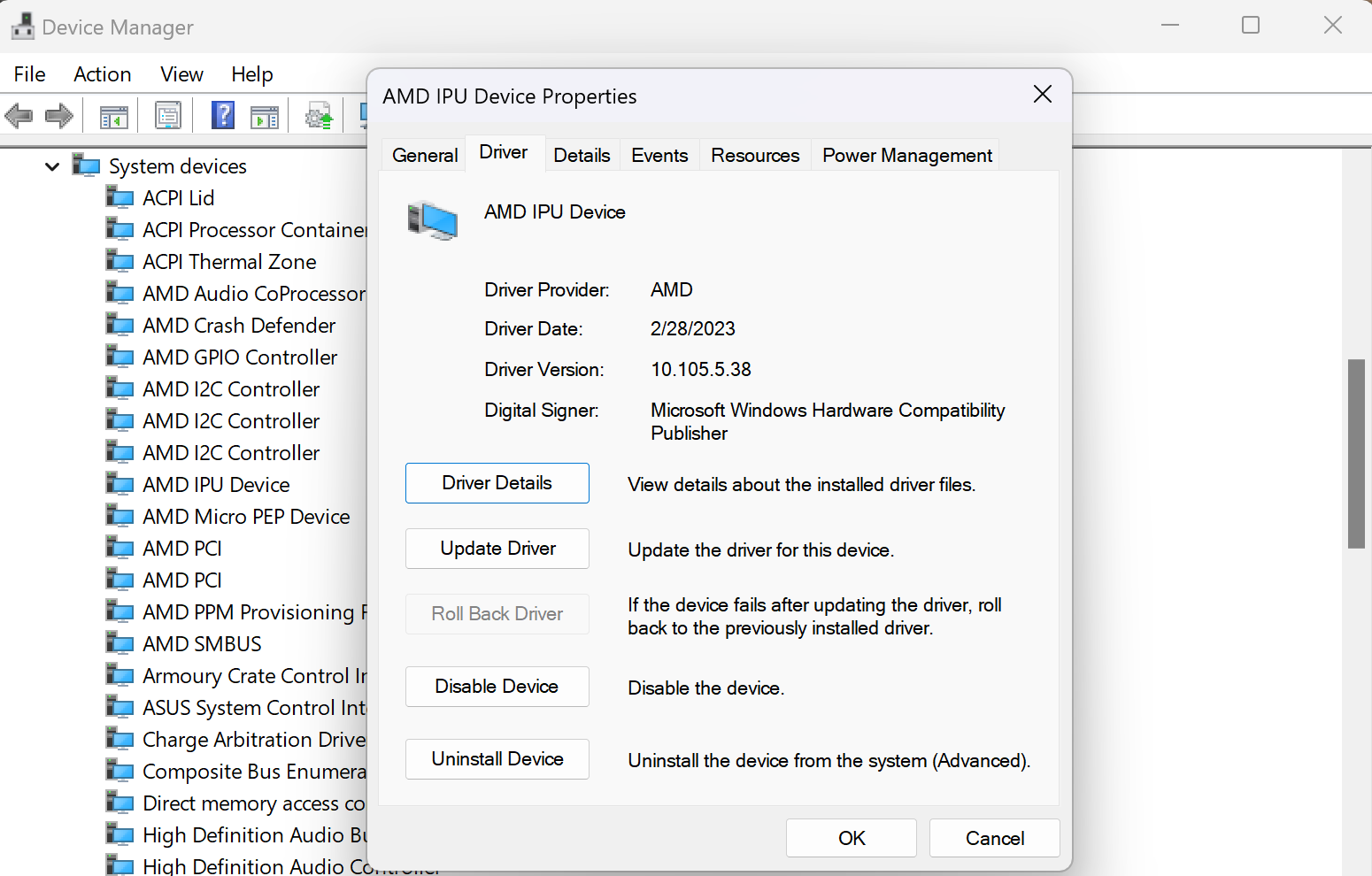

Please make sure the IPU driver (tested with 10.105.5.38) has been installed as shown below.

Please make sure Visual C++ Redistributable and conda (Miniconda or Anaconda) have been installed on Windows.

Start a conda command prompt and run:

conda create --name ms-build-demo python=3.9

conda install -n ms-build-demo -c conda-forge nodejs zlib re2

conda activate ms-build-demo

copy %CONDA_PREFIX%\Library\bin\zlib.dll %CONDA_PREFIX%\Library\bin\zlib1.dll

pip install zmq tornado opencv-python onnxruntime torch pyyaml pillow tqdm pandas torchvision matplotlib seaborn

pip install wheels\onnxruntime_vitisai-1.16.0-cp39-cp39-win_amd64.whl

pip install wheels\voe-0.1.0-cp39-cp39-win_amd64.whl

set XLNX_VART_FIRMWARE=C:\path\to\ms-build-demo\xclbin\1x4.xclbin

If you are using a PowerShell prompt, please replace the copy command with:

Copy-Item -Path $env:CONDA_PREFIX\Library\bin\zlib.dll $env:CONDA_PREFIX\Library\bin\zlib1.dll

All the following commands are executed in the conda environment.

Please refer to this page for more detailed setup instructions.
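The setup steps above can be sanity-checked with a short script before running the demo. The following is a minimal sketch, not part of the demo itself: the function name `check_setup` and the list of import names are assumptions chosen for illustration (for example, `opencv-python` is imported as `cv2` and `pyyaml` as `yaml`). It only verifies that `XLNX_VART_FIRMWARE` points at an existing file and that a few of the installed packages can be found.

```python
import importlib.util
import os
from pathlib import Path

# Import names assumed for some of the packages installed above; adjust as needed.
REQUIRED_MODULES = ("zmq", "tornado", "cv2", "onnxruntime", "torch", "yaml")


def check_setup(env, modules=REQUIRED_MODULES):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []

    # XLNX_VART_FIRMWARE should point at the .xclbin file shipped with the demo.
    firmware = env.get("XLNX_VART_FIRMWARE")
    if not firmware:
        problems.append("XLNX_VART_FIRMWARE is not set")
    elif not Path(firmware).is_file():
        problems.append(f"XLNX_VART_FIRMWARE points to a missing file: {firmware}")

    # find_spec locates a top-level module without importing it.
    for name in modules:
        if importlib.util.find_spec(name) is None:
            problems.append(f"Python module not found: {name}")

    return problems


if __name__ == "__main__":
    issues = check_setup(os.environ)
    for issue in issues:
        print("PROBLEM:", issue)
    print("Setup looks OK" if not issues else f"{len(issues)} problem(s) found")
```

Run it from the activated `ms-build-demo` environment, in the same prompt where `XLNX_VART_FIRMWARE` was set, since `set` only affects the current session.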

Note that Azure cloud setup is needed only for running the application with Azure cloud and it requires an Azure account and subscription. If you only need to run the application locally, you can skip the Azure cloud setup step.

- Install azure python sdk

pip install azure-ai-ml azure-identity

- Create your workspace here

- After the workspace is created, navigate to your workspace's dashboard and click Download config.json

- Create Azure endpoint.

python models/setup_azure_ep.py --prefix <custom_endpoint_prefix> --config <config_json_path> -m [retinaface/yolov5] --step endpoint

After this step you should see a newly generated azure_config.yaml file under models/<model>/ directory with your auth_key and endpoint uri. If uri is none, wait for a short while and run the same command again.

- Deploy the model

python models/setup_azure_ep.py --prefix <custom_endpoint_prefix> --config <config_json_path> -m [retinaface/yolov5] --step deployment

The cloud deployment will take a long time. Please go to your Azure portal to check the deployment status (Azure portal -> your workspace -> launch studio -> endpoints -> your_endpoint).

- Set the endpoint traffic. In your Azure portal's endpoint status page, click on Update traffic and set the traffic to 100
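Because the endpoint step may need to be repeated until the uri is populated, a small check of the generated file can save a trip to the portal. The sketch below is illustrative only: it assumes `azure_config.yaml` stores `auth_key` and `uri` as top-level keys, which the text above suggests but does not confirm, and the helper names `endpoint_ready` and `load_config` are hypothetical.

```python
import sys


def endpoint_ready(config):
    """Return True when both auth_key and uri look filled in."""

    def is_set(value):
        # Treat a YAML null, an empty string, and the literal text "none" as missing.
        return value is not None and str(value).strip().lower() not in ("", "none")

    return is_set(config.get("auth_key")) and is_set(config.get("uri"))


def load_config(path):
    # PyYAML (installed earlier as pyyaml) is imported here so that
    # endpoint_ready() stays usable without it.
    import yaml

    with open(path, "r", encoding="utf-8") as f:
        return yaml.safe_load(f) or {}


if __name__ == "__main__":
    if len(sys.argv) != 2:
        sys.exit("usage: python check_azure_config.py <path to azure_config.yaml>")
    if endpoint_ready(load_config(sys.argv[1])):
        print("Endpoint config looks complete.")
    else:
        print("auth_key or uri is missing; wait a moment and rerun the endpoint step.")
```

If the real file nests these values under another key, adjust the lookups in `endpoint_ready` accordingly.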

There are 3 ways to run the demo:

1. Use Qt GUI in Python

2. Use web browser

3. Use GUI exe

cd pyapp

# Install dependencies

pip install -r requirements.txt

# Run the app

python main.py

Both option 2 (web browser) and option 3 (GUI exe) require the server to be started first:

cd webapp

python server.py

For the web browser, open http://localhost:8998

For the GUI exe, run:

cd electron-quick-start

npm install electron-packager

npx electron-packager . ms-build-demo --platform=win32 --arch=x64 --overwrite

npm install

npm start
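Since both the browser and the GUI exe depend on the server, it can help to confirm that something is listening on port 8998 before opening either one. This is a minimal sketch using only the Python standard library; the function name `server_is_listening` is hypothetical, and the check only confirms that a TCP connection succeeds, not that the demo server is healthy.

```python
import socket


def server_is_listening(host="localhost", port=8998, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if server_is_listening():
        print("Server is reachable at http://localhost:8998")
    else:
        print("Nothing is listening on port 8998; start webapp/server.py first.")
```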

The demo uses quantized ONNX models. To learn how to generate a quantized ONNX model, please check out the quantization example in the Microsoft Olive GitHub repository.

The demo uses Yolov5 and Retinaface models. To learn how to run your own models with ONNXRuntime on Ryzen AI, please see the ONNXRuntime Vitis-AI EP page.
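As a rough illustration of what running your own model might look like, the sketch below creates an ONNX Runtime session that prefers the Vitis AI execution provider and falls back to the CPU. It is not the demo's code: the helper names `choose_providers` and `create_session` are hypothetical, and passing a `config_file` provider option pointing at a Vitis AI configuration JSON is an assumption to verify against the ONNXRuntime Vitis-AI EP page for your installed version.

```python
def choose_providers(available):
    """Prefer the Vitis AI EP when present and keep the CPU EP as a fallback when listed."""
    preferred = ["VitisAIExecutionProvider", "CPUExecutionProvider"]
    return [p for p in preferred if p in available]


def create_session(model_path, vaip_config_path):
    """Create an InferenceSession for a quantized ONNX model (paths are caller-supplied)."""
    # Imported lazily so choose_providers() can be used without onnxruntime installed.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    # One options dict per provider, in the same order as `providers`.
    # The "config_file" key is an assumption; check the EP documentation.
    options = [
        {"config_file": vaip_config_path} if p == "VitisAIExecutionProvider" else {}
        for p in providers
    ]
    return ort.InferenceSession(model_path, providers=providers, provider_options=options)
```

Calling `session.get_providers()` on the returned session shows which providers were actually registered, which is a quick way to see whether the model is running on the accelerator or on the CPU.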