This software project accompanies the research paper, Stabilizing Transformer Training by Preventing Attention Entropy Collapse, published at ICML 2023.

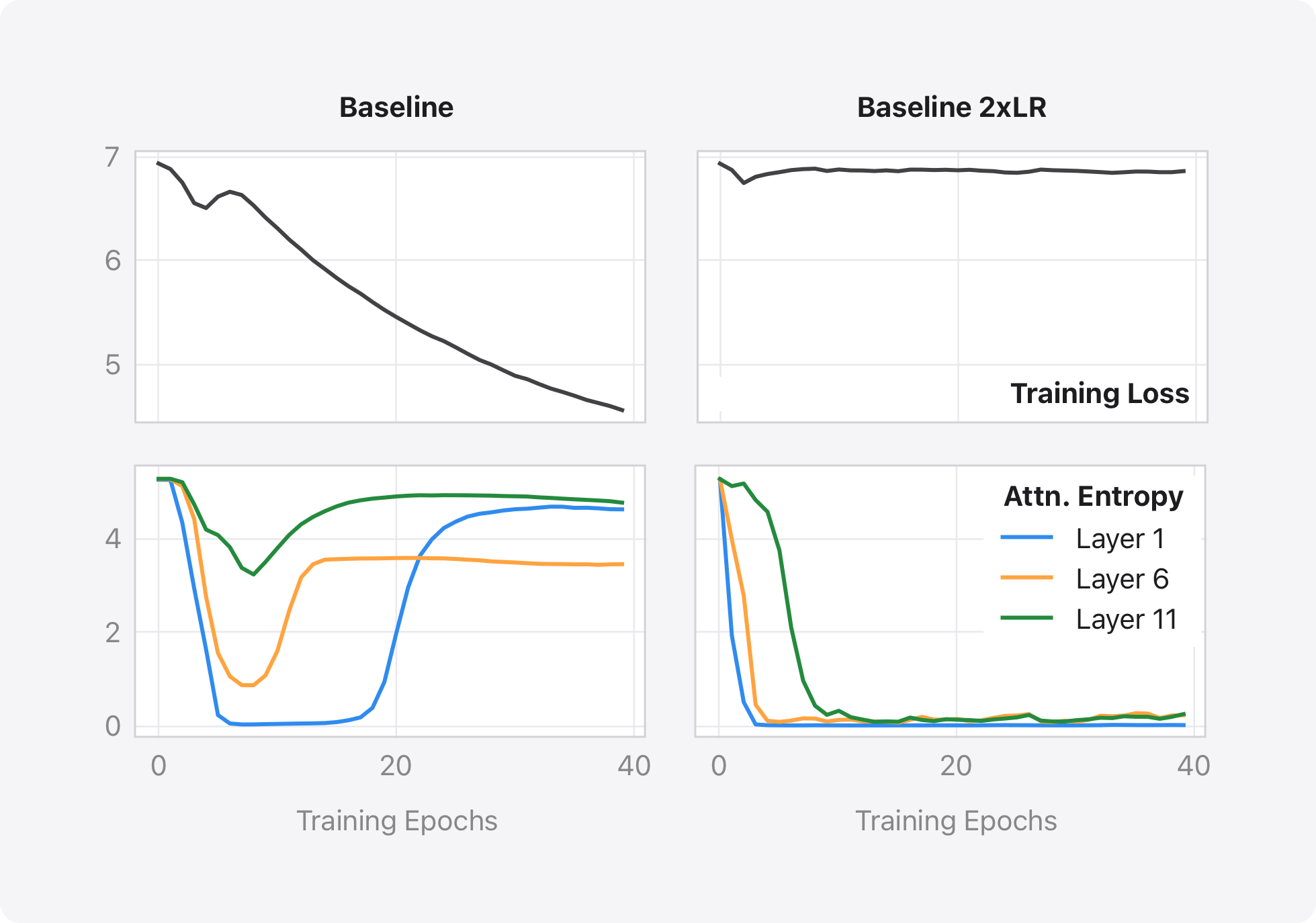

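Transformers are difficult to train. In this work, we study the training stability of Transformers through a novel lens we call Attention Entropy Collapse. Attention Entropy is defined as the quantity

$$\text{Ent}(A_i) = -\sum_{j=1}^{T} A_{i,j} \log(A_{i,j})$$

for an attention matrix $A \in \mathbb{R}^{T \times T}$, where $i$ indexes the rows (queries) and each row $A_i$ is a softmax distribution over the $T$ keys.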
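
As an illustration of this definition, the minimal NumPy sketch below computes the entropy $-\sum_j A_{i,j}\log A_{i,j}$ of every row of a single attention matrix from its pre-softmax logits. It is not code from this repository: the function names are hypothetical, and it assumes the natural logarithm and a row-wise softmax.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax; subtracting the row max keeps exp() from overflowing."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def attention_entropy(logits: np.ndarray) -> np.ndarray:
    """Return Ent(A_i) = -sum_j A_ij * log(A_ij) for each row i, where A = softmax(logits).

    Working with log-probabilities avoids evaluating log(0) when a softmax
    probability underflows to exactly zero.
    """
    log_a = log_softmax(logits)
    a = np.exp(log_a)
    return -(a * log_a).sum(axis=-1)


T = 4
uniform_logits = np.zeros((T, T))   # every query attends equally to all keys
peaked_logits = 50.0 * np.eye(T)    # every query attends almost only to itself

print(attention_entropy(uniform_logits))  # each row ≈ log(4) ≈ 1.386, the maximum for T = 4
print(attention_entropy(peaked_logits))   # each row ≈ 0: the attention has collapsed

# Sharpening the same logits (e.g. scaling up the query or key projection) lowers every row's entropy.
rng = np.random.default_rng(0)
logits = rng.normal(size=(T, T))
print(attention_entropy(logits) > attention_entropy(10.0 * logits))  # all True
```

The last comparison holds for any non-constant row: the entropy of $\mathrm{softmax}(\beta z)$ strictly decreases as $\beta > 0$ grows, which is why growing attention logits can drive the entropy toward zero.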

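We provide both theoretical and empirical analyses of the entropy collapse phenomenon, and propose a simple fix named $\sigma$Reparam, which reparameterizes all linear layers with spectral normalization and an additional learned scalar $\gamma$:

$$\hat{W} = \frac{\gamma}{\sigma(W)} W,$$

where $\sigma(W)$ is the spectral norm (largest singular value) of $W$.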
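
The reparameterization $\hat{W} = \frac{\gamma}{\sigma(W)} W$ of $\sigma$Reparam can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the repository's PyTorch or JAX code: $\sigma(W)$ is estimated by power iteration with a persistent vector `u`, as in standard spectral normalization, and the class name `SigmaReparamLinear`, its weight initialization, and `gamma_init=1.0` are hypothetical choices. NumPy has no autodiff, so only the forward computation of $\hat{W}$ is shown; in training, both $W$ and $\gamma$ would be learned.

```python
import numpy as np


def power_iteration(w: np.ndarray, u: np.ndarray, n_iters: int = 1):
    """Estimate sigma(W), the largest singular value of W, by power iteration.

    `u` approximates the top left-singular vector; returning the refined `u`
    lets the caller reuse it, so a single iteration per step stays cheap.
    """
    assert n_iters >= 1, "need at least one iteration to define v"
    for _ in range(n_iters):
        v = w.T @ u
        v = v / np.linalg.norm(v)
        u = w @ v
        u = u / np.linalg.norm(u)
    sigma = u @ w @ v  # equals ||W v||, never larger than the true spectral norm
    return sigma, u


class SigmaReparamLinear:
    """Hypothetical linear layer computing y = x @ W_hat.T with W_hat = gamma / sigma(W) * W."""

    def __init__(self, in_features: int, out_features: int, gamma_init: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w = rng.normal(0.0, in_features ** -0.5, size=(out_features, in_features))
        self.gamma = gamma_init  # the learned scalar of sigmaReparam (a plain float here)
        u = rng.normal(size=out_features)
        self.u = u / np.linalg.norm(u)  # persistent power-iteration state

    def effective_weight(self, n_iters: int = 1) -> np.ndarray:
        sigma, self.u = power_iteration(self.w, self.u, n_iters)
        return (self.gamma / sigma) * self.w

    def __call__(self, x: np.ndarray, n_iters: int = 1) -> np.ndarray:
        return x @ self.effective_weight(n_iters).T


layer = SigmaReparamLinear(in_features=8, out_features=4, gamma_init=1.0)
y = layer(np.ones((2, 8)))
print(y.shape)  # (2, 4)

# With a clear gap between singular values, power iteration recovers sigma(W),
# so the spectral norm of W_hat equals gamma.
layer.w = np.diag([5.0, 2.0, 1.0, 0.5])
layer.u = np.ones(4) / 2.0
w_hat = layer.effective_weight(n_iters=50)
print(np.linalg.norm(w_hat, 2))  # ≈ 1.0 (= gamma)
```

Because each power-iteration estimate never exceeds the true $\sigma(W)$, the sketch always gives $\|\hat{W}\|_2 \ge \gamma$, with the gap shrinking as `u` converges over successive calls. For comparison, PyTorch's built-in `torch.nn.utils.parametrizations.spectral_norm` performs the $W / \sigma(W)$ part with a similar power-iteration scheme but has no learned $\gamma$.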

We provide two reference implementations: one in PyTorch, applied to the Vision Transformer (ViT) setting, and one in JAX, applied to automatic speech recognition (ASR). Please refer to the vision and speech folders for details. The same PyTorch implementation was used for the language modeling (LM) and machine translation (MT) experiments.

    @inproceedings{zhai2023stabilizing,
      title={Stabilizing Transformer Training by Preventing Attention Entropy Collapse},
      author={Zhai, Shuangfei and Likhomanenko, Tatiana and Littwin, Etai and Busbridge, Dan and Ramapuram, Jason and Zhang, Yizhe and Gu, Jiatao and Susskind, Joshua M},
      booktitle={International Conference on Machine Learning},
      pages={40770--40803},
      year={2023},
      organization={PMLR}
    }