The project is currently a work in progress. See an older version of the website and PWA here.

Pitch video

Story behind the project

Project timeline

WIP / For future

License

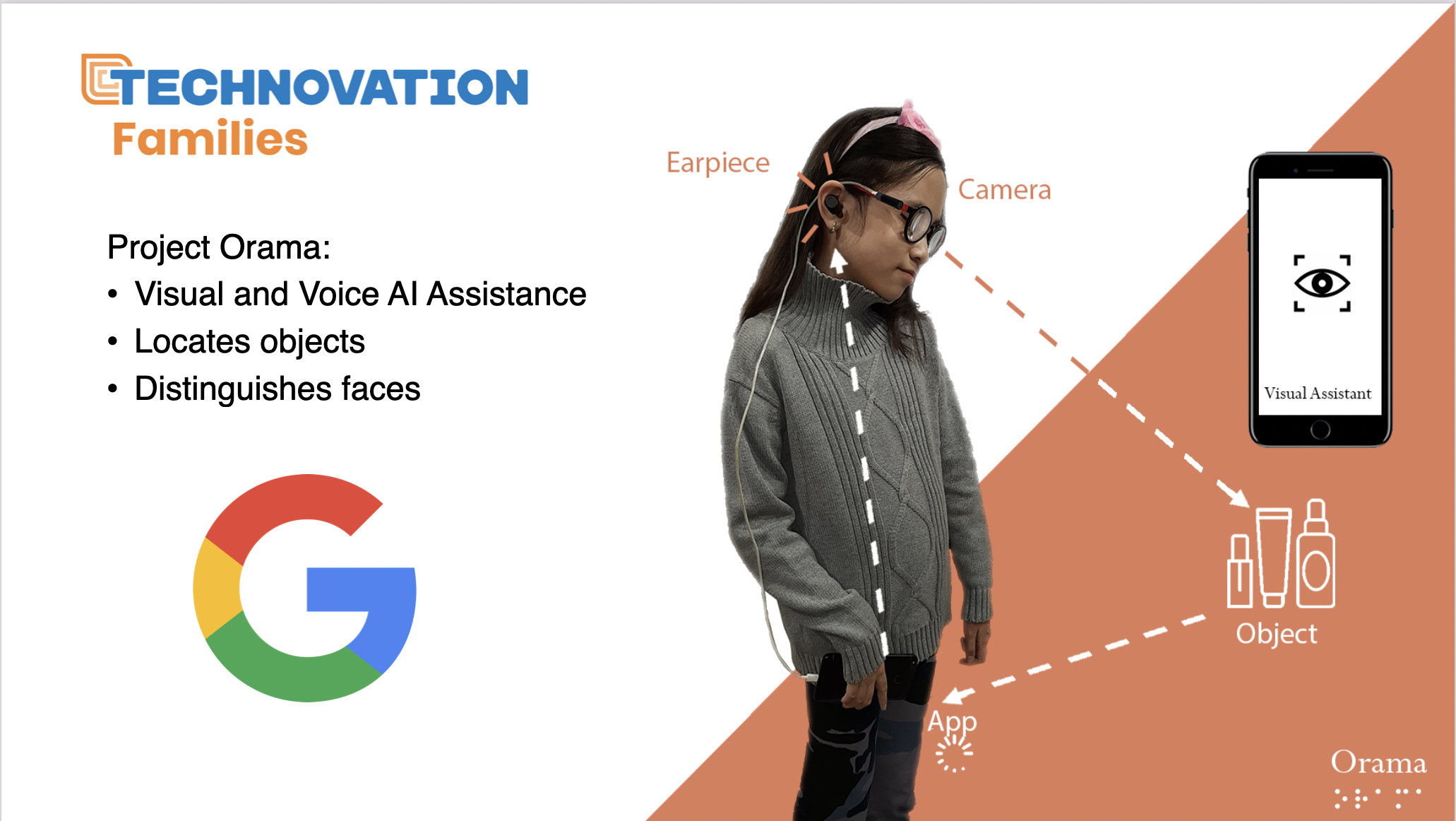

Orama Visual Assistant (from Greek: Όραμα, meaning "vision") is an app for visually impaired people that announces objects detected by the user's phone camera.

Our goal is to help visually impaired people become more independent.

Link to the pitch video on YouTube

We are the Nurmukhambetov family. There are seven of us: mom, dad, four sons, and a daughter. Three of the sons, seen in the photograph, developed this project.

“Our youngest daughter, Sofia, drove us to create this project. Sofia was born prematurely. From birth we fought for her life, and they tried as much as possible to save her eyesight. As a result of 12 operations, we were able to save Sofia's light perception. But this is not enough: to live a full-fledged life on her own, she needs outside help.”

‒ Damira Nurmukhambetova

- Nov 2019

- We began brainstorming ideas.

- Dec 2019

- First demo made using a KNN (k-nearest neighbors) algorithm on images.

- Apr 2020

- MobileNetV2 classification model trained with transfer learning (Teachable Machine).

- May 2020

- Third-place finalists at HackDay 2020. First funding for the project.

- Jul 2020

- Finalists across the Asian continent at Technovation Families.

- Jan 2022

- Changed from image classification to object detection (SSD MobileNetV2). Changed the task from naming everything in view to searching for a specific object.

- Added OCR functionality with Tesseract. It works, but performs poorly.

- Mar 2022

- Began working on a React Native version of the app.
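
The Jan 2022 switch from naming everything to searching for one object can be illustrated with a small filter over detector output. This is a minimal sketch, not the project's actual code: the `Detection` shape, the `findTarget` and `describeTarget` names, the 0.5 score threshold, and the left/ahead/right wording are all assumptions made for illustration.

```typescript
// Hypothetical shape of one detection; a real detector's output may differ.
interface Detection {
  label: string; // class name, e.g. "cup"
  score: number; // confidence in [0, 1]
  box: { x: number; y: number; width: number; height: number }; // pixels, origin top-left
}

// Return the highest-scoring detection whose label matches the target,
// ignoring detections below minScore. Returns undefined when nothing matches.
function findTarget(
  detections: Detection[],
  target: string,
  minScore = 0.5, // assumed threshold, chosen only for this example
): Detection | undefined {
  const wanted = target.trim().toLowerCase();
  let best: Detection | undefined;
  for (const d of detections) {
    if (d.label.toLowerCase() !== wanted || d.score < minScore) continue;
    if (best === undefined || d.score > best.score) best = d;
  }
  return best;
}

// Turn a match into a short phrase that a text-to-speech engine could read out.
// The frame is split into thirds by the horizontal center of the bounding box.
function describeTarget(
  match: Detection | undefined,
  target: string,
  frameWidth: number,
): string {
  if (match === undefined) return `${target} not found`;
  const centerX = match.box.x + match.box.width / 2;
  const third = frameWidth / 3;
  const side =
    centerX < third ? "on the left" : centerX > 2 * third ? "on the right" : "ahead";
  return `${target} ${side}`;
}

// Example frame with made-up detections in a 640-pixel-wide image.
const frame: Detection[] = [
  { label: "chair", score: 0.91, box: { x: 10, y: 40, width: 120, height: 200 } },
  { label: "cup", score: 0.42, box: { x: 300, y: 90, width: 40, height: 50 } },
  { label: "cup", score: 0.78, box: { x: 520, y: 100, width: 60, height: 70 } },
];
const message = describeTarget(findTarget(frame, "cup"), "cup", 640);
```

Announcing only the requested object, rather than every detection in the frame, keeps the spoken output short; whether the app also reports a direction is not stated in the timeline.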

- Improve the OCR reader.

- Change to React Native.

- Move processing to the cloud rather than running it on-device.

- Currency classification. Name the banknote seen by the camera.

- Facial recognition. Each user will have their own pool of people saved in the app for future facial recognition tasks.

- Image description. Describe what's in the image.

- 'Open with OramaVA' option on images to run OCR, facial recognition, or image description.

The entire codebase is MIT-licensed unless otherwise stated.