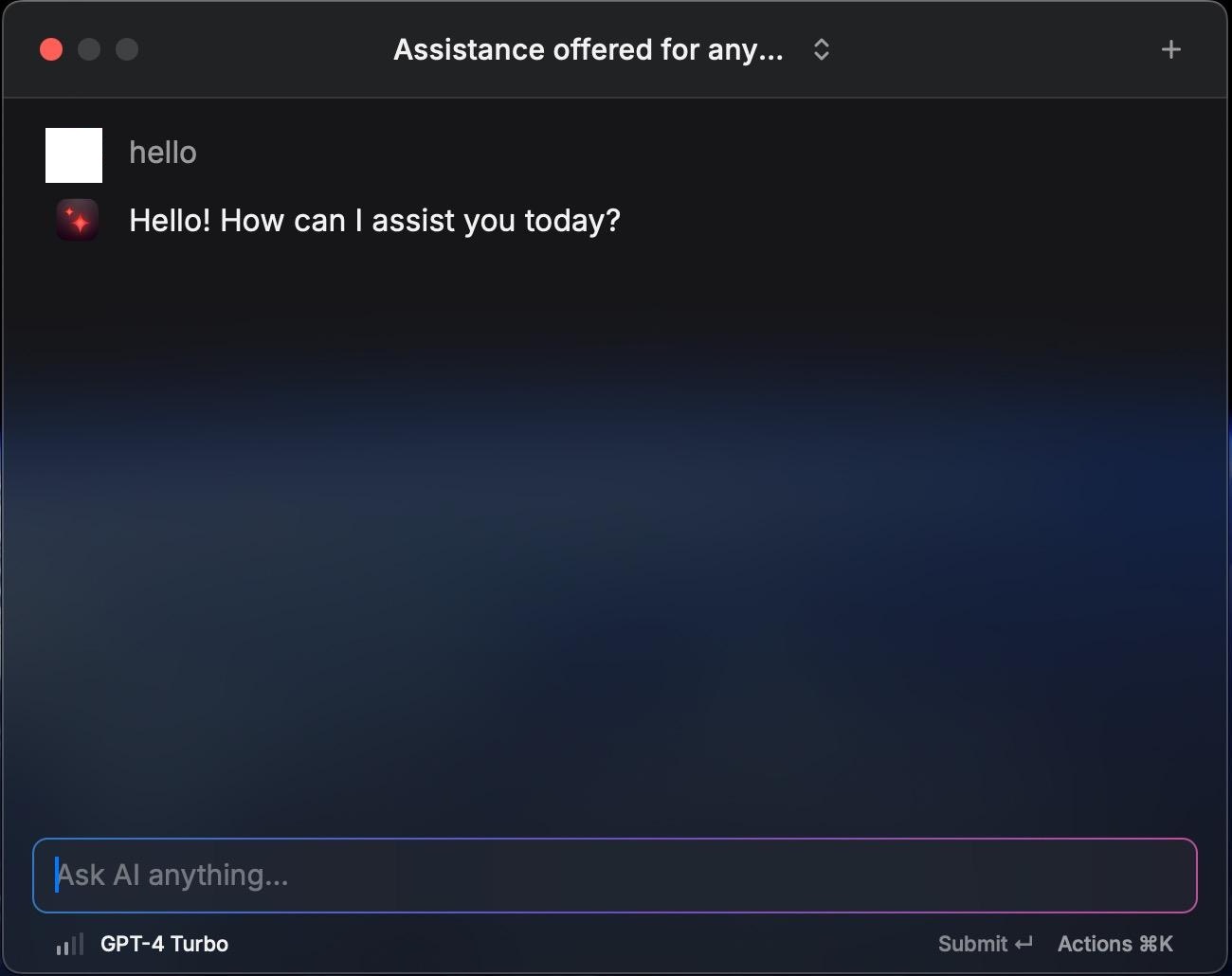

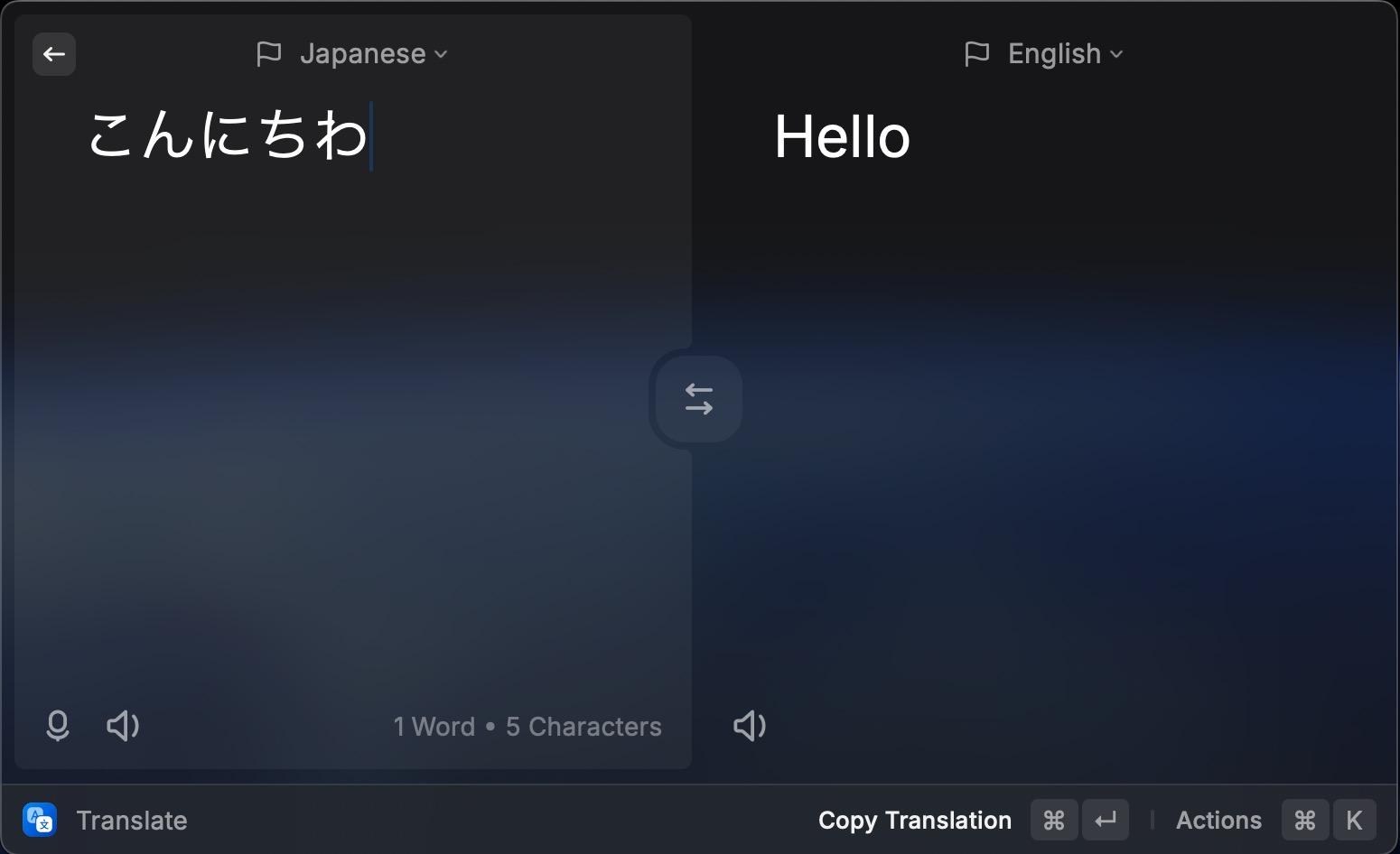

This is a simple Raycast AI API proxy that lets you use the Raycast AI app without a subscription. It forwards requests from Raycast to the OpenAI API, converts the format, and returns the response in real time.
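To illustrate the "converts the format" step, here is a minimal Python sketch that translates one OpenAI streaming line into a simplified event. The input side (`data:` lines carrying `choices[0].delta.content`, ended by `data: [DONE]`) follows the public OpenAI Chat Completions streaming format. The output shape `{"text": ...}` and the function name `convert_openai_sse_line` are assumptions for illustration only; they are not taken from this project's code or from Raycast's actual wire format.

```python
import json
from typing import Optional


def convert_openai_sse_line(line: str) -> Optional[str]:
    """Translate one OpenAI streaming line into a hypothetical client event.

    Returns None for lines that carry no text: blank lines, the [DONE]
    sentinel, and chunks without content (such as the initial role chunk).
    """
    line = line.strip()
    if not line.startswith("data:"):
        return None
    payload = line[len("data:"):].strip()
    if payload == "[DONE]":
        return None
    chunk = json.loads(payload)
    choices = chunk.get("choices") or []
    if not choices:
        return None
    text = (choices[0].get("delta") or {}).get("content")
    if not text:
        return None
    # Hypothetical output shape; the format Raycast expects may differ.
    return "data: " + json.dumps({"text": text}) + "\n\n"
```

A real proxy would apply a conversion like this to each line as it arrives, so the client sees text incrementally instead of waiting for the full response.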

| Model Name | Test Status | Environment Variables |
|---|---|---|
| openai | Tested | OPENAI_API_KEY |
| azure openai | Tested | AZURE_OPENAI_API_KEY, AZURE_DEPLOYMENT_ID, OPENAI_AZURE_ENDPOINT |
| gemini | Experimental | GOOGLE_API_KEY |
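Before starting the container, it can help to check which backend's variables are actually set. The sketch below is one possible way to do that in Python; the variable names are copied from the table above, while `REQUIRED_ENV` and `missing_vars` are hypothetical helpers, not part of this project.

```python
import os
from typing import Dict, List, Mapping

# Variable names copied from the table above; the lookup logic is illustrative.
REQUIRED_ENV: Dict[str, List[str]] = {
    "openai": ["OPENAI_API_KEY"],
    "azure openai": [
        "AZURE_OPENAI_API_KEY",
        "AZURE_DEPLOYMENT_ID",
        "OPENAI_AZURE_ENDPOINT",
    ],
    "gemini": ["GOOGLE_API_KEY"],
}


def missing_vars(backend: str, env: Mapping[str, str]) -> List[str]:
    """Return the variables for `backend` that are unset or empty in `env`."""
    return [name for name in REQUIRED_ENV[backend] if not env.get(name)]


if __name__ == "__main__":
    # Report, for each backend, whether its variables are present.
    for backend in REQUIRED_ENV:
        missing = missing_vars(backend, os.environ)
        status = "ready" if not missing else "missing " + ", ".join(missing)
        print(f"{backend}: {status}")
```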

- Generate certificates

      pip3 install mitmproxy
      python -c "$(curl -fsSL https://raw.githubusercontent.com/yufeikang/raycast_api_proxy/main/scripts/cert_gen.py)" --domain backend.raycast.com --out ./cert

- Start the service

      docker run --name raycast \
        -e OPENAI_API_KEY=$OPENAI_API_KEY \
        -p 443:443 \
        --dns 1.1.1.1 \
        -v $PWD/cert/:/data/cert \
        -e CERT_FILE=/data/cert/backend.raycast.com.cert.pem \
        -e CERT_KEY=/data/cert/backend.raycast.com.key.pem \
        -e LOG_LEVEL=INFO \
        -d \
        ghcr.io/yufeikang/raycast_api_proxy:main

- Change the OpenAI environment variables to use the Azure OpenAI API

See How to switch between OpenAI and Azure OpenAI endpoints with Python

    docker run --name raycast \
      -e OPENAI_API_KEY=$OPENAI_API_KEY \
      -e OPENAI_API_BASE=https://your-resource.openai.azure.com \
      -e OPENAI_API_VERSION=2023-05-15 \
      -e OPENAI_API_TYPE=azure \
      -e AZURE_DEPLOYMENT_ID=your-deployment-id \
      -p 443:443 \
      --dns 1.1.1.1 \
      -v $PWD/cert/:/data/cert \
      -e CERT_FILE=/data/cert/backend.raycast.com.cert.pem \
      -e CERT_KEY=/data/cert/backend.raycast.com.key.pem \
      -e LOG_LEVEL=INFO \
      -d \
      ghcr.io/yufeikang/raycast_api_proxy:main

Obtain your Google API Key and export it as `GOOGLE_API_KEY`.

Currently, only the `gemini-pro` model is supported.

    # git clone this repo and cd to it
    docker build -t raycast .
    docker run --name raycast \
      -e GOOGLE_API_KEY=$GOOGLE_API_KEY \
      -p 443:443 \
      --dns 1.1.1.1 \
      -v $PWD/cert/:/data/cert \
      -e CERT_FILE=/data/cert/backend.raycast.com.cert.pem \
      -e CERT_KEY=/data/cert/backend.raycast.com.key.pem \
      -e LOG_LEVEL=INFO \
      -d \
      raycast:latest

- Clone this repository

- Use `pdm install` to install dependencies
- Create an environment variable

      export OPENAI_API_KEY=<your openai api key>

- Use `./scripts/cert_gen.py --domain backend.raycast.com --out ./cert` to generate a self-signed certificate
- Start the service with `python ./app/main.py`
- Modify `/etc/hosts` to add the following lines:

      127.0.0.1 backend.raycast.com
      ::1 backend.raycast.com

  This points `backend.raycast.com` to localhost instead of the actual `backend.raycast.com` server. You can also add this record in your DNS server.

- Add the certificate trust to the system keychain

  Open the CA certificate in the `cert` folder, add it to the system keychain, and trust it. This is necessary because the Raycast AI Proxy uses a self-signed certificate, which must be trusted for the proxy to work properly.

  Note: On macOS with Apple Silicon, if applications hang when you manually add a CA certificate in Keychain Access, you can use the following terminal command instead:

      sudo security add-trusted-cert -d -p ssl -p basic -k /Library/Keychains/System.keychain ~/.mitmproxy/mitmproxy-ca-cert.pem
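Once the hosts entries and the certificate are in place, a quick check can confirm the setup. The following Python sketch is one way to do it, under stated assumptions: the CA file is at `~/.mitmproxy/mitmproxy-ca-cert.pem` (the path used in the `security` command above), the proxy listens on `127.0.0.1:443`, and the helper names `hosts_addresses` and `tls_handshake_ok` are hypothetical. It verifies the certificate against the CA file directly, so it does not test the keychain trust setting itself.

```python
import socket
import ssl
from pathlib import Path
from typing import Set

DOMAIN = "backend.raycast.com"
LOOPBACK = {"127.0.0.1", "::1"}


def hosts_addresses(hosts_text: str, domain: str = DOMAIN) -> Set[str]:
    """Return the addresses that a hosts-file body maps to `domain`."""
    found = set()
    for raw in hosts_text.splitlines():
        # Drop trailing comments, then split the entry on whitespace.
        fields = raw.split("#", 1)[0].split()
        if len(fields) >= 2 and domain in fields[1:]:
            found.add(fields[0])
    return found


def tls_handshake_ok(ca_file: str, domain: str = DOMAIN, port: int = 443) -> bool:
    """Connect to the local proxy and verify its certificate against `ca_file`."""
    try:
        # With cafile given, only this CA is trusted for the check.
        context = ssl.create_default_context(cafile=ca_file)
        with socket.create_connection(("127.0.0.1", port), timeout=5) as sock:
            with context.wrap_socket(sock, server_hostname=domain):
                return True
    except OSError:  # includes ssl.SSLError and a missing CA file
        return False


if __name__ == "__main__":
    mapped = hosts_addresses(Path("/etc/hosts").read_text())
    print("hosts entries:", sorted(mapped), "ok" if mapped & LOOPBACK else "missing")
    # Assumed CA location, taken from the `security` command above.
    ca = Path.home() / ".mitmproxy" / "mitmproxy-ca-cert.pem"
    print("TLS handshake:", "ok" if tls_handshake_ok(str(ca)) else "failed")
```

If the hosts check passes but the handshake fails, the likely causes are that the service is not running on port 443 or that the served certificate was signed by a different CA than the one being checked.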