This repository contains the data and code of the NoBIAS 2023 Data Challenge. The goal is to set an example and promote multidisciplinary participation in the discourse of technology development to address societal challenges.

In a nutshell, these were the 🗝️ ingredients for the challenge:

- 🔎 Evaluation: Use informative metrics to inspect specific model behaviours (e.g., adversarial benchmarks and test suites in the case of 🤬 hate speech detection).

- 📝 Data: Include a rich set of annotations to enable more nuanced considerations (e.g., the non-aggregated data, demographic information about the annotators, and information about the target groups in the hate speech texts).

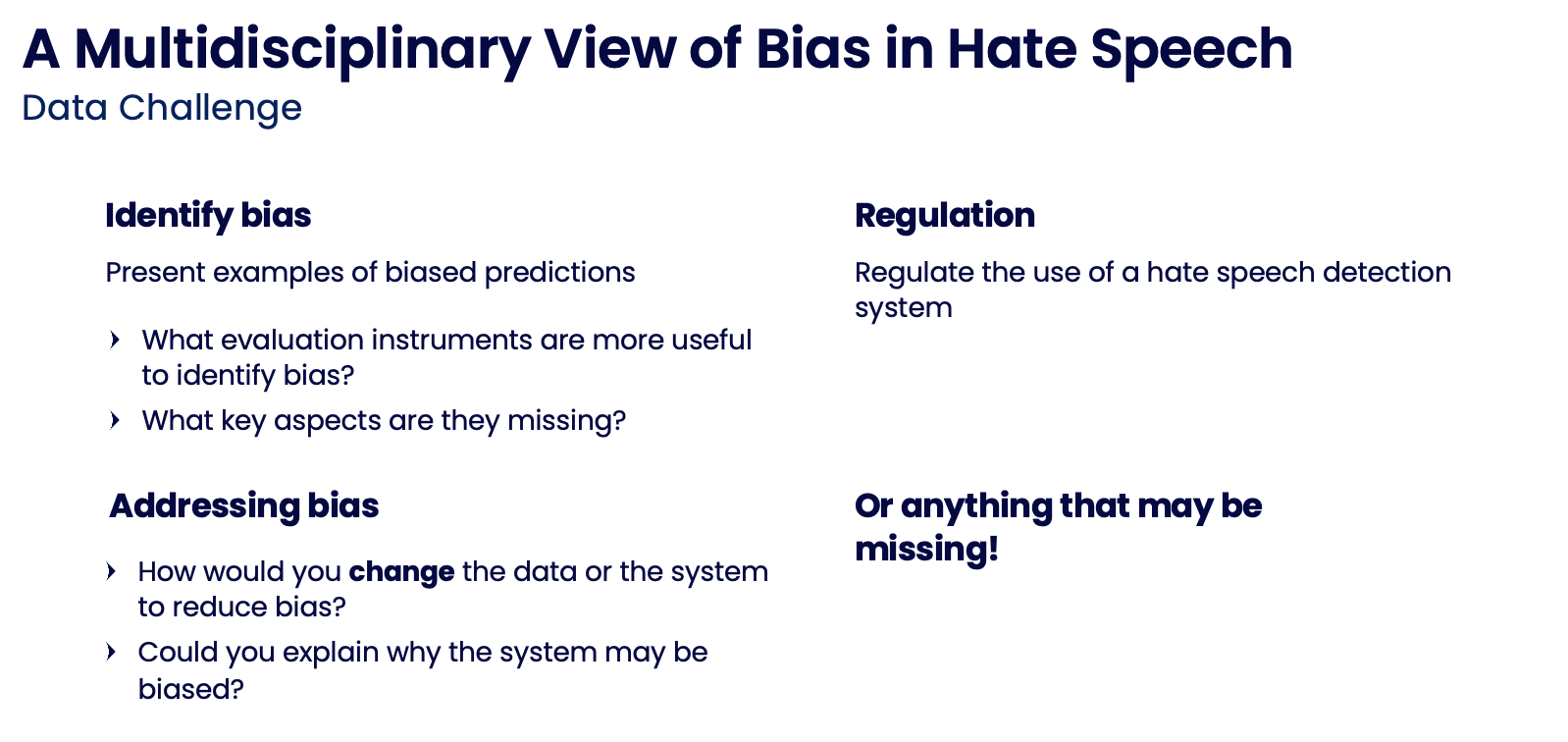

- 💡 Task: Enable open-ended feedback to promote the generation of knowledge. The figure below shows example prompts that were used to spark discussion and critical reflection on bias through this problem-solving scenario.

- The `preparation` folder contains scripts to create the data and evaluation files. Given the list of submissions, the evaluation notebook computes the challenge results.

- The `challenge` folder is the one shared with the participants. It includes the files required to run the challenge and supporting materials: a slide deck with detailed instructions, motivation, schedule for a one-day session, and a bibliography.

Here we provide more detailed instructions on what outputs each Jupyter Notebook generates:

- data: generates the files for participants to train the models (`challenge/data/[train_aggregate, train].csv`) and to generate adversarial tests (`preparation/Adversifier/mhs/[aaa_train, aaa_test].csv`).

- evaluation files: based on Docker, exports to the evaluation folder the static and dynamic TSV files for testing the models (`challenge/eval_files/[corr_a_to_a/corr_n_to_a,f1_o,flip_n_to_a/hashtag_check/quoting_a_to_n/static].tsv`).

- evaluation: runs the evaluation once all participants have submitted their predictions to the `preparation/Adversifier/datathon_results/predictions/team_{}` folder. It outputs (in the `datathon_results/answers` folder) a `results.tsv` file in each individual team folder, and a common `hatecheck.csv` file that includes each team's predictions as additional columns.
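Once the evaluation notebook has written its outputs, the per-team `results.tsv` files can be gathered for a side-by-side look. The following is a minimal sketch, not part of the repository: the helper name `collect_team_results` is hypothetical, and it assumes the team folders under the answers folder follow the same `team_*` naming as the predictions folder. No assumption is made about the columns; each row is returned as read.

```python
import csv
from pathlib import Path


def collect_team_results(answers_dir):
    """Read every team's results.tsv under answers_dir.

    Returns a dict mapping the team folder name to a list of row dicts.
    Assumption: team folders are named team_* (hypothetical layout).
    """
    results = {}
    for tsv_path in sorted(Path(answers_dir).glob("team_*/results.tsv")):
        with tsv_path.open(newline="", encoding="utf-8") as handle:
            # Tab-delimited file; the first line is taken as the header.
            rows = list(csv.DictReader(handle, delimiter="\t"))
        results[tsv_path.parent.name] = rows
    return results
```

Calling `collect_team_results("preparation/Adversifier/datathon_results/answers")` would then give one entry per team, assuming that is where the answers folder sits in your checkout.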

The challenge is designed to run in a Google Colab environment. Participants can get started with:

- Part one: basic data loading functions and features to get started.

- Part two: including a hate speech classifier and evaluation instruments to conduct an example-based analysis of bias in the proposed models.
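Before opening the notebooks, it can help to take a quick look at the training data. The sketch below is an illustration only and is not taken from the challenge notebooks: `preview_csv` is a hypothetical helper, it uses the standard library rather than whatever loading code the notebooks provide, and it makes no assumption about the column names.

```python
import csv
from itertools import islice


def preview_csv(path, n_rows=5):
    """Return the column names and the first n_rows records of a CSV file."""
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        # Only the first n_rows records are read, so large files stay cheap.
        rows = list(islice(reader, n_rows))
        return reader.fieldnames, rows
```

For example, `columns, rows = preview_csv("challenge/data/train.csv")` lists the available annotation columns and a handful of records to inspect.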

Preparation notebooks and scripts were run in a Conda environment with Python 3.10:

    $ conda create --name <env_name> --file conda-file.txt python=3.10
    $ conda activate <env_name>
    # Inside virtual environment to run notebooks with this conda environment
    (<env_name>) $ python -m ipykernel install --user --name=<env_name>
    (<env_name>) $ jupyter notebook

We published a paper with the considerations and results from this data challenge.

Reach out if you have any questions 📧, especially if you are looking to involve multiple disciplines in ⚙️ hands-on work on a problem!