SARIMANNX (Seasonal AutoRegressive Integrated Moving Average Neural Network with eXogenous regressors) is a time series forecasting model.

SARIMANNX is a simple generalization of SARIMAX that captures nonlinearities in time series, so it is better suited to forecasting a series that is nonlinear or a sum of linear and nonlinear components.

- Python >= (3.8)

- NumPy >= (1.20.3)

- SciPy >= (1.6.3)

- Clone this repo.

- Copy the "sarimannx" folder into your project.

Let $y_t$ be the observed value of the time series at time t. If the time series is nonstationary, one way to make it stationary is to compute the differences between consecutive observations, i.e. differencing:

$$y'_t = y_t - y_{t-1}$$

and seasonal differencing with season length s:

$$y'_t = y_t - y_{t-s}.$$

Suppose that after d-times differencing and D-times seasonal differencing with season length s, we have a stationary time series. Then it can be represented as

where

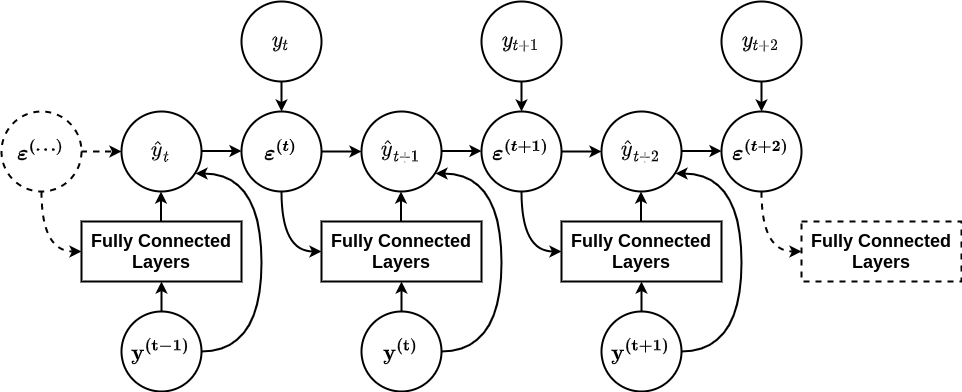
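The differencing step described above can be sketched in a few lines of NumPy. This is only an illustration under assumptions: the helper name `difference` and the toy series are made up for this example and are not part of the sarimannx package.

```python
import numpy as np

def difference(y, d=0, D=0, s=1):
    """Apply D seasonal differences of period s, then d ordinary differences."""
    z = np.asarray(y, dtype=float)
    for _ in range(D):
        z = z[s:] - z[:-s]   # seasonal difference: z_t - z_{t-s}
    for _ in range(d):
        z = z[1:] - z[:-1]   # ordinary difference: z_t - z_{t-1}
    return z

# Toy series: a linear trend plus a repeating pattern of period 4.
t = np.arange(12)
y = 2.0 * t + np.tile([0.0, 5.0, 1.0, 3.0], 3)

z = difference(y, d=1, D=1, s=4)
print(len(y), len(z))  # differencing consumes d + D*s = 5 observations
print(z)               # trend and seasonal pattern are both removed
```

Each ordinary difference shortens the series by one observation and each seasonal difference by s, so d + D*s observations are lost in total.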

SARIMANNX can be considered a recurrent neural network (RNN) with skip connections that produces an output at each time step and has recurrent connections from the outputs at the previous MAX_MA_LAG time steps to the input at the next time step. Part of the input passes through fully connected layers and part skips them, as illustrated in the figure below:

class sarimannx.sarimannx.SARIMANNX(order=(1, 0, 0, 1, 0), seasonal_order=(0, 0, 0, 0, 0, 0), ann_hidden_layer_sizes=10, ann_activation="tanh", trend="n", optimize_init_shocks=True, grad_clip_value=1e+140, max_grad_norm=10, logging_level=logging.WARNING, solver="L-BFGS-B", **solver_kwargs)

This model minimizes the squared loss (MSE) using L-BFGS-B or any other optimizer available in scipy.optimize.minimize.
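As a rough illustration of this kind of optimization, the sketch below fits a single AR(1) coefficient by minimizing the MSE with scipy.optimize.minimize and the L-BFGS-B method. The toy series, the `mse` function and the true coefficient 0.6 are assumptions made for this example; this is not the library's actual loss or training loop.

```python
import numpy as np
from scipy.optimize import minimize

rng = np.random.default_rng(0)

# Simulate a toy AR(1) series: y_t = 0.6 * y_{t-1} + noise.
y = np.zeros(300)
for i in range(1, len(y)):
    y[i] = 0.6 * y[i - 1] + rng.normal(scale=0.5)

def mse(params):
    """Mean squared one-step-ahead error of an AR(1) model."""
    phi = params[0]
    resid = y[1:] - phi * y[:-1]
    return np.mean(resid ** 2)

# The options dict is passed straight through to the chosen solver.
result = minimize(mse, x0=np.array([0.0]), method="L-BFGS-B",
                  options={"maxiter": 500})
phi_hat = result.x[0]
print(phi_hat, result.fun, result.nit)
```

The `options={"maxiter": 500}` argument is the same kind of solver keyword that the usage example in this README passes to the SARIMANNX constructor.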

- Parameters

- order : iterable, optional

- The (p, q, d, r, g) order of the model. All values must be integers. Default is (1, 0, 0, 1, 0).

- seasonal_order : iterable, optional

- The (P, Q, D, R, G, s) order of the seasonal component of the model. D and s must be integers, while P, Q, R and G may be either integers or iterables of integers. s is needed only for differencing, so all necessary seasonal lags must be specified explicitly. Default is no seasonal effect.

- ann_hidden_layer_sizes : iterable, optional

- The ith element represents the number of neurons in the ith hidden layer of the ANN part of the model. All values must be integers. Default is (10,).

- ann_activation : {"identity", "logistic", "tanh", "relu"}

- Activation function for the hidden layer in the ANN part of the model.

  - "identity", no-op activation, returns f(x) = x.

  - "logistic", the logistic sigmoid function, returns f(x) = 1 / (1 + exp(-x)).

  - "tanh", the hyperbolic tangent function, returns f(x) = tanh(x).

  - "relu", the rectified linear unit function, returns f(x) = max(0, x).

- trend : str {"n", "c", "t", "ct"} or iterable, optional

- Parameter controlling the deterministic trend polynomial Trend(t). Can be specified as a string, where "c" indicates a constant (i.e. a degree-zero component of the trend polynomial), "t" indicates a linear trend with time, and "ct" is both. Can also be specified as an iterable defining the powers of t included in the polynomial. For example, [1, 2, 0, 8] denotes a*t + b*t^2 + c + d*t^8. Default is to not include a trend component.

- optimize_init_shocks : bool, optional

- Whether to optimize the first MAX_MA_LAG shocks as additional model parameters or to assume they are zero. If the sample size is relatively small, optimizing the initial shocks is preferable. Default is True.

- grad_clip_value : int, optional

- Maximum allowed absolute value of the gradients. The gradients are clipped to the range [-grad_clip_value, grad_clip_value]. Clipping by value is used for intermediate gradients, where clipping by norm is not applicable. Clipping is needed to prevent gradient explosion. Default is 1e+140.

- max_grad_norm : int, optional

- Maximum allowed norm of the final gradient. If the norm of the final gradient is greater, the gradient is normalized and multiplied by max_grad_norm. Clipping by norm is used for the final gradient to prevent its explosion. Default is 10.

- logging_level : int, optional

- To enable logging, first initialize a logging config and then choose an appropriate logging level for the training progress messages. Without a config, no messages are displayed at any logging level. (Do not confuse these with warning messages from the warnings library, which are simply printed to stderr. To disable those, use warnings.filterwarnings("ignore"), for example.) For more details, see the logging HOWTO. Default is 30 (logging.WARNING).

- solver : str, optional

- The solver for weights optimization. For a full list of available solvers, see scipy.optimize.minimize. Default is "L-BFGS-B".

- **solver_kwargs

- Additional keyword arguments for the solver (for example, the maximum number of iterations or the optimization tolerance). For more details, see scipy.optimize.minimize.
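The two clipping schemes behind grad_clip_value and max_grad_norm can be sketched as follows. The function names are hypothetical and this is not the library's internal code; it only shows the arithmetic that the parameter descriptions imply.

```python
import numpy as np

def clip_by_value(grad, clip_value):
    """Clamp every gradient component into [-clip_value, clip_value]."""
    return np.clip(grad, -clip_value, clip_value)

def clip_by_norm(grad, max_norm):
    """Rescale the gradient to norm max_norm if its norm exceeds max_norm."""
    norm = np.linalg.norm(grad)
    if norm > max_norm:
        return grad / norm * max_norm
    return grad

g = np.array([30.0, -40.0])                # Euclidean norm is 50

by_value = clip_by_value(g, 35.0)          # only the second component is clamped
by_norm = clip_by_norm(g, 10.0)            # direction kept, norm reduced to 10
print(by_value, by_norm, np.linalg.norm(by_norm))
```

Clipping by value can change the direction of the gradient, while clipping by norm only shortens it.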

- Attributes


- loss_ : float

- The final loss computed with the loss function.

- n_iter_ : int

- The number of iterations the solver has run.

- trend_coefs : numpy ndarray

- Trend polynomial coefficients.

- sarima_coefs : numpy ndarray

- Weights vector of the SARIMA part of the model. The first p coefficients correspond to the AR part, the next len(P) to the seasonal AR part, the next q to the MA part and the last len(Q) to the seasonal MA part.

- ann_coefs : list of numpy ndarrays

- Weights matrices of ANN part of the model. The ith element in the list represents the weight matrix corresponding to layer i.

- ann_intercepts : list of numpy ndarrays

- Bias vectors of ANN part of the model. The ith element in the list represents the bias vector corresponding to layer i + 1. Output layer has no bias.

- init_shocks : numpy ndarray

- The first MAX_MA_LAG shocks. If optimize_init_shocks is False, they are zeros after training; otherwise the initial shocks are optimized like the other model weights.

- max_ma_lag : int

- Highest moving average lag in the model.

- max_ar_lag : int

- Highest autoregressive lag in the model.

- num_exogs : int

- Number of exogenous regressors used by the model. Equal to X.shape[1] or len(sarima_exogs)+len(ann_exogs).

- train_score : float

- r2_score on training data after training.

- train_std : float

- Standard deviation of model residuals after training.
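To make the link between the trend parameter and the trend_coefs attribute concrete, here is a minimal sketch that evaluates a trend polynomial from a list of powers. The helper names are hypothetical, and two things are assumptions rather than documented behaviour: that trend_coefs holds one coefficient per listed power in the same order, and that "ct" maps to the powers [0, 1].

```python
import numpy as np

def trend_powers(trend):
    """Map a trend specification to the powers of t it includes."""
    named = {"n": [], "c": [0], "t": [1], "ct": [0, 1]}  # assumed ordering
    if isinstance(trend, str):
        return named[trend]
    return list(trend)

def eval_trend(t, powers, coefs):
    """Evaluate sum_k coefs[k] * t ** powers[k]."""
    t = np.asarray(t, dtype=float)
    return sum(c * t ** p for c, p in zip(coefs, powers))

# [1, 2, 0, 8] denotes a*t + b*t^2 + c + d*t^8.
powers = trend_powers([1, 2, 0, 8])
coefs = [2.0, 0.5, 1.0, 0.0]              # a, b, c, d
value = eval_trend(3, powers, coefs)      # 2*3 + 0.5*9 + 1 + 0
print(value)
```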

Methods

- fit(y[, X, sarima_exogs, ...]): Fits the model to time series data y.

- get_params(): Returns all trained model parameters and initial shocks.

- predict([y, X, ...]): Makes forecasts from the fitted model.

- set_params(sarima_coefs, ...): Sets all trainable model parameters and initial shocks.

fit(y, X=None, sarima_exogs=slice(None), ann_exogs=slice(None), dtype=float, init_weights_shocks=True, return_preds_resids=False)

Fits the model to time series data y.

- Parameters

- y : ndarray of shape (nobs,)

- Training time series data.

- X : ndarray, optional

- Matrix of exogenous regressors. If provided, it must have shape (nobs, k), where k is the number of regressors. Default is no exogenous regressors in the model.

- sarima_exogs : iterable, optional

- Regressors to include in the SARIMA input, specified as column indices of the X matrix. Default is to include all provided regressors.

- ann_exogs : iterable, optional

- Regressors to include in the ANN input, specified as column indices of the X matrix. Default is to include all provided regressors.

- dtype : dtype, optional

- Data type to which the input data y and X are converted before training. Default is numpy float64.

- init_weights_shocks : bool, optional

- Whether to initialize all trainable model parameters. If this is the first call to fit and the parameters were not specified via set_params, init_weights_shocks must be set to True. Default is True.

- return_preds_resids : bool, optional

- Whether to return all one-step predictions along y and the corresponding residuals together with the trained model. If True, returns self and a python dictionary. Default is False.

- Returns

- self : the trained SARIMANNX model.

- python dictionary

- A python dict with keys "predictions" and "residuals" and the corresponding numpy ndarrays. Returned only if return_preds_resids was set to True.
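The column selection performed by sarima_exogs and ann_exogs can be pictured with plain NumPy indexing. The sketch below only mimics the selection on a toy matrix; how the library consumes the selected columns internally is not shown here, and the variable names `X_sarima` and `X_ann` are made up for the example.

```python
import numpy as np

# Toy regressor matrix with nobs=5 rows and k=4 columns.
X = np.arange(20, dtype=float).reshape(5, 4)

sarima_exogs = [0, 2]      # only columns 0 and 2 go to the SARIMA input
ann_exogs = slice(None)    # the default: every column goes to the ANN input

X_sarima = X[:, sarima_exogs]
X_ann = X[:, ann_exogs]

print(X_sarima.shape)      # two selected regressors
print(X_ann.shape)         # all four regressors
```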

get_params()

Returns all trained model parameters and initial shocks.

- Returns

- python dictionary

- All trained model parameters and initial shocks, stored under the keys "sarima_coefs", "trend_coefs", "ann_coefs", "ann_intercepts" and "init_shocks".
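The "sarima_coefs" entry is a flat vector whose layout is described under Attributes (AR, seasonal AR, MA, seasonal MA). A small helper like the one below could split it back into named blocks. The helper is hypothetical, and it assumes that P and Q are given as iterables of lags and that the vector contains only these four blocks, which may not hold for a model with exogenous regressors.

```python
import numpy as np

def split_sarima_coefs(sarima_coefs, p, P, q, Q):
    """Split a flat coefficient vector into AR, seasonal AR, MA, seasonal MA blocks."""
    sizes = [p, len(P), q, len(Q)]
    coefs = np.asarray(sarima_coefs, dtype=float)
    if len(coefs) != sum(sizes):
        raise ValueError("vector length does not match the given orders")
    # Cumulative sizes give the indices at which one block ends and the next begins.
    bounds = np.cumsum(sizes)[:-1]
    ar, sar, ma, sma = np.split(coefs, bounds)
    return {"ar": ar, "seasonal_ar": sar, "ma": ma, "seasonal_ma": sma}

# Made-up coefficients for p=2, one seasonal AR lag, q=1, one seasonal MA lag.
parts = split_sarima_coefs([0.5, -0.2, 0.3, 0.1, 0.05], p=2, P=[12], q=1, Q=[12])
for name, block in parts.items():
    print(name, block)
```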

predict(y=None, X=None, input_shocks=None, t=None, horizon=1, intervals=False, return_last_input_shocks=False)

Makes forecasts from fitted model.

Suppose that the last time moment in the training data was T.

By default, predict returns the prediction of the value at time T+horizon. If the model has exogenous regressors, you must provide an exogenous regressors matrix X of shape (horizon, k), where k is the number of exogenous regressors.

If new data y with N observations is provided, predict returns the prediction of the value at time T+N+horizon. All shocks corresponding to the new data will be calculated. Again, if the model has exogenous regressors, you must provide the matrix X, now of shape (N+horizon, k).

You can also simply substitute new data y, shocks, a time moment t and exogenous regressors X into the model and get the prediction of the value at time t+horizon. In that case, make sure that y has at least d+D*s+max_ar_lag observations, the shocks have at least max_ma_lag values and the exogenous regressors matrix has shape (horizon, k).

- Parameters

- y : ndarray, optional

- New time series data.

- X : ndarray, optional

- Matrix of exogenous regressors.

- input_shocks : ndarray, optional

- Input shocks. Must be in reverse time order, i.e. the shock at t-1 at index 0, the shock at t-2 at index 1, and so on.

- t : int, optional

- Forecasting origin.

- horizon : float, optional

- Forecasting horizon.

- intervals : bool, optional

- Whether to return prediction intervals. For now, intervals are calculated correctly only for one-step predictions (i.e. when horizon is 1).

- return_last_input_shocks : bool, optional

- Whether to return the last calculated input shocks. Useful when you later want to substitute inputs directly into the model to get a prediction.

- Returns

- pred : float

- Model prediction of value at t+horizon time moment.

- pred_intervals : tuple

- Returns prediction intervals if intervals was True.

- last_input_shocks : ndarray

- Returns the last input shocks in reverse time order, if return_last_input_shocks was True.
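When substituting inputs directly, the two requirements that are easiest to get wrong are the minimum length of y and the reverse time order of input_shocks. The helpers below are hypothetical and only prepare the inputs; they do not call predict.

```python
import numpy as np

def min_observations(d, D, s, max_ar_lag):
    """Minimum length of new data y: d + D*s + max_ar_lag."""
    return d + D * s + max_ar_lag

def to_reverse_time_order(shocks_chronological, max_ma_lag):
    """Keep the latest max_ma_lag shocks, most recent shock at index 0."""
    shocks = np.asarray(shocks_chronological, dtype=float)
    if len(shocks) < max_ma_lag:
        raise ValueError("need at least max_ma_lag shocks")
    return shocks[::-1][:max_ma_lag]

# Oldest shock first; 0.4 is the shock at t-1.
shocks = [0.1, -0.3, 0.2, 0.4]
input_shocks = to_reverse_time_order(shocks, max_ma_lag=3)
needed = min_observations(d=1, D=1, s=12, max_ar_lag=2)

print(input_shocks)   # shock at t-1 first, then t-2, then t-3
print(needed)         # 1 + 1*12 + 2 observations
```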

set_params(sarima_coefs, ann_coefs, ann_intercepts, trend_coefs, init_shocks)

Sets all trainable model parameters and initial shocks.

- Parameters

- sarima_coefs : ndarray

- Weights vector of SARIMA part of the model.

- ann_coefs : list of ndarrays

- Weights matrices of ANN part of the model. The ith element in the list represents the weight matrix corresponding to layer i.

- ann_intercepts : list of ndarrays

- Bias vectors of ANN part of the model. The ith element in the list represents the bias vector corresponding to layer i + 1. Output layer has no bias.

- trend_coefs : ndarray

- Coefficients of trend polynomial.

- init_shocks : ndarray

- The first MAX_MA_LAG shocks.
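The pairing of ann_coefs and ann_intercepts described above (one bias vector per hidden layer, none for the output layer) can be illustrated with a tiny forward pass. This is a sketch under assumptions, not the library's code: it assumes weight matrices of shape (n_inputs, n_outputs), a linear output layer, and a hypothetical function name `ann_forward`.

```python
import numpy as np

def ann_forward(x, ann_coefs, ann_intercepts, activation=np.tanh):
    """Forward pass: hidden layers use bias and activation, the output layer has no bias."""
    h = np.asarray(x, dtype=float)
    # Each hidden-layer weight matrix is paired with one bias vector.
    for W, b in zip(ann_coefs[:-1], ann_intercepts):
        h = activation(h @ W + b)
    # Output layer: last weight matrix only (assumed linear, no bias).
    return h @ ann_coefs[-1]

# One hidden layer with 2 neurons on 3 inputs, then a single output.
ann_coefs = [np.full((3, 2), 0.1), np.array([[1.0], [-1.0]])]
ann_intercepts = [np.array([0.0, 0.5])]

out = ann_forward([1.0, 2.0, 3.0], ann_coefs, ann_intercepts)
print(out.shape, out)
```

With one hidden layer there are two weight matrices but only one bias vector, which matches the "output layer has no bias" convention.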

>>> import sys

>>> import os

>>> import numpy as np

>>> import warnings

>>> warnings.filterwarnings("ignore")

>>> module_path = os.path.join(os.path.abspath("."), "sarimannx")

>>> if module_path not in sys.path:

...     sys.path.append(module_path)

...

>>> from sarimannx import SARIMANNX

>>> np.random.seed(888)

>>> y = np.random.normal(1., 1., size=(200,))

>>> model = SARIMANNX(options={"maxiter": 500}).fit(y)

>>> model.predict()

1.093410687884555

For more examples, see the "examples" folder.

- R. Hyndman, G. Athanasopoulos, Forecasting: principles and practice, 3rd ed. Otexts, 2021, p. 442. Available: https://otexts.com/fpp3/

- I. Goodfellow, Y. Bengio, A. Courville, Deep Learning. The MIT Press, 2016, p. 800. Available: https://www.deeplearningbook.org/