An improved LBFGS optimizer for PyTorch is provided with the code. Further details are given in this paper. Also see this introduction.

Examples of use:

- Federated learning: see these examples.
- Calibration and other inverse problems: see radio interferometric calibration.
- K-harmonic means clustering: see LOFAR system health management.
- Other problems: see this example.

Files included are:

- `lbfgsnew.py`: New LBFGS optimizer
- `lbfgs.py`: Symlink to `lbfgsnew.py`
- `cifar10_resnet.py`: CIFAR10 ResNet training example (see figures below)

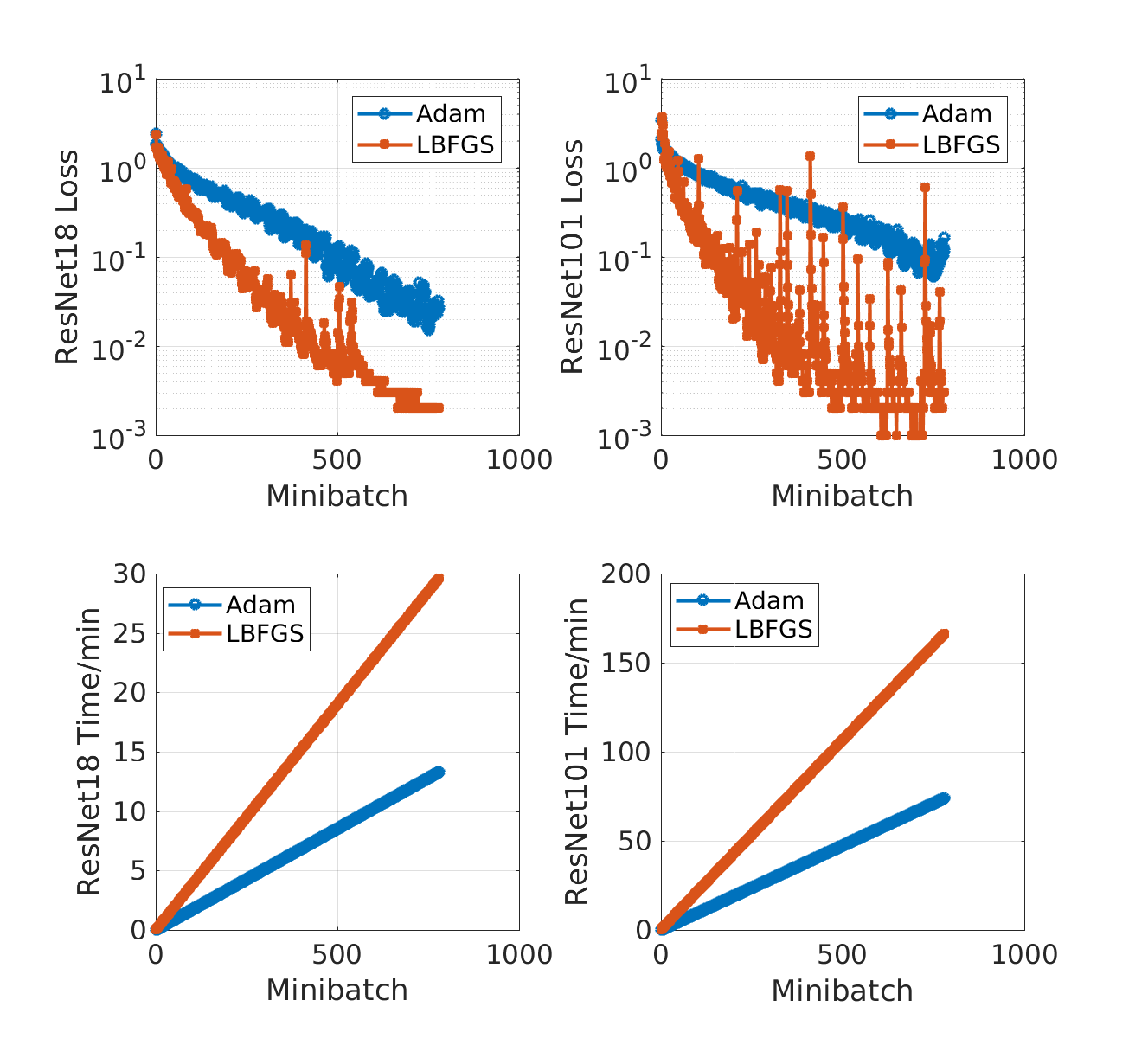

The above figure shows the training loss and training time using Colab with one GPU. ResNet18 and ResNet101 models are used. Test accuracy after 20 epochs: 84% for LBFGS and 82% for Adam.

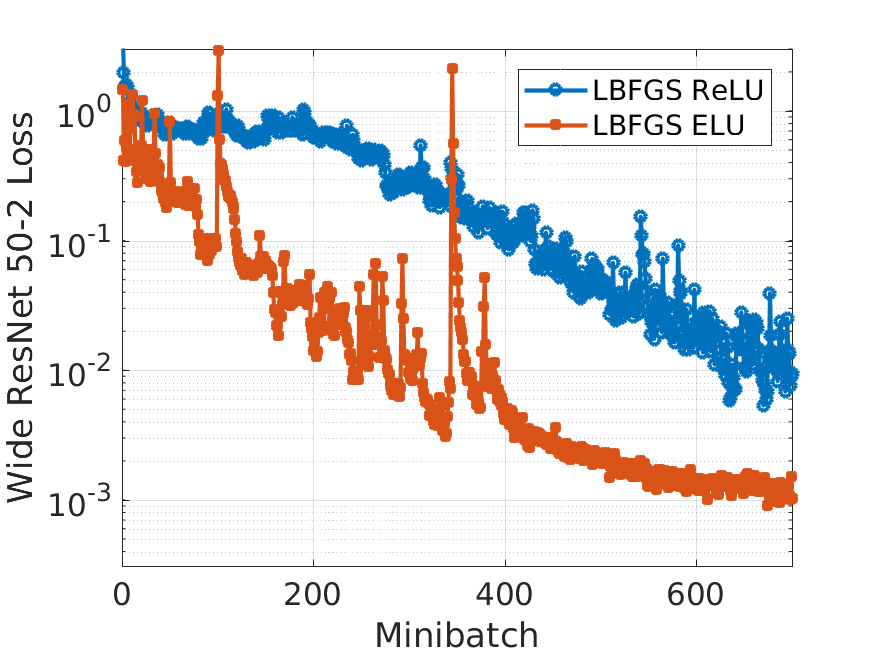

Changing the activation from the commonly used ReLU to an alternative such as ELU gives faster convergence with LBFGS, as seen in the figure below.

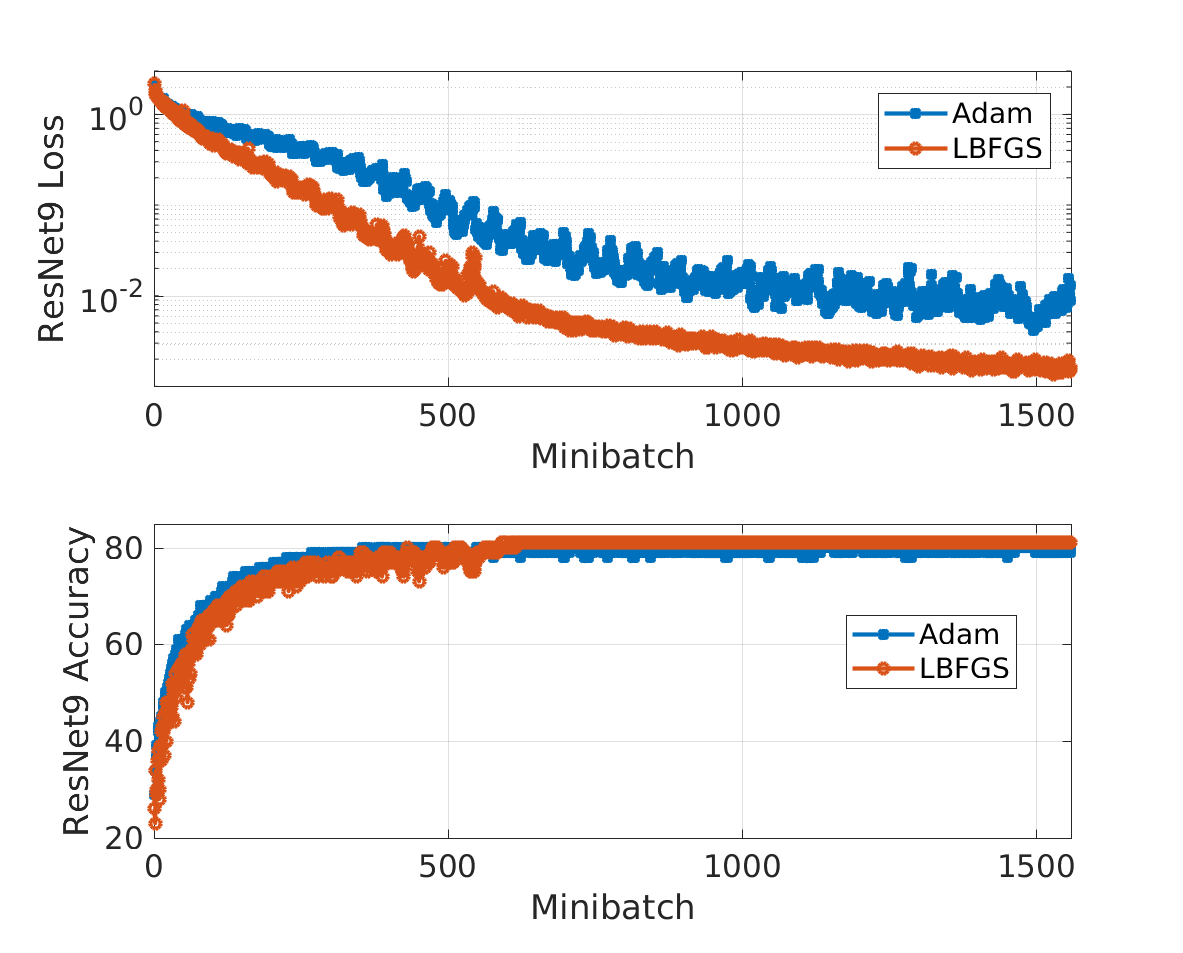

Here is a comparison of both training error and test accuracy for ResNet9 using LBFGS and Adam.

Example usage in full batch mode:

    from lbfgsnew import LBFGSNew
    optimizer = LBFGSNew(model.parameters(), history_size=7, max_iter=100, line_search_fn=True, batch_mode=False)
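LBFGS-type optimizers in PyTorch re-evaluate the cost several times per step, so `step()` is given a closure rather than being called after a single `backward()`. The following is a minimal sketch of a full batch step on a hypothetical toy regression problem (the data, model and variable names are illustrative and not part of this repository). So that the snippet also runs where `lbfgsnew.py` is not on the path, it falls back to the stock `torch.optim.LBFGS`, which is only a stand-in and takes a string for `line_search_fn`.

```python
import torch

try:
    from lbfgsnew import LBFGSNew  # optimizer shipped with this repository
except ImportError:
    LBFGSNew = None  # assumption: fall back to stock PyTorch LBFGS for a standalone demo

torch.manual_seed(0)

# Hypothetical toy problem: fit y = 2x + 1 with a single linear layer.
x = torch.linspace(-1.0, 1.0, 64).unsqueeze(1)
y = 2.0 * x + 1.0
model = torch.nn.Linear(1, 1)
criterion = torch.nn.MSELoss()

if LBFGSNew is not None:
    optimizer = LBFGSNew(model.parameters(), history_size=7, max_iter=100,
                         line_search_fn=True, batch_mode=False)
else:
    # Stand-in only: the stock optimizer has no batch_mode option.
    optimizer = torch.optim.LBFGS(model.parameters(), history_size=7,
                                  max_iter=100, line_search_fn="strong_wolfe")


def closure():
    # The optimizer may call this several times within one step.
    if torch.is_grad_enabled():
        optimizer.zero_grad()
    loss = criterion(model(x), y)
    if loss.requires_grad:
        loss.backward()
    return loss


initial_loss = criterion(model(x), y).item()
optimizer.step(closure)  # one step runs up to max_iter inner iterations
final_loss = criterion(model(x), y).item()
print(f"loss before: {initial_loss:.3e}, after: {final_loss:.3e}")
```

In full batch mode the whole dataset is seen by the closure, so a single call to `step()` with a large `max_iter` can do most of the work.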

Example usage in minibatch mode:

    from lbfgsnew import LBFGSNew
    optimizer = LBFGSNew(model.parameters(), history_size=7, max_iter=2, line_search_fn=True, batch_mode=True)
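In minibatch mode the optimizer is typically stepped once per batch with a small `max_iter`, and the closure is rebuilt for each batch. The loop below is a sketch under the same assumptions as a standalone demo would need: the toy data and the `DataLoader` settings are hypothetical, and the fallback to `torch.optim.LBFGS` (which has no `batch_mode`) is only there so the snippet runs without `lbfgsnew.py`.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

try:
    from lbfgsnew import LBFGSNew  # optimizer shipped with this repository
except ImportError:
    LBFGSNew = None  # assumption: stock PyTorch LBFGS used as a stand-in

torch.manual_seed(0)

# Hypothetical toy problem: fit y = 2x + 1, visited in batches of 16 samples.
x = torch.linspace(-1.0, 1.0, 64).unsqueeze(1)
y = 2.0 * x + 1.0
loader = DataLoader(TensorDataset(x, y), batch_size=16, shuffle=True)
model = torch.nn.Linear(1, 1)
criterion = torch.nn.MSELoss()

if LBFGSNew is not None:
    optimizer = LBFGSNew(model.parameters(), history_size=7, max_iter=2,
                         line_search_fn=True, batch_mode=True)
else:
    optimizer = torch.optim.LBFGS(model.parameters(), history_size=7,
                                  max_iter=2, line_search_fn="strong_wolfe")

initial_loss = criterion(model(x), y).item()

for epoch in range(5):
    for inputs, targets in loader:

        def closure():
            # Evaluates the cost on the current minibatch only.
            if torch.is_grad_enabled():
                optimizer.zero_grad()
            loss = criterion(model(inputs), targets)
            if loss.requires_grad:
                loss.backward()
            return loss

        optimizer.step(closure)

final_loss = criterion(model(x), y).item()
print(f"loss before: {initial_loss:.3e}, after: {final_loss:.3e}")
```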

Note: for certain problems, the gradient can also be part of the cost, for example in TV (total variation) regularization. In such situations, pass the option cost_use_gradient=True to LBFGSNew(). This increases the computational cost, so use it only when needed.
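As an illustration of a cost that contains a gradient, the sketch below adds a smoothed TV-like penalty on the derivative of the model output with respect to its input, computed with `torch.autograd.grad`. This is one possible reading of the note above, not the repository's own example: the toy data, the network, the penalty weight and the smoothing constant are all hypothetical. The closure enables gradients explicitly so that it works regardless of how the optimizer calls it, and the fallback to `torch.optim.LBFGS` is again only a stand-in.

```python
import torch

try:
    from lbfgsnew import LBFGSNew  # optimizer shipped with this repository
except ImportError:
    LBFGSNew = None  # assumption: stock PyTorch LBFGS used as a stand-in

torch.manual_seed(0)

# Hypothetical toy problem: fit a step function with a small network.
x = torch.linspace(0.0, 1.0, 32).unsqueeze(1).requires_grad_(True)
y = (x.detach() > 0.5).float()
model = torch.nn.Sequential(
    torch.nn.Linear(1, 8), torch.nn.Tanh(), torch.nn.Linear(8, 1)
)


def cost():
    out = model(x)
    # Derivative of the output w.r.t. the input, kept in the graph so that
    # the penalty itself can be differentiated w.r.t. the model parameters.
    (dout_dx,) = torch.autograd.grad(out.sum(), x, create_graph=True)
    tv = torch.sqrt(dout_dx ** 2 + 1e-6).mean()  # smoothed absolute value
    return torch.nn.functional.mse_loss(out, y) + 1e-3 * tv


if LBFGSNew is not None:
    optimizer = LBFGSNew(model.parameters(), history_size=7, max_iter=20,
                         line_search_fn=True, batch_mode=False,
                         cost_use_gradient=True)
else:
    optimizer = torch.optim.LBFGS(model.parameters(), history_size=7,
                                  max_iter=20, line_search_fn="strong_wolfe")


def closure():
    # The cost needs autograd even for a plain evaluation, so force it on.
    with torch.enable_grad():
        optimizer.zero_grad()
        loss = cost()
        loss.backward()
    return loss


initial_loss = cost().item()
optimizer.step(closure)
final_loss = cost().item()
print(f"cost before: {initial_loss:.3e}, after: {final_loss:.3e}")
```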