Peng Gao1, Teli Ma1, Hongsheng Li2, Jifeng Dai3, Yu Qiao1

1 Shanghai AI Laboratory, 2 MMLab, CUHK, 3 SenseTime Research

This repo is the official implementation of ConvMAE: Masked Convolution Meets Masked Autoencoders. It currently includes code and models for the following tasks:

- ImageNet Pretrain: See PRETRAIN.md.

- ImageNet Finetune: See FINETUNE.md.

- Object Detection: See DETECTION.md.

- Semantic Segmentation: See SEGMENTATION.md.

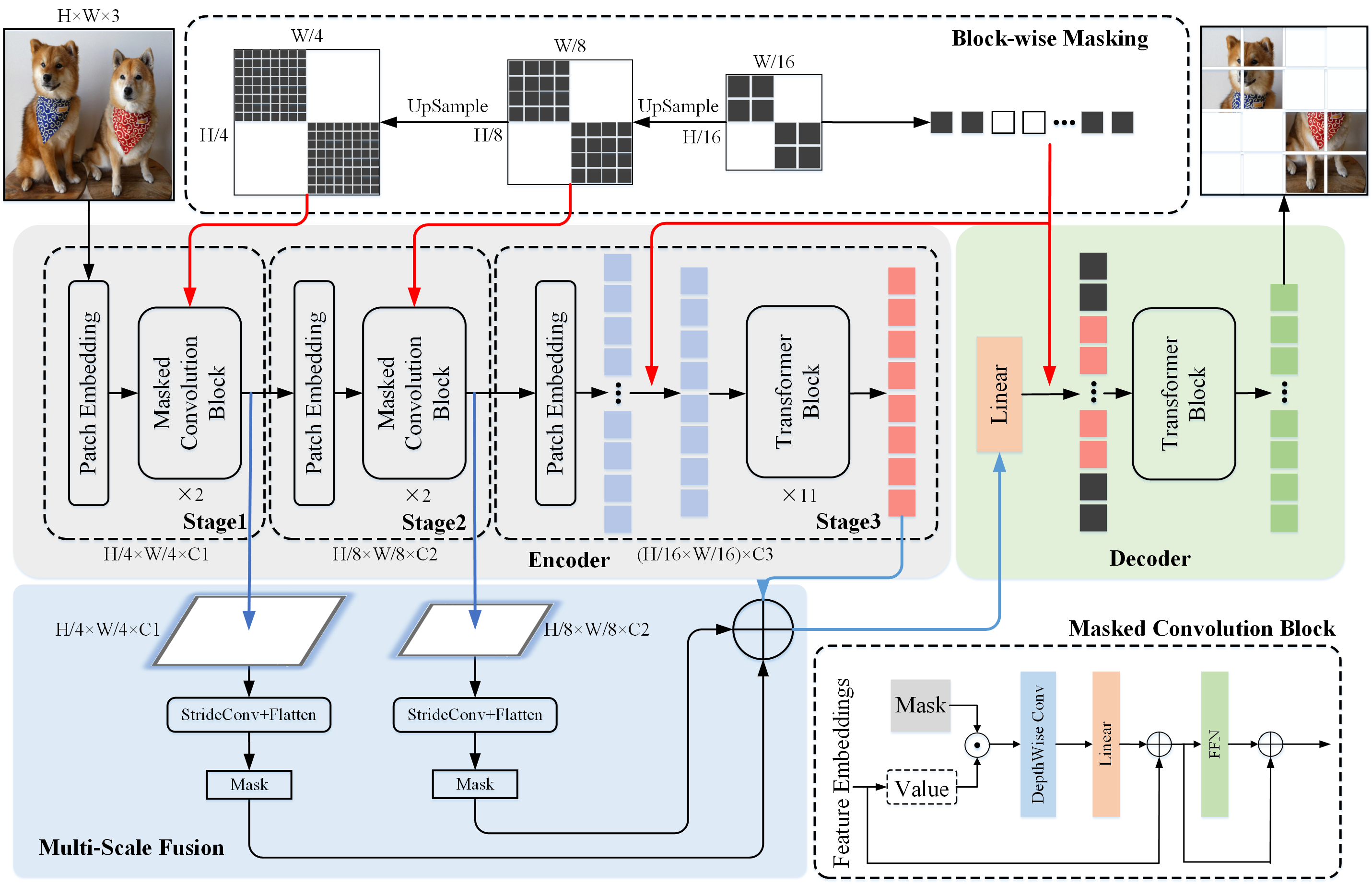

The ConvMAE framework demonstrates that a multi-scale hybrid convolution-transformer can learn more discriminative representations via the masked auto-encoding scheme.

- We present ConvMAE, a strong and efficient self-supervised framework that is easy to implement yet shows outstanding performance on downstream tasks.

- ConvMAE naturally generates hierarchical representations and exhibits promising performance on object detection and segmentation.
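
As a rough illustration of how one random mask can stay consistent across the scales of a hierarchical encoder, the sketch below draws a mask on a coarse token grid and upsamples it by nearest-neighbour repetition to finer grids. The grid sizes (14, 28, 56), the 75% mask ratio, and the function names are assumptions chosen for illustration and are not taken from this repository; see PRETRAIN.md for the actual pretraining code.

```python
import random


def random_token_mask(grid, mask_ratio, seed=None):
    """Return a grid x grid list of 0/1 flags (1 = masked) with a fixed masked count."""
    rng = random.Random(seed)
    num_tokens = grid * grid
    num_masked = int(num_tokens * mask_ratio)
    flags = [1] * num_masked + [0] * (num_tokens - num_masked)
    rng.shuffle(flags)
    return [flags[r * grid:(r + 1) * grid] for r in range(grid)]


def upsample_mask(mask, factor):
    """Nearest-neighbour upsampling: each coarse cell becomes a factor x factor block."""
    out = []
    for row in mask:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


# Hypothetical sizes: a 14x14 token grid with finer 28x28 and 56x56 stages.
coarse = random_token_mask(grid=14, mask_ratio=0.75, seed=0)
masks = {14: coarse, 28: upsample_mask(coarse, 2), 56: upsample_mask(coarse, 4)}

for size, m in masks.items():
    masked = sum(map(sum, m))
    print(size, masked / (size * size))  # masked fraction at each scale
```

Because every fine-grid cell copies the flag of the coarse cell that covers it, the masked fraction is the same at every scale, and a region hidden from the coarse tokens is also hidden from the finer feature maps.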

| Models | #Params (M) | GFLOPs | Pretrain Epochs | Finetune acc@1 (%) | Linear Probe acc@1 (%) | logs | weights |
|---|---|---|---|---|---|---|---|
| ConvMAE-B | 88 | | 1600 | | | | |
| ConvMAE-L | 322 | | 800 | | | | |

| Models | Pretrain Epochs | Finetune Epochs | #Params (M) | GFLOPs | box AP | mask AP | logs | weights |
|---|---|---|---|---|---|---|---|---|
| ConvMAE-B | | | | | | | | |

| Models | Pretrain Epochs | Finetune Iters | #Params (M) | GFLOPs | mIoU | logs | weights |
|---|---|---|---|---|---|---|---|
| ConvMAE-B | | | | | | | |

- Linux

- Python 3.7+

- CUDA 10.2+

- GCC 5+
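
The prerequisites above can be checked with a short script. The following is a minimal sketch and is not part of this repository: the helper names (`parse_version`, `tool_version`, `meets`, `check_environment`) are hypothetical, and it assumes the CUDA toolkit is detected through `nvcc` on `PATH`, so a driver-only install would be reported as missing.

```python
import platform
import re
import shutil
import subprocess
import sys

# Minimum versions copied from the prerequisite list.
MIN_PYTHON = (3, 7)
MIN_CUDA = (10, 2)
MIN_GCC = (5,)


def parse_version(text, pattern=r"(\d+(?:\.\d+)*)"):
    """Return the first version matched by pattern as a tuple of ints, or None."""
    match = re.search(pattern, text)
    return tuple(int(p) for p in match.group(1).split(".")) if match else None


def tool_version(command, pattern=r"(\d+(?:\.\d+)*)"):
    """Run a command-line tool and parse its version; None if it is not installed."""
    if shutil.which(command[0]) is None:
        return None
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    return parse_version(result.stdout, pattern)


def meets(found, minimum):
    """True when a version tuple was found and is at least the minimum."""
    return found is not None and found >= minimum


def check_environment():
    """Map each prerequisite to True/False for the current machine."""
    return {
        "Linux": platform.system() == "Linux",
        "Python 3.7+": meets(tuple(sys.version_info[:2]), MIN_PYTHON),
        # Assumption: nvcc is on PATH and prints "release X.Y" in its version banner.
        "CUDA 10.2+": meets(
            tool_version(["nvcc", "--version"], r"release (\d+\.\d+)"), MIN_CUDA
        ),
        "GCC 5+": meets(tool_version(["gcc", "-dumpversion"]), MIN_GCC),
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing or too old'}")
```

Versions are compared as integer tuples rather than strings, so that `10.2` correctly sorts above `9.2`.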

- See PRETRAIN.md for pretraining.

- See FINETUNE.md for finetuning and linear probing of pretrained models.

- See DETECTION.md for using the pretrained backbone with Mask R-CNN.

- See SEGMENTATION.md for using the pretrained backbone with UperNet.

The pretraining and finetuning code in this project is based on DeiT and MAE. The object detection and semantic segmentation parts are based on MIMDet and MMSegmentation, respectively. Thanks for their wonderful work.

ConvMAE is released under the MIT License.