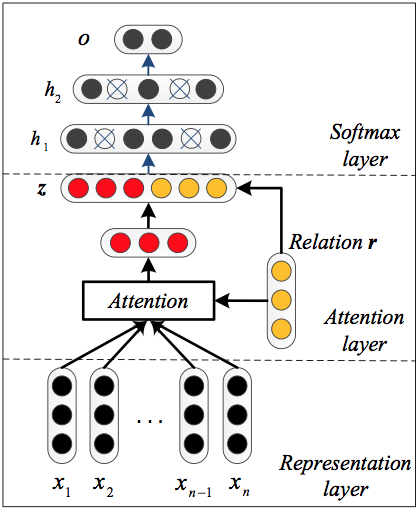

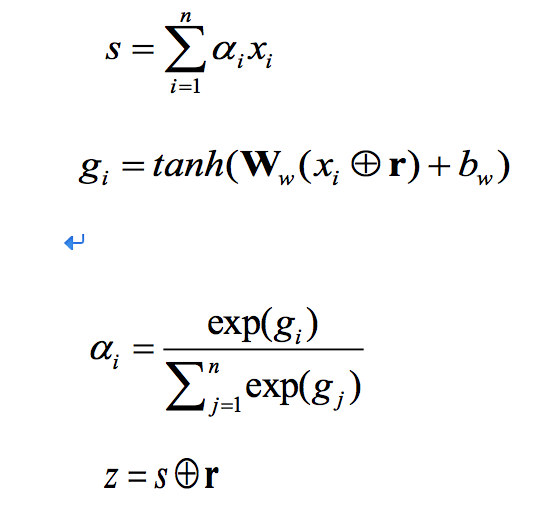

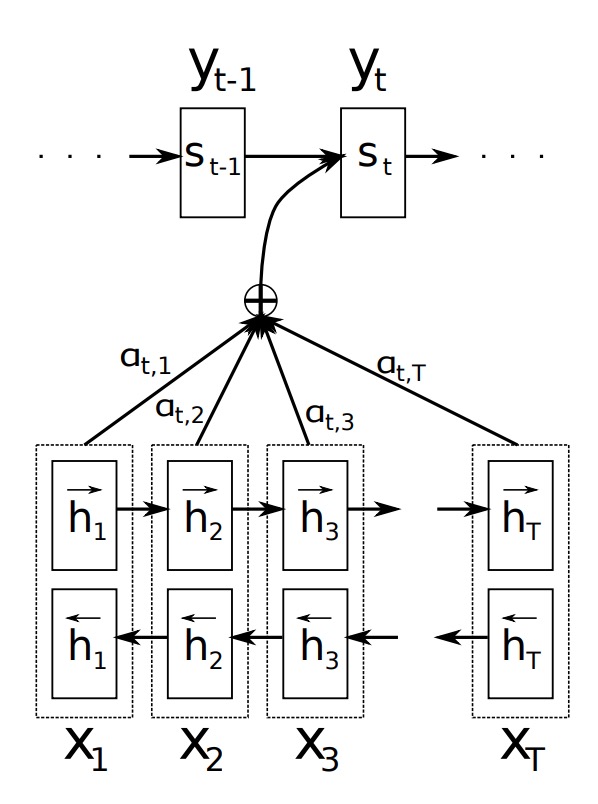

X = Input Sequence of length n.

H = LSTM(X). Note that the LSTM here has return_sequences = True, so H is a sequence of n vectors, one per time step.

s is the state of the LSTM (hidden state h and cell state c).

h is a weighted sum over H:

h = sum(j = 0 to n-1) alpha(j) * H(j)

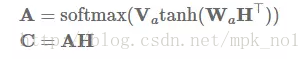

The weight alpha(j) for each hj is computed as follows:

H = [h1,h2,...,hn]

M = tanh(H)

alpha = softmax(transpose(w) * M)

h# = tanh(h)

y = softmax(W * h# + b)

J(theta) = negative_log_likelihood + regularization
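The attention equations in these notes can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation. It makes several assumptions: H is stored as a (d, n) matrix whose columns are the LSTM outputs, w is a learned vector of size d, the regularization term is an L2 penalty, and `lam` is a hypothetical regularization strength. The function names are made up for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def attention_classify(H, w, W, b):
    """H: (d, n) matrix whose columns are the LSTM outputs h1..hn (assumed layout)."""
    M = np.tanh(H)                 # M = tanh(H), shape (d, n)
    alpha = softmax(w @ M)         # alpha = softmax(transpose(w) * M), shape (n,)
    h = H @ alpha                  # weighted sum over the columns of H, shape (d,)
    h_sharp = np.tanh(h)           # h# = tanh(h)
    y = softmax(W @ h_sharp + b)   # y = softmax(W * h# + b), class probabilities
    return alpha, y

def loss(y, target, params, lam=1e-4):
    # Negative log-likelihood of the true class plus an (assumed) L2 penalty.
    nll = -np.log(y[target])
    l2 = sum(np.sum(p ** 2) for p in params)
    return nll + lam * l2

rng = np.random.default_rng(0)
d, n, k = 4, 6, 3                  # hidden size, sequence length, number of classes
H = rng.normal(size=(d, n))
w = rng.normal(size=d)
W = rng.normal(size=(k, d))
b = np.zeros(k)

alpha, y = attention_classify(H, w, W, b)
J = loss(y, target=1, params=[w, W])
```

Both `alpha` and `y` are probability vectors: `alpha` sums to 1 over the n time steps, and `y` sums to 1 over the k classes.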

GitHub project


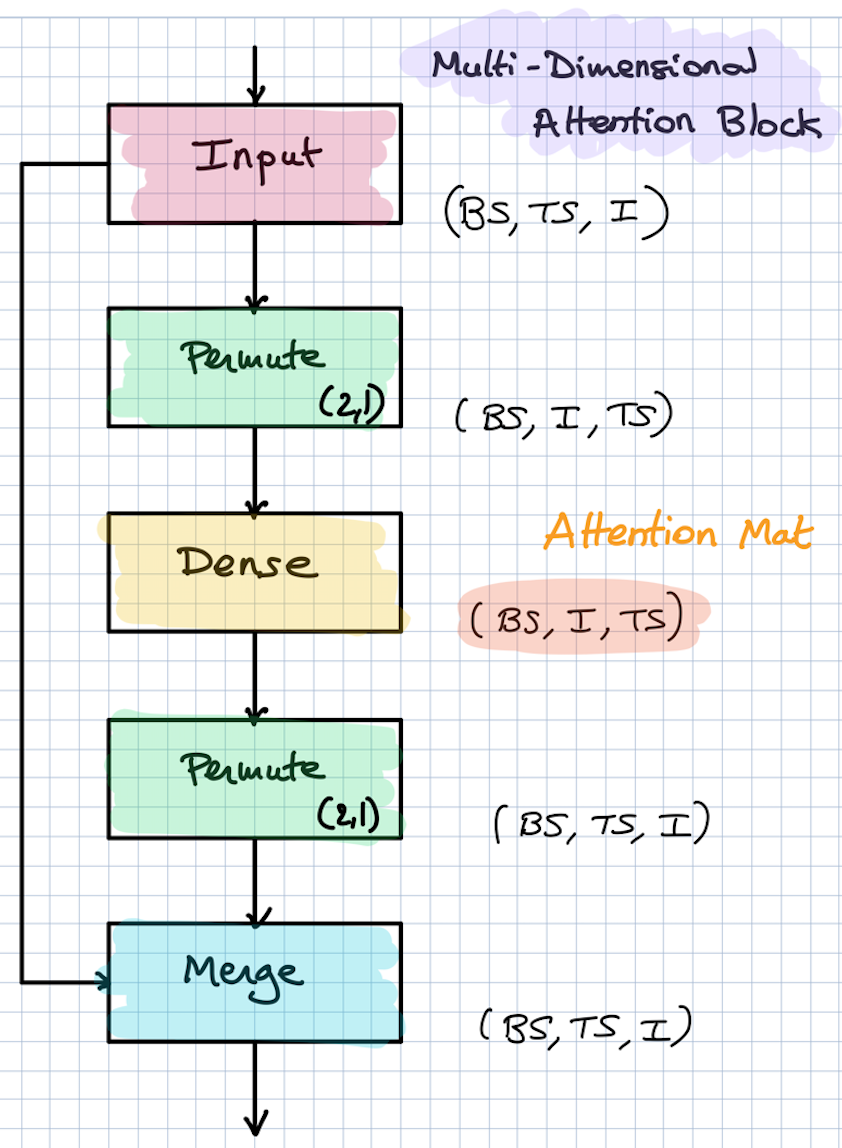

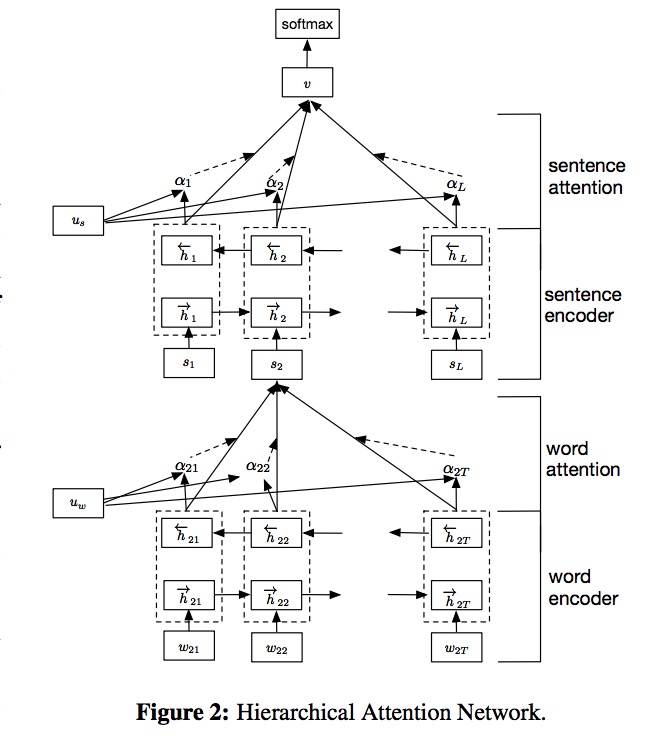

Example: Attention block

Attention is defined per time series (each time series has its own attention weights).
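One possible reading of "per time series" attention, sketched in NumPy: each input dimension (one time series) gets its own softmax distribution over the time steps, and the input is rescaled element-wise by those weights. The names `attention_block`, `kernel`, and `bias` are hypothetical, and the single dense layer over the time axis is an assumption, not necessarily what the figures above show.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def attention_block(x, kernel, bias):
    """x: (T, D) input with T time steps and D series.
    kernel: (T, T) and bias: (T,) form a dense layer over the time axis (assumed)."""
    a = x.T                                   # (D, T): one row per time series
    a = softmax(a @ kernel + bias, axis=-1)   # each row is a distribution over time
    a_probs = a.T                             # back to (T, D)
    return x * a_probs, a_probs               # element-wise reweighting of the input

rng = np.random.default_rng(0)
T, D = 5, 3
x = rng.normal(size=(T, D))
kernel = rng.normal(size=(T, T))
bias = np.zeros(T)

out, a_probs = attention_block(x, kernel, bias)
```

Each column of `a_probs` sums to 1, so every time series carries its own attention weights over the T time steps. Sharing one attention vector across all series would instead average `a_probs` over the series axis.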

GitHub project:

https://github.com/roebius/deeplearning_keras2/blob/master/nbs2/attention_wrapper.py
