Official PyTorch implementation and pretrained models for SimPool. [arXiv]

Convolutional networks and vision transformers have different forms of pairwise interactions, pooling across layers and pooling at the end of the network. Does the latter really need to be different❓

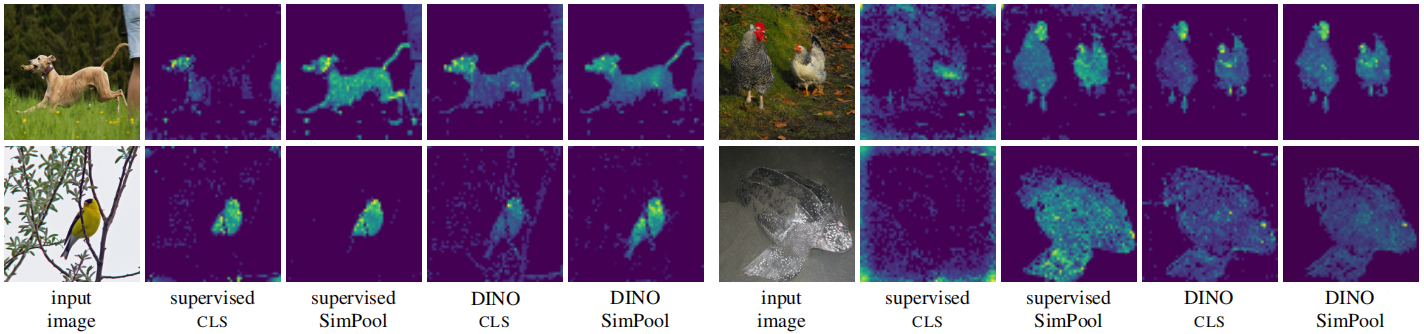

As a by-product of pooling, vision transformers provide spatial attention for free, but this is most often of low quality unless self-supervised, which is not well studied. Is supervision really the problem❓

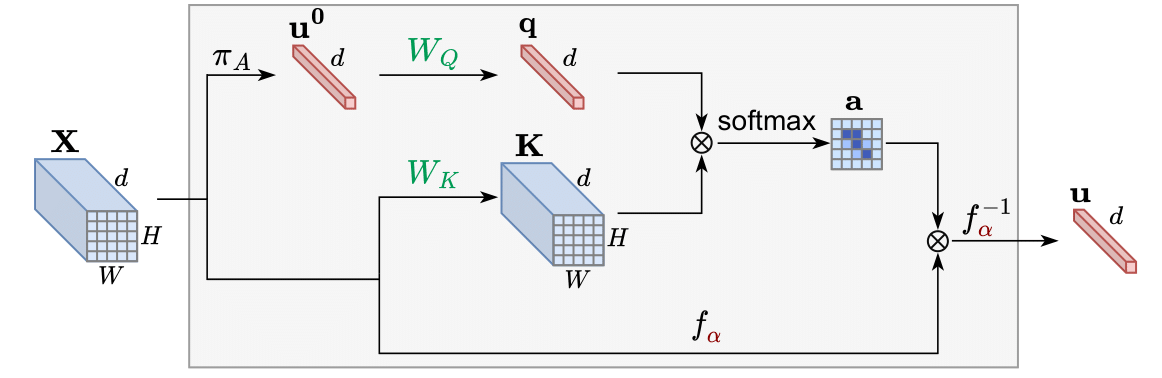

In this work, we develop a generic pooling framework and then we formulate a number of existing methods as instantiations. By discussing the properties of each group of methods, we derive SimPool, a simple attention-based pooling mechanism as a replacement of the default one for both convolutional and transformer encoders. We find that, whether supervised or self-supervised, this improves performance on pre-training and downstream tasks and provides attention maps delineating object boundaries in all cases. One could thus call SimPool universal. To our knowledge, we are the first to obtain attention maps in supervised transformers of at least as good quality as self-supervised, without explicit losses or modifying the architecture.

We introduce SimPool, a simple attention-based pooling method at the end of the network, obtaining clean attention maps under supervision or self-supervision. The attention maps shown are from ViT-S trained on ImageNet-1k. For the baseline, we use the mean attention map of the [CLS] token. For SimPool, we use the attention map a. Note that when using SimPool with Vision Transformers, the [CLS] token is completely discarded.

📢 NOTE: Considering integrating SimPool into your workflow?

Use SimPool when you need attention maps of the highest quality, delineating object boundaries.

SimPool is by definition plug and play.

To integrate SimPool into any architecture (convolutional network or transformer) or any setting (supervised, self-supervised, etc.), follow the steps below:

from sp import SimPool

# this part goes into your model's __init__()

self.simpool = SimPool(dim, gamma=2.0) # dim is depth (channels)

❗ NOTE: Remember to adapt the value of gamma according to the architecture. If you don't want to use gamma, leave the default gamma=None.

Assuming the input tensor x has dimensions:

- (B, d, H, W) for convolutional networks

- (B, N, d) for transformers

where B = batch size, d = depth (channels), H = height of the feature map, W = width of the feature map, and N = number of patch tokens.

# this part goes into your model's forward()

cls = self.simpool(x) # (B, d)

❗ NOTE: Remember to integrate the above code snippets into the appropriate locations in your model definition.
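To make the shape contract above concrete, here is a minimal pure-Python sketch of generic softmax-attention pooling that accepts either layout and returns one d-dimensional vector per sample. It is not the `SimPool` module from this repository: the function names are hypothetical, and the choice of a mean-pooled query with no learned weights, no normalization and no gamma is an assumption made only for illustration.

```python
import math

def flatten_feature_map(fmap):
    """Turn one (d, H, W) feature map into N = H * W tokens of depth d."""
    d, height, width = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [[fmap[c][i][j] for c in range(d)]
            for i in range(height) for j in range(width)]

def attention_pool(tokens):
    """Pool (N, d) tokens into a single d-vector with softmax attention.

    Assumption for this sketch: the query is the mean of the tokens, and
    keys and values are the tokens themselves (nothing is learned).
    """
    n, d = len(tokens), len(tokens[0])
    query = [sum(t[c] for t in tokens) / n for c in range(d)]
    # Scaled dot product between the query and every token.
    scores = [sum(q * x for q, x in zip(query, t)) / math.sqrt(d) for t in tokens]
    # Numerically stable softmax over the N tokens.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    # Attention-weighted average of the tokens.
    pooled = [sum(a * t[c] for a, t in zip(attn, tokens)) for c in range(d)]
    return pooled, attn

def pool_batch(batch, layout):
    """Pool a batch given as (B, d, H, W) ("conv") or (B, N, d) ("tokens")."""
    if layout == "conv":
        batch = [flatten_feature_map(sample) for sample in batch]
    elif layout != "tokens":
        raise ValueError("layout must be 'conv' or 'tokens'")
    return [attention_pool(sample)[0] for sample in batch]  # (B, d)
```

In both cases the result has shape (B, d), which is the shape `self.simpool(x)` is documented to return above. The per-token weights `attn` play the role of an attention map over the N spatial positions.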

We provide experiments on ImageNet for both supervised and self-supervised learning, and use a separate Anaconda environment for each, both based on PyTorch. In both cases, you will first need to download ImageNet.
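Before setting up the environments, it can help to sanity-check the downloaded dataset. The sketch below uses only the standard library and assumes the common `root/<split>/<class_dir>/<images>` folder layout with `train` and `val` splits. This README does not state the expected layout, so treat that layout and the function name `check_imagenet_layout` as assumptions.

```python
from pathlib import Path

def check_imagenet_layout(root, splits=("train", "val")):
    """Return a list of problems found; an empty list means the layout looks fine.

    Assumed layout (not specified by this README):
        root/<split>/<class_dir>/<image files>
    """
    problems = []
    root = Path(root)
    for split in splits:
        split_dir = root / split
        if not split_dir.is_dir():
            problems.append(f"missing directory: {split_dir}")
            continue
        class_dirs = [p for p in split_dir.iterdir() if p.is_dir()]
        if not class_dirs:
            problems.append(f"no class folders in: {split_dir}")
            continue
        # Flag class folders that contain no files at all.
        empty = [p.name for p in class_dirs if not any(p.iterdir())]
        if empty:
            problems.append(
                f"{len(empty)} empty class folder(s) in {split_dir}, e.g. {empty[0]}"
            )
    return problems

# Example usage (the path is a placeholder):
# for problem in check_imagenet_layout("/path/to/imagenet"):
#     print(problem)
```

The function only inspects directory names and never opens image files, so it runs quickly even on the full dataset.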

conda create -n simpoolself python=3.8 -y

conda activate simpoolself

pip3 install torch==1.8.0+cu111 torchvision==0.9.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip3 install timm==0.3.2 tensorboardX six

conda create -n simpoolsuper python=3.9 -y

conda activate simpoolsuper

conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia

pip3 install pyyaml

This repository is built using the Attmask, DINO, ConvNeXt, DETR, timm and Metrix repositories.

NTUA thanks NVIDIA for the support with the donation of GPU hardware. Bill thanks IARAI for the hardware support.

This repository is released under the Apache 2.0 license as found in the LICENSE file.

If you find this repository useful, please consider giving it a star ⭐ and citing it:

@misc{psomas2023simpool,

title={Keep It SimPool: Who Said Supervised Transformers Suffer from Attention Deficit?},

author={Bill Psomas and Ioannis Kakogeorgiou and Konstantinos Karantzalos and Yannis Avrithis},

year={2023},

eprint={2309.06891},

archivePrefix={arXiv},

primaryClass={cs.CV}

}