This repository presents an outline of my approach for the Recursion Cellular Image Classification competition.

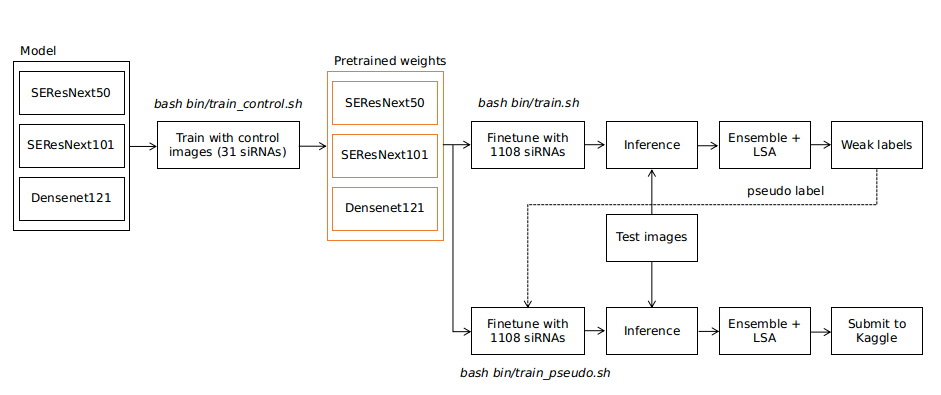

The pipeline of this solution is as follows.

There are 3 main parts:

- I. Pretrain on the control images, which cover 31 siRNAs.

- II. Continue fine-tuning the models on the image dataset, which covers 1108 siRNAs.

- III. Continue fine-tuning the models on the image dataset plus pseudo labels.

The writeup can be found here

If you run into any trouble with the setup/code or have any questions please contact me at ngxbac.dt@gmail.com

DGX Workstation: 4 x V100 (16G)

Please check docker/Dockerfile.

In addition, you can check requirement.txt.

Things you should know about the project:

- We run experiments via bash files, which are located in the `bin` folder.

- The config files (`yml`) are located in the `configs` folder, one for each bash file. Ex: `train_control.sh` goes with `config_control.yml`.

- The yml config file can be changed either via the bash scripts, for flexible settings, or by direct modification, for fixed settings. Ex: `stages/data_params/train_csv` can be `./csv/train_0.csv`, `./csv/train_2.csv`, etc., so when training K-Fold we use a for loop for convenience.
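The K-Fold loop mentioned above can be pictured with a small Python sketch. It only builds the per-fold values that would be passed as overrides; the function names are made up for this example, the 5-fold default is taken from the `fold_0` to `fold_4` folders listed later, and the `stages/data_params/valid_csv` key is an assumption by analogy with `train_csv`.

```python
def fold_csv_paths(n_folds=5, csv_dir="./csv"):
    """Return one (train_csv, valid_csv) path pair per fold."""
    return [
        (f"{csv_dir}/train_{fold}.csv", f"{csv_dir}/valid_{fold}.csv")
        for fold in range(n_folds)
    ]


def fold_overrides(n_folds=5, csv_dir="./csv"):
    """Map config keys to per-fold values (the valid_csv key is assumed)."""
    return [
        {
            "stages/data_params/train_csv": train_csv,
            "stages/data_params/valid_csv": valid_csv,
        }
        for train_csv, valid_csv in fold_csv_paths(n_folds, csv_dir)
    ]


# One training run would be launched per dictionary.
for overrides in fold_overrides():
    print(overrides)
```

In the repository this loop lives in the bash scripts; the sketch only shows which values change from fold to fold.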

The common settings in the yml config file:

- Define the model

      model_params:
        model: cell_senet
        n_channels: 5
        num_classes: 1108
        model_name: "se_resnext50_32x4d"

  - model: Model function (callable) that returns the model for training. It can be found in the `src/models/` package. All the settings below `model_params/model` are treated as parameters of the function.

    Ex: `cell_senet` has the default parameters `model_name='se_resnext50_32x4d', num_classes=1108, n_channels=6, weight=None`. Those parameters can be set or overridden as in the config above.
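To make the `model_params` convention concrete, here is a minimal sketch that assumes the loader looks the function up by name and passes the remaining keys as keyword arguments. `MODEL_REGISTRY`, `build_model`, and the stub body of `cell_senet` are hypothetical; only the parameter names and defaults come from the text above.

```python
def cell_senet(model_name="se_resnext50_32x4d", num_classes=1108,
               n_channels=6, weight=None):
    """Stand-in for the real model function: returns its resolved arguments."""
    return {
        "model_name": model_name,
        "num_classes": num_classes,
        "n_channels": n_channels,
        "weight": weight,
    }


# Hypothetical lookup table from config names to model functions.
MODEL_REGISTRY = {"cell_senet": cell_senet}


def build_model(model_params):
    """Call the function named by 'model' with the other keys as kwargs."""
    params = dict(model_params)  # copy so the caller's config is not modified
    model_fn = MODEL_REGISTRY[params.pop("model")]
    return model_fn(**params)


config = {
    "model": "cell_senet",
    "n_channels": 5,
    "num_classes": 1108,
    "model_name": "se_resnext50_32x4d",
}
model = build_model(config)
print(model)
```

Keys missing from the config fall back to the function defaults, which is why `weight` stays `None` here while `n_channels` changes from 6 to 5.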

- Metric monitoring

  We use MAP@3 for monitoring.

      state_params:
        main_metric: &reduce_metric accuracy03
        minimize_metric: False

- Loss

  `LabelSmoothingCrossEntropy` is used.

      criterion_params:
        criterion: LabelSmoothingCrossEntropy

- Data settings

      batch_size: 64
      num_workers: 8
      drop_last: False
      image_size: &image_size 512
      train_csv: "./csv/train_0.csv"
      valid_csv: "./csv/valid_0.csv"
      dataset: "non_pseudo"
      root: "/data/"
      sites: [1]
      channels: [1,2,3,4,5,6]

  - train_csv: path to train csv.

  - valid_csv: path to valid csv.

  - dataset: can be `control`, `non_pseudo`, or `pseudo`. `control` is used to train with control images (Part I), `non_pseudo` is used to train on the non-pseudo dataset (Part II), and `pseudo` is used to train on the pseudo dataset (Part III).

  - root: path to data root. Default is: `/data`

  - channels: the combination of channels to use, given as a list. Ex: [1,2,3], [4,5,6], etc.

- Optimizer and learning rate

      optimizer_params:
        optimizer: Nadam
        lr: 0.001

- Scheduler

  `OneCycleLR` is used.

      scheduler_params:
        scheduler: OneCycleLR
        num_steps: &num_epochs 40
        lr_range: [0.0005, 0.00001]
        warmup_steps: 5
        momentum_range: [0.85, 0.95]
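The settings above name MAP@3 as the monitored metric. As an illustration of the standard single-label definition, here is a plain-Python sketch; it is not the code behind the `accuracy03` key, and the function name is made up for this example.

```python
def map_at_3(logits_per_sample, targets):
    """Mean average precision at 3 when each sample has one true class.

    A sample scores 1, 1/2 or 1/3 if its true class is ranked first,
    second or third by the logits, and 0 otherwise.
    """
    total = 0.0
    for logits, target in zip(logits_per_sample, targets):
        # Indices of the three highest-scoring classes, best first.
        top3 = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:3]
        if target in top3:
            total += 1.0 / (top3.index(target) + 1)
    return total / len(targets)


example_logits = [
    [0.1, 0.7, 0.2],  # true class 1 is ranked first
    [0.5, 0.3, 0.2],  # true class 1 is ranked second
    [0.1, 0.2, 0.7],  # true class 0 is ranked third
]
score = map_at_3(example_logits, [1, 1, 0])
print(score)
```

Because softmax preserves ordering, the ranking can be done on raw logits without normalizing them first.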

Build the docker image:

    cd docker
    docker build . -t ngxbac/pytorch_cv:kaggle_cell

In Makefile, change:

- DATA_DIR: path to the data from kaggle.

      |-- pixel_stats.csv
      |-- pixel_stats.csv.zip
      |-- recursion_dataset_license.pdf
      |-- sample_submission.csv
      |-- test
      |-- test.csv
      |-- test.zip
      |-- test_controls.csv
      |-- train
      |-- train.csv
      |-- train.csv.zip
      |-- train.zip
      `-- train_controls.csv

- OUT_DIR: path to the folder which contains the logs and checkpoints.

Run the commands:

    make run
    make exec
    cd /kaggle-cell/

For Part I, run:

    bash bin/train_control.sh

In this part, we use all the control images from train and test.
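As a rough picture of what "all the control images from train and test" means in terms of metadata, the sketch below concatenates the rows of two control CSV files and tags each row with its origin. This is an assumption about the bookkeeping, not the repository's loader; the helper names, the `split` column, and the sample rows are invented for illustration.

```python
import csv
import io


def read_rows(handle, split):
    """Read CSV rows as dicts and record which split they came from."""
    rows = []
    for row in csv.DictReader(handle):
        row["split"] = split
        rows.append(row)
    return rows


def merge_controls(train_handle, test_handle):
    """Concatenate the control rows of the train and test splits."""
    return read_rows(train_handle, "train") + read_rows(test_handle, "test")


# Made-up stand-ins for train_controls.csv and test_controls.csv.
train_controls = io.StringIO("id_code,label\ntrain_ctrl_1,a\n")
test_controls = io.StringIO("id_code,label\ntest_ctrl_1,b\n")

controls = merge_controls(train_controls, test_controls)
print(len(controls))
```

With real files, the two `io.StringIO` objects would be replaced by `open(...)` handles on the CSVs in the data folder.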

- Input:

  - model_name: name of model. In our solution, we train:

    - se_resnext50_32x4d, se_resnext101_32x4d for `cell_senet`.

    - densenet121 for `cell_densenet`.

- Output: The default output folder is `/logs/pretrained_controls/`, which stores the models trained on control images. Here is an example where we train `se_resnext50_32x4d` with 6 combinations of channels.

      /logs/pretrained_controls/
      |-- [1,2,3,4,5]
      |   `-- se_resnext50_32x4d
      |-- [1,2,3,4,6]
      |   `-- se_resnext50_32x4d
      |-- [1,2,3,5,6]
      |   `-- se_resnext50_32x4d
      |-- [1,2,4,5,6]
      |   `-- se_resnext50_32x4d
      |-- [1,3,4,5,6]
      |   `-- se_resnext50_32x4d
      `-- [2,3,4,5,6]
          `-- se_resnext50_32x4d

For Part II, run:

    bash bin/train.sh

- Input:

  - PRETRAINED_CONTROL: The folder that stores the models trained with control images. Default: `/logs/pretrained_controls/`

  - model_name: name of model.

  - TRAIN_CSV/VALID_CSV: train and valid csv files for each fold. They are changed automatically for each fold.

- Output: The default output folder is `/logs/non_pseudo/`. Here is an example where we train K-Fold `se_resnext50_32x4d` with 6 combinations of channels.

      /logs/non_pseudo/
      |-- [1,2,3,4,5]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,2,3,4,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,2,3,5,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,2,4,5,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,3,4,5,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      `-- [2,3,4,5,6]
          |-- fold_0
          |   `-- se_resnext50_32x4d
          |-- fold_1
          |   `-- se_resnext50_32x4d
          |-- fold_2
          |   `-- se_resnext50_32x4d
          |-- fold_3
          |   `-- se_resnext50_32x4d
          `-- fold_4
              `-- se_resnext50_32x4d
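The folder layout above is regular: every 5-channel subset of the 6 channels, times 5 folds, times the model name. A short sketch can enumerate the expected folders, for example to check that no run is missing. The function names are hypothetical, and the assumption that the folders are exactly the 5-of-6 channel subsets is read off the listing rather than taken from the training scripts.

```python
import posixpath
from itertools import combinations


def combo_dir_name(channels):
    """Format channels as the folder name used in the tree, e.g. [1,2,3,4,5]."""
    return "[" + ",".join(str(c) for c in channels) + "]"


def expected_run_dirs(log_root, model_name, n_folds=5, n_channels=6, combo_size=5):
    """List every <combo>/<fold>/<model> folder a full K-Fold run should produce."""
    run_dirs = []
    for combo in combinations(range(1, n_channels + 1), combo_size):
        for fold in range(n_folds):
            run_dirs.append(
                posixpath.join(log_root, combo_dir_name(combo), f"fold_{fold}", model_name)
            )
    return run_dirs


run_dirs = expected_run_dirs("/logs/non_pseudo/", "se_resnext50_32x4d")
print(len(run_dirs))
print(run_dirs[0])
```

Filtering the result with `os.path.isdir` would give the list of runs that have not been produced yet.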

The difference between Part III and Part II is only the train/valid csv input files.

    bash bin/train_pseudo.sh

- Input:

  - PRETRAINED_CONTROL: The folder that stores the models trained with control images. Default: `/logs/pretrained_controls/`

  - model_name: name of model.

  - TRAIN_CSV/VALID_CSV: train and valid csv files for each fold. They are changed automatically for each fold.

- Output: The default output folder is `/logs/pseudo/`. Here is an example where we train K-Fold `se_resnext50_32x4d` with 6 combinations of channels.

      /logs/pseudo/
      |-- [1,2,3,4,5]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,2,3,4,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,2,3,5,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,2,4,5,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      |-- [1,3,4,5,6]
      |   |-- fold_0
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_1
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_2
      |   |   `-- se_resnext50_32x4d
      |   |-- fold_3
      |   |   `-- se_resnext50_32x4d
      |   `-- fold_4
      |       `-- se_resnext50_32x4d
      `-- [2,3,4,5,6]
          |-- fold_0
          |   `-- se_resnext50_32x4d
          |-- fold_1
          |   `-- se_resnext50_32x4d
          |-- fold_2
          |   `-- se_resnext50_32x4d
          |-- fold_3
          |   `-- se_resnext50_32x4d
          `-- fold_4
              `-- se_resnext50_32x4d
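This document does not say how the pseudo labels are produced. Purely as an illustration of the general idea, the sketch below gives each unlabeled test image its predicted class and appends those rows to the labeled training rows. The function names, the `(id_code, label)` row format, and the choice to keep every prediction are assumptions, not the author's procedure.

```python
def argmax(values):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def add_pseudo_labels(train_rows, test_ids, test_logits):
    """Return the training rows followed by (id_code, predicted_class) rows."""
    pseudo_rows = [
        (id_code, argmax(logits))
        for id_code, logits in zip(test_ids, test_logits)
    ]
    return list(train_rows) + pseudo_rows


# Made-up example: one labeled image and two test images with 3-class logits.
labeled = [("train_img_1", 2)]
combined = add_pseudo_labels(
    labeled,
    ["test_img_1", "test_img_2"],
    [[0.1, 2.5, 0.3], [1.7, 0.2, 0.4]],
)
print(combined)
```

A stricter variant would keep only predictions above some confidence threshold; whether the original solution does so is not stated here.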

To predict with the trained models, run:

    export LC_ALL=C.UTF-8
    export LANG=C.UTF-8
    CUDA_VISIBLE_DEVICES=2,3 python src/inference.py predict-all --data_root=/data/ --model_root=/logs/pseudo/ --model_name=se_resnext50_32x4d --out_dir /predictions/pseudo/

Where:

- data_root: path to the data from kaggle.

- model_root: path to the log folders (Ex: `/logs/pseudo/`, `/logs/non_pseudo/`).

- model_name: can be `se_resnext50_32x4d`, `se_resnext101_32x4d` or `densenet121`.

- out_dir: folder that stores the logit files.

The out_dir will have the structure as follows:

    /predictions/pseudo/
    |-- [1,2,3,4,5]
    |   |-- fold_0
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_1
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_2
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_3
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   `-- fold_4
    |       `-- se_resnext50_32x4d
    |           `-- pred_test.npy
    |-- [1,2,3,4,6]
    |   |-- fold_0
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_1
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_2
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_3
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   `-- fold_4
    |       `-- se_resnext50_32x4d
    |           `-- pred_test.npy
    |-- [1,2,3,5,6]
    |   |-- fold_0
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_1
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_2
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_3
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   `-- fold_4
    |       `-- se_resnext50_32x4d
    |           `-- pred_test.npy
    |-- [1,2,4,5,6]
    |   |-- fold_0
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_1
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_2
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_3
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   `-- fold_4
    |       `-- se_resnext50_32x4d
    |           `-- pred_test.npy
    |-- [1,3,4,5,6]
    |   |-- fold_0
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_1
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_2
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   |-- fold_3
    |   |   `-- se_resnext50_32x4d
    |   |       `-- pred_test.npy
    |   `-- fold_4
    |       `-- se_resnext50_32x4d
    |           `-- pred_test.npy
    `-- [2,3,4,5,6]
        |-- fold_0
        |   `-- se_resnext50_32x4d
        |       `-- pred_test.npy
        |-- fold_1
        |   `-- se_resnext50_32x4d
        |       `-- pred_test.npy
        |-- fold_2
        |   `-- se_resnext50_32x4d
        |       `-- pred_test.npy
        |-- fold_3
        |   `-- se_resnext50_32x4d
        |       `-- pred_test.npy
        `-- fold_4
            `-- se_resnext50_32x4d
                `-- pred_test.npy
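When collecting these files from Python, note that folder names such as `[1,2,3,4,5]` contain square brackets, which `glob` patterns interpret as character classes unless they are escaped with `glob.escape`. A minimal sketch that sidesteps the issue with `os.walk` follows; `find_prediction_files` is a hypothetical helper, and the demo builds a small temporary tree instead of touching `/predictions/`.

```python
import os
import tempfile


def find_prediction_files(predict_root, model_name, filename="pred_test.npy"):
    """Return sorted paths of <predict_root>/**/<model_name>/<filename>."""
    matches = []
    for dirpath, _dirnames, filenames in os.walk(predict_root):
        if os.path.basename(dirpath) == model_name and filename in filenames:
            matches.append(os.path.join(dirpath, filename))
    return sorted(matches)


# Demo on a throwaway tree with two channel combinations and one fold each.
with tempfile.TemporaryDirectory() as root:
    for combo in ("[1,2,3,4,5]", "[1,2,3,4,6]"):
        run_dir = os.path.join(root, combo, "fold_0", "se_resnext50_32x4d")
        os.makedirs(run_dir)
        open(os.path.join(run_dir, "pred_test.npy"), "wb").close()
    found = find_prediction_files(root, "se_resnext50_32x4d")

print(len(found))
```

Each returned path could then be loaded with `np.load`, as the ensemble step does for logit files.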

Please note that the logits are the outputs of the last FC layer, before softmax is applied.
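For readers who want probabilities rather than raw logits, a minimal softmax sketch in plain Python follows. It illustrates the standard formula only; the function name is chosen for this example and the numbers are made up.

```python
import math


def softmax(logits):
    """Turn one row of logits into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]
probs = softmax(logits)
print(probs)
```

Softmax keeps the ordering of the classes, so the top prediction is the same before and after it is applied.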

In src/ensemble.py, `model_names` is the list of models used for the ensemble.

Ex: `model_names=['se_resnext50_32x4d', 'se_resnext101_32x4d', 'densenet121']`

    export LC_ALL=C.UTF-8
    export LANG=C.UTF-8
    python src/ensemble.py ensemble --data_root /data/ --predict_root /predictions/pseudo/ --group_json group.json

In our solution, we also ensemble with another team member's predictions. The following change makes that work.

In src/ensemble.py,

    ensemble_preds = (ensemble_preds + other_logits) / 121

where `other_logits = np.load(<logit_path>)`.
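The general idea of combining logits from several sources can be sketched as an element-wise mean. The version below uses plain Python lists so it runs without NumPy, and it divides by the number of logit tables it is given; it does not reproduce the specific divisor in the line above, which belongs to the author's setup. `mean_logits` is a hypothetical name.

```python
def mean_logits(logit_sets):
    """Element-wise mean of equally shaped [sample][class] logit tables."""
    n_sets = len(logit_sets)
    n_samples = len(logit_sets[0])
    n_classes = len(logit_sets[0][0])
    return [
        [
            sum(table[i][j] for table in logit_sets) / n_sets
            for j in range(n_classes)
        ]
        for i in range(n_samples)
    ]


# Made-up logits: two sources, one sample, two classes.
own_logits = [[1.0, 3.0]]
other_logits = [[3.0, 5.0]]
averaged = mean_logits([own_logits, other_logits])
print(averaged)
```

With NumPy arrays the same computation is a sum of the arrays divided by their count.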

    export LC_ALL=C.UTF-8
    export LANG=C.UTF-8
    python src/ensemble.py ensemble --data_root /data/ --predict_root /predictions/pseudo/ --group_json group.json

Where:

- data_root: path to the data from kaggle.

- predict_root: folder that stores the logit files.

- group_json: JSON file that stores the plate groups of the test set.

Output:

The submission.csv will be located at ${predict_root}/submission.csv.
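For orientation, writing a submission file from final class predictions might look like the sketch below. The `id_code` and `sirna` column names are an assumption and should be checked against `sample_submission.csv` in the data folder; `write_submission` is a hypothetical helper and the ids are made up.

```python
import csv
import io


def write_submission(handle, id_codes, predictions):
    """Write one 'id_code,sirna' row per test image (column names assumed)."""
    writer = csv.writer(handle, lineterminator="\n")
    writer.writerow(["id_code", "sirna"])
    for id_code, sirna in zip(id_codes, predictions):
        writer.writerow([id_code, sirna])


# Write to an in-memory buffer; a real run would open submission.csv instead.
buffer = io.StringIO()
write_submission(buffer, ["id_a", "id_b"], [12, 7])
print(buffer.getvalue())
```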