This repository contains the dataset from the paper "WikiAsp: A Dataset for Multi-domain Aspect-based Summarization".

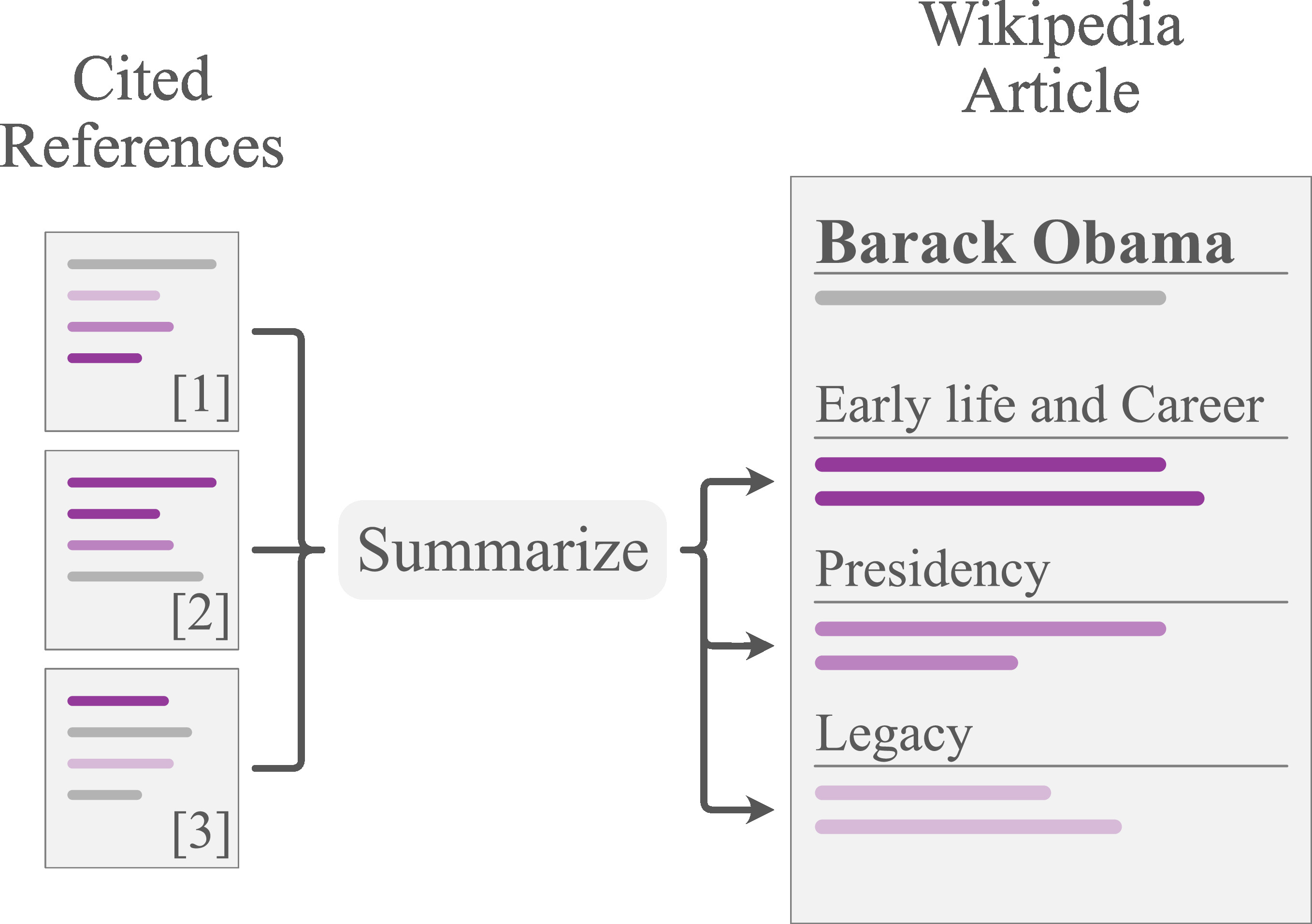

WikiAsp is a multi-domain, aspect-based summarization dataset in the encyclopedic domain. In this task, models are asked to summarize the cited reference documents of a Wikipedia article into aspect-based summaries. Each of the 20 domains includes 10 domain-specific, pre-defined aspects.

WikiAsp is available as 20 zipped archives, one per domain. Downloading and storing all domains (unzipped) requires more than 28GB of storage. The following command downloads all of them and extracts the archives:

```bash
./scripts/download_and_extract_all.sh /path/to/save_directory
```

Alternatively, one can download each domain's archive individually from the table below. (Note: left-clicking a link will not open a download dialog. Open the link in a new tab, save it from your OS's context menu, or use `wget`.)

| Domain | Link | Size (unzipped) |
|---|---|---|
| Album | Download | 2.3GB |
| Animal | Download | 589MB |
| Artist | Download | 2.2GB |
| Building | Download | 1.3GB |
| Company | Download | 1.9GB |
| EducationalInstitution | Download | 1.9GB |
| Event | Download | 900MB |
| Film | Download | 2.8GB |
| Group | Download | 1.2GB |
| HistoricPlace | Download | 303MB |
| Infrastructure | Download | 1.3GB |
| MeanOfTransportation | Download | 792MB |
| OfficeHolder | Download | 2.0GB |
| Plant | Download | 286MB |
| Single | Download | 1.5GB |
| SoccerPlayer | Download | 721MB |
| Software | Download | 1.3GB |
| TelevisionShow | Download | 1.1GB |
| Town | Download | 932MB |
| WrittenWork | Download | 1.8GB |
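For archives downloaded individually, the sizes in the table can be used to check free disk space before extracting. The following is a minimal Python sketch under stated assumptions: each archive is saved as `<Domain>.zip` (e.g. `Plant.zip`) in a single directory, and 1GB is treated as 1000MB. `DOMAIN_SIZES_GB`, `required_gb`, and `extract_domains` are hypothetical helpers, not part of this repository. Summed over all 20 domains, the listed unzipped sizes come to about 27.1GB.

```python
import shutil
import zipfile
from pathlib import Path
from typing import Iterable, List

# Unzipped sizes copied from the table above, in GB (1 GB treated as 1000 MB).
DOMAIN_SIZES_GB = {
    "Album": 2.3, "Animal": 0.589, "Artist": 2.2, "Building": 1.3,
    "Company": 1.9, "EducationalInstitution": 1.9, "Event": 0.9, "Film": 2.8,
    "Group": 1.2, "HistoricPlace": 0.303, "Infrastructure": 1.3,
    "MeanOfTransportation": 0.792, "OfficeHolder": 2.0, "Plant": 0.286,
    "Single": 1.5, "SoccerPlayer": 0.721, "Software": 1.3,
    "TelevisionShow": 1.1, "Town": 0.932, "WrittenWork": 1.8,
}


def required_gb(domains: Iterable[str]) -> float:
    """Total unzipped size, in GB, of the selected domains."""
    return sum(DOMAIN_SIZES_GB[d] for d in domains)


def extract_domains(archive_dir: str, out_dir: str, domains: List[str]) -> List[Path]:
    """Extract <archive_dir>/<Domain>.zip for each requested domain into out_dir.

    Raises OSError if free space at out_dir is below the unzipped total from
    the table. The "<Domain>.zip" file naming is an assumption.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    free_gb = shutil.disk_usage(out).free / 1e9
    needed_gb = required_gb(domains)
    if free_gb < needed_gb:
        raise OSError(f"need {needed_gb:.1f} GB, only {free_gb:.1f} GB free")
    extracted = []
    for domain in domains:
        archive = Path(archive_dir) / f"{domain}.zip"
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(out)  # unpack the whole archive into out_dir
        extracted.append(archive)
    return extracted
```

For example, `extract_domains("/path/to/archives", "/path/to/save_directory", ["Plant", "HistoricPlace"])` needs about 0.59GB free. The check only covers the unzipped data, not the space taken by the archives themselves.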

Each domain includes three files, `train.jsonl`, `valid.jsonl`, and `test.jsonl`, and each line represents one instance in JSON format.

Each instance has the following structure:

```json
{
  "exid": "train-1-1",
  "input": [
    "tokenized and uncased sentence_1 from document_1",
    "tokenized and uncased sentence_2 from document_1",
    "...",
    "tokenized and uncased sentence_i from document_j",
    "..."
  ],
  "targets": [
    ["a_1", "tokenized and uncased aspect-based summary for a_1"],
    ["a_2", "tokenized and uncased aspect-based summary for a_2"],
    "..."
  ]
}
```

where

- `exid`: `str`
- `input`: `List[str]`
- `targets`: `List[Tuple[str, str]]`

Here, `input` holds the cited references as a list of sentences tokenized with NLTK.

`targets` is a list of aspect-based summaries, where each element is a pair of (a) the target aspect and (b) the aspect-based summary.
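Given this schema, one split of one domain can be read line by line with the Python standard library. The following is a minimal sketch: `Instance` and `load_split` are hypothetical names, and the layout `<domain_dir>/<split>.jsonl` is assumed from the file names above.

```python
import json
from dataclasses import dataclass
from pathlib import Path
from typing import Iterator, List, Tuple


@dataclass
class Instance:
    exid: str
    input: List[str]                # NLTK-tokenized, uncased sentences
    targets: List[Tuple[str, str]]  # (aspect, aspect-based summary) pairs


def load_split(domain_dir: str, split: str) -> Iterator[Instance]:
    """Yield instances from <domain_dir>/<split>.jsonl, one JSON object per line."""
    path = Path(domain_dir) / f"{split}.jsonl"
    with path.open(encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines, if any
            obj = json.loads(line)
            yield Instance(
                exid=obj["exid"],
                input=obj["input"],
                # JSON has no tuples, so each pair arrives as a 2-element list
                targets=[(aspect, summary) for aspect, summary in obj["targets"]],
            )
```

Assuming an extracted domain directory contains the three files, `for inst in load_split("/path/to/save_directory/Album", "valid"): ...` iterates over the validation set without loading the whole file into memory.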

Inherited from the base corpus, this dataset exhibits the following characteristics:

- Cited references are composed of multiple documents, but the document boundaries are lost, so the references are expressed simply as a list of sentences.
- Sentences in the cited references (`input`) are tokenized using NLTK.
- The number of target summaries varies from instance to instance.
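One possible way to handle these characteristics when preparing training data is to concatenate all input sentences into a single source text (since document boundaries are unavailable anyway) and to emit one example per aspect (since the number of targets varies). This is a hypothetical preprocessing choice sketched for illustration, not the method used in the paper; `to_aspect_examples` is an assumed helper operating on records parsed with `json.loads`.

```python
from typing import Dict, Iterable, List, Tuple


def to_aspect_examples(records: Iterable[Dict]) -> List[Tuple[str, str, str]]:
    """Flatten WikiAsp records into (source_text, aspect, summary) triples.

    The whole `input` list is joined into one space-separated source text,
    and each record yields one triple per (aspect, summary) pair, so a record
    with no targets contributes nothing.
    """
    examples = []
    for rec in records:
        source = " ".join(rec["input"])
        for aspect, summary in rec["targets"]:
            examples.append((source, aspect, summary))
    return examples
```

Because every triple for a record repeats the same source text, a model conditioned on the aspect name can be trained on all aspects with a single input format.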

If you use the dataset, please consider citing:

```bibtex
@article{hayashi20tacl,
  title = {WikiAsp: A Dataset for Multi-domain Aspect-based Summarization},
  author = {Hiroaki Hayashi and Prashant Budania and Peng Wang and Chris Ackerson and Raj Neervannan and Graham Neubig},
  journal = {Transactions of the Association for Computational Linguistics (TACL)},
  month = {},
  url = {https://arxiv.org/abs/2011.07832},
  year = {2020}
}
```

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.