This repository contains our PyTorch implementation of Sadam, from the paper "Calibrating the Learning Rate for Adaptive Gradient Methods to Improve Generalization Performance".

--hist True: record information about the adaptive learning rate (A-LR)

- PyTorch

- TensorBoard

CUDA_VISIBLE_DEVICES=0 python main_CIFAR.py --b 128 --NNtype ResNet20 --optimizer sgd --reduceLRtype manual0 --weight_decay 5e-4 --lr 0.1

CUDA_VISIBLE_DEVICES=1 python main_CIFAR.py --b 128 --NNtype ResNet20 --optimizer Sadam --reduceLRtype manual0 --weight_decay 5e-4 --transformer Padam --partial 0.25 --grad_transf square --lr 0.001

CUDA_VISIBLE_DEVICES=1 python main_CIFAR.py --b 128 --NNtype ResNet20 --optimizer Sadam --reduceLRtype manual0 --weight_decay 5e-4 --transformer Padam --partial 0.125 --grad_transf square --lr 0.1
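
The two Padam runs above differ in `--partial` and `--lr`. As a rough illustration only, and assuming `--partial` plays the role of the exponent p in a Padam-style update (step = lr * m / (v^p + eps)), the sketch below shows how p changes the per-coordinate step. The function name `partial_step` and the `eps` default are hypothetical and are not taken from this repository.

```python
def partial_step(m, v, lr, partial, eps=1e-8):
    """Per-coordinate step lr * m / (v**partial + eps).

    m: first-moment estimate, v: second-moment estimate (v >= 0).
    partial: exponent p; p = 0.5 gives the usual Adam-style sqrt(v).
    All names here are illustrative assumptions, not the repo's API.
    """
    return lr * m / (v ** partial + eps)


# When v is small (< 1), a smaller exponent gives a larger denominator,
# so the step is damped compared with the p = 0.5 case.
small_v_adam_like = partial_step(m=1e-4, v=1e-8, lr=1.0, partial=0.5)
small_v_partial = partial_step(m=1e-4, v=1e-8, lr=1.0, partial=0.125)
print(small_v_adam_like, small_v_partial)
```

Under this assumption, a smaller exponent shrinks the spread between coordinates with very small and very large second moments, which may be why the `--partial 0.125` run uses a larger base learning rate than the `--partial 0.25` run.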

CUDA_VISIBLE_DEVICES=0 python main_CIFAR.py --b 128 --NNtype ResNet20 --optimizer adabound --reduceLRtype manual0 --weight_decay 5e-4 --lr 0.01

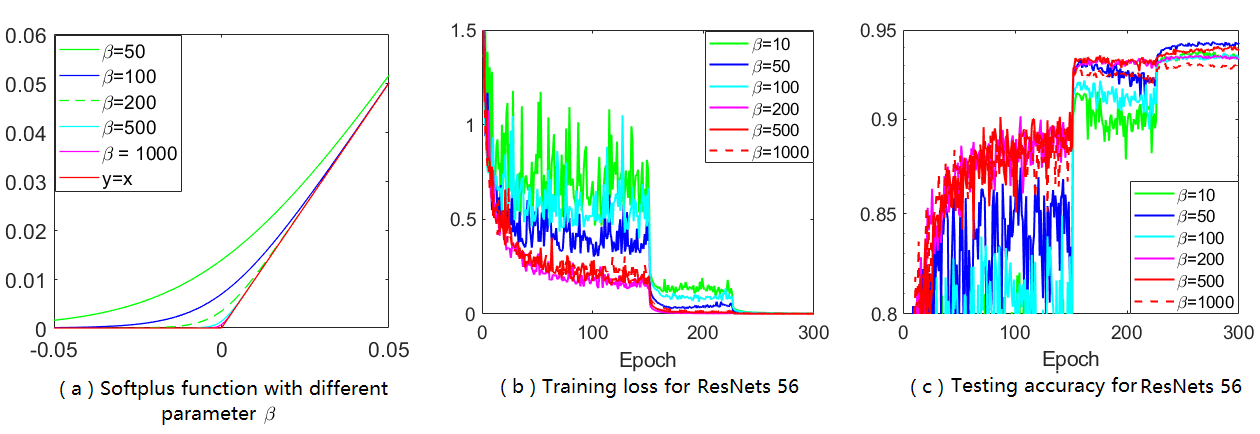

CUDA_VISIBLE_DEVICES=1 python main_CIFAR.py --b 128 --NNtype ResNet20 --optimizer Sadam --reduceLRtype manual0 --weight_decay 5e-4 --transformer softplus --smooth 50 --lr 0.01 --partial 0.5 --grad_transf square
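
To give a feel for the softplus calibration used in the last command, here is a minimal sketch in plain Python. It assumes that `--smooth` corresponds to the softplus parameter beta and that `--partial 0.5` means the transform is applied to sqrt(v); both are readings of the flags, not confirmed by this README. The names `softplus` and `calibrated_step` are hypothetical helpers, not functions from this repository.

```python
import math


def softplus(x, smooth):
    """Numerically stable (1 / smooth) * log(1 + exp(smooth * x))."""
    z = smooth * x
    if z > 0:
        # log(1 + exp(z)) = z + log(1 + exp(-z)); avoids overflow for large z.
        return (z + math.log1p(math.exp(-z))) / smooth
    return math.log1p(math.exp(z)) / smooth


def calibrated_step(m, v, lr, smooth=50.0, partial=0.5):
    """Per-coordinate step lr * m / softplus(v**partial, smooth).

    Assumption: the softplus replaces the usual sqrt(v) + eps denominator.
    """
    return lr * m / softplus(v ** partial, smooth)


# The denominator never drops below log(2) / smooth, so the step stays
# bounded even when the second-moment estimate v is exactly zero.
print(softplus(0.0, 50.0))
# For larger inputs the softplus is close to the identity.
print(softplus(1.0, 50.0))
print(calibrated_step(m=1.0, v=0.0, lr=0.01))
```

Under these assumptions, a larger `--smooth` makes the transform track sqrt(v) more closely, while a smaller value raises the floor on the denominator and so limits the largest per-coordinate steps.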