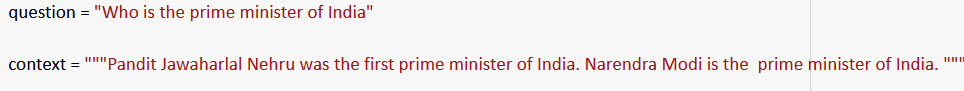

The model uses BERT to find the span of the answer for a given question and context pair.

The question, together with the context, is passed as input to the BERT model. From the model's output we obtain two integer values: the starting index and the ending index of the most likely answer within the given context.
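As a rough illustration of how the question and context are combined into one input, here is a minimal sketch of the standard BERT sentence-pair format, `[CLS] question [SEP] context [SEP]`, with segment ids marking which part each token belongs to. It splits on whitespace only for readability (a real BERT tokenizer produces WordPiece sub-word tokens), and the function name `build_bert_qa_input` is hypothetical. The start and end indices mentioned above are positions in a packed sequence like this one.

```python
# Simplified sketch: real BERT uses WordPiece sub-word tokens produced by a
# tokenizer library; whitespace splitting is used here purely for illustration.
def build_bert_qa_input(question, context):
    """Pack a question and its context as [CLS] question [SEP] context [SEP].

    Returns the token list and the segment (token type) ids:
    0 for [CLS], the question and the first [SEP]; 1 for the context and the final [SEP].
    """
    q_tokens = question.split()
    c_tokens = context.split()
    tokens = ["[CLS]"] + q_tokens + ["[SEP]"] + c_tokens + ["[SEP]"]
    segment_ids = [0] * (len(q_tokens) + 2) + [1] * (len(c_tokens) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_bert_qa_input(
    "Who wrote Hamlet ?", "Hamlet was written by William Shakespeare ."
)
print(tokens)
print(segment_ids)
```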

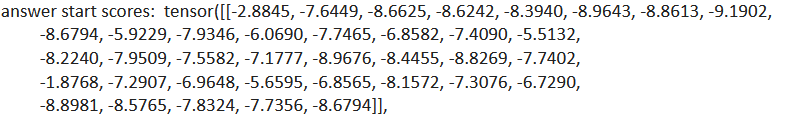

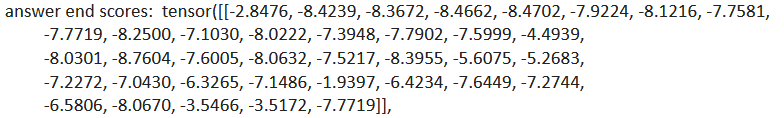

Here, answer_start_scores and answer_end_scores contain, for every token, an (unnormalized) log-likelihood score of that token being the starting word and the ending word of the answer span, respectively.

We take the index of the maximum value in answer_start_scores as the starting index of the answer span and, similarly, the index of the maximum value in answer_end_scores as the ending index.
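The span selection described above can be sketched in plain Python. The scores below are made-up numbers standing in for real model output, and the helper names `argmax` and `select_answer_span` are hypothetical.

```python
def argmax(values):
    """Return the index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def select_answer_span(tokens, answer_start_scores, answer_end_scores):
    """Pick the most likely start and end tokens independently."""
    start = argmax(answer_start_scores)
    end = argmax(answer_end_scores)
    # The end index is inclusive, hence end + 1 in the slice.
    return start, end, " ".join(tokens[start:end + 1])


tokens = ["[CLS]", "who", "wrote", "hamlet", "?", "[SEP]",
          "hamlet", "was", "written", "by", "william", "shakespeare", "[SEP]"]
# Hypothetical scores, one per token.
start_scores = [-2.0, -5.0, -5.0, -4.0, -6.0, -3.0, -1.5, -4.0, -4.5, -3.5, 6.2, 1.0, -3.0]
end_scores = [-2.0, -5.0, -5.0, -4.0, -6.0, -3.0, -2.0, -4.0, -4.5, -3.5, 2.1, 5.8, -3.0]

print(select_answer_span(tokens, start_scores, end_scores))
# → (10, 11, 'william shakespeare')
```

Because the start and end are chosen independently, nothing here prevents the end index from landing before the start index; a common refinement (not part of the approach described here) is to search only over pairs with end >= start.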

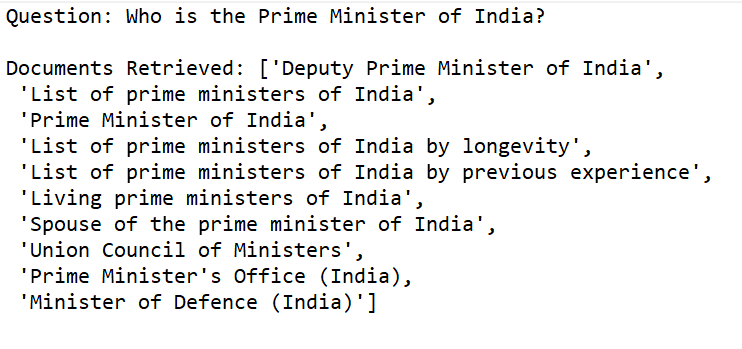

Next, we retrieve Wikipedia documents based on the user's query and use them as the context.

Based on the user's question, the model first fetches the top 10 documents from Wikipedia using the Wiki library in Python. Of these 10 documents, only the first 2 are used as the context for finding the answer.
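A minimal sketch of the "fetch 10, keep 2" step, with the search call injected as a parameter so the example runs offline. `retrieve_context_titles` and `fake_search` are hypothetical names. If the Wiki library is the PyPI `wikipedia` package (an assumption), a real search function could be `lambda q, n: wikipedia.search(q, results=n)`, with each article's text then read from `wikipedia.page(title).content`.

```python
from typing import Callable, List


def retrieve_context_titles(
    query: str,
    search: Callable[[str, int], List[str]],
    n_results: int = 10,
    n_used: int = 2,
) -> List[str]:
    """Fetch the top n_results article titles and keep only the first n_used."""
    titles = search(query, n_results)
    return titles[:n_used]


# Hypothetical offline stand-in for a real Wikipedia search call.
def fake_search(query: str, n: int) -> List[str]:
    return [f"{query} article {i}" for i in range(1, n + 1)]


print(retrieve_context_titles("Hamlet", fake_search))
# → ['Hamlet article 1', 'Hamlet article 2']
```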

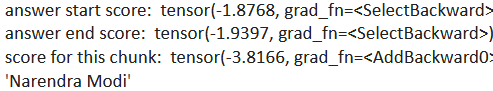

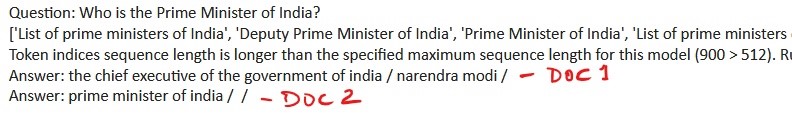

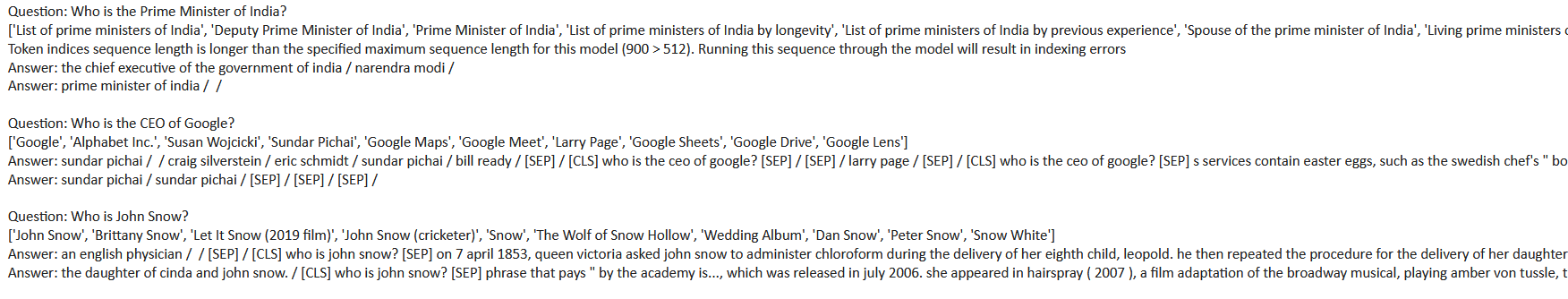

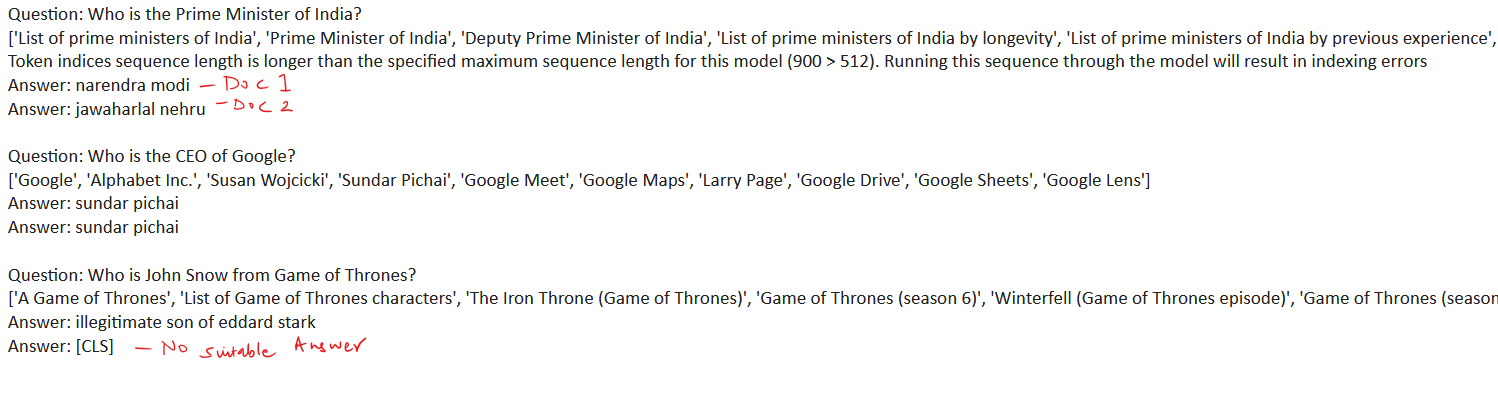

This question is the same as the previous one, except that the user now provides no context. The BERT model cannot accept an input (question + context) longer than 512 tokens, so each retrieved Wikipedia document is broken into chunks small enough that the question plus one chunk fits within 512 tokens, and each chunk is fed to the model in turn as the context, along with the question. Each document therefore yields n answers, where n is the number of chunks it was divided into: roughly ceil(l / (512 - q)), where l is the length of the Wikipedia article and q is the length of the question.

This makes the pipeline a bit messy, because only one answer per document is wanted. For each chunk, max_start_score and max_end_score are calculated, and the chunk with the largest sum of max_start_score and max_end_score is considered to offer the best possible answer for that document.
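The chunk-and-select procedure can be sketched as follows. This is a minimal illustration under several assumptions: chunks do not overlap; 3 slots are reserved for the `[CLS]` and two `[SEP]` tokens (a refinement of the rough 512 - q formula); scores are indexed over the chunk's own tokens, whereas a real model scores the whole packed sequence; and `chunk_context`, `best_chunk_answer` and `toy_scorer` are hypothetical stand-ins, with the toy scorer replacing the BERT model.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A scorer maps a chunk of tokens to (start_scores, end_scores), one score per token.
Scorer = Callable[[List[str]], Tuple[List[float], List[float]]]


def chunk_context(context_tokens: Sequence[str], question_len: int,
                  max_len: int = 512) -> List[List[str]]:
    """Split the context into consecutive chunks so that
    [CLS] question [SEP] chunk [SEP] never exceeds max_len tokens."""
    room = max_len - question_len - 3  # 3 slots for [CLS] and two [SEP]s
    if room <= 0:
        raise ValueError("question leaves no room for context")
    return [list(context_tokens[i:i + room])
            for i in range(0, len(context_tokens), room)]


def best_chunk_answer(chunks: List[List[str]], score_chunk: Scorer):
    """Return (total, chunk_index, start, end) for the chunk whose
    max_start_score + max_end_score is highest."""
    best = None
    for idx, chunk in enumerate(chunks):
        start_scores, end_scores = score_chunk(chunk)
        max_start_score = max(start_scores)
        max_end_score = max(end_scores)
        total = max_start_score + max_end_score
        if best is None or total > best[0]:
            best = (total, idx,
                    start_scores.index(max_start_score),
                    end_scores.index(max_end_score))
    return best


# Hypothetical toy scorer standing in for BERT: it simply favours
# "william" as a start token and "shakespeare" as an end token.
def toy_scorer(chunk):
    start = [3.0 if t == "william" else 0.0 for t in chunk]
    end = [3.0 if t == "shakespeare" else 0.0 for t in chunk]
    return start, end


context = "hamlet is a tragedy written by william shakespeare around 1600".split()
question_len = 3
# A tiny max_len so the 10-token context splits into several chunks.
chunks = chunk_context(context, question_len, max_len=10)
room = 10 - question_len - 3
assert len(chunks) == math.ceil(len(context) / room)  # 3 chunks of <= 4 tokens

best = best_chunk_answer(chunks, toy_scorer)
total, idx, start, end = best
answer = " ".join(chunks[idx][start:end + 1])
print(best, answer)  # → (6.0, 1, 2, 3) william shakespeare
```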

The final model returns only 2 answers, one from each of the top two documents fetched.