Aimmy is a multi-functional AI-based Aim Aligner developed by BabyHamsta & Nori to make gaming more accessible to a wider audience.

Unlike most products of a similar caliber, Aimmy uses DirectML, ONNX, and YOLOv8 to detect players, and it is written in C# instead of Python. This makes it fast and efficient, and it is one of the few AI Aim Aligners that runs well on AMD GPUs, which cannot use CUDA-based hardware acceleration.

Aimmy also has many features that set it apart from other AI Aim Aligners, such as hot-swappable models and settings that let you adjust your aiming accuracy.

Aimmy is 100% free to use, ad-free, and not for profit. Aimmy is not, and never will be, for sale. It is a source-available product because we actively discourage other developers from making profit-focused forks of Aimmy.

What is the purpose of Aimmy? | How does Aimmy Work? | Features | Setup | How is Aimmy better than similar AI-Based tools? | What is the Web Model? | How do I train my own model?

- Gamers who are physically challenged

- Gamers who are mentally challenged

- Gamers who suffer from untreated/untreatable visual impairments

- Gamers who do not have access to a separate Human-Interface Device (HID) for controlling the pointer

- Gamers trying to improve their reaction time

- Gamers with poor hand-eye coordination

- Gamers who perform poorly in FPS games

Aimmy uses AI image recognition to detect opponents on screen and points the player's aim toward a detected opponent.

The gamer is then left to perform whatever actions they believe are necessary.

Additionally, a gamer who uses Aimmy has the option to turn on Auto-Trigger. Auto-Trigger removes the need to repeatedly press a button on the HID to shoot at a player. This is especially useful for physically challenged users who may have trouble with this action.

- AI Aim Aligning

- Aim Keybind Switching

- Adjustable FOV, Mouse Sensitivity, X Axis, Y Axis, and Model Confidence

- Auto Trigger and Trigger Delay

- Hot Model Swapping (no need to restart the application)

- Image capture while playing (for labeling to support further AI training)

- Download and Install the x64 version of .NET Runtime 7.0.X.X

- Download and Install the x64 version of Visual C++ Redistributable

- Download Aimmy from Releases (make sure it is the Aimmy zip and not the Source code zip)

- Extract the Aimmy.zip file

- Run Aimmy.exe

- Choose your Model and Enjoy :)
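If Aimmy fails to start, a missing runtime is a common cause. The Python sketch below is a hypothetical helper, not part of Aimmy: it runs `dotnet --list-runtimes` (a real .NET CLI command) and checks the output for a runtime with major version 7. The runtime name `Microsoft.NETCore.App` is an assumption; a desktop (WPF/WinForms) app would instead need `Microsoft.WindowsDesktop.App`, which you can pass via the `name` parameter.

```python
import shutil
import subprocess


def parse_runtimes(output: str) -> list[tuple[str, str]]:
    """Parse `dotnet --list-runtimes` output into (name, version) pairs.

    Each line looks like: Microsoft.NETCore.App 7.0.14 [<install path>]
    """
    runtimes = []
    for line in output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            runtimes.append((parts[0], parts[1]))
    return runtimes


def has_runtime(output: str, major: int = 7, name: str = "Microsoft.NETCore.App") -> bool:
    """Return True if a runtime with the given name and major version is listed."""
    return any(n == name and v.split(".")[0] == str(major) for n, v in parse_runtimes(output))


def installed_runtimes_output() -> str:
    """Run `dotnet --list-runtimes`; return '' if the dotnet host is not on PATH."""
    if shutil.which("dotnet") is None:
        return ""
    result = subprocess.run(
        ["dotnet", "--list-runtimes"], capture_output=True, text=True, check=False
    )
    return result.stdout


if __name__ == "__main__":
    # Assumed check: .NET 7 under the Microsoft.NETCore.App name (see note above).
    found = has_runtime(installed_runtimes_output())
    print(".NET 7 runtime found" if found else ".NET 7 runtime not found")
```

Parsing is kept separate from running the command so the check can be tested against sample output without .NET installed.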

Aimmy ships with two AI models by default: one game-specific model (Phantom Forces) and one universal model. Users can also make their own models, share them, and switch between them painlessly. This makes Aimmy versatile enough to use with thousands of games.

Aimmy also performs better than other AI Aim Aligners, detecting opponents within milliseconds on most CPUs and GPUs.

Aimmy comes with a well-designed, accessible user interface and many options to customize your personal user experience.

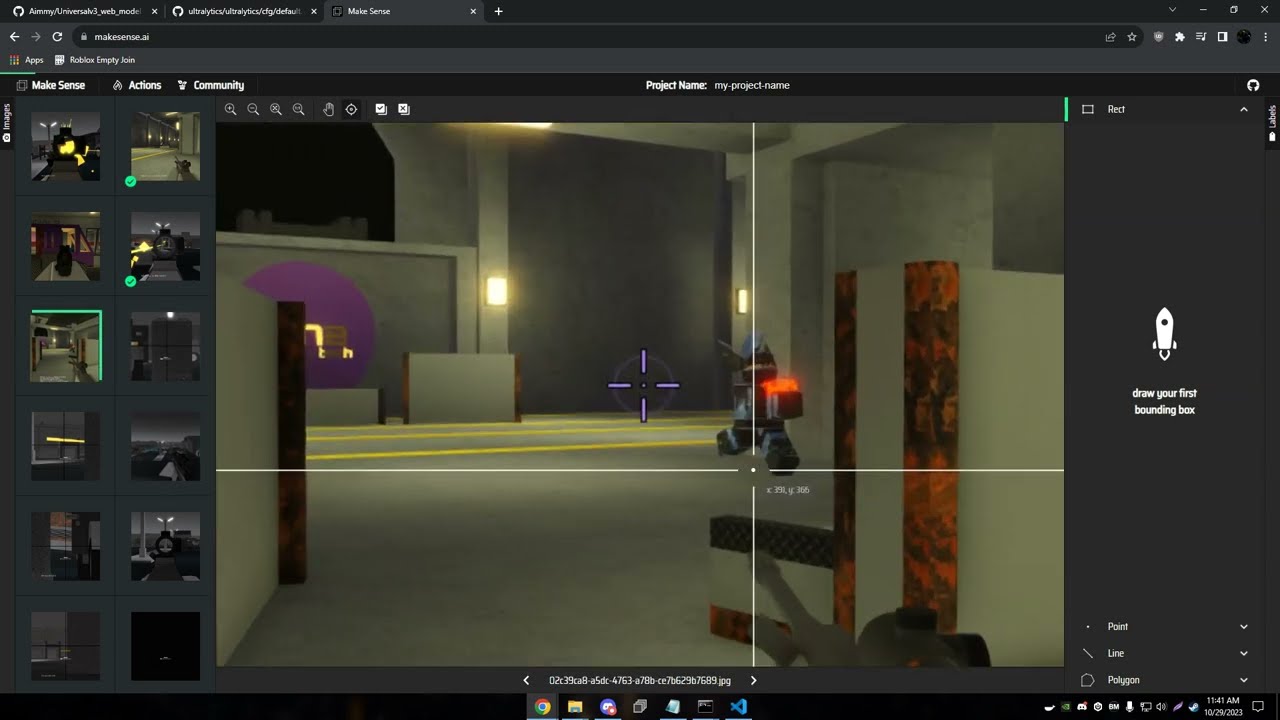

The web model is a TFJS (TensorFlow.js) export of the model. It lets you use the model to assist with image labeling. The labeled images can then be sent to us to help further train the PF/Universal model, or you can use them to train your own YOLOv8 model. You may wonder, "Why is it in YOLOv5 and not YOLOv8?" This is because we use a tool called MakeSense, which in my opinion is one of the easiest labeling tools and runs entirely in the browser. There may be other tools that accept a YOLOv8 web model.

You can visit MakeSense at https://www.makesense.ai, then load all of your images and select Object Detection.

Then run the AI locally, select YOLOv5, and upload all the web model files.

You can now go through your images, click and drag to draw a box around any enemies on screen, and approve the enemies auto-detected by the web model.

Once you are finished labeling, export the labels for AI training.
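MakeSense offers a YOLO export option for rectangle labels. In the standard YOLO text format, each image gets a `.txt` file with one line per box: the class index, then the box's center x, center y, width, and height, each normalized to the range 0 to 1 by the image size. The Python sketch below shows how a pixel-space box maps to one such line and back; the `Box` type and the `to_yolo_line`/`from_yolo_line` helpers are hypothetical illustrations, not part of Aimmy or MakeSense, and class index 0 is an assumed label.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in pixel coordinates (top-left corner plus size)."""
    x: float
    y: float
    width: float
    height: float


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Convert a pixel-space box to one YOLO label line.

    Format: class x_center y_center width height, with the four box
    values normalized by the image width and height.
    """
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image dimensions must be positive")
    x_center = (box.x + box.width / 2) / img_w
    y_center = (box.y + box.height / 2) / img_h
    w = box.width / img_w
    h = box.height / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int) -> tuple[int, Box]:
    """Parse a YOLO label line back into a class id and pixel-space box."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    class_id = int(parts[0])
    xc, yc, w, h = (float(p) for p in parts[1:])
    width = w * img_w
    height = h * img_h
    # Convert the center point back to the top-left corner.
    return class_id, Box(xc * img_w - width / 2, yc * img_h - height / 2, width, height)


if __name__ == "__main__":
    # A 100x200 px box at (860, 440) in a 1920x1080 screenshot, assumed class 0.
    print(to_yolo_line(0, Box(860, 440, 100, 200), 1920, 1080))
    # → 0 0.473958 0.500000 0.052083 0.185185
```

Because the coordinates are normalized, the same label file stays valid if the images are resized for training.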

Please see the video tutorial below on how to label images and train your own model. (Redirects to YouTube)