🦄️ Yatai: Model Deployment at scale on Kubernetes

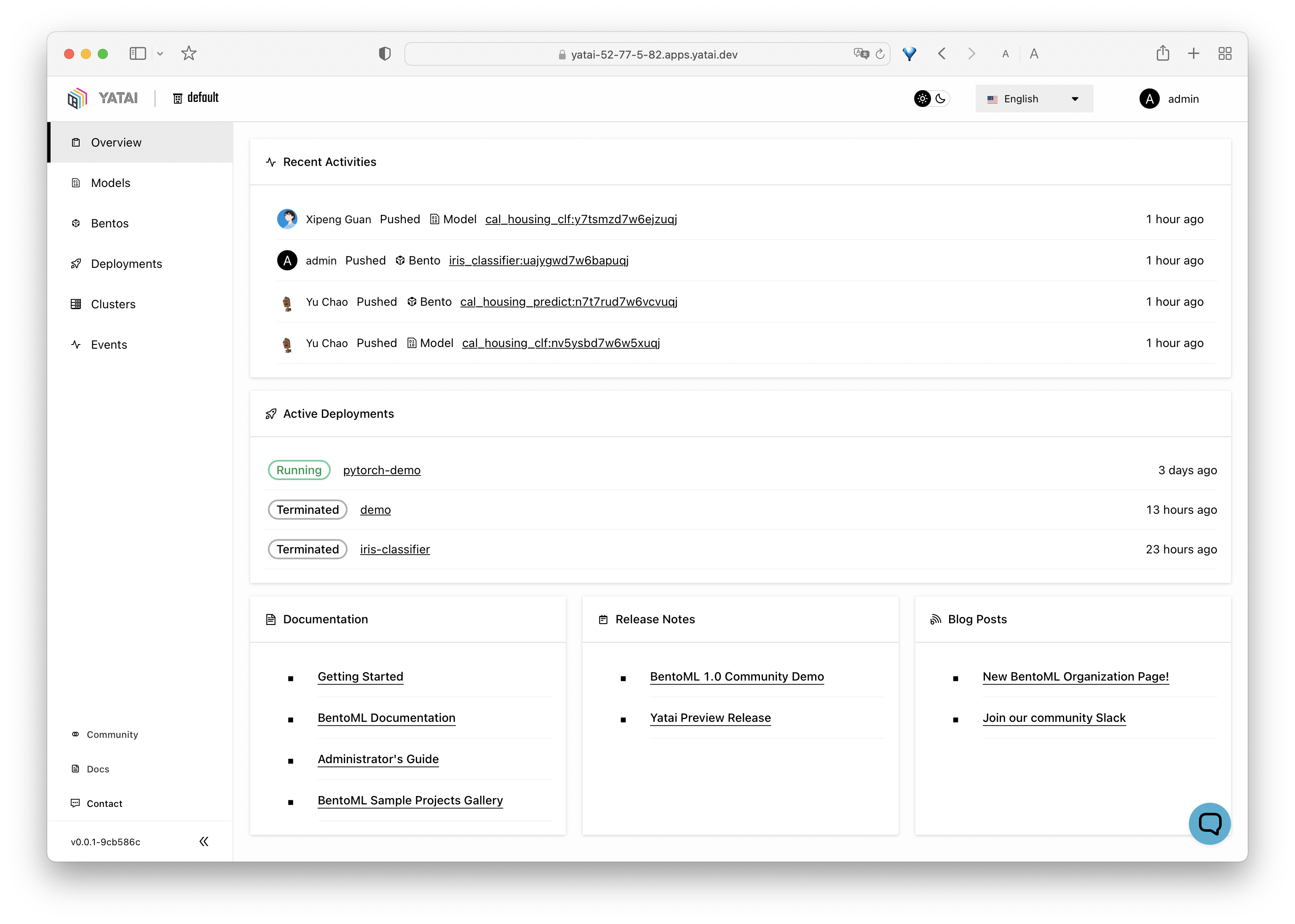

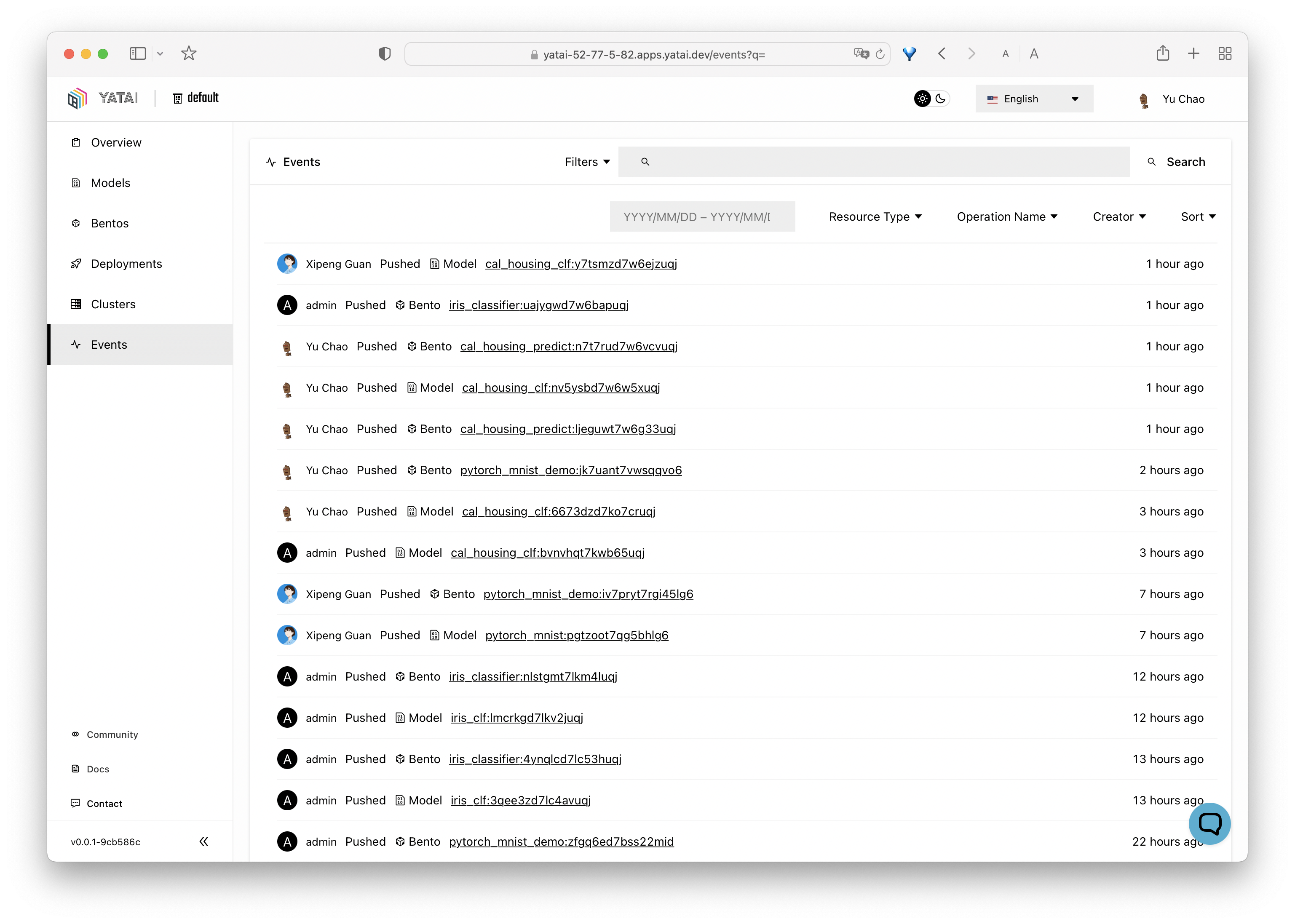

Yatai helps ML teams deploy large-scale model serving workloads on Kubernetes. It standardizes BentoML deployment on Kubernetes, provides a UI for managing all your ML models and deployments in one place, and enables advanced GitOps and CI/CD workflows.

👉 Pop into our Slack community! We're happy to help with any issue you face or even just to meet you and hear what you're working on :)

Core features:

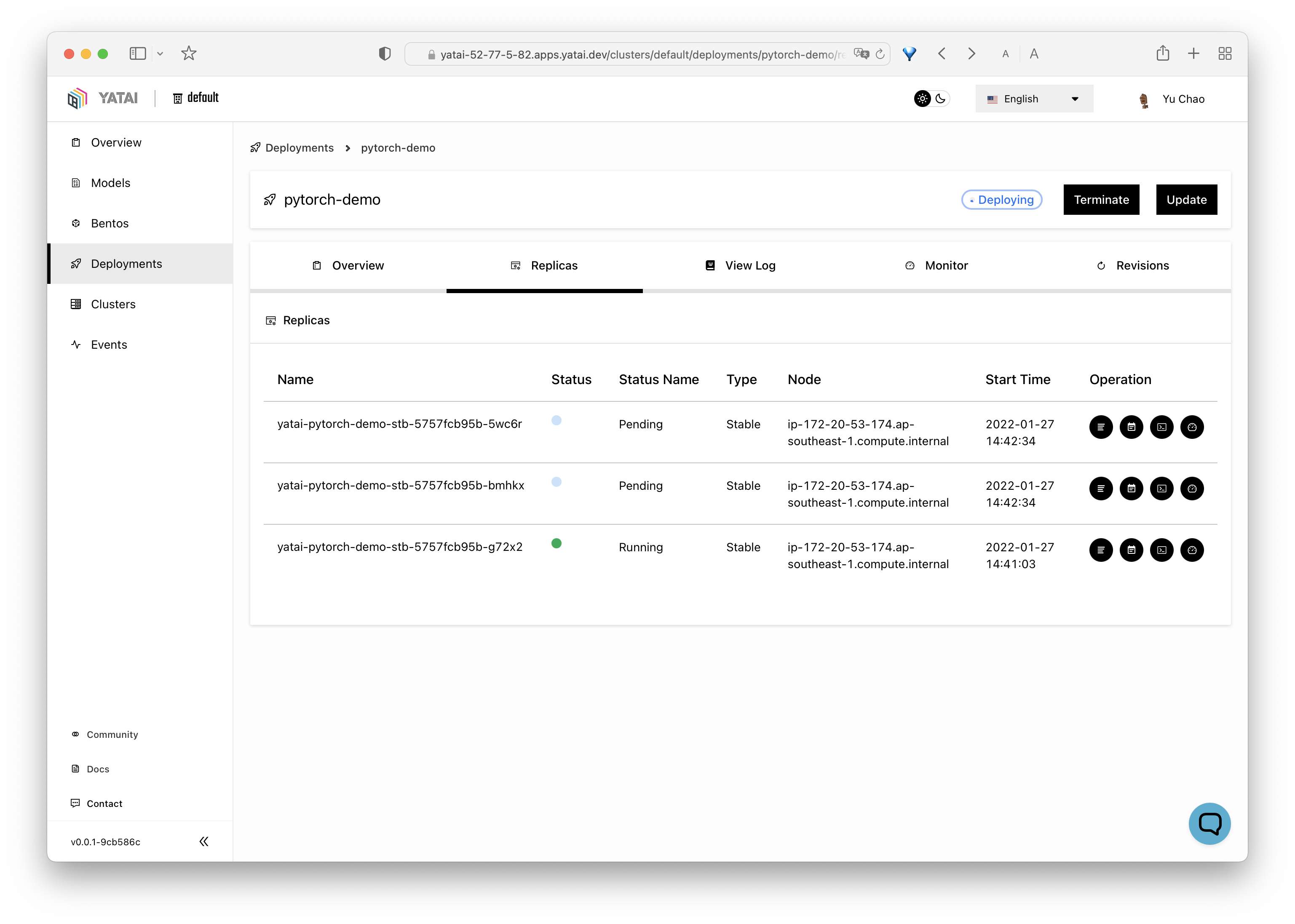

- Deployment Automation - deploy Bentos as auto-scaling API endpoints on Kubernetes and easily roll out new versions

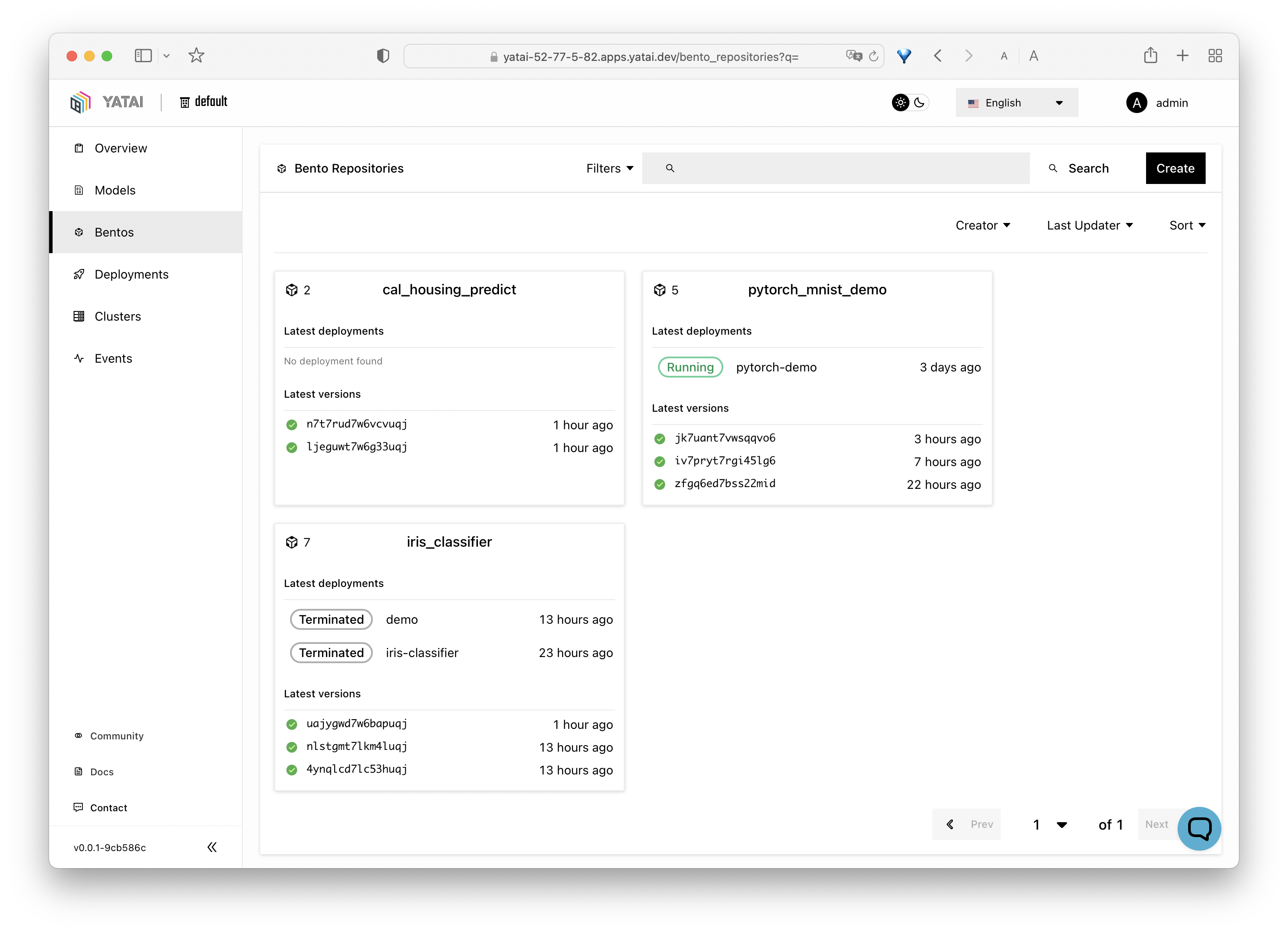

- Bento Registry - manage all your team's Bentos and Models, backed by cloud blob storage (S3, MinIO)

- Observability - a monitoring dashboard that helps users identify model performance issues

- CI/CD - flexible APIs for integrating with your training and CI pipelines

Why Yatai

- Yatai is built upon BentoML, the unified model serving framework that is high-performing and feature-rich

- Yatai focuses on the model serving and deployment part of your MLOps stack and works well with any ML training/monitoring platform, such as AWS SageMaker or MLflow

- Yatai is Kubernetes-native and integrates well with other cloud-native tools in the K8s ecosystem

- Yatai is human-centric, providing an easy-to-use Web UI and APIs for ML scientists, MLOps engineers, and project managers

Getting Started

1. Install Yatai locally with Minikube

- Prerequisites:

- Install the latest minikube: https://minikube.sigs.k8s.io/docs/start/

- Install the latest Helm: https://helm.sh/docs/intro/install/

- Start a minikube Kubernetes cluster:

minikube start --cpus 4 --memory 4096

- Install the Yatai Helm chart:

helm repo add yatai https://bentoml.github.io/yatai-chart
helm repo update
helm install yatai yatai/yatai -n yatai-system --create-namespace

- Wait for the installation to complete; this may take a few minutes:

helm status yatai -n yatai-system

- Start a minikube tunnel for accessing the Yatai UI:

sudo minikube tunnel

- Get the initialization link for creating your admin account:

export YATAI_INITIALIZATION_TOKEN=$(kubectl get secret yatai --namespace yatai-system -o jsonpath="{.data.initialization_token}" | base64 --decode)
echo "Visit: http://yatai.127.0.0.1.sslip.io/setup?token=$YATAI_INITIALIZATION_TOKEN"
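The command above reads the `initialization_token` field of the `yatai` Secret. Kubernetes stores Secret `data` values base64-encoded, which is why the output is piped through `base64 --decode`. A minimal Python sketch of the same step, using only the standard library, is shown below. It assumes you already have the Secret as JSON (for example from `kubectl get secret yatai -n yatai-system -o json`); the helper name `setup_url_from_secret` is hypothetical, and the host defaults to the local minikube address used in this guide.

```python
import base64
import json


def setup_url_from_secret(secret_json: str, host: str = "http://yatai.127.0.0.1.sslip.io") -> str:
    """Build the Yatai admin setup link from a Secret's JSON representation.

    Hypothetical helper: expects the output of
    `kubectl get secret yatai -n yatai-system -o json`.
    """
    secret = json.loads(secret_json)
    # Secret `data` values are base64-encoded; decode to recover the plain token.
    encoded = secret["data"]["initialization_token"]
    token = base64.b64decode(encoded).decode("utf-8")
    return f"{host}/setup?token={token}"


if __name__ == "__main__":
    # Made-up token ("abc123" base64-encoded), not a real Yatai value.
    sample = json.dumps({"data": {"initialization_token": base64.b64encode(b"abc123").decode()}})
    print(setup_url_from_secret(sample))
```

Like the shell version, the token is inserted into the URL without further escaping.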

2. Get an API token and log in with the BentoML CLI

- Create a new API token in Yatai web UI: http://yatai.127.0.0.1.sslip.io/api_tokens

- Copy the login command shown upon token creation and run it as a shell command, e.g.:

bentoml yatai login --api-token {YOUR_TOKEN_GOES_HERE} --endpoint http://yatai.127.0.0.1.sslip.io

3. Push your Bento to Yatai

- Train a sample ML model and build a Bento using code from the BentoML Quickstart Project:

git clone https://github.com/bentoml/gallery.git && cd ./gallery/quickstart
pip install -r ./requirements.txt
python train.py
bentoml build

- Push your newly built Bento to Yatai:

bentoml push iris_classifier:latest
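In a CI pipeline, the build-and-push steps above can be scripted. The sketch below simply shells out to the same `bentoml build` and `bentoml push` commands shown in this guide through Python's `subprocess` module. It assumes the BentoML CLI is installed, `bentoml yatai login` has already been run, and the job executes from the project directory (e.g. `gallery/quickstart`). The function name `build_and_push` and its `dry_run` flag are hypothetical conveniences, not part of BentoML.

```python
import subprocess


def build_and_push(bento_name: str, dry_run: bool = False) -> list[list[str]]:
    """Build a Bento in the current directory and push its latest version to Yatai.

    Hypothetical CI helper wrapping the BentoML CLI commands from this guide.
    With dry_run=True it only returns the commands without executing them.
    """
    commands = [
        ["bentoml", "build"],
        ["bentoml", "push", f"{bento_name}:latest"],
    ]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError so the CI step fails on a non-zero exit.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Print the commands that would run, without invoking the BentoML CLI.
    for cmd in build_and_push("iris_classifier", dry_run=True):
        print(" ".join(cmd))
```

Passing argument lists rather than a single shell string avoids shell quoting issues in the pipeline.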

4. Create your first deployment!

- A Bento Deployment can be created via the Web UI or via a kubectl command:

- Deploy via Web UI:

- Go to the deployments page: http://yatai.127.0.0.1.sslip.io/deployments

- Click the Create button and follow the instructions on the UI

- Deploy directly via a kubectl command:

- Define your Bento deployment in a YAML file:

# my_deployment.yaml
apiVersion: serving.yatai.ai/v1alpha2
kind: BentoDeployment
metadata:
  name: demo
spec:
  bento_tag: iris_classifier:3oevmqfvnkvwvuqj
  resources:
    limits:
      cpu: 1000m
    requests:
      cpu: 500m

- Apply the deployment to your minikube cluster:

kubectl apply -f my_deployment.yaml


- Monitor the deployment process on the Web UI and test the endpoint once the deployment is created:

curl \
  -X POST \
  -H "content-type: application/json" \
  --data "[[5, 4, 3, 2]]" \
  https://demo-default-yatai-127-0-0-1.apps.yatai.dev/classify
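Because `kubectl apply -f` accepts JSON as well as YAML manifests, a CI job could render the BentoDeployment for a given Bento tag with only the Python standard library. The sketch below reproduces the fields of `my_deployment.yaml` (`apiVersion: serving.yatai.ai/v1alpha2`, `kind: BentoDeployment`, `bento_tag`, and the CPU requests/limits). The function name `render_bento_deployment` and its parameters are hypothetical, and any other fields your Yatai version may support are not covered.

```python
import json


def render_bento_deployment(
    name: str,
    bento_tag: str,
    cpu_request: str = "500m",
    cpu_limit: str = "1000m",
) -> dict:
    """Return a BentoDeployment manifest mirroring my_deployment.yaml.

    Hypothetical helper: field names are copied from the YAML example in this guide.
    """
    if ":" not in bento_tag:
        # Bento tags have the form <name>:<version>, e.g. iris_classifier:3oevmqfvnkvwvuqj.
        raise ValueError(f"expected a tag like 'name:version', got {bento_tag!r}")
    return {
        "apiVersion": "serving.yatai.ai/v1alpha2",
        "kind": "BentoDeployment",
        "metadata": {"name": name},
        "spec": {
            "bento_tag": bento_tag,
            "resources": {
                "limits": {"cpu": cpu_limit},
                "requests": {"cpu": cpu_request},
            },
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON so it can be redirected to a file for kubectl.
    manifest = render_bento_deployment("demo", "iris_classifier:3oevmqfvnkvwvuqj")
    print(json.dumps(manifest, indent=2))
```

For example, saving this as a hypothetical `render.py`, running `python render.py > my_deployment.json` and then `kubectl apply -f my_deployment.json` would apply the same resource as the YAML file.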
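The curl test can also be expressed in Python with the standard library's `urllib.request`, which may be handy as a smoke-test step after a deployment. This is a sketch: the endpoint URL and the `[[5, 4, 3, 2]]` payload come from the curl example above, the helper names `build_classify_request` and `classify` are hypothetical, and the response body format depends on your Bento's service definition.

```python
import json
import urllib.request

# Endpoint copied from the curl example; adjust for your own deployment.
ENDPOINT = "https://demo-default-yatai-127-0-0-1.apps.yatai.dev/classify"


def build_classify_request(features: list[list[float]], url: str = ENDPOINT) -> urllib.request.Request:
    """Build the same JSON POST request as the curl example (hypothetical helper)."""
    body = json.dumps(features).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"content-type": "application/json"},
        method="POST",
    )


def classify(features: list[list[float]], url: str = ENDPOINT, timeout: float = 10.0) -> str:
    """Send the request and return the raw response body.

    Requires the deployment to be up and reachable from this machine.
    """
    req = build_classify_request(features, url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Once the deployment is reachable, `print(classify([[5, 4, 3, 2]]))` sends the request. Building the request separately from sending it keeps the payload and headers easy to check without network access.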

5. Moving to production

- See the Administrator's Guide for a comprehensive overview of deploying and configuring Yatai for production use.

Community

- To report a bug or request a feature, use GitHub Issues.

- For other discussions, use GitHub Discussions under the BentoML repo.

- To receive release announcements and get support, join us on Slack.

Contributing

There are many ways to contribute to the project:

- If you have any feedback on the project, share it with the community in GitHub Discussions under the BentoML repo.

- Report issues you're facing and give a "thumbs up" to issues and feature requests that are relevant to you.

- Investigate bugs and review other developers' pull requests.

- Contribute code or documentation to the project by submitting a GitHub pull request. See the development guide.