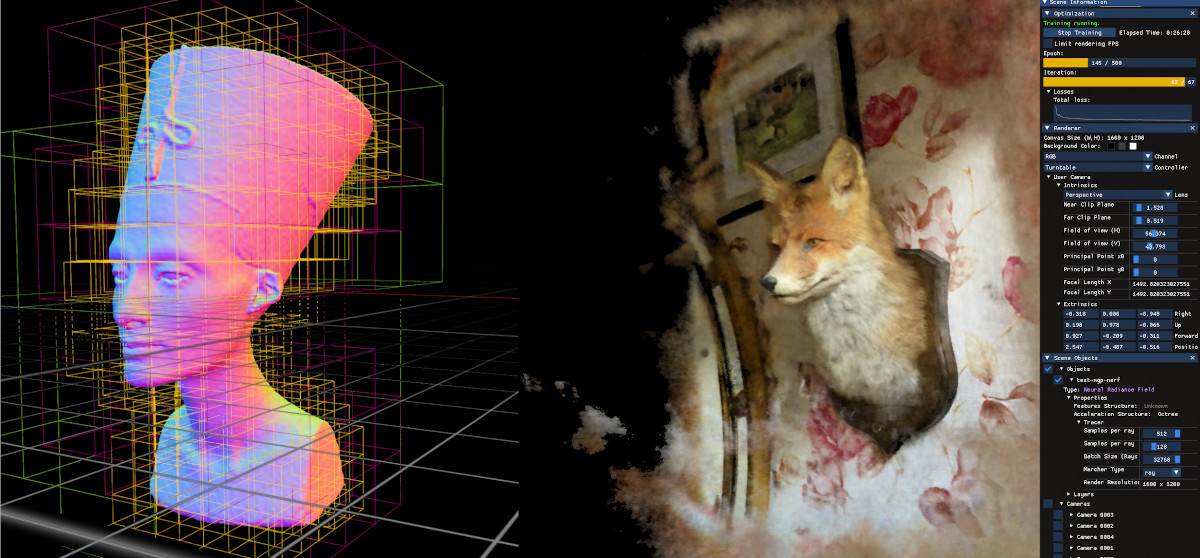

NVIDIA Kaolin Wisp is a PyTorch library powered by NVIDIA Kaolin Core to work with neural fields (including NeRFs, NGLOD, instant-ngp and VQAD).

NVIDIA Kaolin Wisp aims to provide a set of common utility functions for performing research on neural fields. This includes datasets, image I/O, mesh processing, and ray utility functions. Wisp also comes with building blocks like differentiable renderers and differentiable data structures (like octrees, hash grids, triplanar features) which are useful to build complex neural fields. It also includes debugging visualization tools, interactive rendering and training, logging, and trainer classes.
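To make the hash grid building block mentioned above more concrete, here is a minimal pure-Python sketch of the spatial hashing idea popularized by instant-ngp. It is not Wisp's API: the function names (`level_resolution`, `hash_index`, `corner_indices`) and parameters are hypothetical and chosen only for illustration. A real implementation would run on the GPU and interpolate learnable feature vectors stored at the returned slots.

```python
import math

# Per-dimension primes used by the instant-ngp spatial hash.
PRIMES = (1, 2654435761, 805459861)


def level_resolution(level, num_levels, min_res, max_res):
    """Grid resolution at a level, growing geometrically from min_res to max_res."""
    if num_levels == 1:
        return min_res
    growth = math.exp((math.log(max_res) - math.log(min_res)) / (num_levels - 1))
    return int(math.floor(min_res * growth ** level))


def hash_index(ix, iy, iz, table_size):
    """Map non-negative integer grid coordinates to a slot in a feature table.

    Python integers do not overflow, so this matches 32-bit arithmetic only
    when table_size is a power of two no larger than 2**32.
    """
    h = (ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])
    return h % table_size


def corner_indices(point, resolution, table_size):
    """Table slots of the 8 voxel corners surrounding a point in [0, 1]^3."""
    base = [int(math.floor(c * resolution)) for c in point]
    return [
        hash_index(base[0] + dx, base[1] + dy, base[2] + dz, table_size)
        for dx in (0, 1)
        for dy in (0, 1)
        for dz in (0, 1)
    ]


if __name__ == "__main__":
    # Hypothetical settings: 4 levels, 16 to 128 cells per axis, 2**14 slots.
    for level in range(4):
        res = level_resolution(level, 4, 16, 128)
        print(level, res, corner_indices((0.3, 0.6, 0.9), res, 2 ** 14))
```

Because many grid corners can map to the same slot at fine resolutions, collisions are expected; in hash-grid methods the downstream network is left to resolve them during training.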

Check our docsite for additional information!

For an overview on neural fields, we recommend you check out the EG STAR report: Neural Fields for Visual Computing and Beyond.

wisp 1.0.3 <-- main

- 17/04/23 The configuration system has been replaced! Check out the config page for usage instructions and backwards compatibility (breaking change). Note that the wisp core library remains compatible, but mains and trainers should be updated.

wisp 1.0.2 <-- stable

- 15/04/23 Jupyter notebook support has been added, which is useful for machines without a display.

- 01/02/23 `attrdict` dependency added as part of the new datasets framework. If you pull latest, make sure to `pip install attrdict`.

- 17/01/23 `pycuda` replaced with `cuda-python`. Wisp can be installed from pip now (if you pull, run `pip install -r requirements_app.txt`).

- 05/01/23 Mains are now introduced as standalone apps, for easier support of new pipelines (breaking change).

See installation instructions here.

We welcome & encourage external contributions to the codebase! For further details, read the FAQ and license page.

This codebase is licensed under the NVIDIA Source Code License. Commercial licenses are also available, free of charge. Please apply using this link (use "Other" and specify Kaolin Wisp): https://www.nvidia.com/en-us/research/inquiries/

If you find the NVIDIA Kaolin Wisp library useful for your research, please cite:

    @misc{KaolinWispLibrary,
          author = {Towaki Takikawa and Or Perel and Clement Fuji Tsang and Charles Loop and Joey Litalien and Jonathan Tremblay and Sanja Fidler and Maria Shugrina},
          title = {Kaolin Wisp: A PyTorch Library and Engine for Neural Fields Research},
          year = {2022},
          howpublished={\url{https://github.com/NVIDIAGameWorks/kaolin-wisp}}
    }

We thank James Lucas, Jonathan Tremblay, Valts Blukis, Anita Hu, and Nishkrit Desai for giving us early feedback and testing out the code at various stages throughout development. We thank Rogelio Olguin and Jonathan Tremblay for the Wisp reference data.


Our library is named after the will-o'-the-wisp, an atmospheric ghost light. Wisps are volumetric ghosts that are harder to model with common geometry representations like meshes. We provide a multiview dataset of the wisp as a reference dataset for a volumetric object. We also provide the Blender file and rendering scripts in case you want to generate specific data with this scene. Please refer to the readme.md for details on how to generate the data.