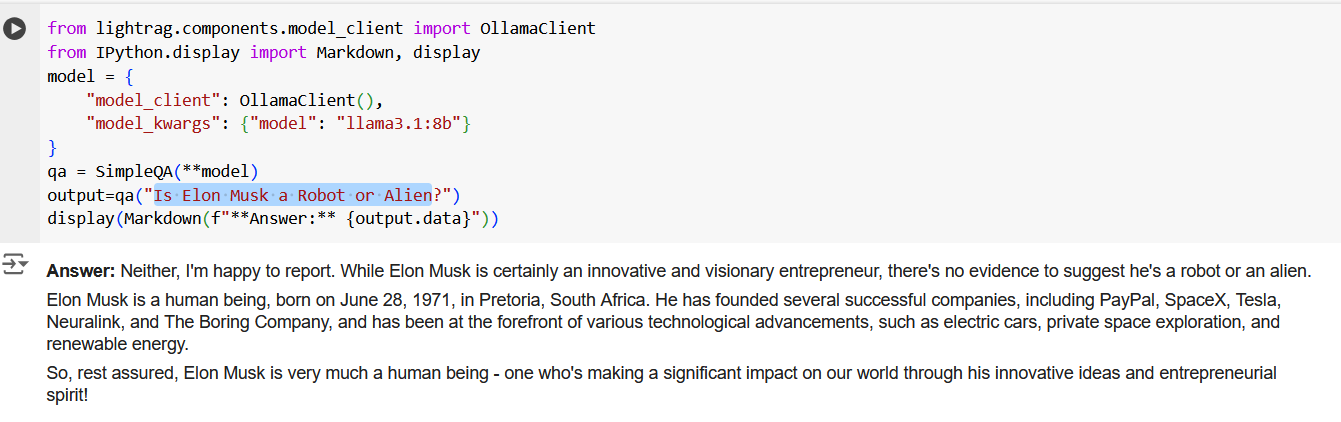

Sample output on Google Colab

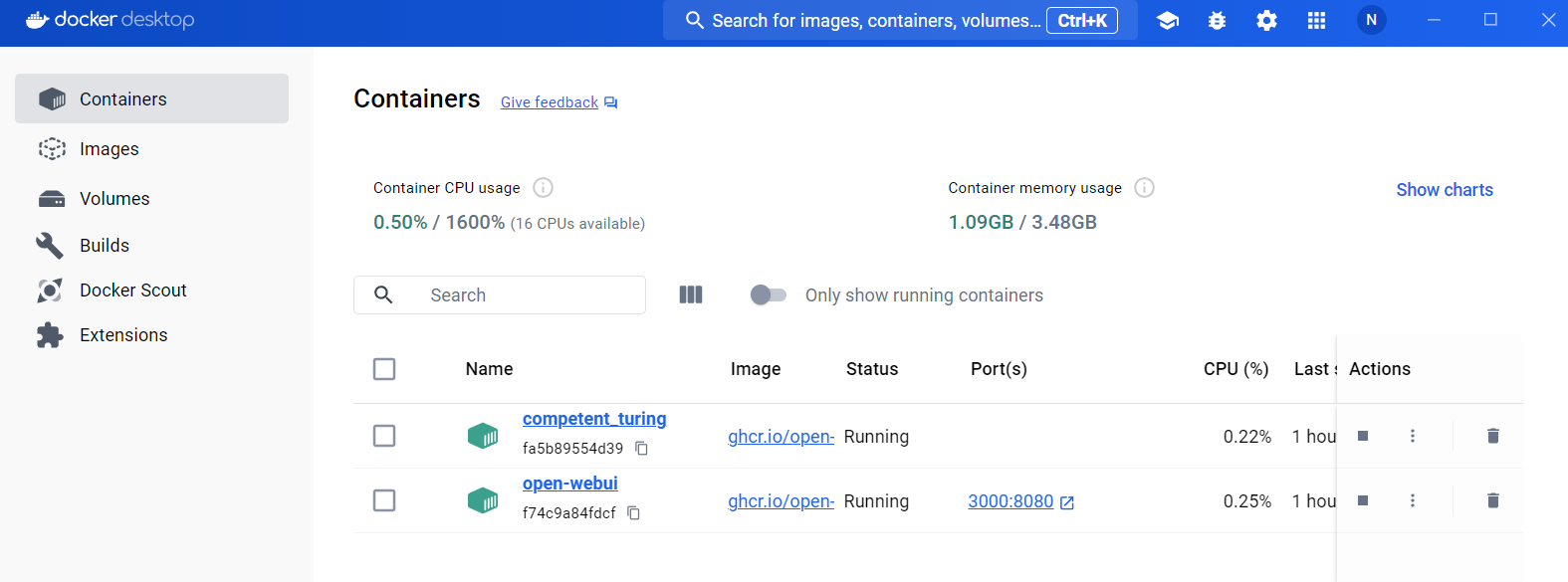

This project sets up the Ollama API server and integrates it with a simple Question-Answer (QA) component using the lightrag library.

The Ollama API server is configured to serve the llama3.1:8b model. This project demonstrates how to set up the server, pull the model, and use a QA component to generate responses based on user input.

- Python 3.x

- Git for Windows

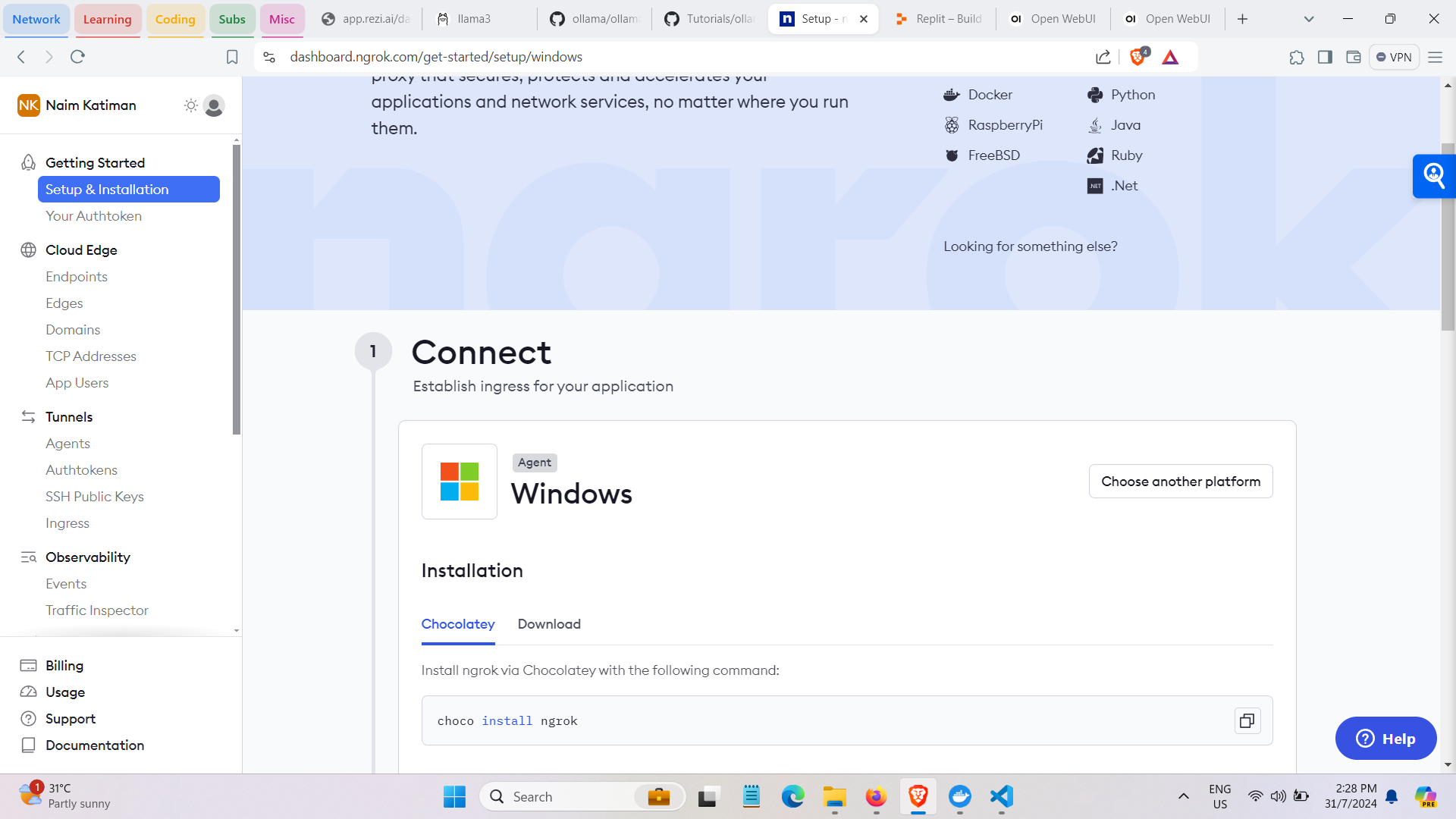

- `pip` package manager

- Chocolatey (for installing curl on Windows)
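Before starting, a quick preflight check can confirm the prerequisites above. The sketch below is a hypothetical helper (not part of this repository) that uses only the Python standard library. The tool names it looks for (`git`, `curl`, and `choco` on Windows) are assumptions based on the list above.

```python
import shutil
import sys
from typing import Callable, List, Optional, Sequence


def required_tools(platform: str = sys.platform) -> List[str]:
    """Command-line tools the setup steps rely on (hypothetical list)."""
    tools = ["git", "curl"]
    if platform == "win32":
        tools.append("choco")  # Chocolatey is only needed on Windows
    return tools


def missing_tools(
    tools: Sequence[str],
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def python_ok(version_info: Sequence[int] = sys.version_info) -> bool:
    """The README only asks for Python 3.x."""
    return version_info[0] == 3
```

Calling `missing_tools(required_tools())` returns an empty list when every tool is on PATH; anything it returns still needs to be installed.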


Clone the Repository:

    git clone https://github.com/<username>/ollama-setup.git
    cd ollama-setup


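Install Required Packages (Windows):

Open Command Prompt or PowerShell as Administrator and run the following commands:

    choco install curl
    curl -fsSL https://ollama.com/install.sh -o install.sh
    bash install.sh
    pip install -U lightrag[ollama]

Note that `install.sh` is Ollama's Linux installer and stops with an error on other operating systems. On native Windows you will likely need the Windows installer from ollama.com instead, or run these commands in WSL or a Linux environment such as Google Colab.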
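After installation, you can check that both pieces are where the script expects them. This is a hypothetical standard-library sketch; it assumes the `ollama` CLI is on PATH and that lightrag is importable as `lightrag`.

```python
import importlib.util
import shutil
import subprocess
from typing import Optional


def package_installed(name: str) -> bool:
    """True if a top-level package named `name` is importable in this interpreter."""
    return importlib.util.find_spec(name) is not None


def ollama_version() -> Optional[str]:
    """Return the text printed by `ollama --version`, or None if the CLI is not on PATH."""
    exe = shutil.which("ollama")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, check=False)
    # Fall back to stderr in case the CLI writes its output there.
    return result.stdout.strip() or result.stderr.strip()
```

For example, `package_installed("lightrag")` should be `True` after the `pip install` step, and `ollama_version()` should return a version string rather than `None`.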


Run the Script:

Open Command Prompt or PowerShell in the project directory and run:

    python ollama_setup.py
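The repository's `ollama_setup.py` is not reproduced here, but its first job (start the server, wait until it answers, pull the model) can be sketched with the standard library alone. Everything below is an assumption: `http://localhost:11434` is Ollama's usual default address, and the helper names are hypothetical.

```python
import subprocess
import time
import urllib.request
from typing import Callable, Optional

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama address
MODEL = "llama3.1:8b"


def server_is_up(url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET on the server's root URL answers with 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, refused connections and timeouts are all OSError subclasses
        return False


def wait_until(predicate: Callable[[], bool], timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll predicate() until it returns True; give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def start_server_and_pull(model: str = MODEL) -> Optional[subprocess.Popen]:
    """Start `ollama serve` if nothing is listening yet, then pull `model`."""
    server = None
    if not server_is_up():
        # Run the server in the background; its logs are discarded in this sketch.
        server = subprocess.Popen(
            ["ollama", "serve"], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
        )
        if not wait_until(server_is_up):
            server.terminate()
            raise RuntimeError("Ollama server did not become ready in time")
    # `ollama pull` downloads the model; check=True raises CalledProcessError on failure.
    subprocess.run(["ollama", "pull", model], check=True)
    return server
```

Checking `server_is_up()` first avoids launching a second server when one is already running (for example, a desktop install). The returned `Popen` handle, when not `None`, lets you call `terminate()` once you are done.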


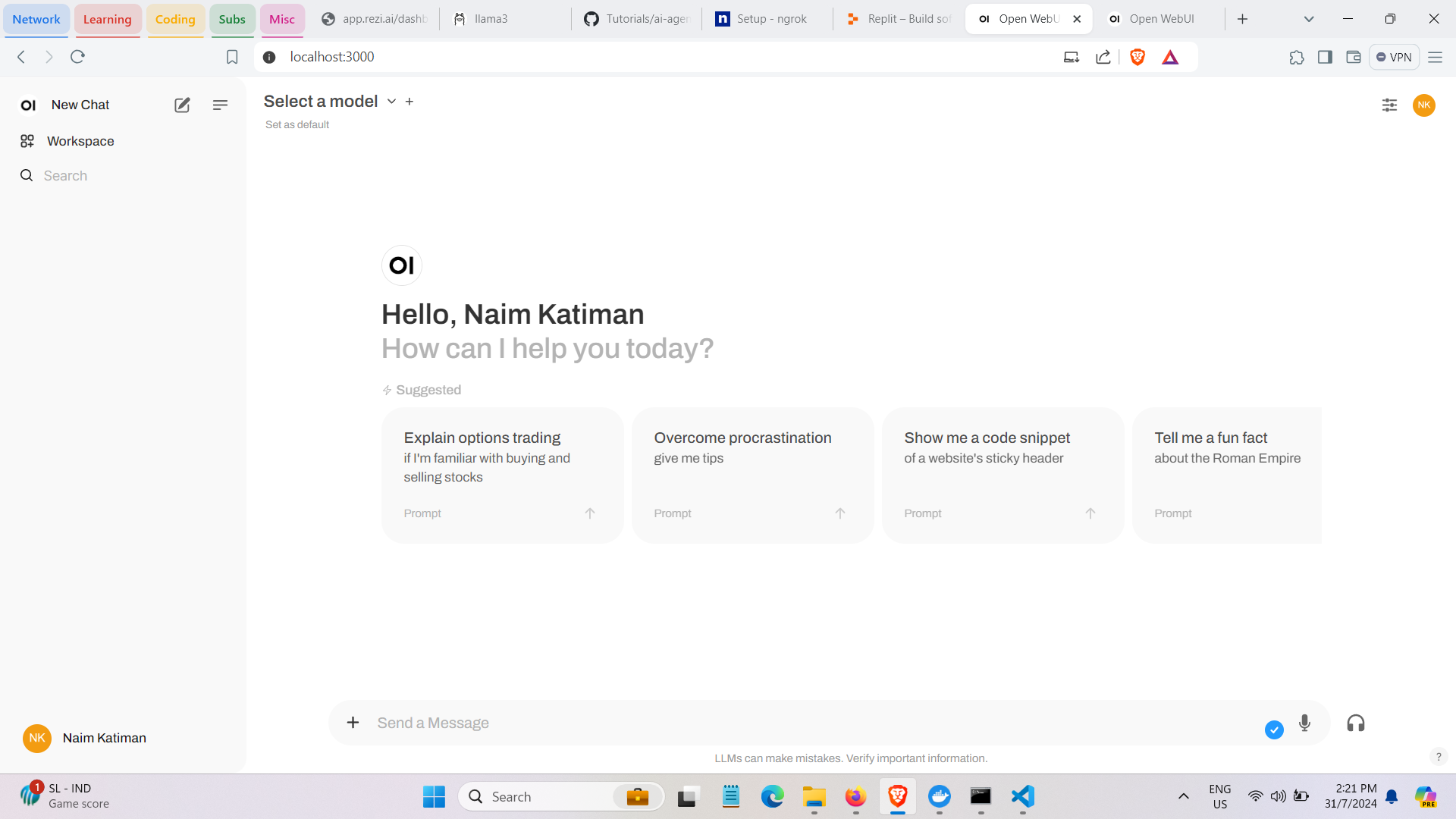

Interact with the QA Component:

The script starts the Ollama API server, pulls the `llama3.1:8b` model, and sets up a simple QA component that generates responses to user input.
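The project builds its QA component with lightrag, and that code is not shown here. As a dependency-free stand-in, the sketch below sends the question straight to Ollama's REST endpoint `/api/generate` (assumed at `http://localhost:11434`) and returns the `response` field. The prompt template and the `SimpleQA` class are hypothetical, and the `transport` parameter exists only so the class can be exercised without a running server.

```python
import json
import urllib.request
from typing import Callable

OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"

# Hypothetical prompt template; the script's actual template may differ.
TEMPLATE = "You are a helpful assistant.\nUser: {question}\nYou:"


def post_json(url: str, payload: dict, timeout: float = 120.0) -> dict:
    """POST `payload` as JSON and decode the JSON reply."""
    data = json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


class SimpleQA:
    """Minimal question-answer wrapper around Ollama's /api/generate endpoint."""

    def __init__(self, model: str = "llama3.1:8b",
                 transport: Callable[[str, dict], dict] = post_json):
        self.model = model
        self.transport = transport  # injectable so the class can be tested offline

    def build_payload(self, question: str) -> dict:
        # stream=False asks Ollama for one JSON object instead of a stream of chunks.
        return {"model": self.model, "prompt": TEMPLATE.format(question=question), "stream": False}

    def call(self, question: str) -> str:
        reply = self.transport(OLLAMA_GENERATE_URL, self.build_payload(question))
        return reply["response"].strip()
```

With the server running and the model pulled, `SimpleQA().call("Why is the sky blue?")` returns the model's answer as a string.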

Example:

When prompted, enter a question like:

    How do you born?

The component will generate and display an answer.
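The exchange above amounts to a read-answer-print loop. Here is a hypothetical version that takes any answer function (for example the `call` method of a QA object) and stops on `exit`, `quit`, or end of input. The stop words and prompt text are assumptions, not the script's actual interface.

```python
from typing import Callable, Iterable, Iterator


def prompt_lines(prompt: str = "Ask a question (or 'exit'): ") -> Iterator[str]:
    """Yield lines typed by the user until end of input (Ctrl-D, or Ctrl-Z then Enter on Windows)."""
    while True:
        try:
            yield input(prompt)
        except EOFError:
            return


def run_qa_loop(
    answer: Callable[[str], str],
    lines: Iterable[str],
    write: Callable[[str], None] = print,
) -> int:
    """Answer each non-empty line until 'exit' or 'quit'; return how many questions were answered."""
    answered = 0
    for line in lines:
        question = line.strip()
        if question.lower() in {"exit", "quit"}:
            break
        if not question:
            continue  # ignore blank lines
        write(f"Answer: {answer(question)}")
        answered += 1
    return answered
```

Interactively, this would be called as `run_qa_loop(qa.call, prompt_lines())`; passing a plain list instead of `prompt_lines()` makes the loop easy to test.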

Contributions are welcome! Please fork the repository and create a pull request with your changes.

This project is licensed under the MIT License. See the LICENSE file for details.