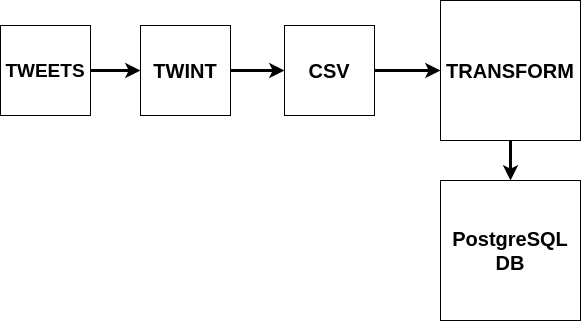

Core idea: build an ETL pipeline that scrapes Twitter for a given topic and loads the results into a database.

To scrape Twitter I will use twint, which scrapes tweets without authentication or the official API and so is not bound by the API's fetch limits. After scraping the given topic, I will store the raw data in a CSV file.
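A minimal sketch of the extract step, using twint's `Config` object and `twint.run.Search`. The function name `scrape_topic`, its parameters, and the output path are hypothetical names chosen for illustration; twint is imported inside the function only so the module can be loaded on machines where twint is not installed.

```python
def scrape_topic(topic, out_path, limit=None):
    """Scrape tweets matching `topic` and write them to a CSV file.

    `scrape_topic`, `out_path` and `limit` are illustrative names,
    not part of twint itself.
    """
    import twint  # third-party scraper; imported lazily (see lead-in)

    config = twint.Config()
    config.Search = topic        # search query, i.e. the topic
    config.Store_csv = True      # write results as CSV
    config.Output = out_path     # path of the raw CSV file
    if limit is not None:
        config.Limit = limit     # optional cap on the number of tweets
    twint.run.Search(config)
```

For example, `scrape_topic("data engineering", "raw_tweets.csv", limit=500)` would write up to roughly 500 matching tweets to `raw_tweets.csv`.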

Once I have the raw CSV file, I will select the columns to keep; for this project these are the tweet text, time, timezone, and language. I then clean the data by converting all timestamps to UTC.
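The transform step could look roughly like the sketch below, which uses only the standard library. It assumes the raw CSV has columns named `id`, `tweet`, `date`, `time`, `timezone` and `language`, and that `timezone` holds a UTC offset such as `+0100`; these column names and formats are assumptions and should be checked against the actual file. The output key `created_at_utc` is a name made up for this example.

```python
import csv
from datetime import datetime, timezone

# Columns copied through unchanged (assumed names, see lead-in).
KEEP = ("id", "tweet", "language")

def clean_row(row):
    """Keep the wanted columns and express the timestamp in UTC."""
    local = datetime.strptime(
        f"{row['date']} {row['time']} {row['timezone']}",
        "%Y-%m-%d %H:%M:%S %z",  # %z parses offsets such as +0100
    )
    cleaned = {key: row[key] for key in KEEP}
    cleaned["created_at_utc"] = local.astimezone(timezone.utc).isoformat()
    return cleaned

def clean_csv(path):
    """Read the raw CSV at `path` and return a list of cleaned rows."""
    with open(path, newline="", encoding="utf-8") as handle:
        return [clean_row(row) for row in csv.DictReader(handle)]
```

A tweet stamped `12:30:00` with offset `+0100` comes out as `11:30:00` UTC, so rows scraped from different timezones become directly comparable.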

As a final step, I will store the data so that it is available for analysis. For this I will use PostgreSQL. Since this is a SQL database, I will define a primary key, a constraint that uniquely identifies each record in the table. For the primary key I will use the tweet id provided by twint.
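A sketch of the load step, assuming a table named `tweets` and rows shaped as dictionaries with the keys `id`, `tweet`, `created_at_utc` and `language` (all hypothetical names for this example). `load_tweets` takes any DB-API connection that uses `%s` placeholders, such as one returned by `psycopg2.connect(...)`. Because the tweet id is the primary key, `ON CONFLICT (id) DO NOTHING` lets the same tweet be scraped twice without producing a duplicate row or an error.

```python
CREATE_TABLE = """
CREATE TABLE IF NOT EXISTS tweets (
    id             BIGINT PRIMARY KEY,      -- tweet id from twint
    tweet          TEXT NOT NULL,
    created_at_utc TIMESTAMPTZ NOT NULL,
    language       TEXT
)
"""

INSERT_TWEET = """
INSERT INTO tweets (id, tweet, created_at_utc, language)
VALUES (%s, %s, %s, %s)
ON CONFLICT (id) DO NOTHING
"""

def load_tweets(conn, rows):
    """Create the table if needed and insert `rows`, skipping known ids."""
    params = [
        (int(r["id"]), r["tweet"], r["created_at_utc"], r["language"])
        for r in rows
    ]
    cur = conn.cursor()
    try:
        cur.execute(CREATE_TABLE)
        cur.executemany(INSERT_TWEET, params)
        conn.commit()
    finally:
        cur.close()
```

Skipping conflicts rather than updating is a design choice for this sketch; if tweet fields can change between runs, `DO UPDATE` would be the alternative.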

To keep data flowing into the database, it helps to have a tool that automates this procedure. I will use Apache Airflow, which I chose because it is open source and is used by many data companies.