
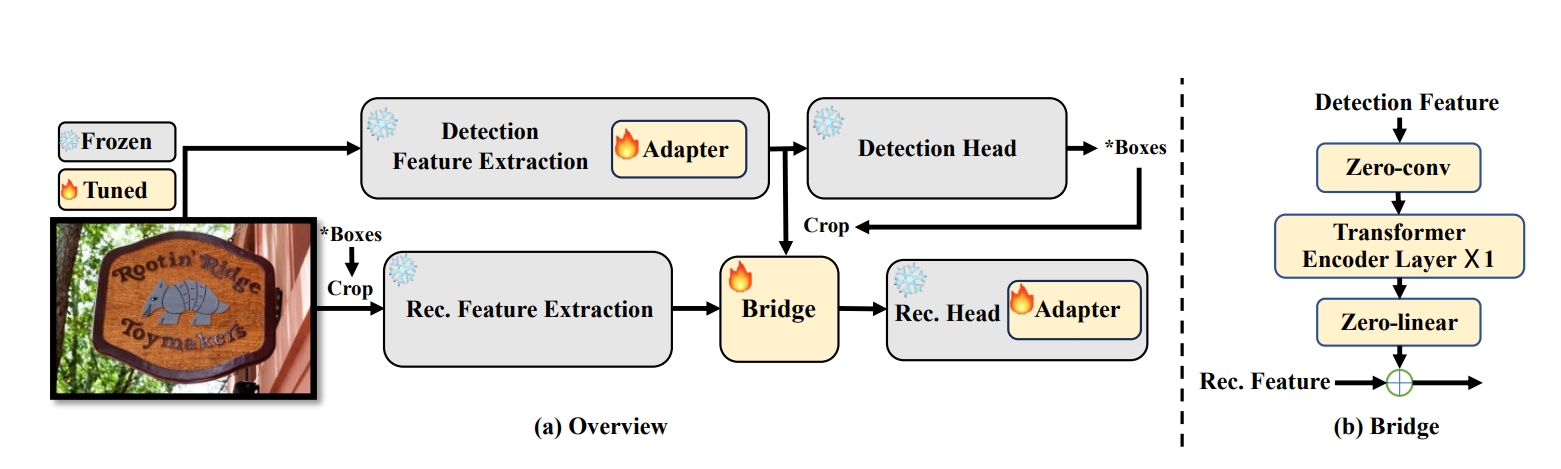

This is the official repo for the paper "Bridging the Gap Between End-to-End and Two-Step Text Spotting", which has been accepted to CVPR 2024.

[Apr. 08, 2024] The paper has been submitted to arXiv. 🔥🔥🔥 The model can be trained on a single RTX 3090 GPU.

Total-Text

| Method | Det-P | Det-R | Det-F1 | E2E-None | E2E-Full | Weights |
|---|---|---|---|---|---|---|
| DG-Bridge Spotter | 92.0 | 86.5 | 89.2 | 83.3 | 88.3 | Google Drive |

CTW1500

| Method | Det-P | Det-R | Det-F1 | E2E-None | E2E-Full | Weights |
|---|---|---|---|---|---|---|
| DG-Bridge Spotter | 92.1 | 86.2 | 89.0 | 69.8 | 83.9 | Google Drive |

ICDAR 2015 (IC15)

| Method | Det-P | Det-R | Det-F1 | E2E-S | E2E-W | E2E-G | Weights |
|---|---|---|---|---|---|---|---|
| TG-Bridge Spotter | 93.8 | 87.5 | 90.5 | 89.1 | 84.2 | 80.4 | Google Drive |
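Det-F1 is the standard harmonic mean of Det-P and Det-R. The short Python check below recomputes it from the values in the tables above. Because Det-P and Det-R are themselves rounded to one decimal, the recomputed F1 can differ from the reported one in the last digit (for example, CTW1500 recomputes to about 89.05 against the reported 89.0).

```python
# Det-F1 is the harmonic mean of detection precision (Det-P) and recall (Det-R).
def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (Det-P, Det-R, reported Det-F1) copied from the tables above.
reported = {
    "Total-Text": (92.0, 86.5, 89.2),
    "CTW1500": (92.1, 86.2, 89.0),
    "ICDAR 2015": (93.8, 87.5, 90.5),
}

for name, (p, r, f1) in reported.items():
    # P and R are already rounded, so expect agreement only to about 0.1.
    print(f"{name}: recomputed {f1_score(p, r):.2f}, reported {f1}")
```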

We recommend setting up the environment with Anaconda. The suggested configuration is Python 3.8, PyTorch 1.9.1 (or 1.9.0), CUDA 11.1, and Detectron2 (v0.6).

```bash
conda create -n Bridge python=3.8 -y
conda activate Bridge
pip install torch==1.9.1+cu111 torchvision==0.10.1+cu111 -f https://download.pytorch.org/whl/torch_stable.html
pip install opencv-python scipy timm shapely albumentations Polygon3
pip install setuptools==59.5.0
git clone https://github.com/mxin262/Bridging-Text-Spotting.git
cd Bridging-Text-Spotting
cd detectron2
python setup.py build develop
cd ..
python setup.py build develop
```
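After installation, one way to confirm that the environment matches the suggested versions is to query the installed package metadata. The Python sketch below is not part of the repo; it uses the standard-library `importlib.metadata` module and assumes that the bundled Detectron2 copy registers itself under the distribution name `detectron2` when built with `setup.py build develop`.

```python
"""Compare installed package versions with the suggested setup (a sketch)."""
import sys
from importlib import metadata

# Suggested versions taken from this README. PyTorch wheels carry a local
# tag such as "+cu111", which is stripped before comparing.
SUGGESTED = {
    "torch": ("1.9.1", "1.9.0"),
    "torchvision": ("0.10.1",),
    "detectron2": ("0.6",),  # assumption: the bundled copy reports version 0.6
}


def check_versions(suggested=SUGGESTED):
    """Return {package: (installed version or None, matches a suggested version)}."""
    report = {}
    for pkg, wanted in suggested.items():
        try:
            installed = metadata.version(pkg)
        except metadata.PackageNotFoundError:
            report[pkg] = (None, False)
            continue
        base = installed.split("+")[0]  # "1.9.1+cu111" -> "1.9.1"
        report[pkg] = (installed, base in wanted)
    return report


if __name__ == "__main__":
    print(f"Python {sys.version_info.major}.{sys.version_info.minor} (suggested: 3.8)")
    for pkg, (version, ok) in check_versions().items():
        if version is None:
            status = "not installed"
        else:
            status = "ok" if ok else "differs from suggestion"
        print(f"{pkg:<12} {version or '-':<18} {status}")
```

A mismatch is not necessarily an error; the versions above are suggestions, and other combinations may also work.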

Total-Text (including rotated images): link

CTW1500 (including rotated images): link

ICDAR2015 (images): link

JSON files for Total-Text and CTW1500: OneDrive | BaiduNetdisk (44yt)

JSON files for ICDAR2015: link

Evaluation files: link. Extract them under the `datasets` folder.

Organize them as follows:

```
|- datasets
   |- totaltext
   |  |- test_images_rotate
   |  |- train_images_rotate
   |  |- test_poly.json
   |  |- test_poly_rotate.json
   |  |- train_poly_ori.json
   |  |- train_poly_pos.json
   |  |- train_poly_rotate_ori.json
   |  └─ train_poly_rotate_pos.json
   |- ctw1500
   |  |- test_images
   |  |- train_images_rotate
   |  |- test_poly.json
   |  └─ train_poly_rotate_pos.json
   |- icdar2015
   |  |- test_images
   |  |- train_images
   |  |- test.json
   |  └─ train.json
   |- evaluation
   |  |- lexicons
   |  |- gt_totaltext.zip
   |  |- gt_ctw1500.zip
   |  |- gt_inversetext.zip
   |  └─ gt_totaltext_rotate.zip
```
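Before training, it can help to confirm that every entry in the tree above is in place. The helper below is hypothetical (it is not shipped with the repo) and assumes the `datasets` folder sits in the directory from which the training commands are run; it simply reports which of the listed relative paths are missing.

```python
"""Report entries from the expected datasets/ layout that are missing (hypothetical helper)."""
from pathlib import Path

# Relative paths copied from the directory tree in this README.
EXPECTED = [
    "totaltext/test_images_rotate",
    "totaltext/train_images_rotate",
    "totaltext/test_poly.json",
    "totaltext/test_poly_rotate.json",
    "totaltext/train_poly_ori.json",
    "totaltext/train_poly_pos.json",
    "totaltext/train_poly_rotate_ori.json",
    "totaltext/train_poly_rotate_pos.json",
    "ctw1500/test_images",
    "ctw1500/train_images_rotate",
    "ctw1500/test_poly.json",
    "ctw1500/train_poly_rotate_pos.json",
    "icdar2015/test_images",
    "icdar2015/train_images",
    "icdar2015/test.json",
    "icdar2015/train.json",
    "evaluation/lexicons",
    "evaluation/gt_totaltext.zip",
    "evaluation/gt_ctw1500.zip",
    "evaluation/gt_inversetext.zip",
    "evaluation/gt_totaltext_rotate.zip",
]


def missing_paths(root="datasets", expected=EXPECTED):
    """Return the entries of `expected` that do not exist under `root`, in order."""
    base = Path(root)
    return [rel for rel in expected if not (base / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing entries under datasets/:")
        for rel in missing:
            print("  " + rel)
    else:
        print("datasets/ layout looks complete.")
```

If you only work with one benchmark, pass a shortened `expected` list containing just that benchmark's entries.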

The script for generating the positional label form used by DPText-DETR is provided in `process_positional_label.py`.

Download the pre-trained models from DPText-DETR, DiG, and TESTR.

Use the following command to fine-tune a pre-trained model on the target benchmark. For example, on Total-Text:

```bash
python tools/train_net.py --config-file configs/Bridge/TotalText/R_50_poly.yaml --num-gpus 4 MODEL.WEIGHTS totaltext_final.pth
```
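The trailing `MODEL.WEIGHTS totaltext_final.pth` is a config override. Assuming `tools/train_net.py` follows the standard Detectron2 setup, such trailing arguments are collected as a flat `KEY VALUE` list and applied with yacs's `CfgNode.merge_from_list`. The pure-Python sketch below imitates that behaviour on a plain dict to show how dotted keys map onto the nested config. It is a simplified illustration, not Detectron2's code: it omits yacs's type checking, and the `SOLVER.IMS_PER_BATCH` entry with value 8 is only an illustrative placeholder.

```python
"""Simplified sketch of how KEY VALUE overrides update a nested config."""
from ast import literal_eval


def merge_from_list(cfg, opts):
    """Apply [key1, value1, key2, value2, ...] overrides to a nested dict in place."""
    if len(opts) % 2 != 0:
        raise ValueError(f"Override list must have an even length, got {opts}")
    for full_key, raw_value in zip(opts[0::2], opts[1::2]):
        *parents, leaf = full_key.split(".")
        node = cfg
        for name in parents:
            node = node[name]  # KeyError for an unknown section
        if leaf not in node:
            raise KeyError(f"Non-existent config key: {full_key}")
        try:
            value = literal_eval(raw_value)  # "4" -> 4, "[1, 2]" -> [1, 2]
        except (ValueError, SyntaxError):
            value = raw_value  # plain strings such as file paths stay as str
        node[leaf] = value
    return cfg


# Illustrative config; the real one is loaded from the --config-file YAML.
cfg = {"MODEL": {"WEIGHTS": ""}, "SOLVER": {"IMS_PER_BATCH": 8}}
merge_from_list(cfg, ["MODEL.WEIGHTS", "totaltext_final.pth"])
print(cfg["MODEL"]["WEIGHTS"])
```

Because overrides are applied after the YAML file is loaded, any key set on the command line takes precedence over the value in the config file.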

To evaluate a trained model:

```bash
python tools/train_net.py --config-file ${CONFIG_FILE} --eval-only MODEL.WEIGHTS ${MODEL_PATH}
```

To visualize predictions on a single image or a folder of images:

```bash
python demo/demo.py --config-file ${CONFIG_FILE} --input ${IMAGES_FOLDER_OR_ONE_IMAGE_PATH} --output ${OUTPUT_PATH} --opts MODEL.WEIGHTS ${MODEL_PATH}
```

If you find Bridge Text Spotting useful in your research, please consider citing:

```bibtex
@inproceedings{huang2024bridge,
  title={Bridging the Gap Between End-to-End and Two-Step Text Spotting},
  author={Huang, Mingxin and Li, Hongliang and Liu, Yuliang and Bai, Xiang and Jin, Lianwen},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}
```

Acknowledgement: AdelaiDet, DPText-DETR, DiG, and TESTR. Thanks for their great work!