See our publication using PointSDF for real robotic grasping here

PointSDF is a novel, point cloud based, implicit surface reconstruction learning architecture based on recent advances in the Computer Vision community [1,2,3]. Our approach uses new advances in point cloud based learning [1] in an architecture designed similar to [2], but with the implicit surface representation introduced in [3]. Our approach allows for fast point cloud based, reconstructions, specifically to be utilized in grasp planning.

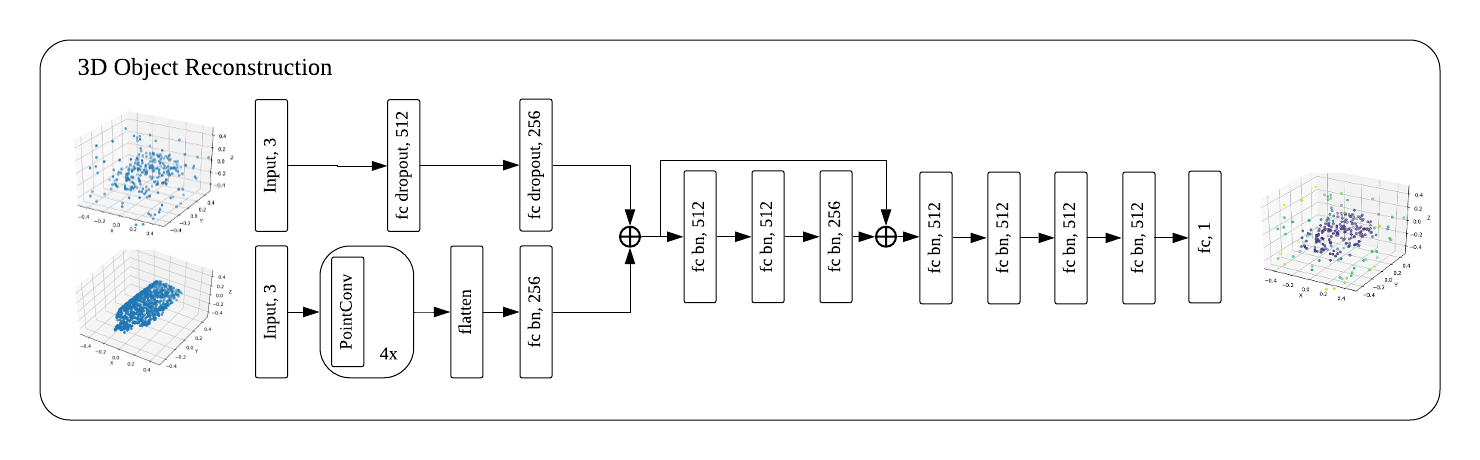

PointSDF represents an object as the zero level set of a function that predicts the Signed Distance Function value for a given query point in arbitrary 3D space around an object. This is a regression problem, which we train using MSE loss.

We embed input point clouds using 4 PointConv embedding layers [1]. Query points are embedded using dropout FC layers. The point clouds are embedded and appended to each input query points's embedding. This joint embedding is passed through 8 FC layers with Batch Norm and ReLU activations (also fed in at layer 4).

Our code depends on the following python libraries:

- Tensorflow (>=1.9.0)

- numpy

- mcubes

- trimesh

- matplotlib

We additionally rely on PointConv for our embedding layers. PointConv should be cloned from here and their instructions should be followed to compile their custom TF operations. Additionally, the environment variable POINTCONV_HOME should be set to point at the pointconv repository folder.

You can find relevant datasets and model checkpoint files here.

For full list of options run:

python main.py -h

Not all options are necessary each run and have reasonable defaults.

Training run example:

python main.py --learning_rate 1e-5 --optimizer adam --model_func pointconv --model_name test_training --model_path ~/models/sdf/ --log_path ~/logs/ --batch_size 8 --epochs 100 --training --train_path /dataspace/ReconstructionData/SDF_Full/Train/ --validation_path /dataspace/ReconstructionData/SDF_Full/Validation/ --sdf_count 256

[1] Wu, Wenxuan, Zhongang Qi, and Li Fuxin. "PointConv: Deep Convolutional Networks on 3D Point Clouds." arXiv preprint arXiv:1811.07246 (2018).

[2] Joon Park, Jeong, et al. "DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

[3] Mescheder, Lars, et al. "Occupancy Networks: Learning 3D Reconstruction in Function Space." arXiv preprint arXiv:1812.03828 (2018).

[4] Kappler, Daniel, Jeannette Bohg, and Stefan Schaal. "Leveraging big data for grasp planning." 2015 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2015.

Mark Van der Merwe: mark.vandermerwe@utah.edu