This is an ongoing project that aims to Edit and Generate Anything in an image, powered by Segment Anything, ControlNet, BLIP2, Stable Diffusion, etc.

A project for fun. Contributions and suggestions of any form are very welcome!

2023/04/10 - An initial version of edit-anything is in sam2edit.py.

2023/04/10 - We converted the pretrained model to the diffusers style. The pretrained model is loaded automatically when using sam2image_diffuser.py. Now you can combine our pretrained model with different base models easily!

2023/04/09 - We released a pretrained Stable Diffusion based ControlNet model that generates images conditioned on SAM segmentation.

Highlight features:

- A pretrained ControlNet with SAM masks as the condition enables image generation with fine-grained control.

- Category-unrelated SAM masks enable more forms of editing and generation.

- BLIP2 text generation enables control without manual text guidance.

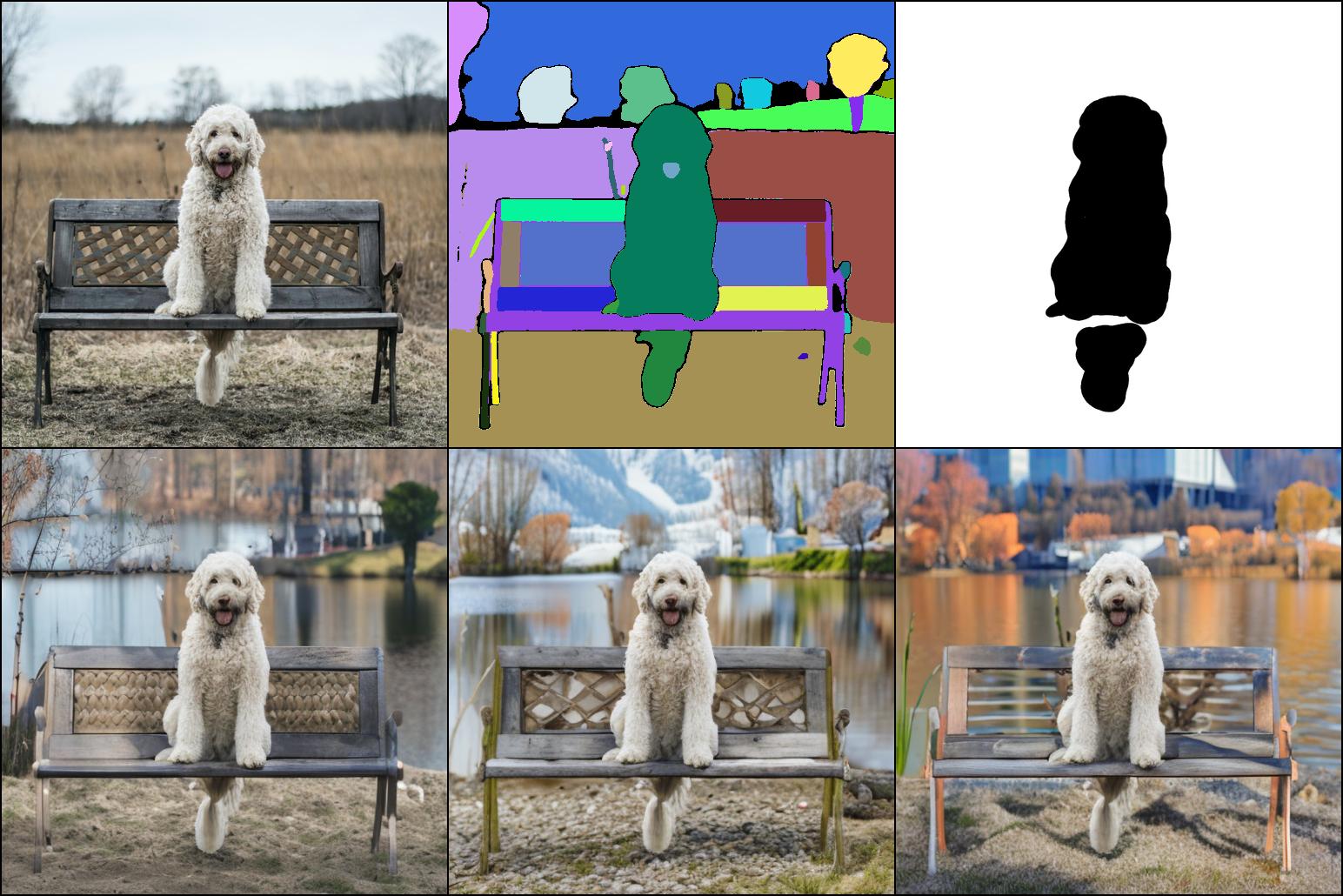

Human Prompt: "chairs by the lake, sunny day, spring"

An initial version of edit-anything. (We will add more controls on masks very soon.)

BLIP2 Prompt: "a large white and red ferry"

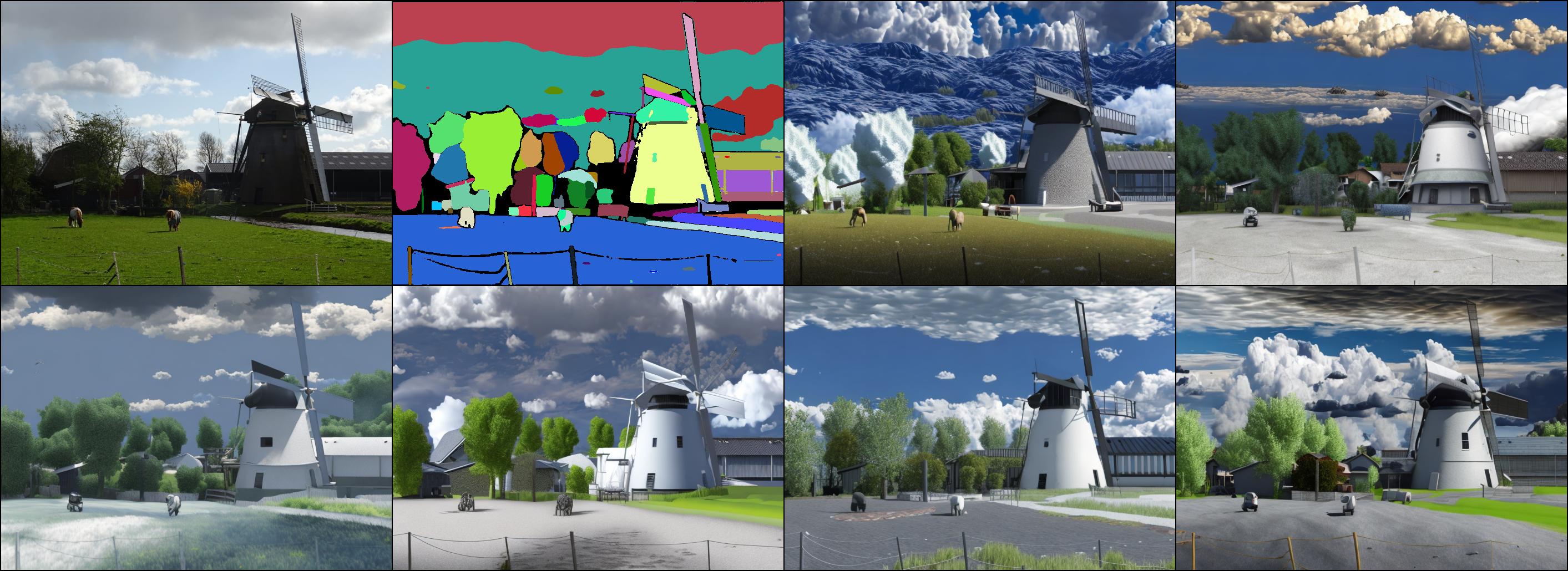

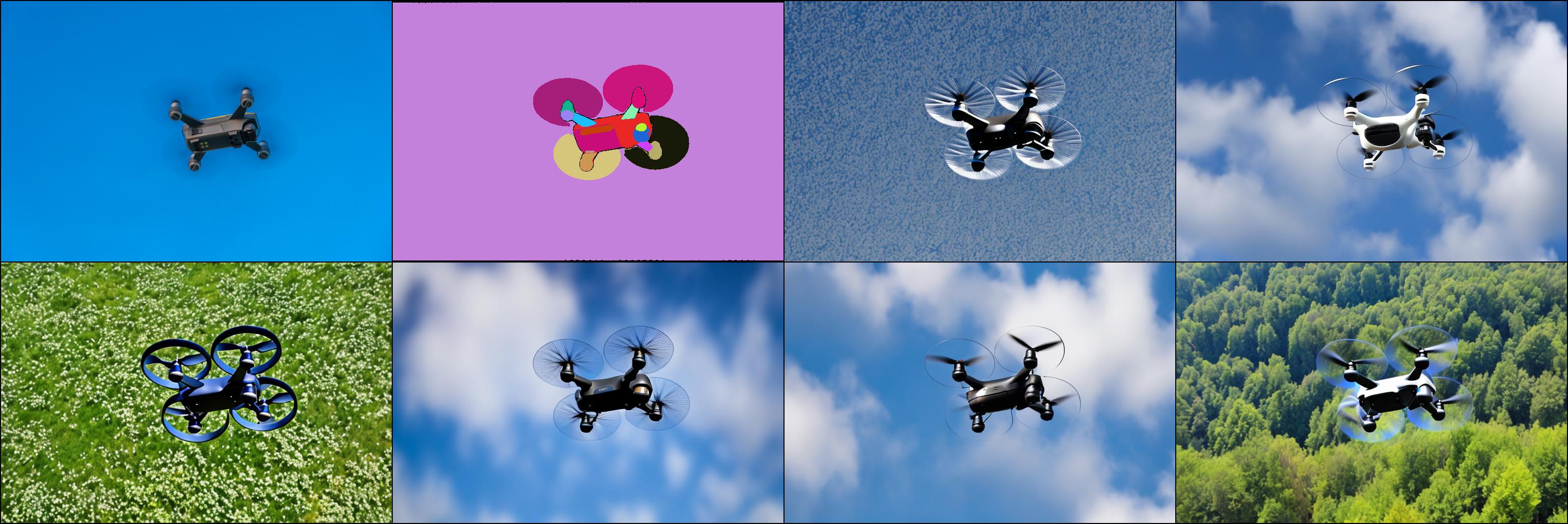

(1: input image; 2: segmentation mask; 3-8: generated images.)

BLIP2 Prompt: "a black drone flying in the blue sky"

- The human prompt and BLIP2 generated prompt build the text instruction.

- The SAM model segments the input image to generate a segmentation mask without category labels.

- The segmentation mask and text instruction guide the image generation.
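The first two steps above can be sketched in a few lines. This is a minimal illustration, not the code used in this repo: `build_text_instruction` and `masks_to_label_map` are hypothetical helper names, joining the two prompts with a comma is an assumption, and so is painting larger masks first. The only thing taken from SAM itself is the output layout of `SamAutomaticMaskGenerator.generate`, a list of dicts with a boolean `segmentation` array and an `area` value.

```python
import numpy as np


def build_text_instruction(human_prompt: str, blip2_prompt: str) -> str:
    """Join the human prompt and the BLIP2 caption into one instruction.

    Assumption: the two prompts are simply comma-joined; empty parts are dropped.
    """
    parts = [p.strip() for p in (human_prompt, blip2_prompt) if p and p.strip()]
    return ", ".join(parts)


def masks_to_label_map(masks, height: int, width: int) -> np.ndarray:
    """Merge SAM-style masks into one integer map without category labels.

    Each entry of `masks` is a dict with a boolean (height, width) array under
    "segmentation" and a pixel count under "area". Pixels covered by no mask
    stay 0. Larger masks are painted first so that smaller masks remain
    visible on top of them (an assumption made for this sketch).
    """
    label_map = np.zeros((height, width), dtype=np.int32)
    ordered = sorted(masks, key=lambda m: m["area"], reverse=True)
    for index, mask in enumerate(ordered, start=1):
        label_map[mask["segmentation"]] = index
    return label_map
```

The resulting map carries region ids only, with no class names attached. It would serve as the condition image for the ControlNet, and the joined string would serve as the text prompt.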

Note: Due to the privacy protection in the SAM dataset, faces in generated images are also blurred. We are training new models with unblurred images to solve this.

- Conditional Generation trained with 85k samples in SAM dataset.

- Training with more images from LAION and SAM.

- Interactive control on different masks for image editing.

- Using Grounding DINO for category-related auto editing.

- ChatGPT guided image editing.

Create an environment

conda env create -f environment.yaml

conda activate control

Install BLIP2 and SAM

Put these models in the models folder.

pip install git+https://github.com/huggingface/transformers.git

pip install git+https://github.com/facebookresearch/segment-anything.git

Download pretrained model

# Segment-anything ViT-H SAM model.

cd models/

wget https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth

# BLIP2 model will be auto downloaded.

# Get edit-anything-ckpt-v0-1.ckpt pretrained model from huggingface.

# No need to download this if you are using sam2image_diffuser.py!!!

https://huggingface.co/shgao/edit-anything-v0-1
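If you do need the checkpoint locally, the download can also be scripted. The sketch below uses only the Python standard library and the standard Hugging Face `resolve` URL pattern. It assumes that edit-anything-ckpt-v0-1.ckpt sits at the root of the repo on the `main` branch, which is not confirmed here, so check the model page if the request fails. `checkpoint_url` and `fetch_checkpoint` are hypothetical helper names.

```python
from pathlib import Path
from urllib.request import urlretrieve

REPO_ID = "shgao/edit-anything-v0-1"
CKPT_NAME = "edit-anything-ckpt-v0-1.ckpt"


def checkpoint_url(repo_id: str = REPO_ID,
                   filename: str = CKPT_NAME,
                   revision: str = "main") -> str:
    """Build a direct download URL for a file in a Hugging Face model repo.

    Assumption: the file lives at the repo root on the given revision.
    """
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def fetch_checkpoint(models_dir: str = "models") -> Path:
    """Download the checkpoint into models_dir unless it is already there."""
    target = Path(models_dir) / CKPT_NAME
    if not target.exists():
        target.parent.mkdir(parents=True, exist_ok=True)
        urlretrieve(checkpoint_url(), str(target))
    return target
```

Calling `fetch_checkpoint()` from the repo root would place the file in the models folder next to the SAM weights.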

Run Demo

python sam2image_diffuser.py

# or

python sam2image.py

# or

python sam2edit.py

Set 'use_gradio = True' in these files to run the gradio demo if you have a GUI.

- Generate training dataset with dataset_build.py.

- Transfer stable-diffusion model with tool_add_control_sd21.py.

- Train model with sam_train_sd21.py.

This project is based on:

Segment Anything, ControlNet, BLIP2, MDT, Stable Diffusion, Large-scale Unsupervised Semantic Segmentation

Thanks for these amazing projects!